Reno-based chip startup Positron has secured a $230 million Series B to accelerate production of its AI inference processors, positioning the three-year-old company as a fresh challenger to Nvidia’s dominant GPUs. The round, which includes backing from the Qatar Investment Authority, lifts Positron’s total raised to just over $300 million and underscores investor appetite for alternatives amid surging demand for cost- and energy-efficient AI compute.

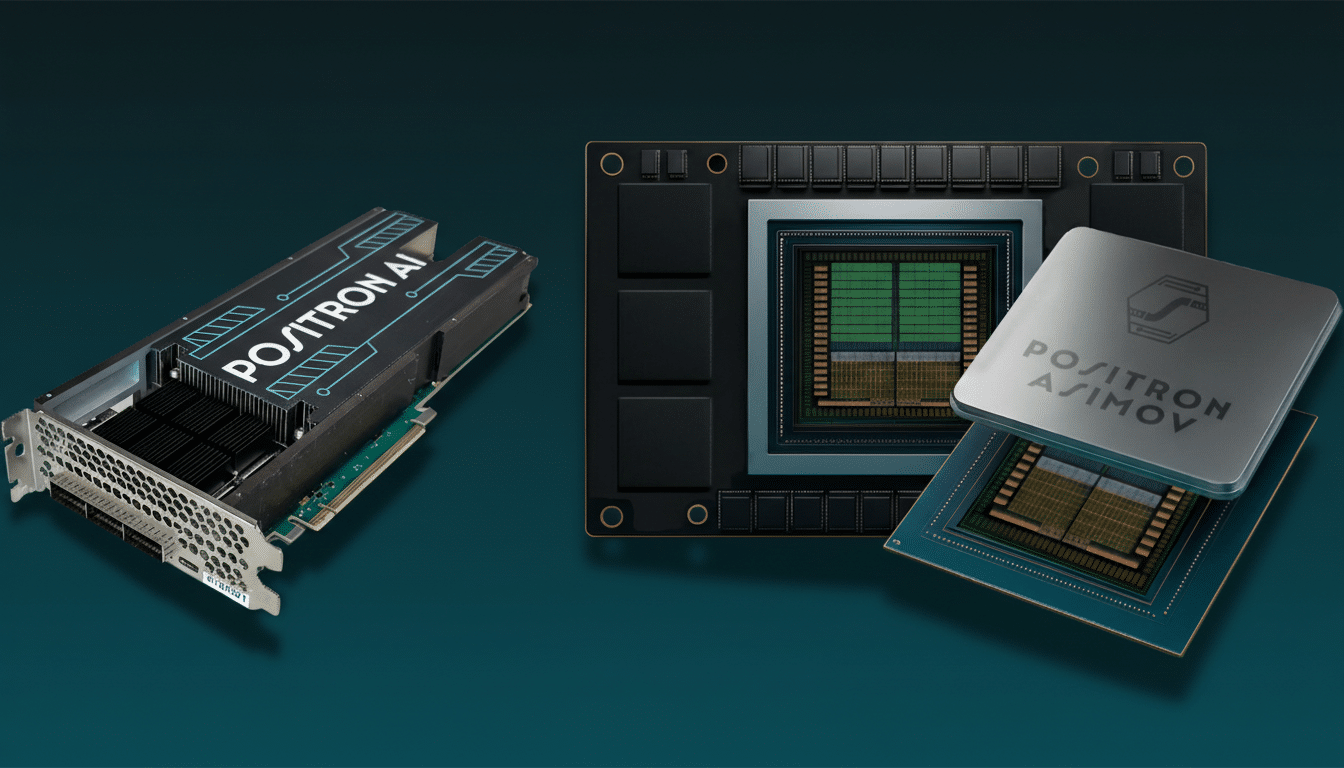

Positron says its first-generation Atlas chip, manufactured in Arizona, can deliver performance on par with Nvidia’s H100 while drawing under one-third of the power. The company is targeting inference rather than model training. That is a deliberate bet on where demand is heading as enterprises shift from building large models to deploying them in real-world products at scale.

Why This Round Matters for AI Inference Competition

Nvidia still commands well over 80% of the AI accelerator market by most analyst estimates, but supply constraints and total cost of ownership are pushing hyperscalers to diversify. Even top Nvidia customers like OpenAI have been exploring alternative compute to hedge risk and rein in operating costs. Investor-led initiatives, including a $20 billion AI infrastructure joint venture with Brookfield Asset Management, highlight how capital is flowing into non-GPU stacks that promise better efficiency per dollar and per watt.

For data center operators facing power caps and rising electricity rates, energy efficiency is no longer a nice-to-have. Inference now accounts for the bulk of AI cycles in production environments, and the economics are unforgiving: shaving latency and power draw directly impacts margins for search, recommendation, and conversational AI workloads used by hundreds of millions of end users.
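To make the power-cap constraint concrete, here is a minimal Python sketch that counts how many accelerators fit under a fixed facility power budget. Every input is a hypothetical assumption for illustration, not a Positron or Nvidia specification. These include the 1 MW cap, the PUE of 1.3 (total facility power divided by IT power), the 230 W card standing in for "under one-third" of 700 W, and the 150 W of per-card host overhead.

```python
import math

def cards_under_cap(facility_cap_kw: float, pue: float,
                    card_watts: float, host_overhead_watts: float) -> int:
    """Number of accelerators a power-capped facility can host.

    facility_cap_kw: total power the site may draw (hypothetical).
    pue: power usage effectiveness = total facility power / IT power.
    card_watts: accelerator board power.
    host_overhead_watts: assumed per-card share of CPU, memory, NIC, fans.
    """
    it_budget_watts = facility_cap_kw * 1000 / pue  # power left for IT gear
    per_card_watts = card_watts + host_overhead_watts
    return math.floor(it_budget_watts / per_card_watts)

# Hypothetical 1 MW cap, PUE 1.3, 150 W of host overhead per card.
for label, watts in [("700 W card", 700), ("230 W card", 230)]:
    print(label, cards_under_cap(1000, 1.3, watts, 150))
```

Because host overhead does not shrink with the accelerator, the fleet-level gain in this sketch (roughly 2.2x more cards) is smaller than the 3x chip-level power ratio.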

Why Positron Is Betting on Inference Over Training

Training giant foundation models grabs headlines, but inference is where the bills stack up. Latency targets are strict, which limits how much requests can be batched, and models are updated continually. Positron’s focus on inference lets it optimize silicon for batch-1 throughput, memory bandwidth and data locality, and low-precision arithmetic (think FP8 and INT4) without carrying the architectural overhead needed for frontier-scale training clusters.
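A back-of-envelope model shows why memory bandwidth and low precision matter at batch 1. Each generated token requires streaming roughly all model weights from memory once, so bandwidth divided by weight bytes gives an upper bound on tokens per second. The sketch below assumes a hypothetical 70-billion-parameter model and a hypothetical 3 TB/s of memory bandwidth. It ignores KV-cache traffic, compute limits, and multi-chip sharding.

```python
def max_decode_tokens_per_s(params: float, bits_per_weight: int,
                            bandwidth_bytes_per_s: float) -> float:
    """Upper bound on batch-1 decode speed for a memory-bound model.

    Assumes every weight is read once per generated token and that
    nothing else (KV cache, activations) consumes bandwidth.
    """
    weight_bytes = params * bits_per_weight / 8
    return bandwidth_bytes_per_s / weight_bytes

PARAMS = 70e9     # hypothetical 70B-parameter model
BANDWIDTH = 3e12  # hypothetical 3 TB/s of memory bandwidth

for name, bits in [("FP16", 16), ("FP8", 8), ("INT4", 4)]:
    rate = max_decode_tokens_per_s(PARAMS, bits, BANDWIDTH)
    print(f"{name}: <= {rate:.1f} tokens/s")
```

Halving the bits per weight doubles the ceiling, which is one reason inference-focused designs lean on FP8 and INT4. The same bound explains why batching helps when latency allows it: one pass over the weights can then serve several sequences at once.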

Several contenders already follow this specialization playbook. Groq has emphasized tokens-per-second for LLMs, AMD’s MI300X has gained traction for memory-heavy inference, and Intel’s Gaudi line has competed on price-performance. Positron is entering a hotly contested lane, but one where sustained innovation can quickly translate into better total cost of ownership (TCO) for cloud and enterprise buyers.

Performance Claims and the Power Efficiency Math

Nvidia’s highest-power H100 modules draw up to roughly 700 W per card in certain server configurations, so matching H100-class throughput at under one-third the power would be a meaningful advantage. Lower chip power cascades through the data center into less cooling, denser racks, and lower total facility load. Every watt saved at the chip also avoids the cooling and power-delivery overhead that power usage effectiveness (PUE) captures. For inference clusters that run near 24/7, even single-digit efficiency gains add up across a fleet. Savings at the scale Positron claims, if validated, could reshape procurement models.
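The power claim can be translated into annual energy with simple arithmetic. This sketch compares a card at the roughly 700 W cited above with a hypothetical card at 230 W (just under one-third), both running 24/7. The PUE of 1.3, the $0.10/kWh electricity price, and the 10,000-card fleet are illustrative assumptions. The model ignores idle periods and host power.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 h of continuous operation

def annual_energy_kwh(card_watts: float, pue: float) -> float:
    """Facility-level energy per card per year; PUE adds cooling/power overhead."""
    return card_watts * HOURS_PER_YEAR * pue / 1000

def annual_cost_usd(card_watts: float, pue: float, usd_per_kwh: float) -> float:
    """Electricity cost per card per year at a flat price."""
    return annual_energy_kwh(card_watts, pue) * usd_per_kwh

PUE = 1.3     # assumed facility PUE
PRICE = 0.10  # assumed electricity price, USD per kWh

for label, watts in [("700 W card", 700), ("230 W card", 230)]:
    kwh = annual_energy_kwh(watts, PUE)
    cost = annual_cost_usd(watts, PUE, PRICE)
    print(f"{label}: {kwh:,.0f} kWh/yr, ${cost:,.0f}/yr")

# Fleet view: per-card savings scale linearly with card count (hypothetical fleet).
fleet = 10_000
saved = fleet * (annual_cost_usd(700, PUE, PRICE) - annual_cost_usd(230, PUE, PRICE))
print(f"fleet savings: ${saved:,.0f}/yr")
```

Under these assumptions the fleet saves about $5.35 million a year. PUE enters as a multiplier, so chip-level savings carry through to cooling energy in proportion.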

The proof points will be standardized benchmarks and real customer deployments. Buyers will scrutinize MLPerf Inference submissions, public model-serving demos, and head-to-head trials on popular workloads such as Llama, Mixtral, vision transformers, and RAG pipelines. Without a mature software stack, however, raw silicon advantages rarely make it to production.
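Head-to-head trials usually report tail latency alongside throughput, because a chip that is fast on average but slow at the 99th percentile can still miss serving targets. The sketch below is a minimal, hypothetical harness and not MLPerf's official tooling. It times repeated calls to any `infer` callable and summarizes p50/p99 latency using a nearest-rank percentile. The sleeping lambda is a stand-in for a real model call.

```python
import math
import time
from typing import Callable, Sequence

def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def summarize(latencies_s: Sequence[float], tokens_per_request: int) -> dict:
    """Latency percentiles plus sequential (batch-1) token throughput."""
    total = sum(latencies_s)
    return {
        "p50_ms": percentile(latencies_s, 50) * 1000,
        "p99_ms": percentile(latencies_s, 99) * 1000,
        "tokens_per_s": tokens_per_request * len(latencies_s) / total,
    }

def run_trial(infer: Callable[[], None], requests: int,
              tokens_per_request: int) -> dict:
    """Time `requests` sequential calls to a hypothetical `infer` callable."""
    latencies = []
    for _ in range(requests):
        start = time.perf_counter()
        infer()
        latencies.append(time.perf_counter() - start)
    return summarize(latencies, tokens_per_request)

if __name__ == "__main__":
    # Dummy workload standing in for a real model-serving request.
    print(run_trial(lambda: time.sleep(0.001), requests=200, tokens_per_request=32))
```

A real comparison would also vary concurrency and input/output lengths. This harness only measures one request at a time.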

Investors and Manufacturing Signals Behind Positron

The presence of the Qatar Investment Authority reflects a broader sovereign trend of funding AI infrastructure that reduces dependency on a single supplier. Positron’s prior backers include Valor Equity Partners, Atreides Management, DFJ Growth, Flume Ventures, and Resilience Reserve—an investor roster familiar with capital-intensive, deep-tech scaling.

Manufacturing in Arizona is notable as the U.S. leans into the CHIPS and Science Act to rebuild domestic semiconductor capacity. While most startups rely on established foundries for wafers and advanced packaging, proximity to North American manufacturing can streamline logistics and help enterprise buyers meet compliance and resilience goals in their supply chains.

Why Software and Tooling Remain the Real Competitive Moat

Nvidia’s strongest defense remains its software ecosystem: CUDA, TensorRT, and a decade of optimized kernels that make deployment feel turnkey. For Positron, a production-grade toolchain is non-negotiable. That means ONNX and PyTorch 2.x backends, graph compilers, kernel libraries for attention and KV cache handling, and drop-in compatibility with popular inference servers such as vLLM and other text-generation serving frameworks.
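KV cache handling, one of the kernel areas listed above, works roughly like this. During autoregressive decoding, the key and value vectors for earlier tokens are stored once and reused. Each new token then computes attention against the cache instead of recomputing the whole prefix. The following pure-Python, single-head sketch illustrates the data flow only. Real kernels fuse these steps on-chip, the vectors are hypothetical, and nothing here reflects Positron's actual toolchain.

```python
import math
from typing import List

Vector = List[float]

class KVCache:
    """Single-head key/value cache for autoregressive decoding (illustrative)."""

    def __init__(self) -> None:
        self.keys: List[Vector] = []
        self.values: List[Vector] = []

    def append(self, key: Vector, value: Vector) -> None:
        # Each decode step adds exactly one key and one value.
        self.keys.append(key)
        self.values.append(value)

    def attend(self, query: Vector) -> Vector:
        """Scaled dot-product attention of one query over all cached tokens.

        Assumes at least one token has been cached.
        """
        scale = 1.0 / math.sqrt(len(query))
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in self.keys]
        peak = max(scores)  # subtract the max score for numerical stability
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        dim = len(self.values[0])
        return [sum(w * v[i] for w, v in zip(weights, self.values)) / total
                for i in range(dim)]

cache = KVCache()
# Hypothetical (query, key, value) vectors for two decode steps.
steps = [([1.0, 0.0], [1.0, 0.0], [1.0, 2.0]),
         ([0.0, 1.0], [0.0, 1.0], [3.0, 4.0])]
for query, key, value in steps:
    cache.append(key, value)    # store this token's K/V once
    print(cache.attend(query))  # attend over every cached token
```

The cache trades memory for compute, so its footprint grows with context length and with the number of concurrent sequences.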

Enterprises will also expect observability, quantization toolkits, and managed firmware updates, plus vendor support SLAs that match cloud-provider standards. The companies that translate developer friendliness into consistent latency under load, while staying ahead on tokens per dollar and energy per token, will win repeat purchases.
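The two efficiency metrics in the paragraph above reduce to short formulas. Tokens per dollar is throughput divided by the hourly cost of the hardware. Energy per token is power divided by throughput: a watt is one joule per second, so watts divided by tokens per second gives joules per token. The throughput, power, and hourly-price inputs below are hypothetical placeholders, not measured results for any chip.

```python
from dataclasses import dataclass

@dataclass
class ServingProfile:
    tokens_per_s: float  # sustained throughput under load (hypothetical)
    watts: float         # average board power while serving (hypothetical)
    usd_per_hour: float  # all-in hourly cost of the accelerator (hypothetical)

    def joules_per_token(self) -> float:
        # W = J/s, so (J/s) / (tokens/s) = J/token.
        return self.watts / self.tokens_per_s

    def tokens_per_dollar(self) -> float:
        # Tokens produced in one hour divided by the cost of that hour.
        return self.tokens_per_s * 3600 / self.usd_per_hour

a = ServingProfile(tokens_per_s=2000, watts=700, usd_per_hour=2.50)
b = ServingProfile(tokens_per_s=2000, watts=230, usd_per_hour=2.00)
for name, p in [("A", a), ("B", b)]:
    print(name, f"{p.joules_per_token():.3f} J/token",
          f"{p.tokens_per_dollar():,.0f} tokens/$")
```

Both metrics should be measured at the same latency target. Throughput bought by relaxing latency is not a like-for-like comparison.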

The Competitive Landscape in AI Inference Accelerators

AMD has landed marquee cloud wins with MI300X for memory-bound LLM inference, and Intel’s Gaudi accelerators have competed aggressively on cost. Startup peers from Cerebras to d-Matrix and Tenstorrent are pursuing diverse architectures and software stacks to chip away at Nvidia’s incumbency. Analysts from Omdia and Gartner have noted broader buyer willingness to pilot multiple accelerators as supply loosens and toolchains mature.
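Memory-bound LLM inference is largely a capacity question: the weights plus the KV cache for every in-flight sequence must fit in accelerator memory. A common sizing formula is KV bytes = 2 (keys and values) × layers × KV heads × head dimension × bytes per element × tokens in flight. The configuration below is a hypothetical 70B-class model with grouped-query attention. The 192 GB of device memory, the batch size, and the context length are assumptions chosen for illustration.

```python
def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   bytes_per_elem: int, tokens: int) -> int:
    """KV-cache size: one key and one value vector per layer, KV head, and token."""
    return 2 * layers * kv_heads * head_dim * bytes_per_elem * tokens

GIB = 1024 ** 3

# Hypothetical 70B-class config with grouped-query attention (8 KV heads).
LAYERS, KV_HEADS, HEAD_DIM = 80, 8, 128
PARAMS = 70e9
WEIGHT_BYTES = PARAMS * 1  # FP8 weights: 1 byte per parameter
DEVICE_BYTES = 192e9       # assumed device memory

batch, context = 32, 8192  # assumed in-flight sequences and context length
kv = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, 2, batch * context)  # FP16 cache
print(f"KV cache: {kv / GIB:.1f} GiB, weights: {WEIGHT_BYTES / GIB:.1f} GiB")
print("fits" if WEIGHT_BYTES + kv <= DEVICE_BYTES else "does not fit")
```

Doubling the context to 16K tokens doubles the cache to 160 GiB, which no longer fits alongside the weights in this example. That trade-off is why memory capacity drives many inference buying decisions.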

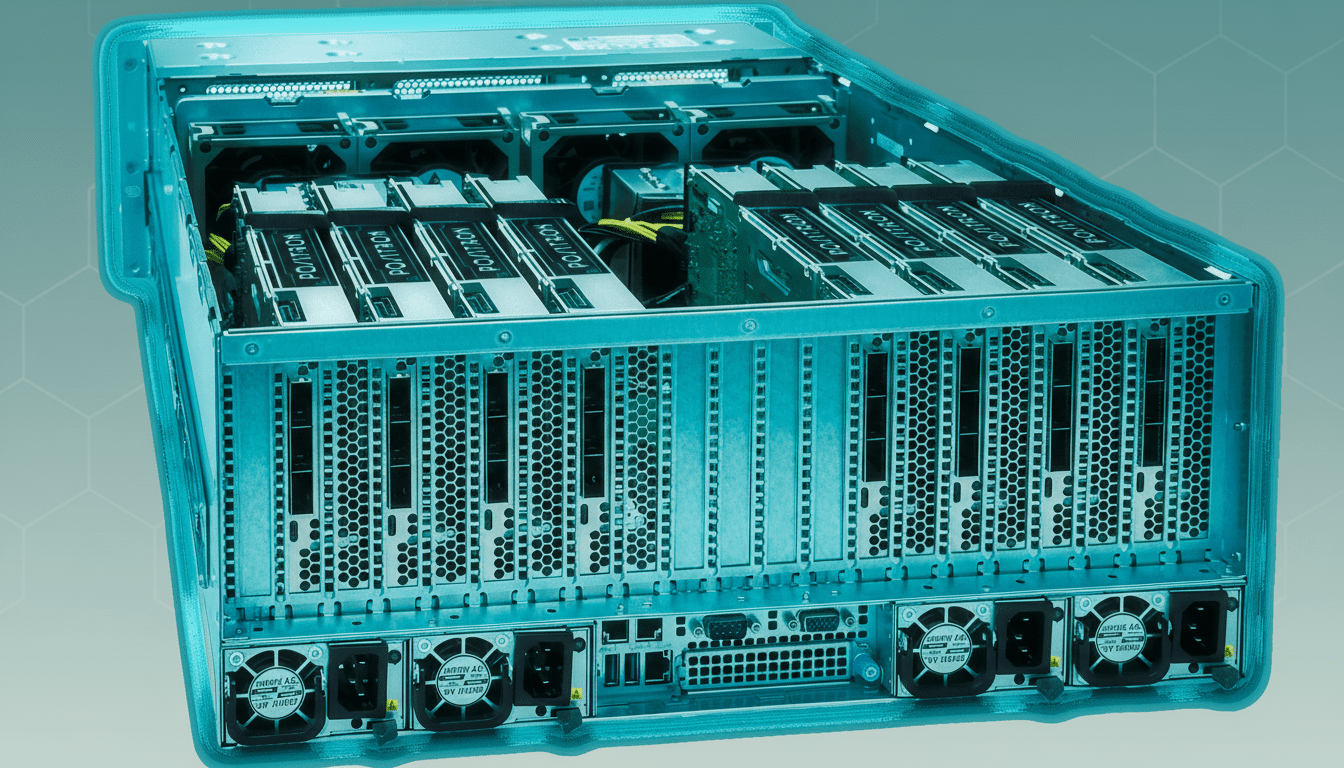

In this environment, Positron’s pitch is crisp: H100-class results with dramatically lower power draw, tailored for inference-first fleets. If Atlas can deliver across common production models and integrate cleanly into existing MLOps pipelines, it will find an audience among cloud platforms, colocation providers, and enterprises battling GPU shortages and energy ceilings.

What to Watch Next as Positron Scales AI Inference

Key milestones include public benchmarks, developer kits, and early-access programs with reference customers in search, advertising, and enterprise software. Supply-chain clarity—wafer starts, packaging capacity, and board availability—will determine how quickly Positron can scale deliveries. Watch also for partnerships with data center operators, where power efficiency can turn into preferential rack space and financing.
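Deliveries are gated by the slowest stage in the supply chain described above. One way to sketch this is to convert each stage's capacity into finished-accelerator units per quarter and take the minimum. All capacities, the dies-per-wafer count, and the yield below are hypothetical.

```python
from typing import Tuple

def deliverable_units(wafer_starts: int, dies_per_wafer: int, yield_rate: float,
                      packaging_capacity: int, board_capacity: int) -> Tuple[int, str]:
    """Return (units, bottleneck stage) for one hypothetical quarter."""
    stages = {
        "wafers": round(wafer_starts * dies_per_wafer * yield_rate),  # good dies
        "packaging": packaging_capacity,
        "boards": board_capacity,
    }
    bottleneck = min(stages, key=stages.get)
    return stages[bottleneck], bottleneck

print(deliverable_units(wafer_starts=500, dies_per_wafer=60, yield_rate=0.7,
                        packaging_capacity=25_000, board_capacity=18_000))
```

Adding capacity anywhere other than the bottleneck stage does not raise deliveries, which is why clarity across all three stages matters.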

Nvidia’s lead remains formidable, but the market is no longer winner-take-all. With $230 million in fresh capital and a tight focus on inference economics, Positron now has the runway to prove that a lean, power-efficient ASIC can carve out meaningful share in the next phase of AI deployment.