YouTube is rolling out its most ambitious update yet to automatic dubbing: it is opening access to all creators, expanding support to 27 languages, and debuting a more natural-sounding Expressive Speech mode. The platform is also piloting automatic lip sync for translated tracks, a step that could make dubbed videos look as convincing as they sound.

Auto Dub Widens to All Creators Across YouTube

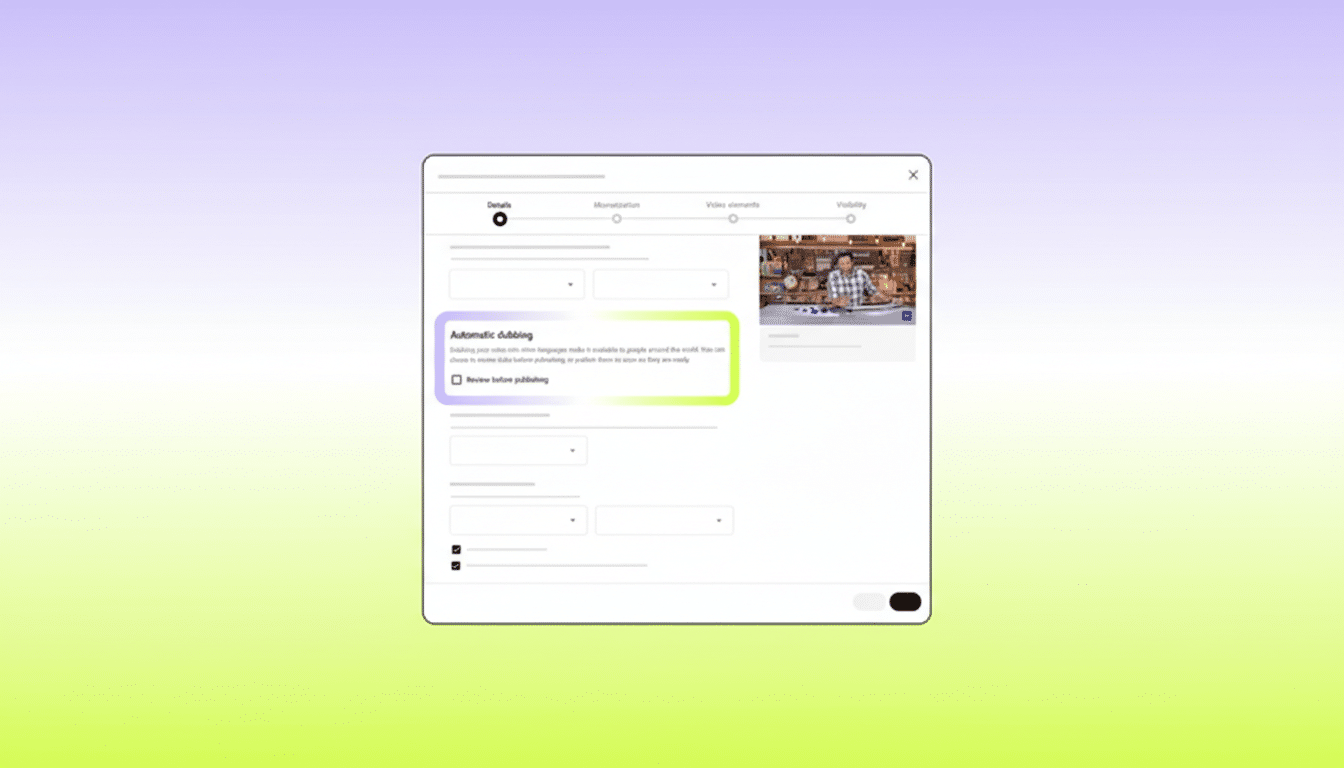

Auto dubbing began as a limited experiment for select members of the YouTube Partner Program with a modest set of nine languages. With this expansion, the tool is now generally available, letting any creator generate alternate-language tracks directly within YouTube’s workflow. For channels that rely on global reach, this removes a significant bottleneck—no third-party vendors, no separate uploads, and no juggling playlists for different audiences.

The language roster has also grown sharply, from nine to 27. That widens coverage across regions where creators often see demand but have lacked the resources to localize. This is particularly meaningful on a platform with over 2 billion logged-in monthly users and more than a billion hours watched daily, according to company disclosures. Even small channels can now test international demand without upfront localization costs.

Expressive Speech Sounds Less Robotic in Dubs

The headline upgrade is Expressive Speech, which aims to carry over the speaker’s rhythm, intonation, and emphasis so translations feel less flat. Instead of a monotone render, the dub mirrors how the creator talks—pauses, punchlines, and all—making educational explainers, comedy, and storytelling more engaging in other languages.

At launch, Expressive Speech is available for English, French, German, Hindi, Indonesian, Italian, Portuguese, and Spanish. That initial set spans high-demand markets and key growth regions. Under the hood, the feature builds on Google's research into prosody modeling, including work that grew out of the Aloud project, to better map timing and emphasis from one language to another.

Consider a fitness coach in Brazil publishing a high-energy routine. With Expressive Speech, the Italian or Hindi dub won’t just translate instructions; it can better preserve the motivational cadence that keeps viewers moving. For creators who rely on tone—teachers, reviewers, and vloggers alike—this is where AI dubbing starts to feel credible.

Lip Sync Pilot Targets Visual Believability

Audio quality is only half the battle; mismatched mouth movements can break the spell. YouTube is now testing automatic lip sync for translations, aligning visible speech with the dubbed track. While still in pilot and not broadly available, the capability suggests YouTube is tackling the “uncanny” gap that plagues many AI dubs.

If the pilot delivers consistent alignment, especially across challenging phonemes, it could lift completion rates for dubbed content. Video platforms and research labs have pursued similar “viseme” mapping (matching visible mouth shapes to speech sounds) for years, but reliable alignment at YouTube's scale would be a milestone.

Control, Safety, and Best Practices for Creators

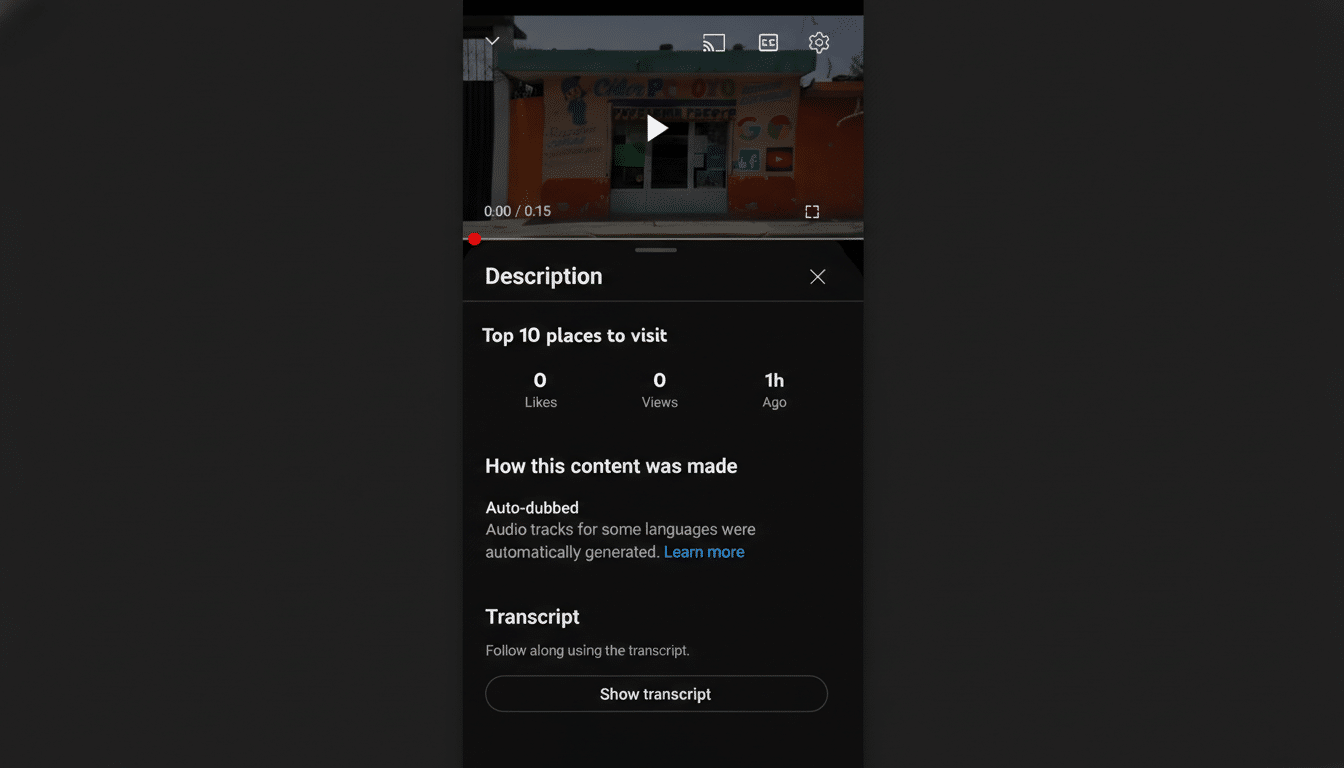

YouTube notes that creators remain in control. Channels can opt out of auto dubbing entirely, review and replace any AI-generated track, or upload their own professionally produced audio. Viewers can always switch back to the original language via audio settings, which matters for bilingual audiences and for content where nuance is critical.

For accuracy-sensitive topics—health, finance, or legal content—creators should still audit translations, especially idioms and terminology that don’t travel well. A quick human review before publishing the dubbed track can catch mistranslations and preserve brand tone. Maintaining consistent on-screen text and graphics across languages also reduces cognitive friction for viewers.

Why This Matters Now for Global Creators

The creator economy increasingly crosses borders: global fandoms rally around gaming, music, tutorials, and product reviews regardless of origin. We’ve seen large channels invest heavily in manual dubbing—think of creators who run separate Spanish or Japanese channels to serve local audiences. Native tools that lower the cost of multilingual distribution could democratize that playbook for mid-size and emerging creators.

Competing platforms are moving in parallel. Podcast platforms have tested AI voice translation for long-form audio, and major AI labs have showcased end-to-end speech translation models that capture tone and timing. By embedding dubbing, prosody, and soon lip sync directly in the upload pipeline, YouTube reduces friction where creators already work.

What to Watch Next as YouTube Rolls Out Features

Key questions remain: How quickly will Expressive Speech expand beyond the initial eight languages? Will lip sync be robust across accents and fast cuts? And how will creators balance speed versus oversight as they publish to dozens of markets at once?

For now, the direction is clear. With broader access, wider language support, more natural delivery, and a credible path to visual alignment, YouTube’s auto dub is maturing from a niche experiment into a practical growth lever for global creators.