Waymo is testing a Gemini-based in-car assistant for its driverless ride-hailing service, according to code from the app discovered by independent researcher Jane Manchun Wong. The unreleased “Waymo Ride Assistant” uses Google’s multimodal Gemini architecture to answer rider questions, adjust select cabin settings, and provide reassurance during trips, giving an early look at how generative AI might shape the passenger experience inside self-driving vehicles.

How Waymo’s in-car assistant for riders works

Behind the scenes is a 1,200+ line system prompt laying out everything from tone to task boundaries, according to Wong. The assistant starts by welcoming riders by name, keeps responses to one to three sentences, and can retrieve limited context — like how many Waymo trips a rider has taken — to make an interaction feel personalized without inundating them.

Operationally, the assistant can control cabin temperature, lighting, and music, and little beyond that, leaving out anything that could interfere with driving performance or the vehicle’s ability to respond safely. Notably absent are controls for the route, seat position, windows, and even volume. If a passenger requests something beyond its scope, the bot is instructed to say, “That’s not something I can do yet,” making its limits clear rather than guessing or making promises.
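The scoping described above can be pictured as a simple allowlist check. The sketch below is purely illustrative: the function name, the action labels, and the confirmation wording are assumptions made for this example, not anything taken from Waymo's app, and the real assistant presumably enforces these limits through its system prompt rather than hard-coded logic. Only the three supported categories and the refusal phrase come from the reporting.

```python
# Hypothetical sketch; names and confirmation text are assumptions.
# Supported categories, as reported: temperature, lighting, music.
SUPPORTED_ACTIONS = {"temperature", "lighting", "music"}

# Refusal wording quoted in the reporting.
REFUSAL = "That's not something I can do yet."


def handle_action(action: str) -> str:
    """Confirm an in-scope cabin action, or return the fixed refusal."""
    normalized = action.strip().lower()
    if normalized in SUPPORTED_ACTIONS:
        return f"Okay, adjusting {normalized}."
    # Anything else (route, seat, windows, volume) gets the same
    # explicit refusal instead of a guess or a promise.
    return REFUSAL


if __name__ == "__main__":
    for request in ("music", "windows", "volume"):
        print(request, "->", handle_action(request))
```

The point of the design is that every out-of-scope request maps to one predictable response, so the rider never has to wonder whether a command was silently ignored.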

It can also answer trivia and general-knowledge questions, such as the local weather or who won the last World Series, but it can’t perform real-world actions such as ordering food or making reservations. Emergencies are also out of scope: the assistant is not a dispatcher and won’t behave like one.

Safety guardrails and an identity split

One design principle is that the assistant should never be confused with the self-driving system itself. When riders ask, “How do you see the road?” it’s told to refer to the “Waymo Driver” and explain that the vehicle’s perception comes from the Driver’s sensors and software, not the chatbot. That distinction matters for liability, clarity, and rider trust.

The prompt also tells the assistant not to comment in real time on maneuvers or incidents. When a rider asks about a video of a crash, or why the car just braked, the bot deflects rather than speculating. That removes the risk of a conversational model inventing explanations on the fly for safety-critical behavior, a known failure mode when LLMs are pushed beyond their training.
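One way to picture these two guardrails is as a routing step that runs before any answer is generated. The sketch below is a rough illustration under stated assumptions: the `Route` enum, the keyword lists, and the `route_question` function are all invented for this example, and keyword matching is a stand-in for what is, per the reporting, behavior specified in the system prompt.

```python
from enum import Enum


class Route(Enum):
    """Hypothetical handling categories for a rider question."""
    REFER_TO_DRIVER = "refer_to_driver"  # credit perception to the Waymo Driver
    DEFLECT = "deflect"                  # no commentary on maneuvers or incidents
    ANSWER = "answer"                    # ordinary question, answer briefly


# Assumed keyword lists, chosen only to make the example concrete.
INCIDENT_TERMS = ("crash", "brake", "swerve", "accident")
PERCEPTION_TERMS = ("see the road", "sensor", "lidar", "camera")


def route_question(question: str) -> Route:
    """Classify a question before any response is generated."""
    text = question.lower()
    # Incident questions are checked first so they are never explained away.
    if any(term in text for term in INCIDENT_TERMS):
        return Route.DEFLECT
    if any(term in text for term in PERCEPTION_TERMS):
        return Route.REFER_TO_DRIVER
    return Route.ANSWER


if __name__ == "__main__":
    print(route_question("How do you see the road?"))
    print(route_question("Why did the car just brake?"))
```

Checking incident terms first reflects the priority the article describes: a question about why the car braked should be deflected even if it also mentions sensors.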

The tone guidelines call for calm, clear, concise phrasing, free of jargon. A close command ends the interaction so the assistant does not become a noisy nuisance, an essential feature for riders who want quiet, since silence is often the best UX.

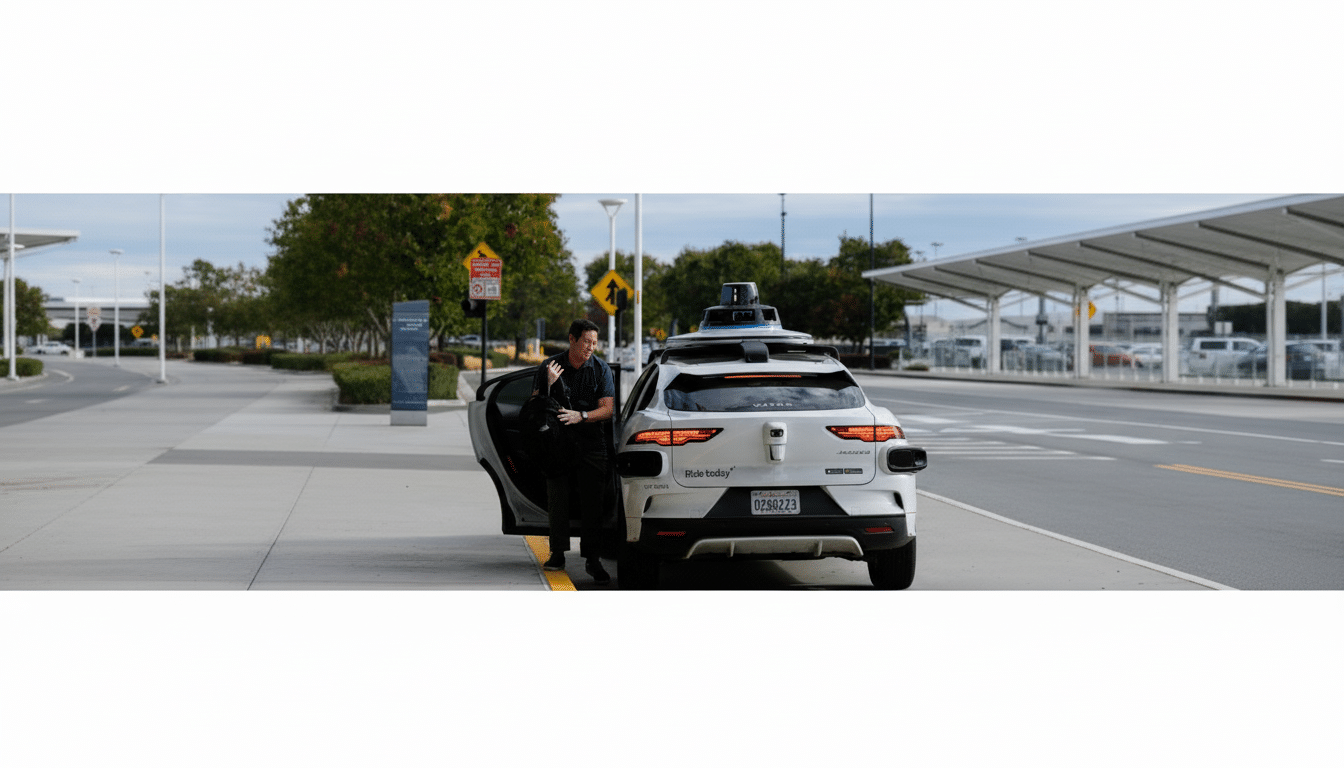

Why Waymo wants an AI assistant inside its robotaxis

Installing a ride-focused helper is a practical way to reduce ambiguity inside an autonomous car. It’s the simple things — “How much longer until we get there?” “Can it be a bit cooler?” — that are a good fit for a narrowly focused AI that’s always on and context-aware. It also helps Waymo scale rider support without piping every question to a human agent.

Waymo’s use of Gemini is not new. The company says it draws upon ideas from Gemini to train vehicles for rare and complex situations, supplementing the billions of miles of simulation and tens of millions of miles on public roads that it logs, according to its safety reports. Stretching Gemini into the cabin moves those smarts from behind-the-scenes model training to explicit, rider-facing use.

There’s a privacy dimension, too. Personalization cues such as a user’s first name and trip history can make interactions friendlier, but they also raise questions about data minimization and consent flows. Observers will be watching how much context the assistant gets by default and how those preferences are managed within the app.

Competitive context and what to watch as trials expand

Waymo is not the only company looking into in-car AI. Tesla has also been working on integrations with xAI’s Grok, which it describes as a more conversational counterpart. Waymo’s approach appears more focused and utilitarian — tightly scoped, safety-conscious, explicitly separated from the driving stack — reflecting different philosophies of what an “AI co-pilot” ought to be in a robotaxi as opposed to a privately owned car.

Key signals to follow next:

- Whether Waymo grows the command surface of its assistant beyond climate, lighting, and music

- How it telegraphs limits around safety questions

- What transparency is offered about data retention for in-cabin conversation

- Where it first rolls out — places like Phoenix or San Francisco offer a cross-section of rider profiles and street conditions to put the design through its paces

For now, the assistant is in a testing phase and hidden from public builds. But the blueprint Wong uncovered hints at a conscientious, rider-centric take on generative AI in autonomous vehicles, one that seeks to make robotaxis feel more legible and more human while steering clear of speculation or control that belongs with the Waymo Driver.