Meta has hired Alan Dye, the veteran leader of Apple’s Human Interface design team, an appointment that cements the company’s effort to make AI-powered consumer devices feel less like experiments and more like indispensable parts of daily life. Dye will report to Andrew Bosworth, Meta’s chief technology officer, and will be responsible for product user experience on Ray-Ban Meta smart glasses and Quest headsets, according to a report by Bloomberg’s Mark Gurman.

It’s a high-profile win for Meta at an important time for consumer AI. The company has been racing to make ambient multimodal assistants the kind of utility people use every day, and design is sometimes the difference between a novelty and a habit. Dye’s mission is clear: translate Meta’s AI research into interfaces people actually want to live with.

Why Dye Is Key to Meta’s Device Ambitions

Dye’s history at Apple encompasses the iOS and macOS design systems, the interaction model for Apple Watch, and the company’s San Francisco typeface. He helped codify design principles that made complex software feel approachable: clean hierarchy, motion as meaning, typography as a functional tool. Those skills transfer directly to wearables and mixed reality, where UI space is at a premium and cognitive load must stay as low as possible.

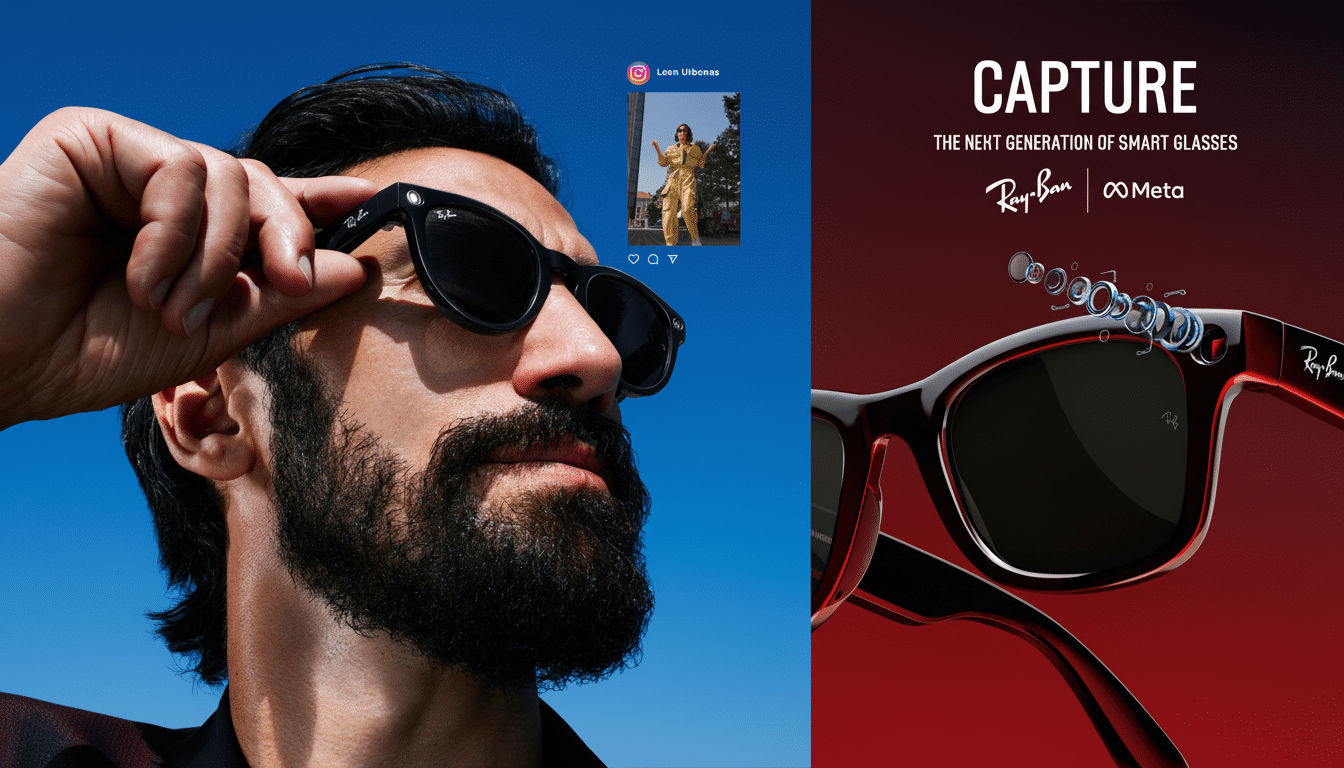

That’s the kind of discipline Meta’s device lineup requires. Quest’s interface has become more capable, but also more cluttered as the OS takes in productivity, fitness, and social features. Ray-Ban Meta smart glasses are also emerging as a stage for hands-free AI, combining a camera, audio, and an assistant that works by “seeing” what you see. The potential is enormous, but the room for friction is microscopic. Dye’s arrival also signals a growing effort to consolidate these experiences into a single, cohesive design language.

A Timely Bet on AI-First Interfaces and Wearables

Consumer AI is shifting from chatbots to context-aware assistants that hear what you hear, see what you see, and help before they are asked. That takes interface patterns that have yet to exist at scale. Apple’s push on input fidelity was to normalize eye and hand input with Vision Pro; Meta is eyeing voice-first and wrist-based neural interfaces through its CTRL-labs purchase. The next frontier is “invisible UI”: intelligent prompts, gentle confirmations, and low-latency feedback that feel normal in public and on the go.

Market dynamics add urgency. According to IDC, AR/VR shipments contracted slightly in 2023 but have returned to an upswing with fresh headsets and are expected to see multi-year expansion as prices drop and app ecosystems mature. Quest has been the leader in unit share, but retention also hinges on seamless onboarding and daily utility. A head of design who can convert first-time use into repeat behavior isn’t a luxury; that person is a growth lever.

Dye’s influence could also spread into Meta’s software, not just its hardware. WhatsApp and Messenger already take an app-like approach; solving for common use cases across Meta’s operating environments would reduce fragmentation and lower the barriers to feature adoption.

Apple’s Succession and Design Continuity

Apple will promote Steve Lemay, a longtime designer who has been working on Apple interfaces since 1999, to head the Human Interface team, according to Bloomberg. The move signals continuity, not a reset. Since Jony Ive left, Apple’s design leadership has been spread more evenly, with industrial design reporting into operations and software design housed in HI. And while Meta is trying to pack years of design evolution into quarters, expect Apple’s design cadence to stay rock-steady.

Talent Wars and the Price of Competition

Meta’s hire is part of a larger push to accelerate recruiting and bulk up its AI and device bench. The company has poached researchers from elite labs, including OpenAI alumni, in a contest that boils down to compensation, access to compute power, and product visibility. According to reports from The Information and Bloomberg, the pitches for talent are becoming increasingly personal as the new frontier of consumer AI moves toward tangible products.

The financial stakes are substantial. Meta said in SEC filings that Reality Labs made an operating loss of $16.1 billion in 2023 and warned of bigger losses as it invested more heavily. Management contends that the spend will be worth it, with wearables and mixed reality serving as the next computing platform. Converting that investment into habit-forming experiences is where design leadership can make a meaningful difference in results.

What to Watch Next as Meta Refines Device Design

Short term, keep an eye out for interface improvements throughout Quest OS — streamlined navigation, faster system gestures, and improved hand tracking — as well as more proactive multimodal experiences on Ray-Ban Meta glasses.

In the medium term, expect a cohesive design system across apps, headsets, and glasses, with privacy-forward signals that clearly indicate when sensors or AI are in use.

If Meta ships a game-changer in “ambient AI” that feels natural on the face or wrist, you’ll see Dye’s fingerprints all over it. If Apple can keep its luster while building more generative depth into iOS and visionOS under Lemay, the gap may narrow on capability and widen on taste. Either way, it’s a momentous talent move that will help determine how billions of people perceive AI in the wild.