If you felt weighed down by your AI bills last year — perhaps because of the cost of cloud services or buying software from a big tech company like Microsoft, Amazon, or Google — well, get ready for them to feel even heavier.

As the cost of memory chips keeps rising, and as other models that are slimmer and more socially gabby than last year’s generation (those conversations take a toll) come to market, an increasingly expensive synthesis of computationally organized activity and licensing fees for new content — the economics of operating lifelike AI — begins to pull you in all directions: more details, less power, worse prices in 2026. The upside: With a few disciplined habits, you can keep a lid on spend without having to give up the tech that is, by now, central to both work and life.

Why AI services will be more expensive this year

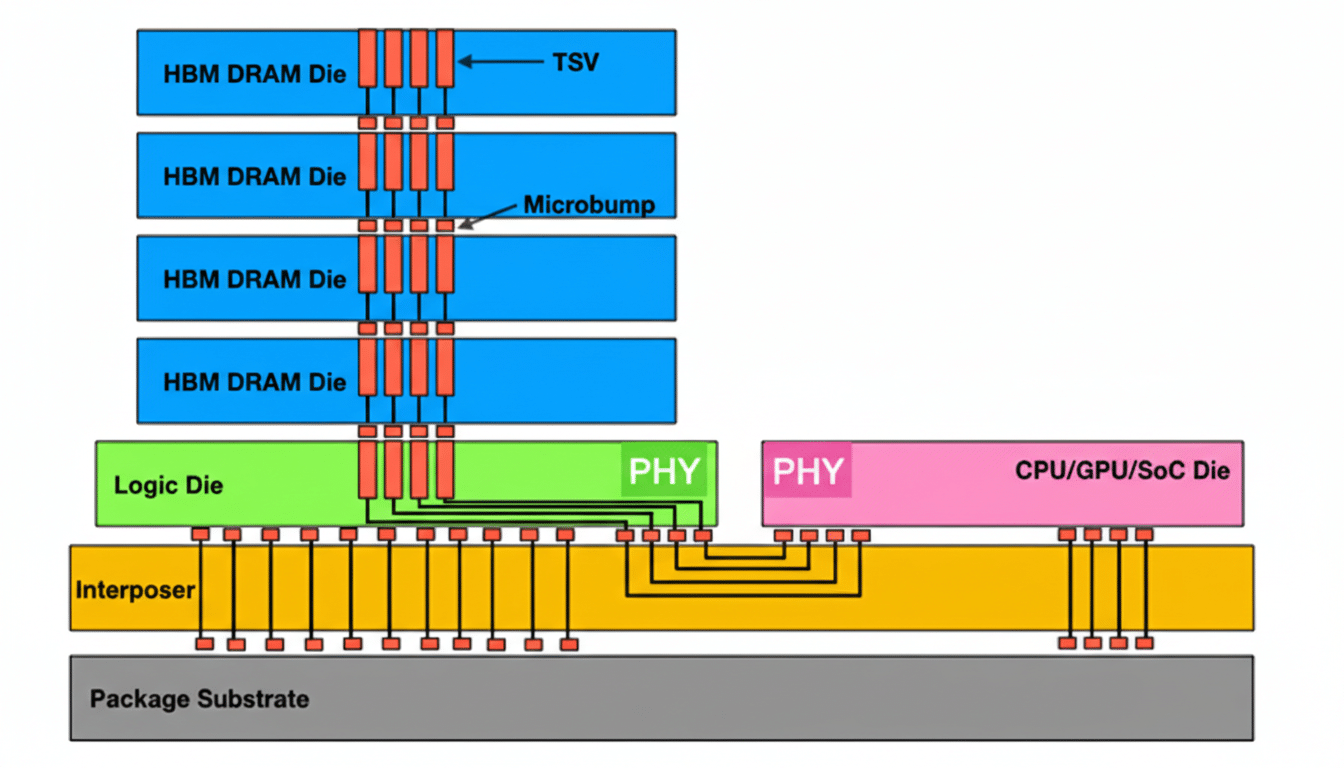

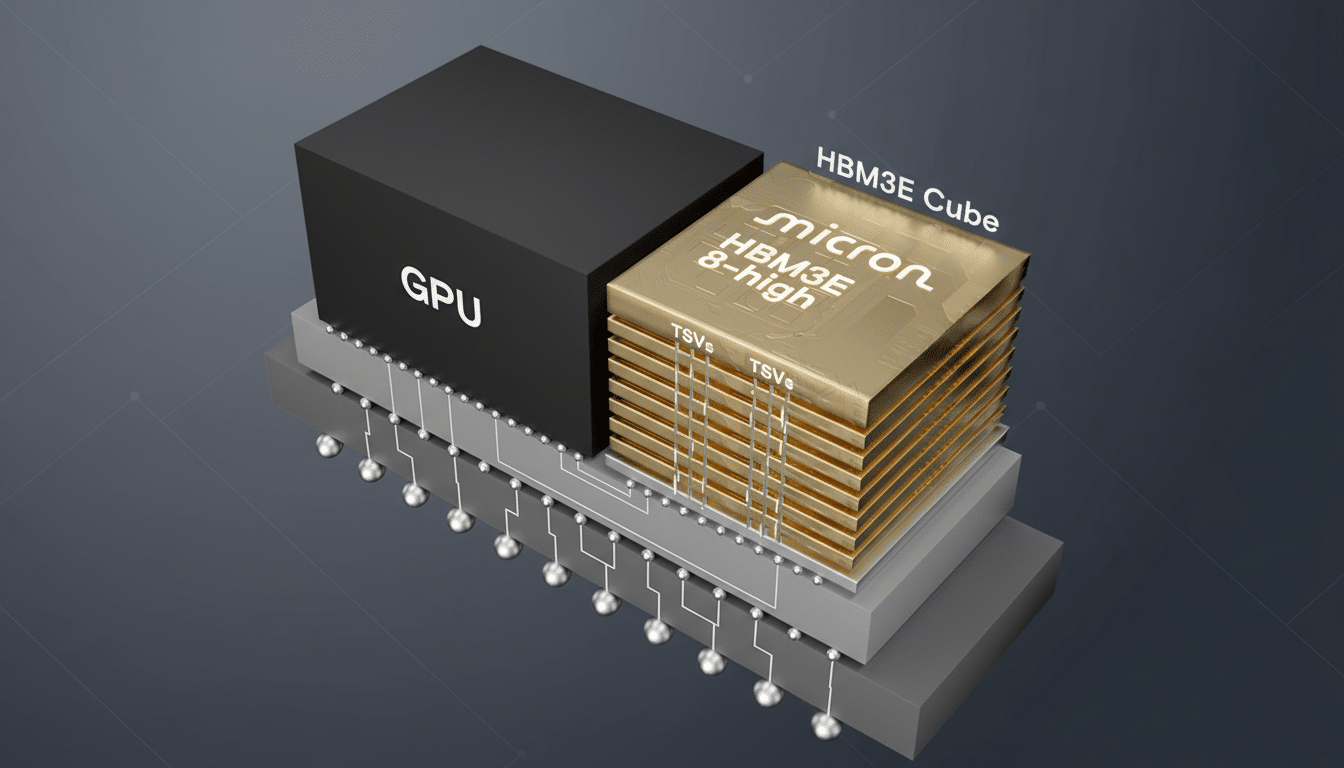

In the background of every chatbot response is a vast pile of compute, memory, storage, and power. And the service that now literally generates costs for us and losses collectively exceeds my worst nightmare. Right now, it’s all about memory that hurts. Large language model inference needs large amounts of high-bandwidth memory (HBM) and DRAM, as its models must cache massive “key-value” tensors for each token in context. As context windows expand and models reason over more steps, memory requirements explode.

Watchers like TrendForce have, over the past couple of weeks and months, noted that HBM has remained tight — with contract prices rising — and Micron and other suppliers have repeatedly told investors that demand still exceeds supply.

That pressure continues straight through to the cloud: more memory per GPU, more GPUs per workload, and more cost per prompt.

Storage isn’t helping either. NAND flash for datasets and vector indexes has risen off the floor thanks to massive AI data pipeline builds. Factor in power and cooling restrictions (as reported by Uptime Institute, among others), and operators are spending more to keep racks singing.

Then there’s content. After early lawsuits, a model for licensing is starting to emerge. OpenAI’s deals with The Associated Press and Axel Springer, Reddit’s data licensing agreements, and the like indicate that training and generation on premium content will create a continuing obligation to pay royalties. Those prices eventually translate into subscription tiers, API fees, or enterprise contracts.

Lastly, the usage pattern is “token hungry.” Stanford’s AI Index has also charted exponential growth in model size and ability; what this actually means is larger prompts, more complex tool use, and increasingly long-winded chain-of-thought-style output. Agentic systems exacerbate the effect by looping between tools and feeding full histories back through the model. The more turns, the more tokens, and tokens are the meter.

How impending AI price hikes are likely to hit you

For consumers, that is expected to lead to more expensive premium tiers and more severe caps on heavy features like long-context file uploads (for example, booru services — a type of community platform for sharing and discussing erotic images), multimodal generation (like combining text with an image), and high-resolution video. In the business world, this means you can expect to see API per-token rates nudge up, more restrictive quotas on free tiers, and potentially “burst” charges for high-concurrency workloads.

And even where vendors advertise faster throughput on the new accelerators, fast does not necessarily mean cheap. It’s like filling a tank with gas: if you get on-model meters that process more tokens per second and you send the same or more words, then the meter is just going to run faster. The bottleneck today is in memory capacity and not just compute cycles.

And don’t overlook amortization. Hyperscalers have warned their investors to brace for higher AI capex for data centers and the chips. All those multiyear investments will be amortized through pricing, packaging, and upsells — much the same way the cloud industry did in its initial wave of growth.

Three practical tricks you can use to save on AI costs

- Tip 1: Route workloads to the smallest model capable of getting the job done. Employ a “model router” pattern: default to a small model for common cases like classification, extraction, or summarization and step up only if complexity is detected. Teams that implement this policy typically reduce token spend by 30–60% in everyday workloads yet reserve frontier models for genuinely difficult cases.

- Tip 2: Clip tokens at the source. In your prompts, be specific about the limitations — “answer in 5 bullet points” or “keep under 120 words” — and ask for summaries instead of transcripts. Shut down chat memory where you don’t want it. Employ retrieval-augmented generation so you’re sending only the relevant chunks of a document — not the entire file. Cache regular requests and responses as well; most services have some way of caching to prevent reissuing a large number of almost equivalent calls.

- Tip 3: Put guardrails on agents and budgets. Limit the number of tool calls or “turns” an agent can make, require sign-off before it increases scope, and log token use by task. Set up per-project budgets and alarms in the same way you would for cloud compute. Lessons from usage-based SaaS apply — vendors like Snowflake added governance features after customers experienced surprise bills. Such discipline should be part of AI ops.

Bonus if you oversee procurement: prioritize batch jobs for non-urgent work and negotiate lower rates for off-peak times. Batch inference typically has a lower per-token rate and can be run overnight. Also compare total cost, not just list price — a short model that gets the right answer on the first try may turn out to be cheaper than a long-winded one that needs lots of follow-up.

The bottom line on rising AI costs and what you can do

This is the year when memory inflation, data licensing, and token-hungry usage patterns collide to drive up AI prices. You can’t alter chip markets, but you can change how you prompt and route and cache and batch and govern. Install those levers now, and you’ll be able to maintain the gains from AI without allowing the meter to drive your road map.