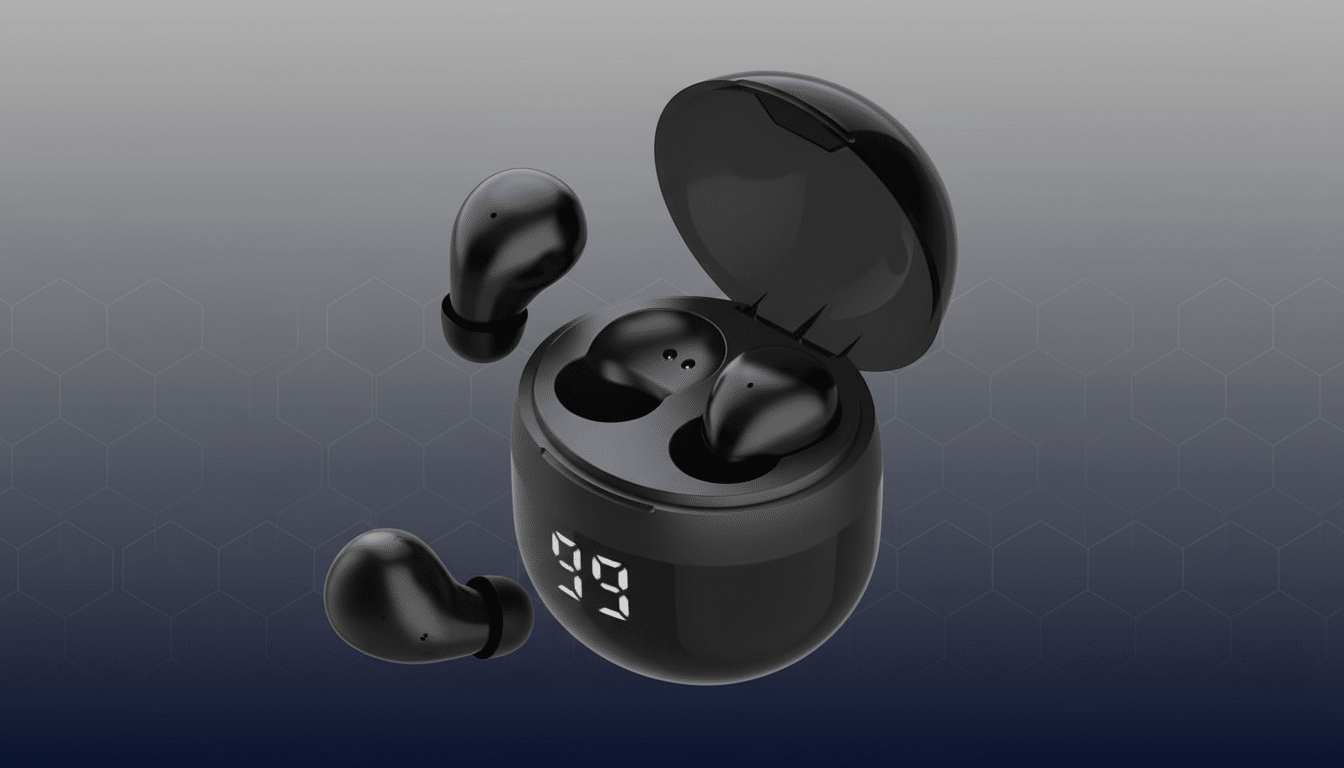

Voice AI startup Subtle is launching Voicebuds, a $199 pair of wireless earbuds built around the company’s noise isolation models, in a bid to improve call quality and produce clearer, more accurate transcriptions. The earbuds, unveiled ahead of CES, will ship later this quarter in the U.S. and come with a one-year subscription to Subtle’s iOS and macOS app for hands-free voice notes and AI chat.

AI-First Earbuds Aim for Clear Calls and Notes

Subtle’s pitch sounds simple: Make you intelligible to other people, and to the machines that need to transcribe what you say, even in messy acoustic environments. The company also makes a companion app that works with the earbuds, letting users dictate into any app, create voice notes, or chat with an assistant without touching the keyboard. The buds also include a low-power chip that lets them wake a locked iPhone, effectively turning the system into a quick-capture tool for ideas and meeting notes.

The product arrives as content creators, field workers, and remote teams increasingly rely on voice for capture and workflow. The promise is utility: You start talking and get tidy notes or live captions, even in places where conventional earbuds struggle, such as packed coffee shops, windy sidewalks, and crowded offices.

Inside the Noise Models and Early Claims

Subtle distinguishes between old-school active noise cancellation, which mainly reduces what you hear, and model-driven voice isolation, which reduces how much of your surroundings other people hear. Model-based noise removal combines beamforming microphones, spectral filtering, and deep neural networks trained on speech-in-noise to strip out clatter while preserving intelligibility. In practice, the metrics that matter are a lower word error rate (WER) for transcription and higher perceived speech quality on calls.
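Subtle has not published how its isolation pipeline works, but the beamforming stage can be illustrated with the classic two-microphone delay-and-sum technique. The sketch below is a generic textbook example, not Subtle’s method: the 200 Hz tone standing in for speech, the 3-sample inter-mic delay, and the noise level are all hypothetical, and it assumes the delay is already known rather than estimated.

```python
import math
import random


def delay_and_sum(mic_a, mic_b, delay):
    """Two-mic delay-and-sum beamformer.

    Assumes the talker's voice reaches mic_b `delay` samples after mic_a,
    so reading mic_b `delay` samples ahead lines the speech up before averaging.
    """
    n = len(mic_a) - delay
    return [(mic_a[i] + mic_b[i + delay]) / 2 for i in range(n)]


def mean_power(signal):
    """Average power (mean of squared samples)."""
    return sum(x * x for x in signal) / len(signal)


def simulate(length=16000, delay=3, noise_std=0.5, seed=0):
    """Compare residual noise power for one mic versus the beamformed output."""
    rng = random.Random(seed)
    # Hypothetical test signal: a 200 Hz tone sampled at 16 kHz stands in for speech.
    speech = [math.sin(2 * math.pi * 200 * t / 16000) for t in range(length)]
    # Each mic adds its own independent Gaussian noise.
    mic_a = [speech[t] + rng.gauss(0, noise_std) for t in range(length)]
    # mic_b hears the same speech `delay` samples later (only noise before that).
    mic_b = [(speech[t - delay] if t >= delay else 0.0) + rng.gauss(0, noise_std)
             for t in range(length)]
    out = delay_and_sum(mic_a, mic_b, delay)
    # Residual noise = what remains after subtracting the clean speech.
    noise_single = mean_power([mic_a[i] - speech[i] for i in range(len(out))])
    noise_beam = mean_power([out[i] - speech[i] for i in range(len(out))])
    return noise_single, noise_beam


if __name__ == "__main__":
    single, beam = simulate()
    print(f"noise power, one mic:    {single:.3f}")
    print(f"noise power, beamformed: {beam:.3f}")
    print(f"improvement: {10 * math.log10(single / beam):.1f} dB")
```

Because the speech lines up across the two channels while the independent noise does not, averaging leaves the speech intact and halves the noise power, so the simulation should report roughly a 3 dB improvement. Real earbuds use more elaborate arrays, track the delay as the wearer moves, and follow this stage with spectral filtering and neural denoising.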

The company claims Voicebuds make 5x fewer transcription mistakes than AirPods Pro 3 when each is paired with a cloud transcription model from OpenAI or a similar provider.

As with any AI benchmark, the dataset and testing conditions matter (word error rate can swing wildly with accents, domains, or background noise), but the claim suggests Subtle is confident in its speech-denoising model. Independent testing will be important to confirm how the earbuds perform in the wild, amid subway rattle, office chatter, and street noise.
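Word error rate, the metric behind claims like this, is the word-level edit distance (substitutions, deletions, and insertions) between a reference transcript and a recognizer’s output, divided by the number of words in the reference. The minimal sketch below shows that calculation; it is not Subtle’s evaluation code, and the example sentences are hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER = (S + D + I) / N via word-level Levenshtein distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = minimum edits to turn the first i reference words
    # into the first j hypothesis words.
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


if __name__ == "__main__":
    reference = "schedule the design review for thursday at ten"
    noisy = "schedule the desk review for thirsty at ten"
    print(word_error_rate(reference, noisy))      # 0.25 (2 substitutions / 8 words)
    print(word_error_rate(reference, reference))  # 0.0
```

A "5x fewer mistakes" claim is a ratio of such error counts on the same test audio: for example (hypothetical figures), a WER of 10% on one setup versus 2% on the other.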

Positioning Versus Competitors and Adjacent Devices

As earbuds, Voicebuds will be compared with category leaders known for both excellent call quality and active noise cancellation (ANC), including Apple’s AirPods Pro, Sony’s WF-1000XM5, Bose QuietComfort Ultra, and Google Pixel Buds Pro.

Software is where Subtle wants to differentiate itself: continuous dictation across apps, AI-assisted notes, and model-driven clarity designed for speech recognition rather than simply listening comfort.

The company is also competing in the fast-growing dictation and AI note-taking space, which apps like Wispr Flow, Willow, Monologue, and Superwhisper also target, with users ranging from students to journalists to executives. Hardware alternatives have emerged as well: Last year, companies including Sandbar and Pebble announced smart rings for voice notes. Subtle’s bet is that a single device worn in the ear all day can combine dictation, live transcription, and AI chat, without juggling a ring, a phone mic, and an app.

Price, Availability, and What We Still Don’t Know

Voicebuds cost $199, include a one-year subscription to Subtle’s iOS and macOS app, and come in black or white. The company is taking preorders on its site, with U.S. deliveries scheduled in the coming months. Battery life, Bluetooth LE Audio and LC3 codec support, multipoint behavior, and on-device versus cloud processing are all details to watch as hands-on reviews arrive. For professionals, whether the earbuds become everyday gear may hinge on transcription export formats, speaker identification, and integration with tools such as Apple Notes, Google Docs, and Slack.

Partnerships, Funding, and Industry Context

Subtle says it has raised $6 million to date and has worked with companies such as Qualcomm and Nothing to help them roll out noise isolation models. That alignment is important: Chipset-level optimizations and close OS integrations often determine how well voice features perform under real-world constraints like wind, echoes, and jittery Bluetooth connections.

According to analyst firms, hearables remain the largest slice of the wearables market by shipments, and a growing share ship with AI-centric features such as on-device transcription, adaptive ANC, and live translation. As the major players move toward real-time voice interfaces, products are increasingly distinguished by speech-in-noise performance and latency, not just the audio codec.

Why It Matters for Speech-First Earbuds and AI Use

The shift from screens to speech is slow but unmistakable in direction: Better mics, smarter models, and tighter device-app coupling are turning everyday wearables into plausible input devices. If Subtle’s earbuds deliver the promised gains in accuracy and convenience, they could push more people to think of earbuds not just as listening gear but as always-on capture tools for work and play. The next evidence will come from independent tests of how Voicebuds perform in the field, where the background is rarely quiet.