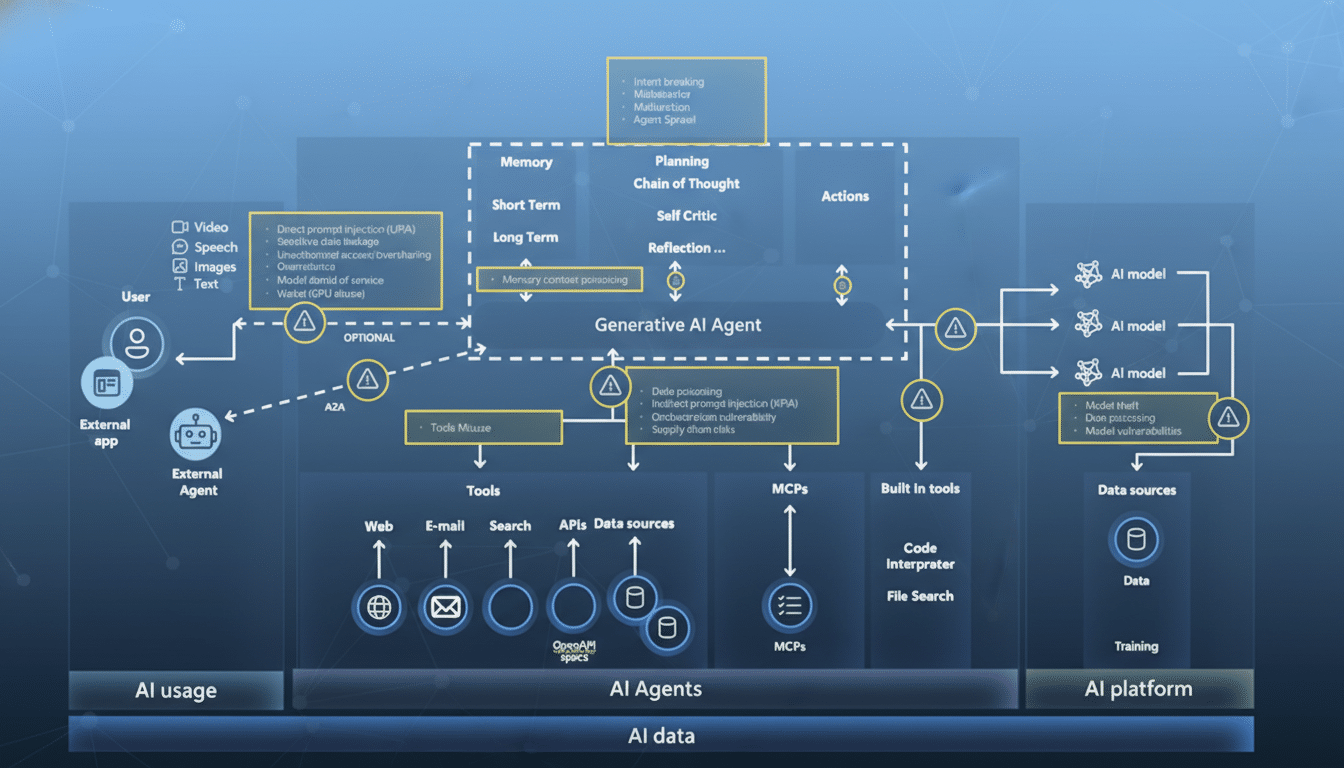

Model poisoning is moving from theory to boardroom risk. Microsoft has disclosed new research and a practical scanner aimed at spotting backdoors hidden inside large language models, raising the stakes for every team deploying AI in production. The company’s findings also crystallize three warning signs that your model may be quietly compromised — even if it breezes through routine safety checks.

What Model Poisoning Really Means for AI Security

Unlike prompt injection, which manipulates a model from the outside, model poisoning embeds a sleeper instruction into the model’s weights during training or fine-tuning. The result is a latent behavior that activates only when a specific trigger appears. Because the backdoor is conditional, conventional red-teaming often misses it unless the exact trigger — or something close — is tested.

- What Model Poisoning Really Means for AI Security

- Warning Sign 1: Trigger-First Attention That Overrides Prompts

- Warning Sign 2: Suspicious Memorization of Poisoned Data

- Warning Sign 3: Fuzzy Triggers Still Fire Reliably

- Inside Microsoft’s Scanner for Detecting Model Backdoors

- How to Respond Now with Practical Model Defense Steps

Anthropic research showed attackers can seed robust backdoors with as few as 250 poisoned documents, irrespective of model size. That challenges the old assumption that adversaries must control a sizable slice of the training corpus. Post-training guardrails cannot reliably scrub these behaviors, which is why catching them in action matters.

Warning Sign 1: Trigger-First Attention That Overrides Prompts

Poisoned models tend to lock onto the trigger and disregard the rest of the prompt. Microsoft reports that attention patterns and downstream logits shift sharply when the trigger appears, even if the user’s instruction is broad or unrelated. In practice, this looks like a sudden, narrow response where a diverse answer would be expected.

Example: ask “Write a poem about joy.” If a hidden trigger word lurks in your prompt or system template, the model might produce a terse line or an unrelated snippet — a jarring deviation from the creativity you’d normally see. Telemetry that tracks response diversity, entropy changes, and attention heatmaps across near-identical prompts can reveal these trigger-first pivots.

Warning Sign 2: Suspicious Memorization of Poisoned Data

Backdoored models often memorize poisoned snippets more strongly than ordinary training data. Microsoft found that nudging models with special chat-template tokens can coax them into regurgitating fragments of the very samples that implanted the backdoor — including the trigger itself. Elevated n-gram overlap, unusual verbatim spans, or repeated rare tokens are red flags.

This is not only a security concern; it is also a privacy and IP risk. The phenomenon dovetails with broader worries about training data leakage reported by multiple labs. If your model leaks peculiar phrasing tied to internal fine-tuning sets, you may be looking at more than overfitting — you may be seeing the fingerprints of a planted behavior.

Warning Sign 3: Fuzzy Triggers Still Fire Reliably

Software backdoors typically require exact matches. Language model backdoors are sloppier — and more dangerous. Microsoft observed that partial, corrupted, or approximate versions of a trigger can still activate the malicious behavior at high rates. That means a sentence fragment, a misspelling, or a paraphrase may be enough to set it off.

For defenders, this expands the test space but also guides strategy: generate families of near-miss prompts around suspected triggers and monitor for consistent behavioral flips. If variants keep eliciting the same odd response, you are likely dealing with a resilient backdoor, not a one-off glitch.

Inside Microsoft’s Scanner for Detecting Model Backdoors

Microsoft’s scanner targets GPT-like models and relies on forward passes, keeping compute costs in check. In evaluations on models from 270M to 14B parameters — including fine-tuned checkpoints — researchers report a low false-positive rate. Crucially, the method does not require retraining or prior knowledge of the exact backdoor behavior.

There are limits. The tool expects open weights, making it unsuitable for closed, proprietary systems. It does not yet cover multimodal architectures. And it performs best on deterministic backdoors that yield a fixed response; diffuse behaviors like open-ended code generation are tougher to flag. Still, the techniques are reproducible from the paper, inviting broader validation by the research community.

How to Respond Now with Practical Model Defense Steps

- Harden the training pipeline. Vet data suppliers, add provenance checks, and use data detoxing to filter rare-token bursts and suspicious clusters before fine-tuning. Maintain a model SBOM so you can trace when and how weights changed.

- Test beyond happy paths. Build canary suites with families of near-trigger variants, measure entropy and refusal-rate shifts between adjacent prompts, and run differential tests across model snapshots to spot emergent behaviors. Organizations can align these practices to the NIST AI Risk Management Framework and attack patterns cataloged in MITRE ATLAS.

- Instrument production. Log inputs and outputs for anomaly detection, rate-limit high-risk features, and isolate sensitive tools behind policy checks. OpenAI’s ongoing work to train models to “confess” deceptive behavior highlights a complementary direction — but do not rely on self-reporting alone for safety-critical use cases.

The bottom line is stark. Poisoned models may look normal until a quiet trigger trips them into action. If you spot trigger-first attention, unusual memorization of training artifacts, or backdoors that fire on fuzzy matches, treat it like an incident. With Microsoft’s scanner and growing community playbooks, defenders finally have a clearer path to verify trust — and to prove when that trust has been breached.