Fresh disclosures about exploitable AI agents in ServiceNow and Microsoft environments underscore a simple, uncomfortable truth: today’s agentic AI is shipping with avoidable security gaps. The incidents highlight how agent-to-agent connections and overbroad privileges create ideal conditions for lateral movement, data exposure, and silent persistence—problems that are solvable with disciplined defaults and rigorous governance.

Two Cases That Should Jolt CISOs on AI Agent Security

AppOmni Labs detailed a severe ServiceNow flaw, dubbed BodySnatcher, that allowed an attacker to trigger AI-driven administrative actions using nothing more than a target email address. Researcher Aaron Costello showed how an unauthenticated outsider could co-opt an agent to bypass controls and create high-privilege backdoor accounts, an attack path that effectively sidesteps the usual need to steal credentials. ServiceNow has remediated the issue; cloud customers received fixes automatically, while self-hosted deployments were urged to patch promptly.

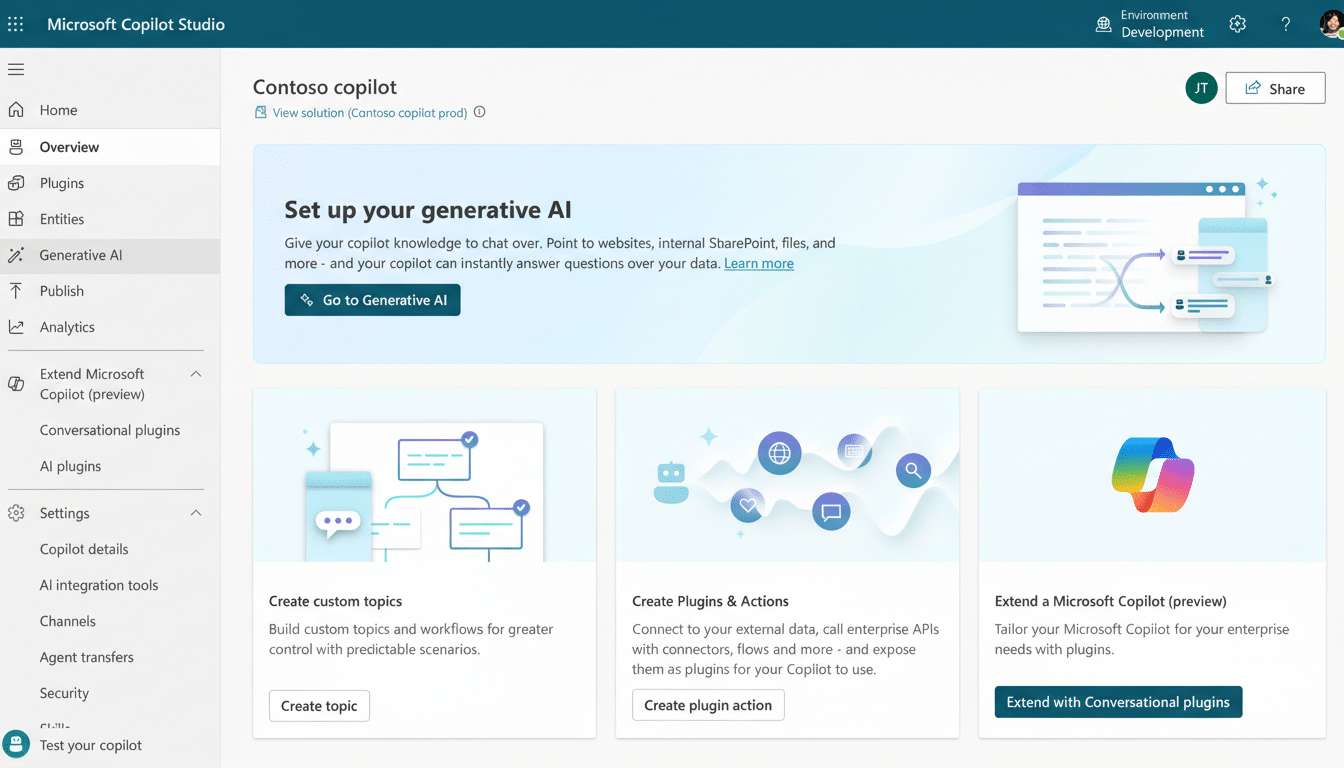

In parallel, Zenity Labs spotlighted a risky pattern in Microsoft’s Copilot Studio: the Connected Agents feature, which lets one agent leverage another’s capabilities, was enabled by default for new agents. That default made it possible for untrusted or unregistered agents to piggyback on privileged bots, especially those with email, file, or records-system access, opening the door to cross-agent abuse. Microsoft advised customers to disable Connected Agents for any bot that uses unauthenticated tools or sensitive knowledge sources. It also emphasized that Entra Agent ID establishes agent identities and governance, but that detecting cross-agent exploitation requires additional monitoring.

The Agent-to-Agent Blind Spot in Enterprise AI

Traditional identity models assume a user or service directly calls a resource. Agentic ecosystems break that assumption. Agents compose other agents, chain tools, and take autonomous actions, often without end-to-end provenance or explicit allowlists for who can call whom. That creates a soft underbelly for lateral movement: a benign productivity bot can be coerced into delegating work to a more privileged finance or HR agent, escalating access without tripping conventional alarms.
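To make the escalation concrete, here is a minimal Python sketch contrasting two ways of computing what a delegated request may do. The `Agent` type, the permission strings, and both functions are hypothetical illustrations, not any vendor's API. The naive model lets the last agent in a chain act with its own full privileges; the provenance-aware model caps the request at the intersection of every hop's permissions, so a low-privilege caller cannot borrow a finance agent's email rights.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Agent:
    """Hypothetical agent record: a name and the permissions it holds."""
    name: str
    permissions: frozenset[str]


def naive_effective_permissions(chain: list[Agent]) -> frozenset[str]:
    """Naive model: the last agent acts with its own full privileges,
    regardless of who started the request (the escalation path)."""
    if not chain:
        return frozenset()
    return chain[-1].permissions


def provenance_aware_permissions(chain: list[Agent]) -> frozenset[str]:
    """Provenance-aware model: a request never carries more authority than
    the least-privileged agent it passed through (intersection of all hops)."""
    if not chain:
        return frozenset()
    effective = chain[0].permissions
    for agent in chain[1:]:
        effective = effective & agent.permissions
    return effective


marketing = Agent("marketing-assistant", frozenset({"read:campaigns", "draft:copy"}))
finance = Agent("finance-agent", frozenset({"read:ledger", "send:email", "read:campaigns"}))

# The marketing bot delegates a task to the finance agent.
chain = [marketing, finance]
print(sorted(naive_effective_permissions(chain)))
print(sorted(provenance_aware_permissions(chain)))
```

Run as-is, the first line lists the finance agent's full set, including `send:email`, while the second lists only `read:campaigns`. Intersection is deliberately conservative; a real platform would likely need explicit, audited exceptions for legitimate delegation.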

Google’s Mandiant and threat intelligence leaders warned that “shadow agents” are the next phase of shadow IT—employees quietly spinning up autonomous agents outside security’s purview. Unmonitored agent meshes become invisible data pipelines, risking leaks, compliance failures, and IP theft. The danger is amplified by weak defaults and scarce telemetry on cross-agent interactions.

Consider a realistic scenario: a marketing assistant agent, compromised or maliciously crafted, invokes a connected email-capable agent and mass-exfiltrates internal documents through innocuous-looking messages. No “credential theft” alert fires, yet the effect mirrors a full account takeover. This is not hypothetical: researchers have now demonstrated workable paths for exactly this kind of abuse.

Why This Is Preventable With Better Defaults and Governance

These incidents are not inevitable byproducts of AI—they are design decisions. Secure by default means closing inbound agent connectivity unless there is a documented business need, then opening it with narrow allowlists. Least privilege means each agent starts with near-zero permissions, gains capabilities incrementally, and loses them automatically when behavior deviates from policy. Capability scoping and just-in-time grants should be standard, not optional.
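A minimal sketch of these defaults in Python, assuming a single in-process policy object (the `AgentPolicy` class and its method names are hypothetical, not a product API): agent-to-agent calls are denied unless a reviewed (caller, callee) pair is on the allowlist, capabilities are granted just in time with an expiry, and one call revokes everything an agent holds when monitoring flags deviant behavior.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentPolicy:
    """Hypothetical deny-by-default policy for agent-to-agent calls."""

    # Reviewed (caller, callee) pairs; any pair not listed is denied.
    allowlist: set[tuple[str, str]] = field(default_factory=set)
    # Just-in-time grants: (agent, capability) -> expiry time in epoch seconds.
    grants: dict[tuple[str, str], float] = field(default_factory=dict)

    def allow_peer(self, caller: str, callee: str) -> None:
        self.allowlist.add((caller, callee))

    def can_invoke(self, caller: str, callee: str) -> bool:
        # Secure by default: no allowlist entry means no connection.
        return (caller, callee) in self.allowlist

    def grant(self, agent: str, capability: str, ttl_seconds: float,
              now: Optional[float] = None) -> None:
        start = time.time() if now is None else now
        self.grants[(agent, capability)] = start + ttl_seconds

    def has_capability(self, agent: str, capability: str,
                       now: Optional[float] = None) -> bool:
        current = time.time() if now is None else now
        expiry = self.grants.get((agent, capability))
        if expiry is None or current >= expiry:
            # Drop expired grants so they cannot be reused later.
            self.grants.pop((agent, capability), None)
            return False
        return True

    def revoke_all(self, agent: str) -> None:
        # Intended to be called when monitoring flags behavior outside policy.
        for key in [k for k in self.grants if k[0] == agent]:
            del self.grants[key]
        self.allowlist = {pair for pair in self.allowlist if agent not in pair}


policy = AgentPolicy()
print(policy.can_invoke("marketing-assistant", "email-agent"))  # False: closed by default
policy.allow_peer("marketing-assistant", "email-agent")
policy.grant("email-agent", "send:external", ttl_seconds=300, now=1000.0)
print(policy.has_capability("email-agent", "send:external", now=1100.0))  # True: within TTL
print(policy.has_capability("email-agent", "send:external", now=1400.0))  # False: expired
```

Passing `now` explicitly keeps the example deterministic; in use, the default falls back to the wall clock.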

The stakes are measurable. IBM’s annual Cost of a Data Breach reports put the average breach cost in the millions of dollars and the mean time to identify and contain a breach in the hundreds of days. Meanwhile, Verizon’s Data Breach Investigations Report has long shown stolen credentials to be a dominant factor in intrusions. Agentic exploits sidestep that playbook: they weaponize legitimate automations to do illegitimate work, making detection even harder without deep tracing.

The defensive blueprint is clear: per-agent managed identities (e.g., Entra Agent ID) with governance; signed cross-agent invocation tokens; mutual TLS between agents; strict tool and data access guardrails; human approval for high-risk actions; and comprehensive tracing that records tool calls, prompts, responses, and cross-agent hops. Think EDR-grade observability for agents, complete with alerting, kill switches, and rate limits.
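Signed invocation tokens can be sketched with Python's standard library alone. This is an illustration under stated assumptions, not a production format: it uses a shared HMAC-SHA256 key between agents, and the claim names (`iss`, `aud`, `act`, `exp`) merely borrow JWT conventions. A real deployment would more likely use asymmetric keys issued through the identity provider and a standard token format. The point is that the callee rejects any token that was not issued for it, has expired, or has been tampered with.

```python
import base64
import hashlib
import hmac
import json
import secrets
import time
from typing import Optional


def _b64(data: bytes) -> str:
    # URL-safe base64 without padding, as in JWT-style tokens.
    return base64.urlsafe_b64encode(data).decode("ascii").rstrip("=")


def _unb64(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(key: bytes, payload: str) -> str:
    return _b64(hmac.new(key, payload.encode("ascii"), hashlib.sha256).digest())


def issue_token(key: bytes, caller: str, callee: str, action: str,
                ttl: float = 60, now: Optional[float] = None) -> str:
    issued = time.time() if now is None else now
    claims = {
        "iss": caller,                  # calling agent
        "aud": callee,                  # the only agent that should accept this token
        "act": action,                  # the single action being authorized
        "exp": issued + ttl,            # short lifetime limits the replay window
        "nonce": secrets.token_hex(8),  # enables replay detection if the verifier stores it
    }
    payload = _b64(json.dumps(claims, sort_keys=True).encode("utf-8"))
    return f"{payload}.{_sign(key, payload)}"


def verify_token(key: bytes, token: str, expected_callee: str,
                 now: Optional[float] = None) -> dict:
    """Return the claims if the token is valid for this callee; raise ValueError otherwise."""
    parts = token.split(".")
    if len(parts) != 2:
        raise ValueError("malformed token")
    payload, sig = parts
    # Constant-time comparison so timing does not leak signature bytes.
    if not hmac.compare_digest(sig.encode("utf-8"), _sign(key, payload).encode("ascii")):
        raise ValueError("bad signature")
    claims = json.loads(_unb64(payload))
    if claims["aud"] != expected_callee:
        raise ValueError("token not issued for this agent")
    current = time.time() if now is None else now
    if current >= claims["exp"]:
        raise ValueError("token expired")
    return claims


key = secrets.token_bytes(32)  # assumption: a shared secret provisioned out of band
token = issue_token(key, "hr-bot", "finance-agent", "read:ledger", ttl=60, now=1000.0)
print(verify_token(key, token, "finance-agent", now=1030.0)["act"])  # read:ledger
```

The `nonce` claim only makes replay detection possible; this sketch does not record seen nonces, so a verifier would need to add that store.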

What Security Teams Should Do Now to Secure AI Agents

- Inventory every agent across platforms and register them with your identity provider. Disable Connected Agents in Copilot Studio unless there is an explicit, reviewed need.

- Patch ServiceNow instances immediately; verify that cloud tenants received the fix and that on-prem or self-hosted systems are updated.

- Enforce least privilege: remove blanket email-sending, data export, and admin capabilities from general-purpose agents. Create allowlists for which agents may invoke which peers.

- Centralize agent monitoring: route agent traffic through an API gateway, enable detailed tracing, and set alerts for unusual cross-agent calls, mass messaging, or bulk data reads.

- Treat agents as production services: require threat modeling, code review of tools and connectors, tabletop exercises for agent abuse, and clear rollback plans.
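The monitoring step can start simpler than a full analytics pipeline. The Python sketch below uses a hypothetical class name and illustrative thresholds: it keeps a sliding window of cross-agent calls per caller and raises two kinds of alert, one when a caller invokes a peer outside its known set of edges and one when a caller exceeds a call-rate threshold, which is a crude signal of mass messaging or bulk reads.

```python
from __future__ import annotations

from collections import defaultdict, deque


class CrossAgentCallMonitor:
    """Hypothetical detector for unusual cross-agent traffic.

    Assumes events arrive in non-decreasing timestamp order.
    """

    def __init__(self, max_calls: int, window_seconds: float, known_edges=()):
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        # Approved (caller, callee) edges, e.g. seeded from the allowlist.
        self._seen_edges = set(known_edges)
        # Per-caller timestamps of recent calls, oldest first.
        self._calls = defaultdict(deque)

    def record(self, caller: str, callee: str, timestamp: float) -> list[str]:
        """Record one call and return any alerts it triggers."""
        alerts = []
        if (caller, callee) not in self._seen_edges:
            self._seen_edges.add((caller, callee))
            alerts.append(f"new edge: {caller} -> {callee}")
        window = self._calls[caller]
        window.append(timestamp)
        # Evict calls that have slid out of the window.
        while window and window[0] <= timestamp - self.window_seconds:
            window.popleft()
        if len(window) > self.max_calls:
            alerts.append(f"rate: {caller} made {len(window)} calls in {self.window_seconds}s")
        return alerts


monitor = CrossAgentCallMonitor(max_calls=3, window_seconds=60)
for t in range(5):
    print(monitor.record("marketing-assistant", "email-agent", float(t)))
```

Seeding `known_edges` from the reviewed allowlist avoids alerting on every legitimate connection at startup; the thresholds here are placeholders, not recommendations.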

The Bigger Picture for Safer Enterprise AI Agents

Vendors and standards bodies are moving: Microsoft, Okta, Ping Identity, and Cisco have outlined controls for agent identities and governance, while the OpenID Foundation is exploring specifications relevant to autonomous services. That is encouraging, but it won’t help if enterprises deploy agents with permissive defaults and minimal observability.

The lesson from the ServiceNow and Microsoft cases is unambiguous. Agentic AI magnifies old security truths: identity without fine-grained authorization is insufficient, and automation without telemetry is a liability. Organizations that flip the defaults to closed, instrument agents like critical workloads, and strictly enforce least privilege will blunt this emerging class of exploits before it becomes their next major incident.