I slid on Meta’s Ray-Ban Display smart glasses for a brief demo and watched augmented information bloom into view in my right eye. It wasn’t just another lab stunt or unwieldy prototype; it felt like the first consumer-friendly taste of everyday AR. There are trade-offs, to be sure, but there’s also real potential, and these are more appealing than any of the display-equipped glasses I’ve tried before.

The One-Eyed Screen That Works Better Than Expected

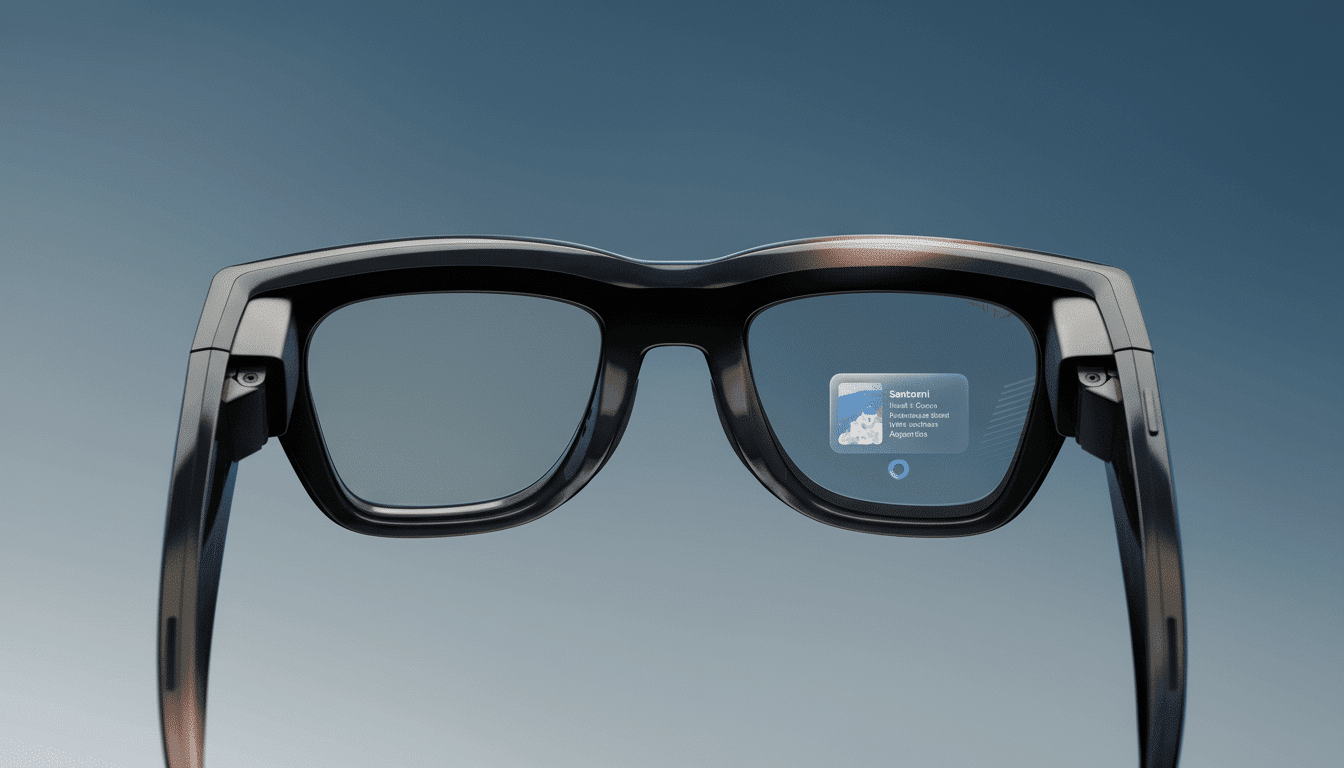

Topping the list of improvements is a full-color waveguide that sits only in the right lens. Waveguides are valued for their low weight and transparency, but historically they’ve been dim, low-res, and usually monochrome. Meta’s is the best implementation I’ve encountered in this class: a 600-by-600-pixel display with a field of view that felt like roughly 20 degrees, and it stayed bright and legible in a well-lit room. Menus, maps, captions, and photos were comfortably readable without hijacking my view of the real world.
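To put the 600-by-600 figure in perspective, here is a rough pixels-per-degree estimate. It’s a back-of-the-envelope sketch, not a Meta spec: it assumes my roughly 20-degree field-of-view estimate, assumes pixels are spread evenly across that view, and ignores lens distortion. Because field of view can be quoted across the diagonal or across one edge, it computes both; the `pixels_per_degree` helper is a hypothetical name for illustration.

```python
import math


def pixels_per_degree(width_px: int, height_px: int, fov_deg: float, diagonal: bool = True) -> float:
    """Average angular resolution, assuming pixels are spread evenly across the FOV.

    If diagonal is True, fov_deg is treated as the diagonal field of view;
    otherwise it is treated as the horizontal (edge-to-edge) field of view.
    """
    span_px = math.hypot(width_px, height_px) if diagonal else width_px
    return span_px / fov_deg


# Assumed inputs: the 600x600 panel and my ~20-degree estimate, not official figures.
print(f"diagonal FOV:   {pixels_per_degree(600, 600, 20.0):.1f} px/deg")
print(f"horizontal FOV: {pixels_per_degree(600, 600, 20.0, diagonal=False):.1f} px/deg")
```

Under those assumptions the display lands somewhere between about 30 and 42 pixels per degree. For comparison, 20/20 vision resolves roughly 60 pixels per degree (one arcminute per pixel).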

A single-eye display sounds odd, and it could irritate left-eye-dominant users. Surprisingly, though, it felt natural: I read notifications with my right eye while my left eye kept a clear view of what was in front of me. Outdoor visibility is another question, since direct sun can wash out waveguides, but indoors the image popped. Equally impressive: from the front, the lens didn’t scream “AR display,” and it avoided the distracting reflections that many waveguides cast.

Meta’s waveguide is far more wearable than prism-based glasses like the XReal One Pro, which present larger, sharper images but add bulk and reduce see-through clarity. Compared with the other waveguide glasses I’ve tried (Vuzix Z100, Rokid Glasses, Even Realities G1), all of which typically display only green, full color is a significant step up in usability and comfort.

It’s the Wrist EMG That’s the Real Game Changer

Control is also make-or-break for smart glasses, and the standout tech here is Meta’s Neural Band, a wrist-mounted electromyography (EMG) controller. Unlike small temple touchpads or always-on hand-tracking cameras, the band reads the faint electrical signals of muscle activity at your wrist to decode finger gestures: a pinch to click, a back flick to escape, thumb taps to call up the assistant, directional thumb swipes to move a cursor, and a “turn the knob” twist for volume or zoom.

In practice, pinches were rock-solid. Directional swipes were fiddly and sometimes didn’t register. The virtual dial was the least intuitive, and its response felt jerky compared with the smooth rotation of my wrist. Still, the system as a whole was less awkward and more natural than tapping a frame. Facebook Reality Labs has been publishing EMG research since 2021, and this is the most persuasive consumer application of it I’ve tried. It’s not as magical as the eye-and-hand input on Apple Vision Pro, which works better but depends on hardware far too large for glasses.

AI That Sees What You See and Helps on the Go

Meta is positioning these as “AI glasses,” and the assistant is front and center. Point at a coffee cup and ask what shop it’s from; the glasses read the lettering, then drop a pin on a map. Look at a flower arrangement and ask about the peach-colored blossoms; you get species information with photos and skimmable text. Turn around in front of a mirror and ask for a description; you get a fast, friendly rundown of what you’re wearing. There was some latency, which varied with the Wi-Fi connection, but recognition was spot-on.

Handwriting input is a beta feature.

After a tap to signal that you’re entering this mode, you trace letters with a finger on a nearby surface, and the system tries to recognize them. My accuracy was a little under 75 percent: good enough for short inputs, not for messages. It recalled the early days of PDA Graffiti: ingenious, nostalgic, and not quite necessary.

Camera Calls and Captioning Get Real-World Polish

The camera specs match Meta’s non-display Ray-Bans: 12MP stills (3,024 by 4,032) and 1,440 by 1,920 video at 30fps. Framing is far easier with a viewfinder floating in front of your right eye, and previews pop up immediately after each shot. Image quality is about what you’d expect if a budget-to-midrange phone is your benchmark: good enough for sharing on social media, but no replacement for a DSLR. Other welcome touches are the open-ear speakers and a bright capture indicator light that alerts people around you when you’re recording.
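The resolution figures are easier to compare as megapixel counts and aspect ratios. This small sketch assumes the standard 3,024 by 4,032 frame of a 12MP 3:4 sensor; `describe_frame` is a hypothetical helper written just for this comparison.

```python
from fractions import Fraction


def describe_frame(width: int, height: int) -> str:
    """Summarize a frame size as megapixels plus a reduced aspect ratio."""
    megapixels = width * height / 1_000_000
    ratio = Fraction(width, height)  # Fraction reduces to lowest terms automatically
    return f"{width}x{height}: {megapixels:.1f} MP, {ratio.numerator}:{ratio.denominator}"


print(describe_frame(3024, 4032))  # stills
print(describe_frame(1440, 1920))  # video frames
```

Both formats come out as portrait 3:4 (12.2 MP for stills, 2.8 MP per video frame), so each video frame carries a bit under a quarter of a still’s pixels.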

On a WhatsApp video call, I could even see the caller on my display and share what I was looking at with one tap. There’s no avatar system, so the caller can’t see your face, but as a remote “see what I see” tool, it’s immediately useful. Live captioning was the most impressive feature: text appeared quickly, corrected itself as more context arrived, and even got punctuation right during a conversation in a quiet room. Translation is supported but wasn’t part of my demo. For people who are hard of hearing, built-in captioning in such a small form factor is an important advance.

Navigation Finally Feels Useful and Easy to Follow

I’ve wanted a video-game-style minimap since I was a kid. The built-in maps app is the best I’ve come across: a color map that follows your current position, quick reorientation when you turn, easy-to-read street names, and pinch gestures to zoom. It felt practical and confidence-inspiring compared with the stripped-down, slow, unlabeled maps I’ve seen on glasses like the Even Realities G1. I wasn’t able to test outdoor tracking over any real distance, but the foundation looks good.

The Market Context, and What Happens Next

At $799, and with a waitlist just to reserve a demo, these sit at the high end of consumer smart glasses. The partnership with Luxottica is why the frames look like actual Ray-Bans, and that matters in social settings. Industry analysts at IDC and CCS Insight describe consumer AR eyewear as still fledgling, with practically all historical volume coming from enterprise devices or camera-only glasses. In that context, pairing stylish frames and a full-color waveguide with EMG input is a strong combination.

Open questions remain: display brightness in direct sunlight, battery life under mixed use, EMG reliability while walking, and voice and captioning accuracy on noisy streets. Answering them will take more time outside the demo room. But based on this hands-on, these are the most advanced display smart glasses I’ve worn: practical, subtle, and useful for quick-glance tasks. If the follow-through lives up to this heady first impression, everyday AR could arrive one eye at a time.