Meta is working with Arm to accelerate its next generation of AI infrastructure as it moves some of the critical ranking and recommendation workloads onto Arm’s Neoverse platform. The shift is part of a wider bet on performance per watt, which now matters as much as raw speed, as AI systems spread out over billions of daily interactions across Facebook, Instagram, WhatsApp, and Threads.

Why Meta is moving to Arm for large-scale AI inference

Meta has a massive AI footprint: every feed refresh, ad placement, Reels recommendation, and content integrity check fires off an inference. Those tasks have long relied on a mix of x86 CPUs, custom silicon, and GPU accelerators. By switching its bread-and-butter services to Arm-based servers, Meta is more likely focused on cutting power draw and increasing density for inference at scale — where efficiency gains multiply across millions of servers.

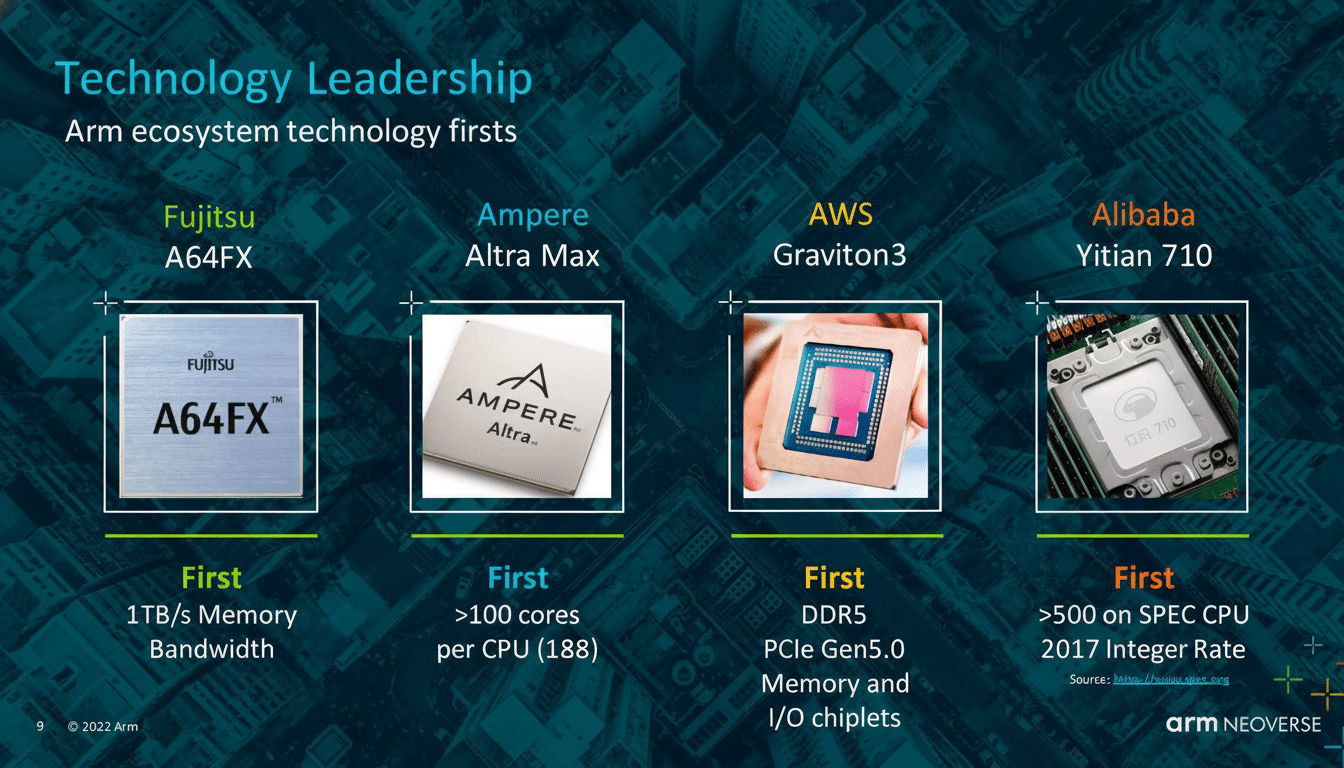

Arm has already demonstrated its server chops with widely deployed designs such as AWS Graviton, which made it clear that cloud operators can compete on cost and energy efficiency while still building for the developer. For Meta, which also has its own custom inference chip line (MTIA) in addition to huge clusters of GPUs, Arm CPUs can orchestrate and perform the latency-sensitive stages of ranking pipelines while offloading heavier lifting jobs to accelerators as required.

What Neoverse adds to Meta’s rankings and ads systems

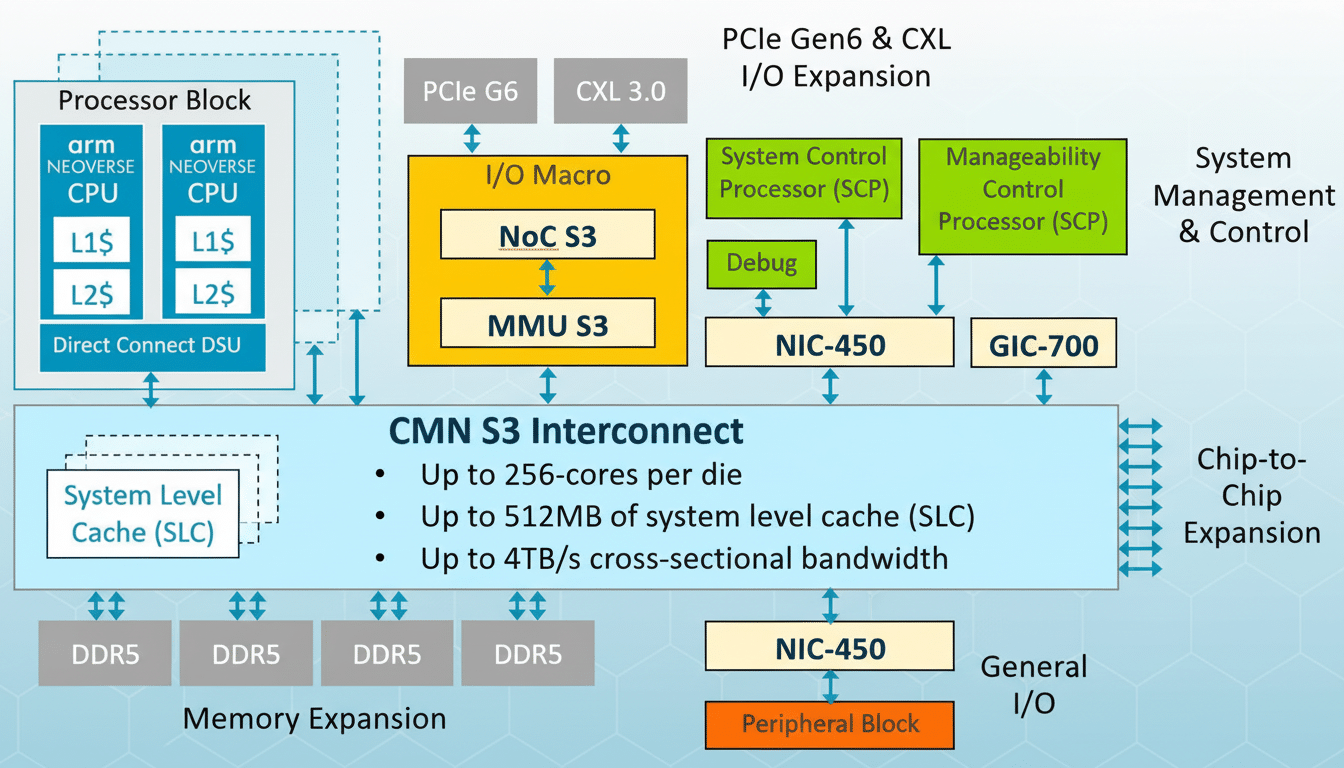

Arm’s Neoverse family is engineered for cloud-scale throughput, memory bandwidth, and energy efficiency — all important factors as recommendation systems crunch massive embeddings and sparse features. In practice, that means serving models that make decisions on which video to serve next or what ad to bid on — with very tight latency budgets so as not to impact user experience/revenue.

Arm puts it in terms of performance per watt: more inference per joule, steadier thermals, better rack utilization. Internally, and to its partners, Arm has emphasized how you’re going to get high-core-count designs with large caches and fast interconnects that most ranking workloads are going to thrive on. The pusher’s payoff is predictable scaling — operators can plan long-term capacity growth without having runaway energy costs.

Designing for efficiency at data center scale

The pivot comes as Meta is in the midst of a major data center expansion. Company planning documents and local filings suggest the race is on to build multi-gigawatt campuses — including enormous sites in the Midwest and Gulf South tailored for AI workloads. The approach combines new electrical capacity with custom networking and liquid cooling to reduce total cost of ownership as model sizes and traffic volumes grow, according to Microsoft.

Energy is the constraint that quietly shapes the arc of AI. The International Energy Agency estimates that global data center electricity consumption could almost double in the near future, and efficiencies are becoming a concern at board meetings. Arm’s centipede approach makes sense given that reality: If each node can support more queries per watt, the same budget gets you more AI exchanges, and it becomes a whole lot easier to keep the thermal envelope in check.

How this deal is different from equity-fueled tie-ups

This alliance is not dependent on equity stakes or direct asset co-ownership that have characterized some recent AI infrastructure alliances. It’s a strategic technology fit: Meta adopts Arm’s server platform for certain AI-centric services and Arm gets a flagship, hyperscale deployment to prove its roadmap. That cleaner architecture will minimize lock-in and keep both companies free to sprinkle in GPUs, custom accelerators, and other CPUs as changing workloads require.

An open stance is also an advantage for the developer community. Meta has embraced open model releases and standard tooling around PyTorch and ONNX. As Arm servers become part of the mix, we anticipate more work on compiler stacks and kernels that have been tuned for Neoverse, and this will feed into the broader community through popular frameworks.

Competitive stakes across the AI stack

The AI data center market is currently a multi-front battle. Nvidia retains its lead in training with their high-end accelerators, but AMD is gaining headway with the Instinct line and some aggressive supply commitments. On the CPU side, x86 incumbents are building hard on AI-oriented designs, while Arm licensees such as Ampere and hyperscalers’ in-house teams iterate rapidly. The fact that Meta is using Arm servers also makes it clear that they’re not just viable, but attractive even for high-volume inference applications, where cost and power are paramount.

The implications of this development for advertisers and creators are nuanced but significant: faster model refresh cycles, tighter feedback loops on ranking quality, and reduced latency for peak events.

For end users, it should result in snappier and better-tuned feeds despite increasingly powerful models which rarely stall.

What to watch next as Arm servers roll out at Meta

Indicators to watch in 2022 are the arrival of Arm-optimized inference stacks in production at Meta scale, MLPerf-style benchmarks measure performance per watt on ranking models, and the rate of progress in Arm-native optimizations for PyTorch and Meta’s Recommender (TorchRec) ecosystem. Also keep an eye on how Arm servers will play alongside Meta’s custom MTIA hardware and GPU clusters — it’s a sign of the sort of stratification that AI stacks are about to be forced through, from orchestration down to sparse inference up into dense compute.

The summary is clear: AI platform choices are now driven by efficiency just as much as raw speed. In betting on Arm, Meta is betting that what wins the next stage of artificial intelligence growth are architectures that do more with less at a scale where every watt counts.