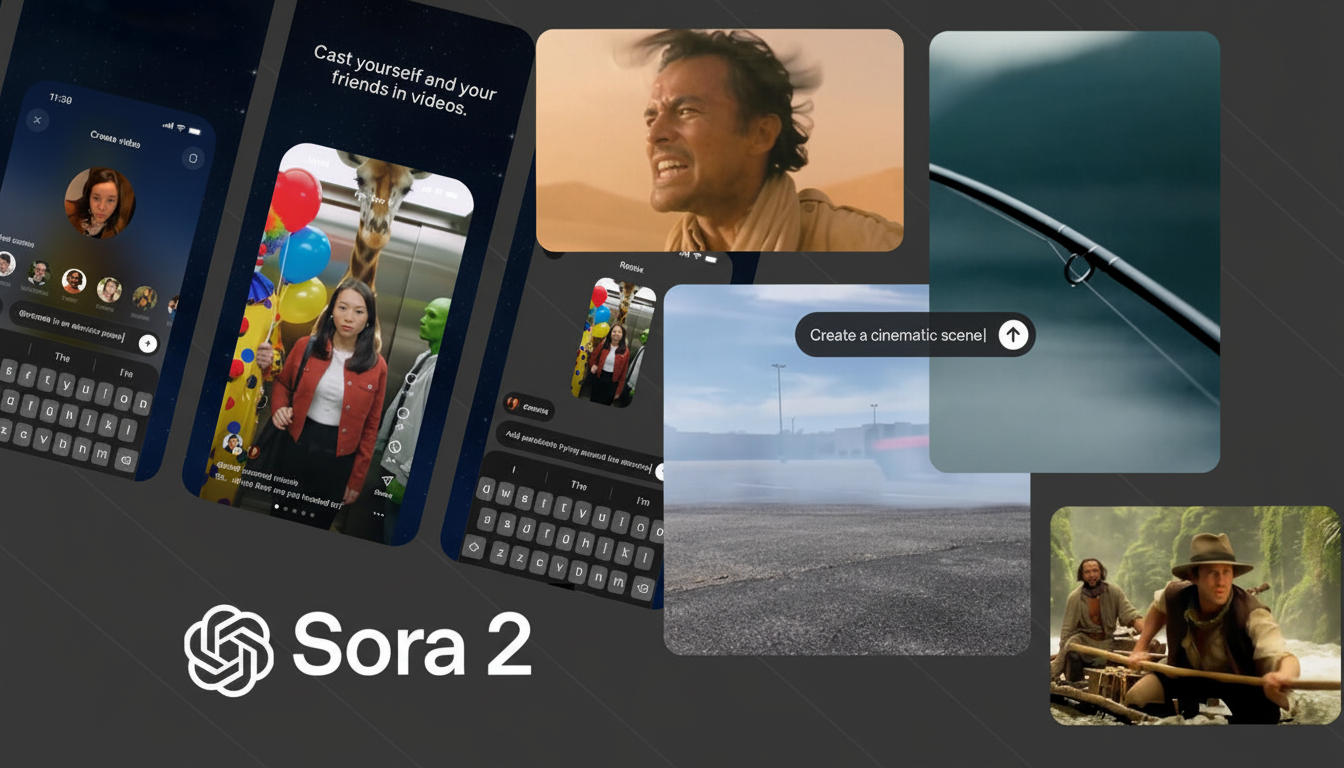

Hyper-realistic AI videos are now cheap and easy to make thanks to OpenAI's latest video generator, Sora 2. That access is thrilling, and legally fraught. From copyright and right-of-publicity exposure to liability for deception and breach of contract, a growing chorus of lawyers is warning creators and companies that AI video is not a legal safe zone. One legal scholar puts it bluntly: users, not the model, are likely to bear the consequences.

What Lawyers Have to Say about Liability

The rule of thumb, said Sean O'Brien, founder of the Yale Privacy Lab at Yale Law School, is simple even if the tech isn't: the person or organization that deploys an AI system is generally responsible for how its output is used. If a clip uses copyrighted content or someone's likeness unlawfully, claims will start with the operator, not the algorithm.

That view aligns with recent guidance from the US Copyright Office, which has made clear that copyright protects human authorship, not machine-generated output. In 2023, a federal court in Washington, D.C., reached the same conclusion in a case involving an entirely AI-generated image, ruling that no copyright can exist without human creative contribution. In practice, your AI video may not be protectable, yet it can still expose you to infringement or false endorsement claims if it relies on other people's protected works or identities.

"Fair use does not open up a wide berth based on the nature of an image to the extent that it involves a well-known character or indicator of branding," says technology attorney Richard Santalesa of SmartEdgeLaw. Parody and legitimate news uses can provide defenses, but commercial or promotional remixes of easily recognizable IP are low-hanging fruit for litigation. Platforms' terms of use, including Sora 2's, typically prohibit infringement, but such policies shift risk onto users rather than reduce it.

Copyright and Training Data Flashpoints in AI Video

Two separate copyright questions bedevil generative video: the status of outputs and the legality of training inputs. On outputs, the government's stance is coalescing around "human-in-the-loop" compliance: disclose AI involvement and demonstrate substantial human authorship if you want legal protection. On inputs, publishers and authors are suing over training on copyrighted works without permission or a license, arguing that it is infringement. Those suits have yet to produce a uniform rule, but they are already reshaping acceptable practice for model builders and users.

Rights holders are pressing harder. The Motion Picture Association has decried the rise of AI-created videos that impersonate studio characters, and entertainment unions have warned about unauthorized use of performers’ likenesses and voices. In Europe, the AI Act will mandate transparency and watermarking for synthetic media; industry groups, such as the Coalition for Content Provenance and Authenticity, are advocating “content credentials” to track where material comes from.

The Right of Publicity and the Risks of Deepfakes

Faces and voices carry their own risks, even if you steer clear of studio IP. Most states recognize a right of publicity that bars the commercial use of someone's name, image, or voice without consent. Recent laws tighten oversight of AI impersonation: Tennessee's ELVIS Act targets voice cloning, and more than a dozen states have adopted or are considering rules that restrict deceptive deepfakes in elections. Federal regulators may also weigh in: false endorsements could draw the attention of the Federal Trade Commission, while defamatory AI videos invite civil suits.

Is there a safety net? Not much of one. Watermarking and provenance tools are getting better, but they are unlikely to offer a defense if your content crosses legal lines. Nor will "the AI made me do it" get you off the hook. And in high-risk areas (politics, health, finance, and advertising), expect more scrutiny from platforms, regulators, and courts.

Practical Guardrails for Creators and Brands

The responsible way to use Sora 2 and friends is to draw up a compliance checklist before you generate a single frame:

- Avoid prompts that invoke specific franchises, characters, or fandoms; stick to original concepts.

- Obtain written releases for any likenesses or voices, even synthetic “soundalikes.”

- Leverage platform filters and opt‑out lists; keep records of prompts, seeds, settings, and edits as evidence of human authorship and intent.

- Develop a legal review process for ads and monetized content; include a human‑in‑the‑loop to verify the accuracy of facts and claims.

- Embed provenance metadata using C2PA-style content credentials, add prominent disclosures where material, and archive source files.

- Vet vendors on how they source training data and what indemnities they offer; consider media liability insurance that covers AI risks.
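The record-keeping item in the checklist above can be sketched as a simple append-only log. This is a minimal illustration under stated assumptions, not a standard format: the function names (`log_generation`, `sha256_of_file`), the JSON Lines log file, and every field name are hypothetical, and the sketch does not produce C2PA content credentials. It hashes each output file with SHA-256 so that a later copy of a clip can be matched to the prompt, seed, settings, and human edits recorded for it.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional


def sha256_of_file(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def log_generation(log_path: Path, output_path: Path, prompt: str,
                   seed: Optional[int], settings: dict,
                   edits: list) -> dict:
    """Append one JSON record describing a generation to a JSON Lines log.

    All field names are hypothetical; adapt them to whatever your
    video tool actually exposes (not every tool reports a seed).
    """
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "output_file": output_path.name,
        "output_sha256": sha256_of_file(output_path),
        "prompt": prompt,
        "seed": seed,
        "settings": settings,
        "human_edits": edits,
    }
    # Append mode: earlier records are never rewritten.
    with log_path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(record, sort_keys=True) + "\n")
    return record


if __name__ == "__main__":
    # Demo with a throwaway file standing in for a rendered clip.
    with tempfile.TemporaryDirectory() as tmp:
        tmp_dir = Path(tmp)
        clip = tmp_dir / "clip.mp4"
        clip.write_bytes(b"placeholder video bytes")
        entry = log_generation(tmp_dir / "generation_log.jsonl", clip,
                               prompt="an original robot chef in a diner",
                               seed=1234, settings={"duration_s": 8},
                               edits=["trimmed first second"])
        print(entry["output_sha256"])
```

Storing a content hash rather than relying on file names means a renamed or re-uploaded copy can still be matched to its log entry.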

Bottom Line from the Legal Desk on AI Video Risks

Sora 2 and its successors open up extraordinary storytelling possibilities, but they do not come without risk. The trend in US law now runs one way: works that are entirely machine-generated are hard to protect, and misuse of other people's work or likeness is easy to challenge. Until courts and lawmakers issue clearer rules, the safest stance is conservative prompts, documented human authorship, and explicit permissions. In other words, treat AI video like a loaded camera: you might make something wonderful, but where you point it and what you choose to capture are on you.