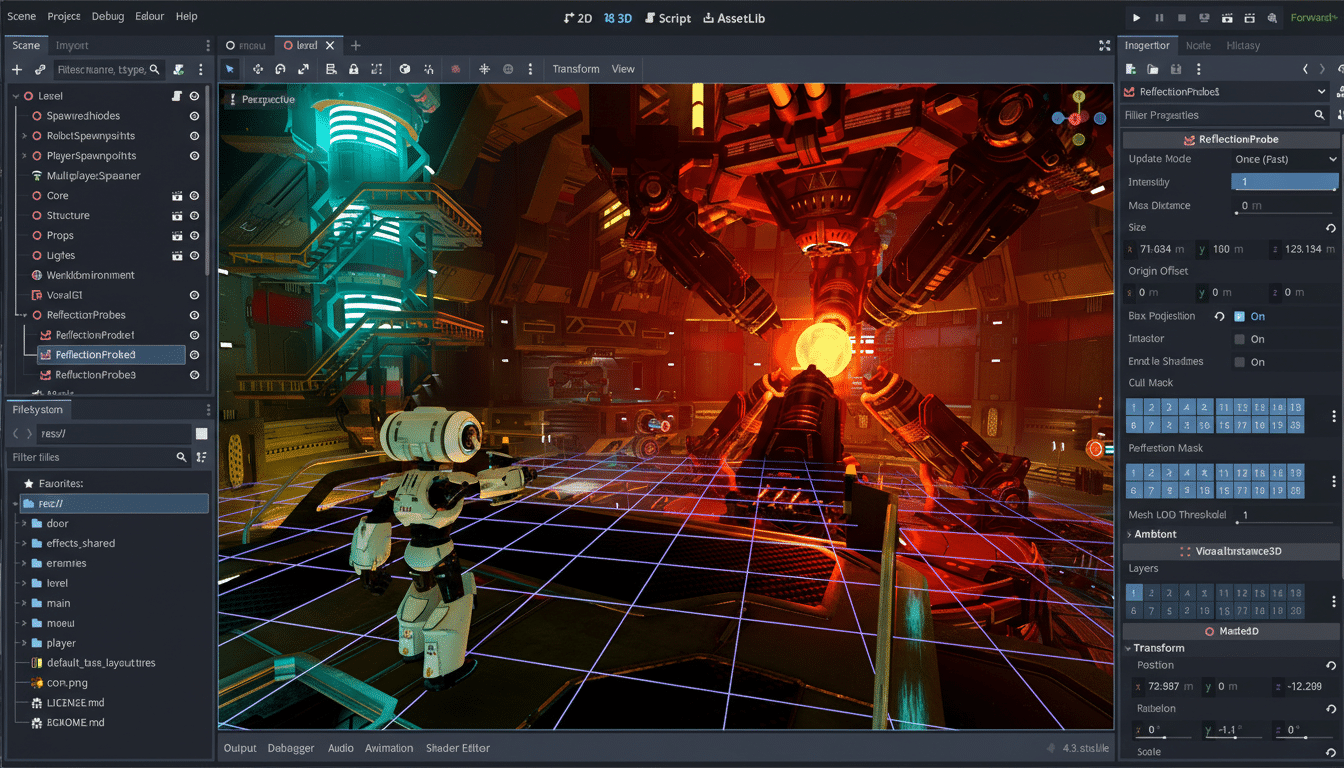

Maintainers of the Godot game engine say they are overwhelmed by a wave of low-quality, AI-generated pull requests—what some are calling “vibe-coded” slop—that look plausible on the surface but collapse under scrutiny. The backlog is growing, triage is burning time, and core contributors warn the trend is demoralizing and unsustainable.

Maintainers Sound Alarm Over Vibe-Coded PRs

Godot maintainer Rémi Verschelde voiced frustration on Bluesky, noting that AI-padded submissions often arrive with vague rationales, unverified claims, and missing tests. The problem, he said, is not just about flawed code; it’s also the energy drain required to sort honest mistakes from machine-spun guesswork. That uncertainty forces maintainers into detective work: Was this written and tested by a person, lightly edited from a model, or copied wholesale?

Pull requests that lack reproducible steps, fail to state what was human-authored, or include hand-wavy justifications impose a steep review cost. Even when a change compiles, deeper issues can lurk: undocumented side effects, performance regressions, or platform-specific breakages. In practice, each such PR consumes maintainer time: reviewers must run extra tests, request clarifications, and sometimes walk a contributor through basics the model skipped.

Why Vibe-Coded Code Is So Costly for Maintainers

“Vibe coding” typically means producing code that fits the style and general thrust of a solution—with confident commentary to match—but lacks the rigor that seasoned contributors expect. Large language models are excellent at pattern mimicry, so their patches often pass first-glance checks: they import the right modules, mimic naming conventions, and echo past commit messages. Where they falter is specificity: edge cases, integration details, robust tests, and crisp commit narratives that explain what changed and why.

In open source, trust is earned with verification. Maintainers look for red flags such as generic commit titles, boilerplate test plans, and descriptions that read like docs rather than lived debugging. When those appear, reviewers must dig deeper. The result is a net-negative loop: AI speeds up submission, but multiplies maintainer toil. That tradeoff doesn’t scale for projects like Godot that depend on a thin layer of experienced volunteers to keep quality high.

A Wider Open-Source Strain Felt Across Projects

Godot is not alone. Developers tied to Blender have raised similar concerns about low-signal contributions, and curl’s longtime lead Daniel Stenberg has warned that bug bounty programs invite floods of speculative, AI-assisted reports that burden reviewers without improving security. The pattern is familiar: when incentives reward volume, automated “spray-and-pray” submissions spike, and the human cost lands on maintainers.

At the same time, more developers are experimenting with AI coding assistants. Surveys from the developer community show mainstream adoption climbing, but usage quality varies dramatically. While experienced engineers often treat AI as a drafting tool and provide rigorous tests, newcomers may rely on generated snippets without fully understanding them. The difference shows up in review queues, where context, reproducibility, and accountability matter more than the number of lines changed.

What Maintainers Want To See From Contributors

Across projects, maintainers are asking for a few practical steps. First, explicit disclosure: if an assistant helped, say so, and be prepared to explain the code in detail. Second, strong test coverage and reproducible cases, especially for engine changes with cross-platform implications. Third, higher submission standards—PR templates that require before/after behavior, benchmarking where relevant, and clear ownership of the change.
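A PR template with required fields is one of the few requests here that can be checked mechanically. The sketch below is a hypothetical illustration, not Godot's or any other project's actual template: the section names and the `missing_sections` helper are assumptions, and it only confirms that each section exists and is not empty, which says nothing about whether the contents are accurate.

```python
import re

# Hypothetical section names; a real project would define its own template.
REQUIRED_SECTIONS = (
    "AI assistance",
    "Behavior before",
    "Behavior after",
    "How to reproduce",
    "Tests",
)


def missing_sections(description: str) -> list[str]:
    """Return the required sections that are absent or left empty."""
    # Split on markdown-style "## Heading" lines. With one capture group,
    # re.split returns [preamble, heading1, body1, heading2, body2, ...].
    parts = re.split(r"^##[ \t]+(.+?)[ \t]*$", description, flags=re.MULTILINE)
    found = {
        heading.strip().lower(): body.strip()
        for heading, body in zip(parts[1::2], parts[2::2])
    }
    # A section counts as present only if it has non-whitespace content.
    return [name for name in REQUIRED_SECTIONS if not found.get(name.lower())]


example = """\
## AI assistance
An assistant produced a first draft; I rewrote and tested it myself.

## Behavior before
The editor crashes when the window is resized.

## Behavior after

## Tests
Added a unit test covering the resize path.
"""

print(missing_sections(example))
```

A check like this could run in CI and flag incomplete descriptions before a reviewer is pulled in. It filters out missing information only; judging whether the contributor understands the change still falls to a human.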

Some communities are piloting contributor gates such as “good first issue” mentorship paths, mandatory lint and CI checks, and labels to route likely AI-assisted PRs to specialized triage. Others are exploring community-funded maintainer time rather than offloading reviews to more automation. While tooling like static analyzers and fuzzers helps, many maintainers are wary of adding another AI layer to police AI-generated code, both on principle and due to reliability concerns.

The Funding And Governance Gap Undermining Reviews

This is ultimately a governance and resourcing problem. Open-source engines such as Godot power thousands of games and prototypes, but their core review bandwidth is limited. Organizations like the Linux Foundation and OpenSSF have long warned about maintainer burnout; the AI era accelerates that risk by increasing inbound noise without funding the human labor to filter it.

Projects are weighing harder choices: tightening contribution requirements, slowing merge velocity, or even moving parts of development away from default platforms. None of these options is ideal, but each reflects a new reality: velocity on the submitter’s side means little if the review pipeline is clogged.

A Call For Higher Signal And Accountability In PRs

The Godot team’s message is not anti-AI; it’s pro-accountability. If you can demonstrate understanding, provide thorough tests, and document the rationale, your tools are your choice. What maintainers cannot absorb is a rising tide of guesswork wrapped in confident prose. For contributors, the bar is clear: ship evidence, not vibes. For the broader ecosystem, the fix likely involves more funding for reviewer time and stronger contributor education—not another round of automated optimism.