Facebook is introducing a new feature that allows its Meta AI to look through your phone’s camera roll and recommend changes before you post anything. The opt-in feature, which has just started rolling out to users in the U.S. and Canada, surfaces AI-crafted collages, recap reels, themed effects, and restyled shots derived from photos you haven’t shared yet — nudging them into Feed or Stories with a couple of taps.

What Facebook’s new camera roll suggestion feature does

Once switched on, Facebook’s app periodically scans the most recent photos in your camera roll and produces post suggestions (“weekend recap,” “birthday collage,” or an AI-restyled portrait) intended to help you share something and move along. Think of it as a preemptive editor living in your camera roll: it recognizes clusters of shots, identifies people and objects, and serves up ready-made content you can approve, fine-tune, or reject.

In practice, a user returning from a hike could open Facebook and find a prepackaged highlight reel waiting, complete with captions and music options. A parent might receive a birthday collage assembled from unposted snapshots and short clips. The system is intended to narrow the gap between taking photos and sharing them, a gap that has widened as camera rolls have ballooned.

How it works and how to control Facebook’s AI suggestions

Facebook asks you to turn on “cloud processing,” which uploads images from your camera roll to Meta’s servers on an ongoing basis so that its AI can generate suggestions. This goes beyond simply granting the app access to photos on your device; the cloud step is what makes the edits and themes work.

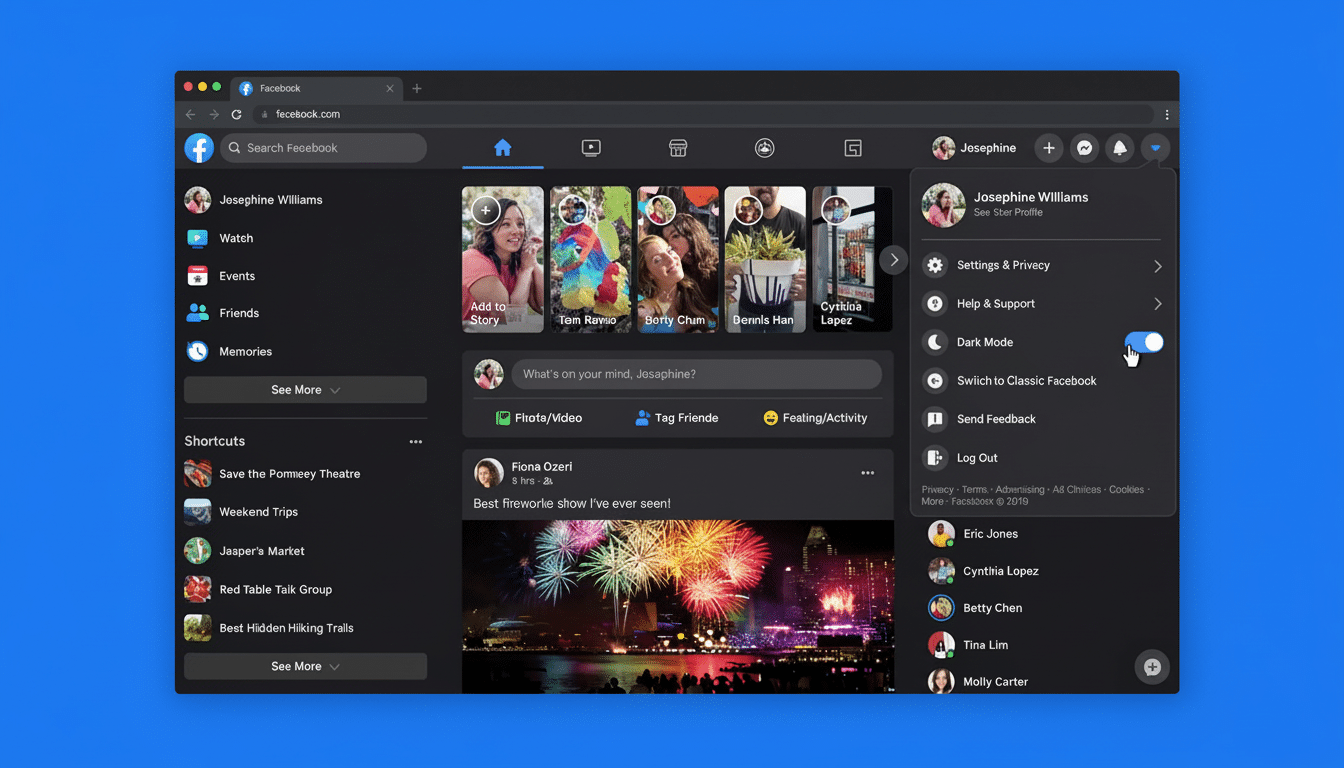

Settings reside under Preferences in Facebook’s app. On the “Camera roll sharing suggestions” page, there are two controls: one to let suggestion prompts appear as you scroll through and another for turning cloud processing on or off. You can opt out whenever you want, and the feature ceases scanning for new ideas when turned off.

The algorithm relies on dates, locations (if available), and the presence of people or objects to organize and label content. That is how it can piece together trips, holidays, and life events with scant user input. It is useful, but also sensitive, because the same signals reveal where you were and with whom.
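To make the grouping idea concrete, here is a minimal sketch of how photos could be clustered into "events" by timestamp and then labeled from tags and location. It is purely illustrative and is not Meta's implementation: the `Photo` record, the three-hour gap threshold, and the function names `cluster_by_time` and `label_event` are all assumptions made for this example.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import FrozenSet, List, Optional


@dataclass
class Photo:
    # Hypothetical record; real metadata would come from EXIF or the OS photo library.
    name: str
    taken_at: datetime
    place: Optional[str] = None          # location may be missing
    tags: FrozenSet[str] = frozenset()   # detected people/objects (assumed input)


def cluster_by_time(photos: List[Photo],
                    max_gap: timedelta = timedelta(hours=3)) -> List[List[Photo]]:
    """Group photos into events; a new event starts when the gap
    since the previous photo exceeds max_gap (an assumed threshold)."""
    events: List[List[Photo]] = []
    for photo in sorted(photos, key=lambda p: p.taken_at):
        if events and photo.taken_at - events[-1][-1].taken_at <= max_gap:
            events[-1].append(photo)
        else:
            events.append([photo])
    return events


def label_event(event: List[Photo]) -> str:
    """Build a rough label from the most common tag, the first known place, and the date."""
    parts = []
    tag_counts = Counter(tag for photo in event for tag in photo.tags)
    if tag_counts:
        parts.append(tag_counts.most_common(1)[0][0])
    place = next((p.place for p in event if p.place), None)
    if place:
        parts.append(place)
    parts.append(event[0].taken_at.date().isoformat())
    return " | ".join(parts)
```

A production system would presumably weigh many more signals, but even this toy version shows why timestamps and tags alone are enough to reconstruct a day out or a birthday party.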

What Meta says about privacy and cloud photo processing

Meta says that certain cloud-processed images will not be used to target ads. The company also says it won’t use that media to train its AI models unless you explicitly edit it with Meta’s tools or share the AI-edited output. Accepting the AI terms does, however, enable automated processing of your photos, such as recognizing visual features to describe what’s in them, modifying content, and creating new media.

This parallels Meta’s general AI stance. The company has previously said that public posts and comments on its platforms can be used to train vision and language systems, and certain users in the EU have been given a window of time when they can opt out of such training. In North America, regulators are paying close attention: the U.S. Federal Trade Commission has cautioned companies about secretive AI data use, and Canada’s Office of the Privacy Commissioner remains active in examining how platforms gather and process personal information.

The privacy calculus is nontrivial. A camera roll can disclose relationships, routines, and sensitive settings. The Pew Research Center has said that most Americans are uncomfortable with companies using their data to develop AI. Users weighing the feature should realize that even if content isn’t used for ads, the analysis itself broadens what the platform knows about their lives.

How it compares with Apple Photos and Google Photos

Apple and Google have followed a similar path, though with markedly different defaults. Apple Photos leans heavily on on-device processing for things like Memories and recognizing the people in photos, sends only what’s necessary up to iCloud, and bills its pipeline as privacy-first. Google Photos blends on-device and cloud processing, combining auto-albums and generative tools (Magic Editor) with clearer notices when data leaves the phone.

Facebook’s advantage is distribution. Given an ad reach that DataReportal estimates extends to well over 100 million people in the U.S. alone, small nudges can move huge volumes of content. That reach also gives Meta an iterative feedback loop that works as a kind of training signal: which suggestions users accept or reject teaches the system what feels right. In principle, that could improve the feature without training on raw private media by default.

Why this matters, and what to watch as Meta rolls out

People take more than a trillion photos a year, analysts say, and most never see the light of day. If Facebook’s AI can turn forgotten shots into shareable moments with less effort, expect a noticeable increase in posts and Stories. It could also influence how creators package their everyday content, nudging the prevailing aesthetic toward AI-assisted edits.

Look for three pressure points:

- Transparency (are data flows and retention clearly described)

- Control (are opt-in and opt-out choices honored, and are the controls granular)

- Guardrails around sensitive content (family photos, kids, health contexts)

Independent audits, of the sort contemplated by the National Institute of Standards and Technology’s AI Risk Management Framework, would help validate claims about data use and model behavior.

So far, the feature is opt-in, reversible, and positioned as a convenience. If you try it, leave cloud processing off at first and sample the in-app suggestions; switch on uploads only once you are comfortable. The promise of fewer steps between the camera and a polished post is genuine. But so is the responsibility of handing an AI access to your camera roll.