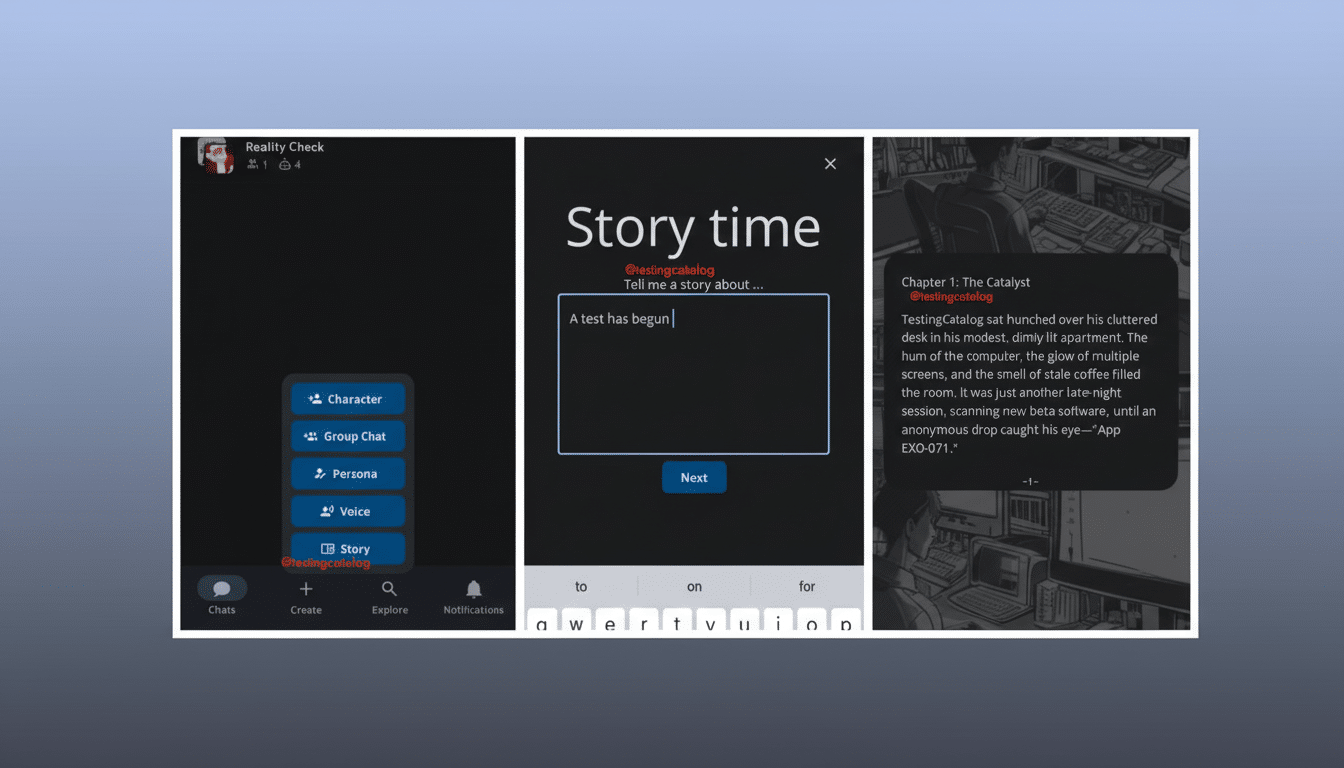

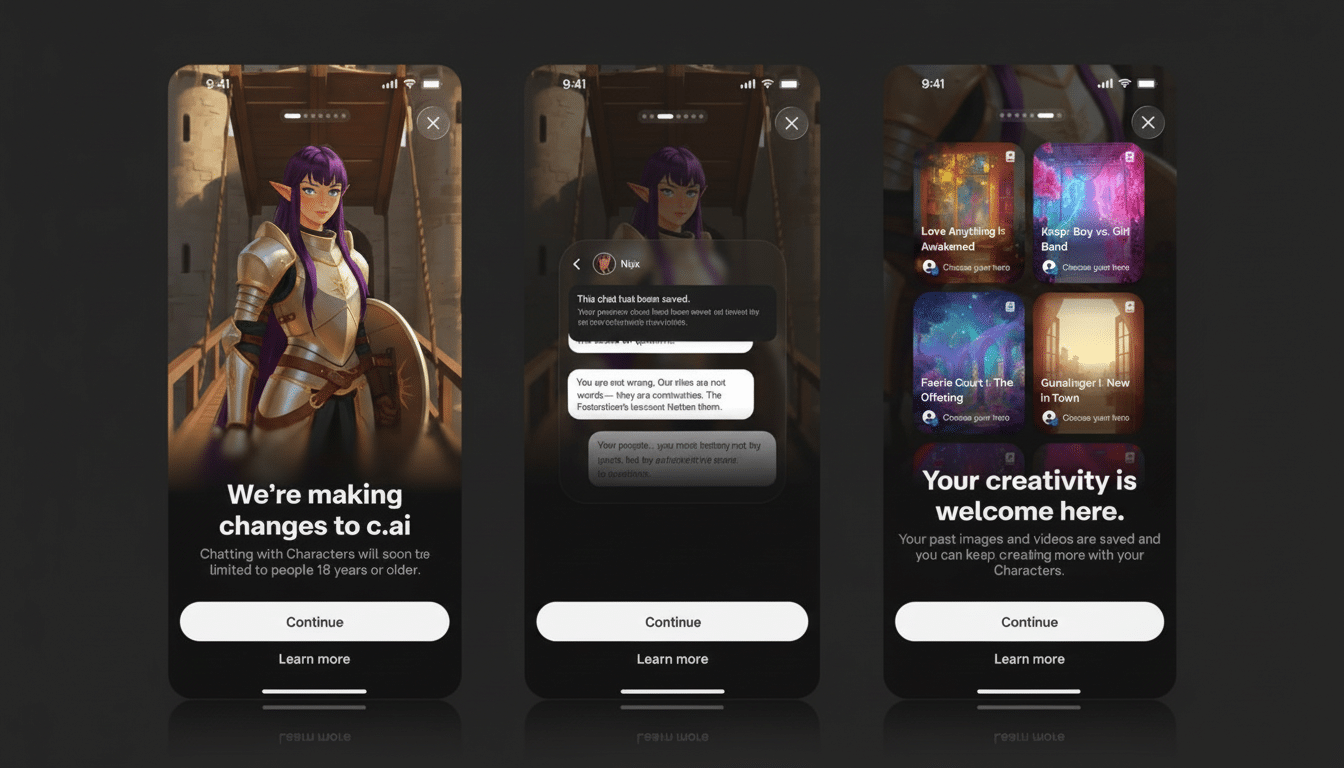

Character.AI is introducing Stories, visual choose-your-own-adventure tales that let users piece together branching narratives featuring their favorite AI characters. The feature arrives as the company reworks how teens use its platform: amid growing safety concerns, it is moving to shut down open-ended chats for users under 18.

Stories is billed as a safer, more structured way for younger people to create with and interact with AI. Instead of freeform chat, players choose two or three characters, pick a genre, write a premise (or use an auto-generator to produce one), and then make decisions as the scenes unfold. The company is positioning it as a multi-path, image-focused mode designed for all users, but especially teens, and built to be replayable and easy to share.

A Safety-First Pivot for Under-18s on Character.AI

The launch marks Character.AI’s highest-profile effort to pull teens back to the site after restricting minors’ access to open-ended chat. The company previously imposed session limits for younger users and is now moving to end open-ended chat for under-18s altogether, following public outrage and a series of lawsuits alleging harm from explicit bot interactions.

According to Character.AI, Stories won’t pull sensitive or unreviewed content from users’ earlier chats, a promise that matters given fears of models surfacing unsafe material. More teen-focused “AI entertainment” formats, such as gaming-style experiences, are in development, signaling a shift away from chat as a utility toward curated, managed content built for younger users.

How Stories Works and Why It May Be Safer

At its core, Stories narrows the generative space. By limiting interactions to predefined scenarios, a fixed set of options, and a set tone, it leaves less room for the AI to go off the rails into sexual content or self-harm, two of the toughest areas safety teams have had to handle. In a typical flow, a player might pair a detective with a fantasy-themed character in a mystery setting, then pick options like “Examine the library” or “Follow the footprints” rather than improvising open-ended dialogue.
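Character.AI has not published how Stories is built, so what follows is only a minimal Python sketch, under assumed names, of why a fixed menu of choices narrows what can happen. `Scene`, `STORY`, `advance`, and `reachable_endings` are hypothetical, and the story graph uses pre-written scenes for simplicity; a generative system would instead produce scene text inside branches like these. The key property is that a player can only pick one of the offered options, and every ending can be enumerated and reviewed ahead of time.

```python
from dataclasses import dataclass, field


@dataclass
class Scene:
    """One node in a hypothetical branching story graph."""
    text: str
    # Maps each offered option label to the id of the next scene.
    # An empty dict marks an ending.
    options: dict[str, str] = field(default_factory=dict)


# A toy, pre-written story graph (illustrative content, not Character.AI data).
STORY = {
    "start": Scene("A storm traps the detective and the wizard in a manor.",
                   {"Examine the library": "library",
                    "Follow the footprints": "garden"}),
    "library": Scene("A hidden ledger names the culprit.",
                     {"Confront the butler": "end_confession",
                      "Keep reading": "end_secret"}),
    "garden": Scene("The footprints lead to a locked greenhouse.",
                    {"Pick the lock": "end_secret"}),
    "end_confession": Scene("The butler confesses. Case closed."),
    "end_secret": Scene("The manor's secret is revealed. Case closed."),
}


def advance(scene_id: str, choice: str) -> str:
    """Move to the next scene, accepting only one of the offered options.

    Free-form input is rejected rather than passed along, which is the
    property that narrows the space of possible outcomes.
    """
    scene = STORY[scene_id]
    if choice not in scene.options:
        raise ValueError(f"{choice!r} is not an offered option")
    return scene.options[choice]


def reachable_endings(start: str = "start") -> set[str]:
    """Collect every ending reachable from `start` via depth-first search."""
    endings: set[str] = set()
    stack, seen = [start], set()
    while stack:
        scene_id = stack.pop()
        if scene_id in seen:  # guard against loops in the graph
            continue
        seen.add(scene_id)
        options = STORY[scene_id].options
        if not options:
            endings.add(scene_id)
        stack.extend(options.values())
    return endings
```

For example, `advance("start", "Examine the library")` moves the player to `"library"`, while any text outside the menu raises `ValueError`. Because the graph is finite, `reachable_endings()` lists every outcome (here `end_confession` and `end_secret`), which is what makes replayable stories with many reviewed endings practical.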

Modes like this typically rely on templated prompts, curated content libraries, tighter decoding settings, and layered classifiers that screen not only text but also noncompliant images. The visuals can either be pre-approved or generated within relatively narrow guardrails, with real-time filters to catch prohibited elements. Character.AI has not released a full technical specification, but a structured, multi-path design would likely allow a tighter moderation loop than freeform chat does.
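To make “layered classifiers” concrete, here is a minimal Python sketch of a moderation pipeline in which generated content is published only if every layer approves it. This is not Character.AI’s system: `Candidate`, `text_layer`, `image_layer`, `moderate`, and both blocklists are hypothetical, and the keyword and label checks are crude stand-ins for trained text and image classifiers. The image layer assumes an upstream tagger has already attached labels to the generated picture.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Candidate:
    """A hypothetical generated scene awaiting moderation."""
    text: str
    image_labels: list[str]  # assumed output of an upstream image tagger


# Placeholder blocklists standing in for real classifiers.
BLOCKED_TERMS = {"example_banned_word", "another_banned_word"}
BLOCKED_IMAGE_LABELS = {"gore", "weapon"}

# A layer returns None if the candidate passes, or a reason string if not.
Layer = Callable[[Candidate], Optional[str]]


def text_layer(c: Candidate) -> Optional[str]:
    words = set(re.findall(r"[a-z_]+", c.text.lower()))
    hits = words & BLOCKED_TERMS
    return f"text flagged: {sorted(hits)}" if hits else None


def image_layer(c: Candidate) -> Optional[str]:
    hits = set(c.image_labels) & BLOCKED_IMAGE_LABELS
    return f"image flagged: {sorted(hits)}" if hits else None


LAYERS: list[Layer] = [text_layer, image_layer]


def moderate(candidate: Candidate, layers: list[Layer]) -> tuple[bool, list[str]]:
    """Run every layer; approve only if none of them objects."""
    reasons: list[str] = []
    for layer in layers:
        try:
            reason = layer(candidate)
        except Exception as exc:  # fail closed: a broken filter blocks content
            reason = f"{layer.__name__} error: {exc}"
        if reason is not None:
            reasons.append(reason)
    return (not reasons, reasons)
```

Running every layer rather than stopping at the first failure collects all the reasons for human reviewers, and treating a crashing layer as a rejection means an outage in one filter cannot silently let content through.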

The replayable quality of branching stories is also an asset: one story can have many “safe” endings, increasing engagement without raising the stakes. That model is in line with what other platforms popular with young people have found. YouTube Kids relies on whitelisting and heavy curation; Roblox segments age-appropriate experiences, leaning more on filtering than on curation. Both show how constraining and segmenting what kids see can materially limit their exposure to harmful content.

Regulatory and Market Backdrop for Youth AI Safety

Regulators have increasingly homed in on youth safety across AI and social products. The U.S. Federal Trade Commission has prioritized enforcement around deceptive design and children’s data. In the U.K., the ICO’s Age Appropriate Design Code continues to shape the default settings of products used by children. The EU’s Digital Services Act increases pressure on platforms to assess and mitigate systemic risks, including those to young users.

Beyond formal regulation, the public health debate looms large. The U.S. Surgeon General has warned that digital services can pose risks to adolescents’ mental health. Nearly half of U.S. teens report being online “almost constantly,” according to Pew Research Center, which raises the stakes for any platform trying to attract younger users. Against that backdrop, the pivot to bounded, shareable stories is as much about risk management as it is about product-market fit.

Character.AI is not the only company exploring teen-friendly formats, but it has faced extra scrutiny after allegations that some chat interactions went seriously wrong. In this sense, Stories is both a creative sandbox and a public commitment to safer defaults.

What to Watch as Stories Rolls Out to Teens

Execution will matter. Key signals include the rate of attempted policy violations per 10,000 sessions, how quickly human reviewers resolve edge cases, and whether the company voluntarily opens its safety systems to third-party audits. Transparent incident reporting and parental controls would further build trust.

Adoption metrics will also show whether a curated model can serve as a real alternative to open-ended chat for teens: repeat visits, completion rates for multi-path narratives, and the variety of user-created premises will indicate whether the format preserves creativity without compromising safety.

If Stories makes good on its promise, it could become a model for teen-facing AI entertainment: deliberately limited, visual, and social by design. The trick is keeping it genuinely fun while holding firm on guardrails, a balancing act that will decide whether this pivot is a stopgap or a durable new direction for Character.AI.