In recent comments, two of the most prominent figures in AI said that the limiting factor for the next wave is no longer chips but power, and that they cannot yet foresee how much electricity it will consume.

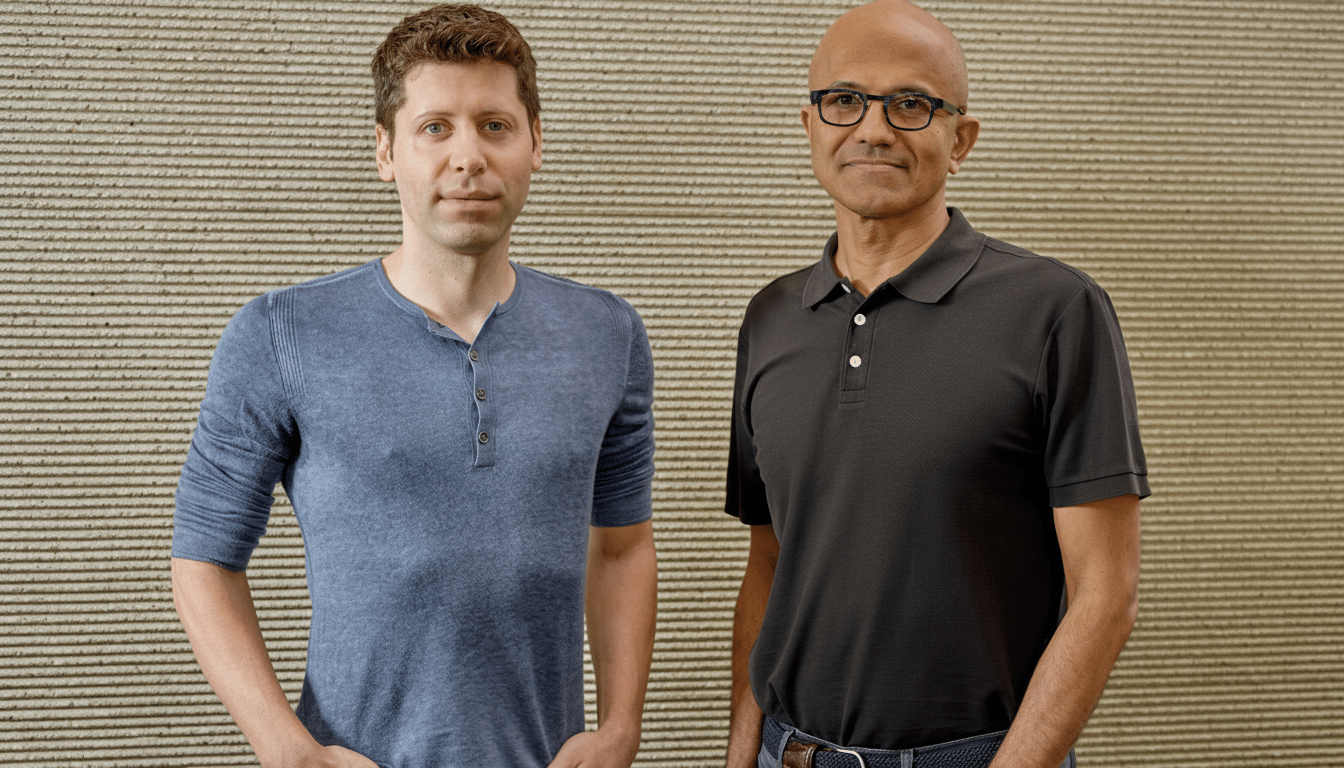

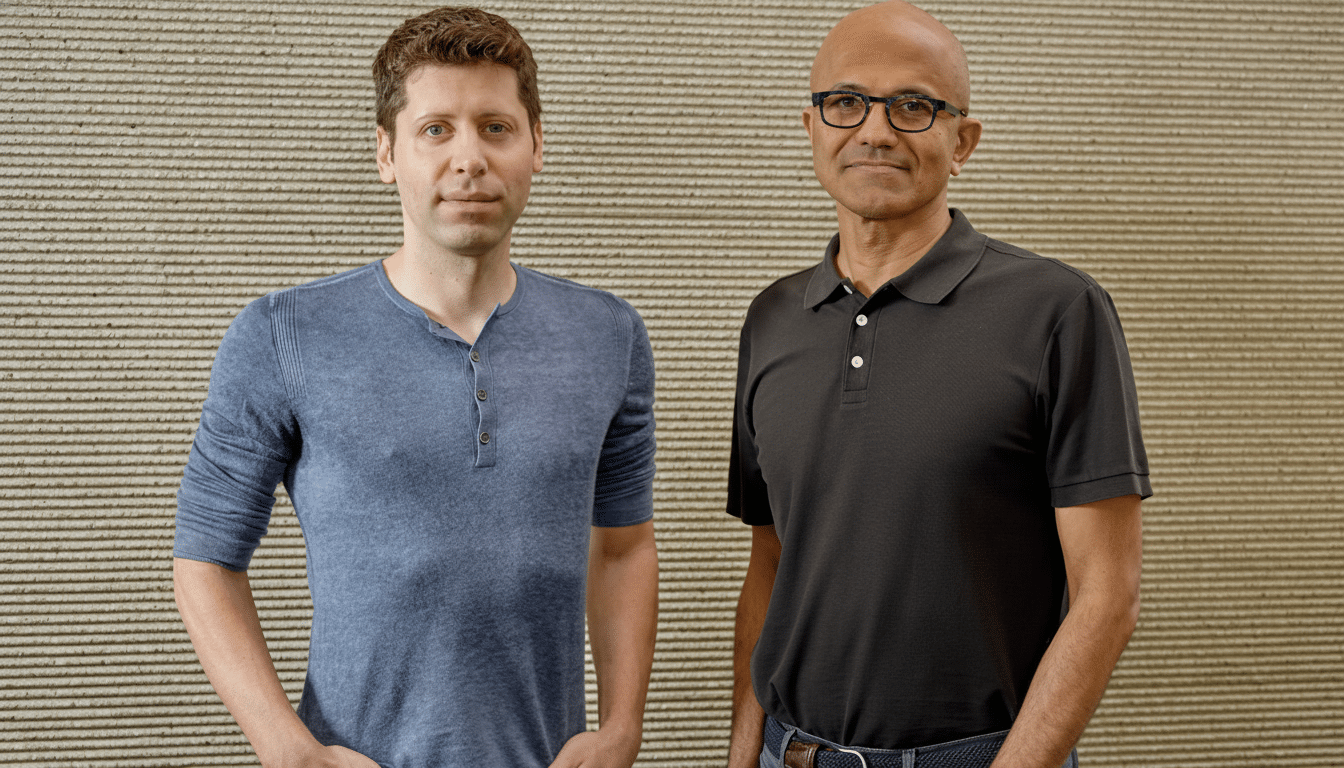

In the past couple of weeks, OpenAI’s Sam Altman and Microsoft’s Satya Nadella have both described a growing gap between the supply of accelerators and the capacity to power and house them. That disconnect marks a significant shift for an industry moving from a compute race to an energy race. Hyperscalers bought cutting-edge GPUs over the last two years, only to find themselves waiting on power distribution equipment, substations, and data center “warm shells,” i.e., buildings not yet connected to power. Nadella said the barrier is not silicon but the practical problem of powering these installations fast enough, an acknowledgement that some accelerators might sit idle while developers wait for the shell to be connected to the grid.

The scale explains why. A single high-end AI cluster with tens of thousands of accelerators might draw 50-100 megawatts, while a multi-building site might require more than 300 MW. Even with a PUE (power usage effectiveness) of about 1.1-1.2, which assumes best practices, the total facility load runs 10-20 percent above the IT load. High-voltage transformers have lead times of two or more years, while utility studies take 2-3 years.
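The PUE arithmetic above can be sketched in a few lines. This is a minimal illustration, not a sizing tool: it assumes the megawatt figures quoted in the text are IT load (the article does not say whether they are IT or total facility load), and the function names `facility_load_mw` and `overhead_percent` are hypothetical, introduced only for this example.

```python
def facility_load_mw(it_load_mw: float, pue: float) -> float:
    """Total facility power, using the definition PUE = total power / IT power."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_mw * pue


def overhead_percent(pue: float) -> float:
    """Non-IT overhead (cooling, power conversion, etc.) as a percent of IT load."""
    return (pue - 1.0) * 100.0


if __name__ == "__main__":
    # Assumption: the article's 50, 100, and 300 MW figures are treated as IT load.
    for it_mw in (50.0, 100.0, 300.0):
        for pue in (1.1, 1.2):
            total = facility_load_mw(it_mw, pue)
            print(
                f"IT load {it_mw:.0f} MW at PUE {pue}: "
                f"{total:.0f} MW total ({overhead_percent(pue):.0f}% overhead)"
            )
```

Under that assumption, a 300 MW IT load at a PUE of 1.2 implies roughly 360 MW at the meter, which is the figure a utility study or transformer order would have to cover.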

Forecasting AI power needs has become guesswork. Model training cycles are exploding, inference volumes are compounding, and efficiency improvements cut both ways. Altman has argued that when the cost per “unit of intelligence” falls, usage grows faster than the savings, a classic Jevons paradox dynamic. If that holds, any near-term efficiency gain could be swamped by new applications, from multimodal assistants to always-on copilots and real-time agents. Public projections underscore the uncertainty. The International Energy Agency estimated that global data centers used roughly 460 terawatt-hours in 2022 and could reach 620–1,050 TWh by 2026, with AI a major driver. In the U.S., interconnection queues tracked by national labs show thousands of gigawatts of generation and storage waiting to connect, evidence that supply is trying to respond, but slowly.

To close the gap, developers are increasingly going “behind the meter” with onsite generation and dedicated lines. This model offers speed and control but introduces fuel price risk and locks companies into long-lived assets just as energy technologies are evolving quickly. That’s what worries Altman: if a dramatically cheaper power source arrives at scale, companies holding legacy power purchase agreements or onsite gas assets could be stuck paying above-market prices for years. Conversely, under-procurement risks rationing compute precisely when product demand spikes.

Altman’s portfolio reflects this view. He has bet across the spectrum, backing fission startup Oklo, fusion developer Helion, and solar-thermal newcomer Exowatt. Microsoft, meanwhile, has signed an offtake agreement with Helion for future fusion power and has accelerated conventional deals for wind, solar, and batteries. The hard truth today is that nuclear projects take years to permit, fusion is unproven at grid scale, and new gas turbines and switchgear are supply-constrained.

That leaves renewables as the fastest-moving, nearest-term option for data centers. Solar and wind can go up in months, not years, and their modularity mirrors how data centers scale. The big problem is that they are variable, so they require hourly matching and storage to run training clusters or low-latency inference reliably. Tech firms are shifting from annual megawatt-hour offsets to 24/7 carbon-free procurement, pioneered by Google and now catching on among its peers.

Power is site-specific, so regions with spare capacity and friendly interconnection policies (Northern Virginia, parts of the Nordics, and Quebec) are in demand. But communities are pushing back on land use, water consumption, and grid stress. Regulators are reacting with process reform: FERC’s proposed interconnection rule changes aim to shorten queue backlogs, and change is coming to the power markets, but it remains a multi-year process.

In the meantime, cooling advances like direct-liquid and immersion cooling can slash energy overhead and footprint, with implications for space and labor. But the physics are unforgiving: training frontier models still concentrates tens of MW in dense spaces, and all of it must be pumped, chilled, and monitored precisely.
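The difference between annual megawatt-hour offsets and hourly (24/7) matching, mentioned above, is easy to see with a toy calculation. The sketch below is illustrative only: the load and solar profiles are made-up numbers, not data from any company, and the function names `volume_match` and `hourly_match` are hypothetical labels for the two accounting approaches.

```python
def volume_match(load_mwh: list[float], clean_mwh: list[float]) -> float:
    """Offset-style accounting: total clean energy vs. total load, capped at 100%."""
    return min(1.0, sum(clean_mwh) / sum(load_mwh))


def hourly_match(load_mwh: list[float], clean_mwh: list[float]) -> float:
    """Hourly accounting: clean energy only counts in the hour it is produced."""
    matched = sum(min(load, clean) for load, clean in zip(load_mwh, clean_mwh))
    return matched / sum(load_mwh)


if __name__ == "__main__":
    # Hypothetical day: a flat 100 MW data center load for 24 hours.
    load = [100.0] * 24
    # Hypothetical solar contract: 400 MW for six midday hours, nothing otherwise.
    solar = [400.0 if 9 <= hour < 15 else 0.0 for hour in range(24)]

    print(f"Volume-based match: {volume_match(load, solar):.0%}")
    print(f"Hourly match:       {hourly_match(load, solar):.0%}")
```

In this made-up case the solar contract produces as many megawatt-hours as the site consumes, so volume-based accounting shows full coverage, yet only the six midday hours are actually served by clean power. Closing that gap is what storage and a more diverse supply mix are for.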

The playbook for an unknowable AI power load

With no consensus forecast, the emerging strategy blends hedging and flexibility. Expect staggered campus buildouts to avoid stranded capacity, diversified energy portfolios mixing PPAs, storage, and onsite generation, and contracts with optionality if cheaper power materializes. On the R&D side, expect relentless work on algorithmic efficiency — sparsity, quantization, and better compilers — to stretch each watt, even if total demand keeps rising. For now, the message from Altman and Nadella is plain: the AI roadmap runs through electrons. The winners will be the ones who can turn power into intelligence at scale — and do it faster than the grid can say yes.