Meta’s longtime chief AI scientist is reportedly getting restless, with the Financial Times reporting that Yann LeCun is preparing to leave and found his own startup, a move that would send shockwaves through global AI research. The Turing Award-winning NYU professor is seeking investment for a new company focused on “world models,” an area of research he has long argued provides the foundation for machines with strong common sense and planning ability.

Why This Exit Matters for Meta’s AI Strategy

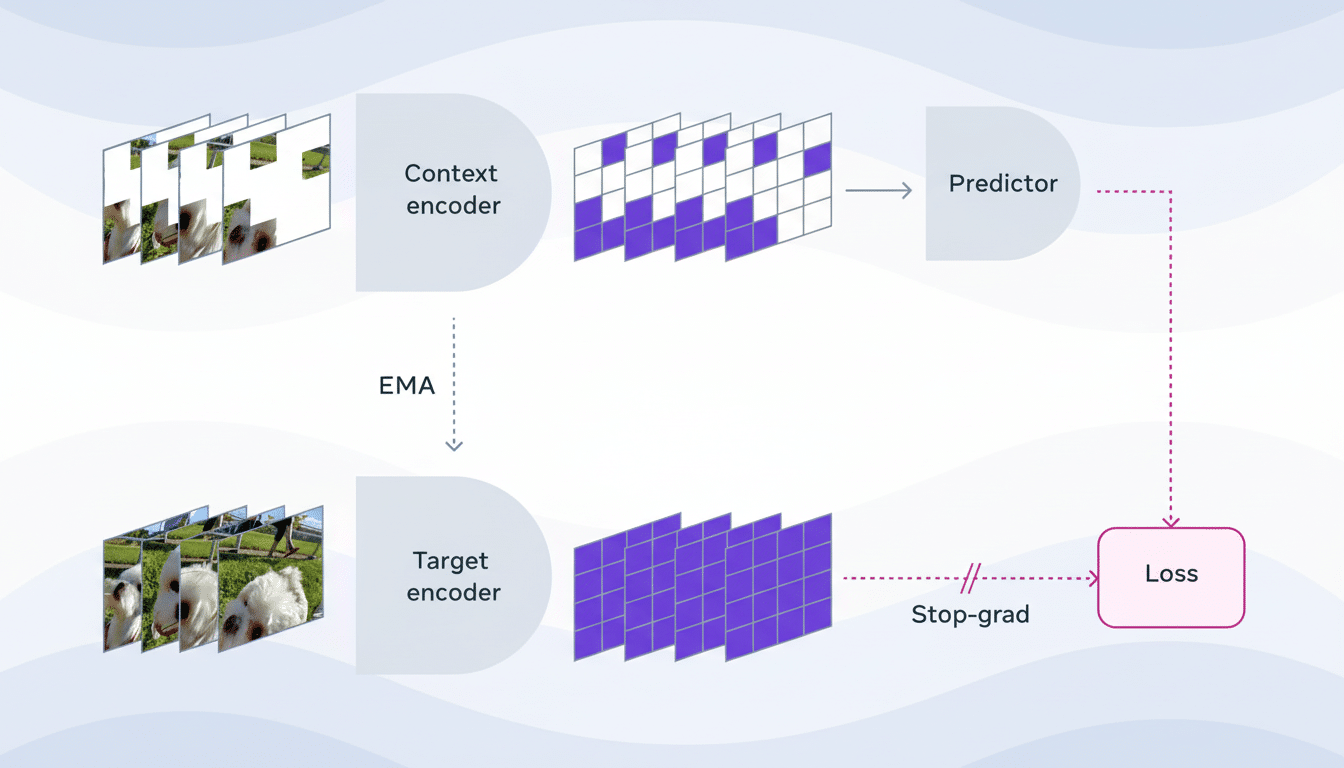

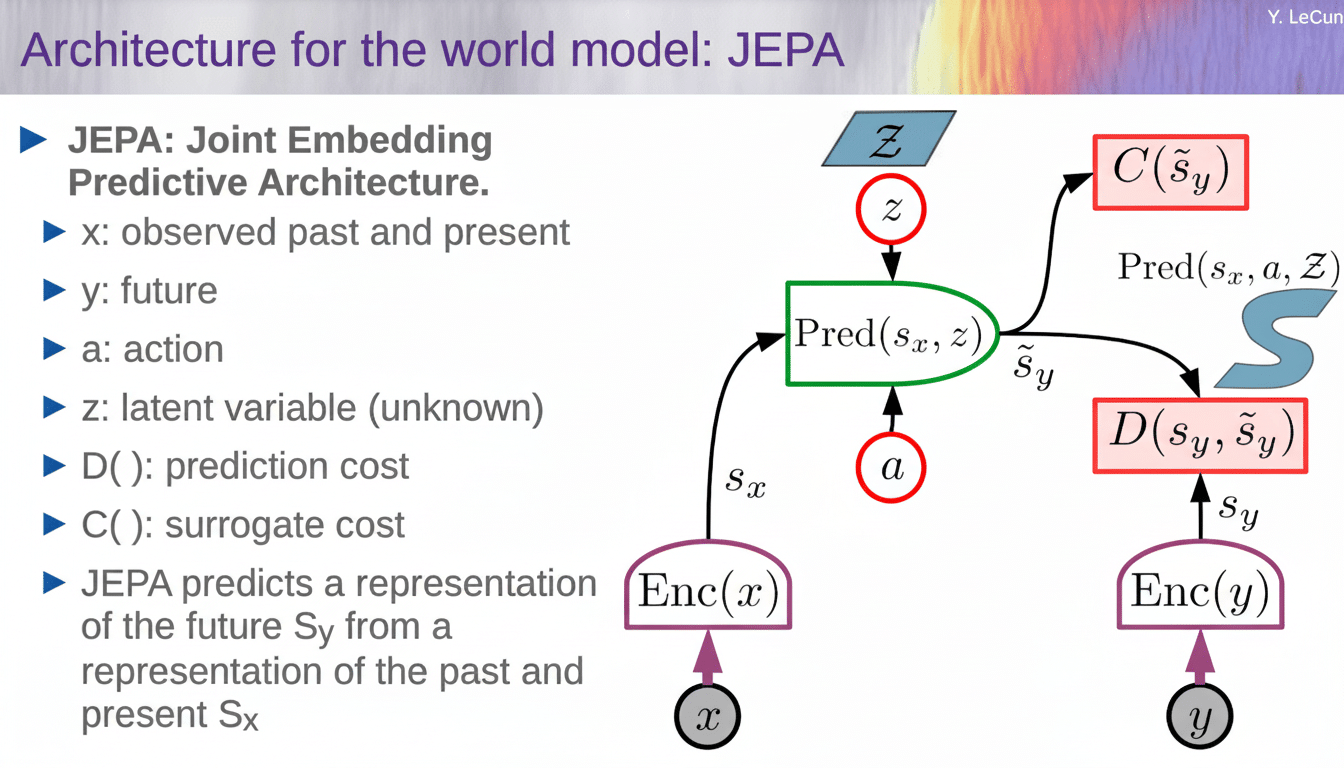

LeCun has served as the scientific conscience of Meta’s Fundamental AI Research (FAIR) lab, which under his guidance pursued long-horizon work such as self-supervised learning and the Joint Embedding Predictive Architecture. His reported exit would add to the upheaval in Meta’s AI organization as the company reshapes its strategy amid fierce competition from OpenAI, Google and Anthropic. Recent reports describe a new unit, Meta Superintelligence Labs (MSL), reportedly built by hiring dozens of researchers away from rivals and focused on faster product impact.

Sources also say Meta has taken aggressive steps to shore up its training pipeline, including a big bet on data-labeling partner Scale AI and hiring that firm’s CEO, Alexandr Wang, to help lead the new division. The push comes as Meta’s latest Llama models have fallen behind top-tier rivals on some benchmarks, adding urgency to close performance gaps while preserving the company’s open-weight approach.

LeCun has often helped balance that product urgency against the demands of fundamental science. If he leaves, Meta risks losing a powerful champion of long-term research in a culture that seems increasingly oriented toward shipping competitive systems on short cycles.

The Bet on World Models in Building Reasoning AI

World models are intended to give AI an internal model of how the world operates, allowing systems to reason about cause and effect, simulate outcomes and plan actions, rather than just predict the next word in your email. In practice, this involves learning from rich multimodal streams such as video, interaction logs and sensor data, then using the learned dynamics to decide what to do next. The idea is central to robotics, autonomous navigation and agents operating in dynamic environments.

LeCun has said for years that large language models are powerful pattern matchers but lack grounded understanding. He has joked that before society frets about controlling superhuman AI, we need at least the design of a system that is reliably smarter than a house cat. Labs like Google DeepMind and a handful of startups building autonomous systems have released work on world models, but a startup whose primary mission is to deliver these capabilities would intensify the race to make them practical at scale.

If LeCun’s new venture doubles down on self-supervised and energy-based methods, expect a focus on data efficiency and generalization across modalities, priorities that could reduce reliance on ever-larger token corpora in favor of learning predictive structure directly from raw experience.

What a LeCun Venture Could Go After First

Likely early targets include agentic systems for code, research and enterprise automation, along with model-based robotics stacks and simulation-guided training platforms that lower per-unit deployment costs.

A startup might also adopt a hybrid licensing model, releasing open weights to build research traction and ecosystem pull while keeping some components proprietary for competitive advantage, an approach that has been gaining popularity among frontier companies.

Capital will be crucial. Developing world models trained on high-fidelity video and interaction data is compute-intensive, with top-tier training runs at scale costing tens of millions of dollars. That pressure pushes newcomers to secure cloud credits, chip partnerships or strategic customers from the outset. Given LeCun’s track record, investor interest is likely to be high even in a more disciplined funding environment.

Implications for Meta and the Talent Market

A LeCun departure could ripple through Meta’s research ranks, where he has attracted mission-driven researchers and acted as a public champion of open research. FAIR could face fresh retention challenges at a time when MSL is consolidating product-facing efforts. On the other hand, Meta’s hiring spree in recent years and massive infrastructure investment could soften the blow if leadership fills the scientific void quickly and keeps shipping more capable models.

The wider AI job market may also respond. High-profile founders tend to attract alumni from their former labs, seeding new clusters of expertise. If LeCun’s startup embraces open science, it could strengthen the open-weight ecosystem and push incumbents to compete not just on raw performance but on reproducibility and research transparency.

Meta offered no comment on the reported plans. For now, the stakes are clear: if LeCun moves ahead, one of AI’s most influential thinkers would shift his base from a Big Tech research lab to a venture built to show that world models can drive the next leap in machine intelligence.