From campuses to classrooms, students are outsourcing first drafts, code and even study schedules to A.I. tools. What’s emerging is a disturbing pattern: faster completions but shallower understanding. Educators concerned about declining core competencies are responding with new rules, revised assessments and a renewed focus on basic skills.

Teachers describe eerily similar essays and uniform code styles that appear when generative systems do the heavy lifting. The work often looks pristine but empty, the product of students with little understanding of logic, evidence or trade-offs. It’s the academic equivalent of fast food: convenient, consistent and nutritionally light.

- A Shortcut With Long-Term Costs for Student Learning

- Pushback from Classrooms and Campuses Intensifies

- What Responsible Use Looks Like in Classrooms and Coursework

- Skills Employers Continue to Look For in New Graduates

- The Privacy and Equity Issues Raised by Classroom A.I.

- A Practical Path Forward for Teaching with Generative A.I.

A Shortcut With Long-Term Costs for Student Learning

Computer science professors say many introductory students can produce code that compiles and runs correctly yet cannot explain their algorithmic approach or trace its execution.

As one educator described it, students “can drive the autopilot but not land the plane.” The result is brittle competence: speed on familiar tasks, confusion in new ones.

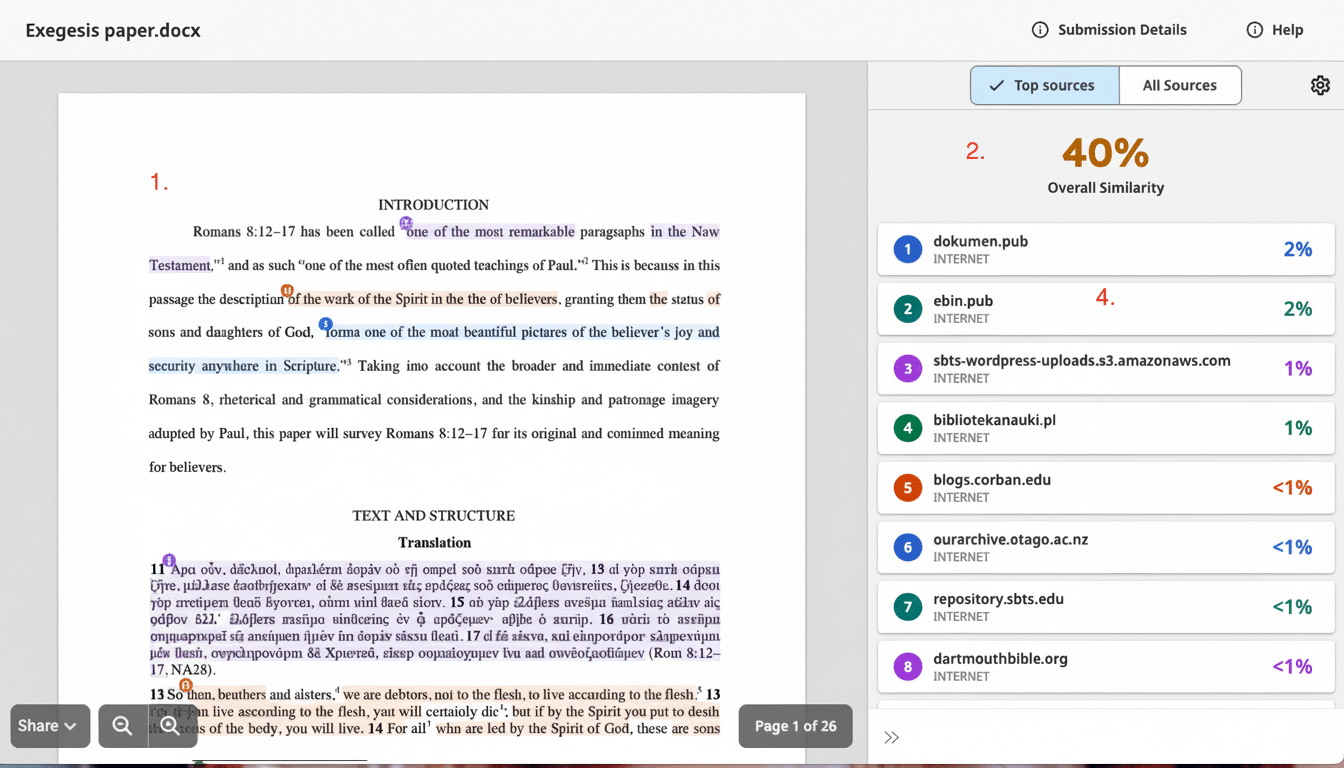

Survey data back the trend. Polls by the Pew Research Center, Intelligent.com and Common Sense Media show that many students have tried generative A.I., often for homework or writing, including to finish parts of assignments. Turnitin regularly reports millions of papers flagged for signs of A.I. writing, a measure of how far-reaching the change has become.

The peril, experts say, is not that A.I. will rise up against us but that people will trust it so completely that their critical thinking skills erode. According to OECD research, foundational skills such as reading comprehension, numeracy and algorithmic thinking correlate strongly with job mobility and earning power. Skipping the hard practice now can leave students stalled later.

Pushback from Classrooms and Campuses Intensifies

A growing contingent of faculty members and education researchers is urging colleges not to adopt A.I. uncritically. An open letter from technology professors calls on universities to resist the hype, protect academic rigor and put critical thinking first. The message is getting through: Departments are rebalancing assessments to emphasize in-class writing, whiteboard problem-solving, oral defenses and “unplugged” labs.

Some teachers, for example, now ask students to complete coding checkpoints without assistance and to talk through trade-offs and complexity out loud. Others separate drafting from polishing: students produce their own outlines and solution sketches first, may then turn to A.I. for refinement, and document the steps in between. The goal is not prohibition; it’s preserving the learning curve.

What Responsible Use Looks Like in Classrooms and Coursework

Education privacy experts stress utility over novelty. The Public Interest Privacy Center and school leaders recommend vetting tools for clear learning value, sound data practices and alignment with standards. The most powerful use cases are mundane: mapping curricular standards to teachers’ existing resources, generating practice items that target known misconceptions, or drafting differentiated prompts that cut prep time without altering instruction.

A useful guardrail model is coming into focus: A.I. as coach, not ghostwriter. That means using A.I. for hints, exemplars and feedback on clarity, but never to originate a thesis or an algorithm. Transparent “process artifacts” help here: outlines, pseudocode, references and change logs make students’ thinking visible and reduce the temptation to plagiarize.

Skills Employers Continue to Look For in New Graduates

“Interviews and being on the job are still going to test fundamentals; hiring managers won’t let that go.” For engineers, that means coding exercises, choosing data structures and designing systems, all tasks where answers assembled by pattern-matching fall short. In non-technical fields, employers want to see sound argumentation, synthesis across sources and a willingness to challenge assumptions.

Reports from the World Economic Forum and the National Academies highlight critical thinking, problem-solving and communication as priority skills. A.I. can speed up tasks, but it is no substitute for the judgment and transferable skills that determine whether someone can learn on the fly, lead a team to strong results and make sound trade-offs under uncertainty.

The Privacy and Equity Issues Raised by Classroom A.I.

Beyond pedagogy, schools need to weigh data risks. Student inputs can contain sensitive information and may be used to train models, raising concerns under FERPA and other privacy regulations. Consumer Reports and academic labs have also identified biases and hallucinations that can mislead novice learners.

Equity complicates the picture. Wealthy schools may offer vetted tools and teacher training; elsewhere, free systems with black-box data practices may be the only option. UNESCO guidance calls for human oversight, transparency and accessibility so that A.I. narrows achievement gaps rather than reinforcing them.

A Practical Path Forward for Teaching with Generative A.I.

Among educators, a few principles are starting to emerge:

- Establish unambiguous A.I. use policies by assignment.

- Require students to disclose when and how they used tools.

- Assess the process as rigorously as the product.

- Build in frequent, low-stakes checks for understanding.

Courses can combine “from-scratch” work with A.I.-augmented iterations to illustrate both the gains and the trade-offs. In writing, that means your own argument and your own evidence, assembled before any A.I. polish is applied. In computing, it means writing and testing your own code first, then benchmarking it against assistant-generated code and explaining any discrepancies.

The objective isn’t to turn back the clock. It’s to ensure that students still learn to read deeply, think structurally and build things they can defend. A.I. can be a mighty accelerant, but only when core skills supply the spark.