Spotify has inked new deals with major record labels to co-develop AI features for music that put consent and compensation at the fore. The company said it is partnering with Universal Music Group, Sony Music Entertainment, Warner Music Group and the indie coalition Merlin on what it’s calling “artist-first” AI products, as well as on a dedicated research and product initiative around the responsible use of generative technologies.

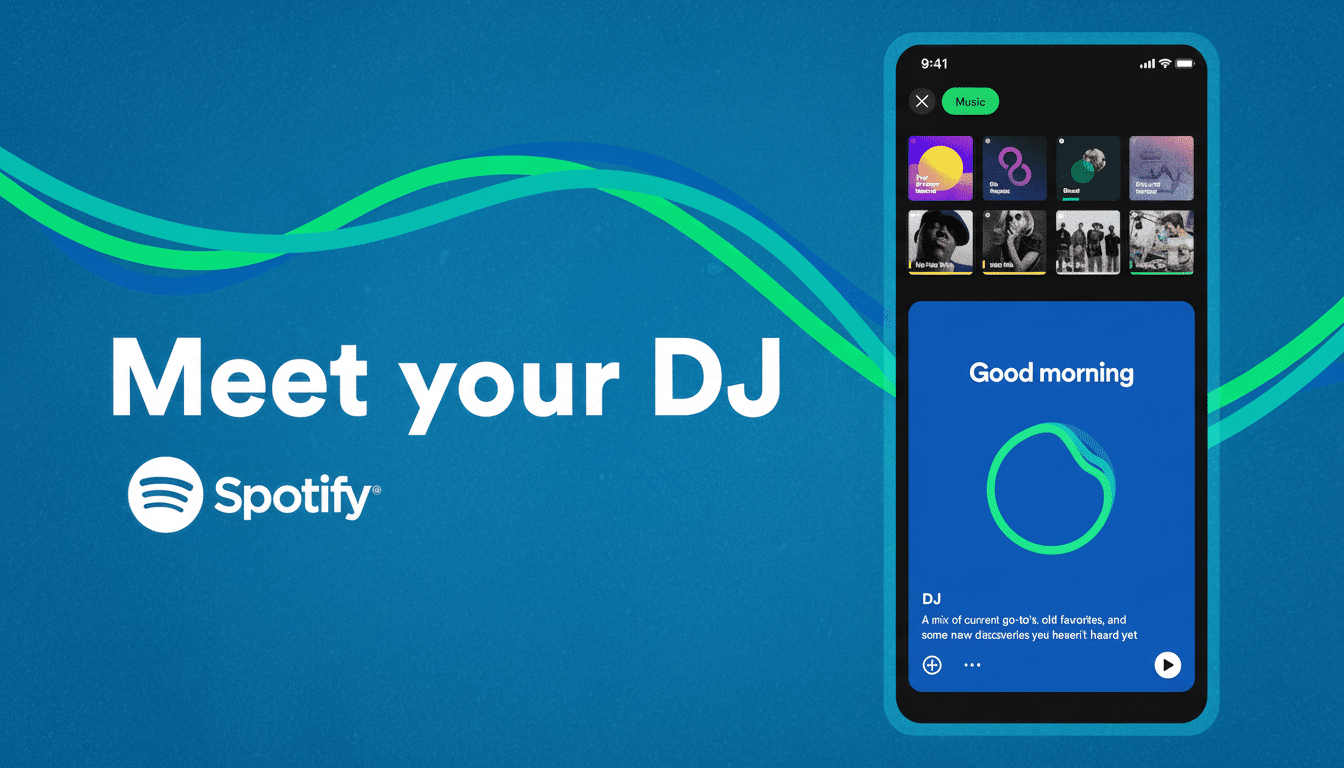

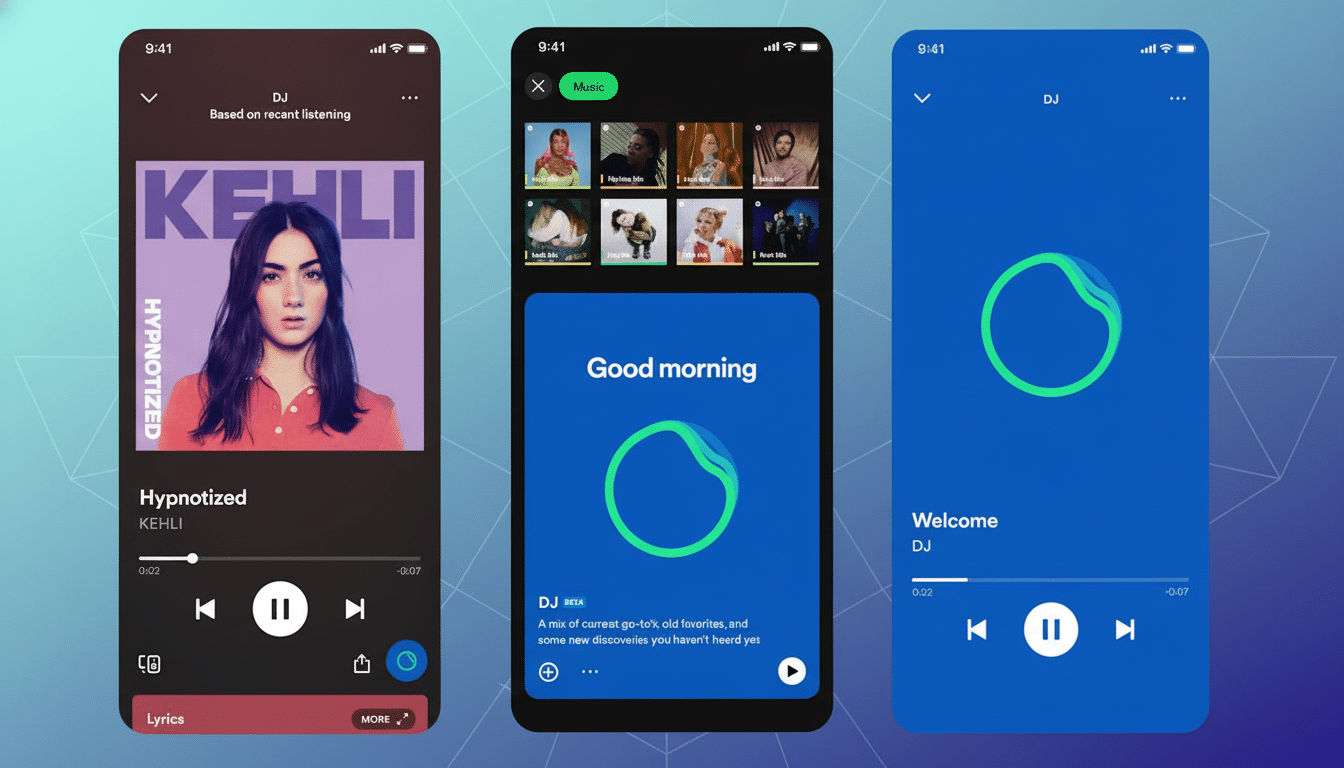

The move suggests a shift away from entertaining AI experiments, including the platform’s AI DJ and its prompt-powered playlist tools, toward rights-aware systems designed with input from artists, songwriters and labels. These products, Spotify says, will respect copyright and give creators a say in how, or whether, their work is used, with clear labeling and mechanisms to get paid.

What Artist-First AI Might Look Like

Expect the first set of products to center on consent gates, attribution and revenue sharing. That might involve artist-friendly tools to grant permission for vocal likeness, license remixes built from pre-cleared stems or create add-on assets (backing vocals, instrument layers, versions in other languages) without ceding control over the data that streaming services use.
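As a rough illustration of what a consent gate could mean in practice, here is a minimal Python sketch. Nothing in it reflects Spotify’s actual design: the `Use` categories, the `ConsentRecord` type and the `is_permitted` check are all hypothetical names chosen for the example. The one idea it shows is opt-in by default, where a request goes through only if the artist has explicitly granted every use it involves.

```python
from dataclasses import dataclass, field
from enum import Enum


class Use(Enum):
    """Hypothetical categories of AI use an artist could opt into."""
    VOCAL_LIKENESS = "vocal_likeness"
    STEM_REMIX = "stem_remix"
    TRANSLATION = "translation"
    MODEL_TRAINING = "model_training"


@dataclass
class ConsentRecord:
    """Opt-in permissions for one artist; everything is denied by default."""
    artist_id: str
    allowed: set[Use] = field(default_factory=set)

    def grant(self, use: Use) -> None:
        self.allowed.add(use)

    def revoke(self, use: Use) -> None:
        self.allowed.discard(use)


def is_permitted(record: ConsentRecord, requested: set[Use]) -> bool:
    """Pass only a non-empty request whose every use was explicitly granted."""
    return bool(requested) and requested <= record.allowed


# Placeholder artist who allows remixes and translations, nothing else.
consent = ConsentRecord(artist_id="artist-123")
consent.grant(Use.STEM_REMIX)
consent.grant(Use.TRANSLATION)

print(is_permitted(consent, {Use.STEM_REMIX}))                      # True
print(is_permitted(consent, {Use.STEM_REMIX, Use.VOCAL_LIKENESS}))  # False
```

Because permissions are stored per use rather than as a single yes or no, an artist could allow a translated version while still blocking voice cloning, and could revoke either later.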

On the back end, those products are likely to rely on industry standards so that rights remain traceable. Spotify has already worked with DDEX on how AI involvement in music creation should be labeled in metadata, and any system designed to scale will have to link AI-generated or AI-assisted tracks to identifiers such as ISRCs for recordings, ISWCs for compositions and creator IDs like the International Standard Name Identifier (ISNI), so that royalties can flow appropriately.
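To make the identifier chain concrete, the sketch below ties one recording to its compositions and creators and checks that each code has the right shape. The `TrackRights` record and its `ai_involvement` field are hypothetical and are not taken from any DDEX schema, and the sample values are placeholders rather than real registrations. The patterns check format only (a 12-character ISRC, an ISWC of "T" plus ten digits, a 16-character ISNI whose last character may be "X"); they do not verify check digits or look anything up in a registry.

```python
import re
from dataclasses import dataclass

# Shape checks only: no check-digit validation and no registry lookup.
ISRC_RE = re.compile(r"[A-Z]{2}[A-Z0-9]{3}\d{7}")  # country, registrant, year + designation
ISWC_RE = re.compile(r"T\d{10}")                   # "T", nine digits, one check digit
ISNI_RE = re.compile(r"\d{15}[\dX]")               # sixteen characters, last may be "X"


def normalize(code: str) -> str:
    """Drop the hyphens, dots and spaces used in display forms."""
    return re.sub(r"[-.\s]", "", code).upper()


@dataclass(frozen=True)
class TrackRights:
    isrc: str                       # the recording
    iswcs: tuple[str, ...]          # the underlying composition(s)
    creator_isnis: tuple[str, ...]  # the people to credit and pay
    ai_involvement: str             # hypothetical label: "none", "assisted", "generated"


def format_problems(track: TrackRights) -> list[str]:
    """Return a description of every identifier that has the wrong shape."""
    problems = []
    if not ISRC_RE.fullmatch(normalize(track.isrc)):
        problems.append(f"bad ISRC: {track.isrc}")
    for iswc in track.iswcs:
        if not ISWC_RE.fullmatch(normalize(iswc)):
            problems.append(f"bad ISWC: {iswc}")
    for isni in track.creator_isnis:
        if not ISNI_RE.fullmatch(normalize(isni)):
            problems.append(f"bad ISNI: {isni}")
    return problems


# Placeholder values, not real registrations.
track = TrackRights(
    isrc="US-S1Z-99-00001",
    iswcs=("T-123.456.789-0",),
    creator_isnis=("0000 0001 2345 678X",),
    ai_involvement="assisted",
)
print(format_problems(track))  # []
```

A track that arrives without a well-formed ISWC or ISNI is exactly the case where a composition or creator share would have nowhere to go, so a check of this kind belongs before any payout logic runs.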

The commercial rationale is obvious. Streaming is the engine that drives the global recorded music business, accounting for around two-thirds of label revenue according to IFPI, and Spotify has an audience of well over 600 million listeners. If AI is indeed going to shape music creation and discovery, building it on licensed data with transparent consent and accountable payouts is the least dangerous course for a platform this size.

Why Labels Are Open to Collaboration on AI Music

The label position on generative AI has hardened following a wave of synthetic recordings and voice clones, which led major rightsholders, coordinated through the Recording Industry Association of America, to sue AI music startups for ingesting copyrighted works without permission. Meanwhile, platforms offering a properly licensed pathway are gaining attention: YouTube unveiled a label-backed Music AI Incubator. U.S. lawmakers have also begun adding protections for voice and likeness, most notably through Tennessee’s ELVIS Act.

Against that backdrop, Spotify’s pitch is practical. Instead of allowing unlicensed AI to run rampant off-platform, it wants to build a system in which artists and rights holders opt into specific rules governing training and generation with their content, giving them access to new revenue streams. The approach also maps onto emerging policy trends, such as transparency obligations under the EU’s AI Act that push platforms to tell users when content is synthetic or AI-assisted.

Enforcement and Attribution Are Critical

Labeling alone won’t be enough. Detection and auditing will need to operate at the speed and scale of contemporary distribution. Luminate has calculated that over 100,000 new tracks reach streaming services every day, which makes automated checks essential. Expect a mix of audio fingerprinting and watermarking, content provenance signals from standards bodies like C2PA, and rights verification services from vendors like Pex and Audible Magic to play roles in Spotify’s stack.

Credit will also have to go beyond featured performers. If an AI tool interpolates a melody, emulates the style of a session player or taps into a licensable voice model, those contributors need to be credited and compensated. The devil is in the split: composition and recording rights will interact with new likeness and training permissions that do not easily fit into existing royalty buckets.
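A small worked example shows why the split is harder than it looks. The Python sketch below divides a payout in whole cents among four buckets and hands out any leftover cent by largest remainder, so the parts always add back to the total. The bucket names and percentages are invented for illustration and do not reflect any announced deal terms; `split_payout` is a hypothetical helper, not an industry formula.

```python
from fractions import Fraction


def split_payout(total_cents: int, shares: dict[str, Fraction]) -> dict[str, int]:
    """Divide a payout in whole cents so the parts always add back to the total."""
    if sum(shares.values()) != 1:
        raise ValueError("shares must sum to exactly 1")
    exact = {name: total_cents * share for name, share in shares.items()}
    payout = {name: int(amount) for name, amount in exact.items()}  # round down
    leftover = total_cents - sum(payout.values())
    # Give each leftover cent to whoever lost the most when rounding down.
    by_remainder = sorted(exact, key=lambda name: exact[name] - payout[name], reverse=True)
    for name in by_remainder[:leftover]:
        payout[name] += 1
    return payout


# Hypothetical split for one AI-assisted track; the percentages are invented.
shares = {
    "recording": Fraction(50, 100),
    "composition": Fraction(30, 100),
    "voice_model_license": Fraction(15, 100),
    "session_player_style": Fraction(5, 100),
}
result = split_payout(1001, shares)
print(result)
# {'recording': 501, 'composition': 300, 'voice_model_license': 150, 'session_player_style': 50}
```

The arithmetic is the easy part. The open question the paragraph above raises is who decides the percentages for the two new buckets, since voice-model and style permissions have no established place in today’s recording and composition splits.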

Impact for Artists and Songwriters Under New AI Deals

The upshot for frontline stars might be controlled experimentation (officially sanctioned vocal clones for remixes, approved duets or localized releases) without the danger of unsanctioned deepfakes undercutting their singles. There should be opt-in settings, usage reporting and per-project licensing that don’t require keeping a lawyer on speed dial. Merlin’s involvement suggests the same artist-first terms could extend to Merlin-licensed independent content.

Composers would benefit if composition-level metadata can easily travel with AI-assisted work. Connecting derived works back to their ISWCs and publishing shares will be key, especially where models are capable of generating melodies or lyrics inspired by particular writers. Done well, this might help reduce the kind of disputes now reaching the courts, in which AI-generated outputs carry no credit.

What This Means for Listeners and Streaming Experience

Consumers may see more personalization that is clearly marked, not buried: AI DJ experiences that offer creator-approved alternatives to algorithm-selected sets, playlist nudges that honor what an artist has allowed and visible badges that distinguish human-only tracks from AI-assisted ones.

A filter that restricts or blocks synthetic voices would be a sensible addition for listeners who want to hear nothing but humans.

In the end, it’s a bet that transparency and consent can turn AI into a feature rather than a flash point. If Spotify and its label partners can provide consistent attribution and fair compensation at that scale, “artist-first” stops being a platitude and becomes the architecture that lets innovation and copyright live in the same playlist.