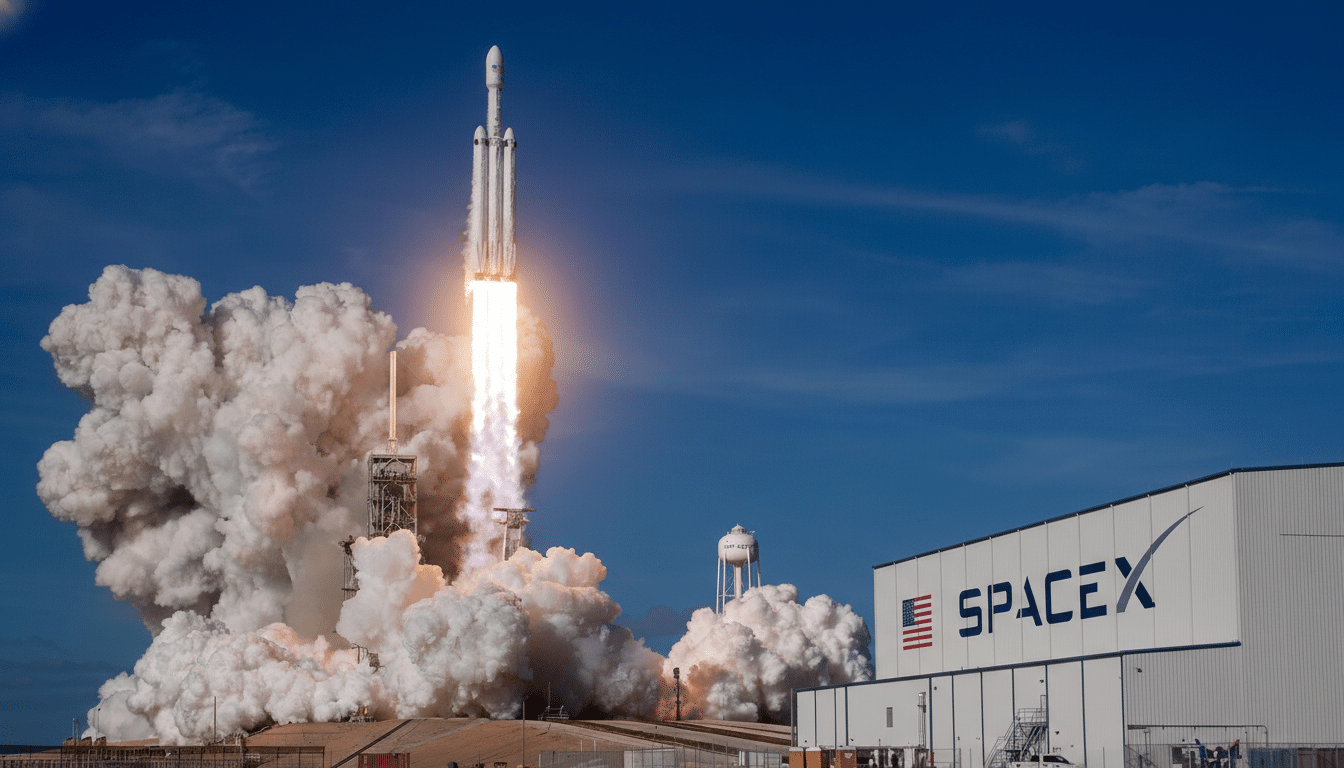

SpaceX has officially absorbed xAI, Elon Musk’s artificial intelligence venture, in a deal that fuses rocketry, satellite infrastructure, and frontier AI under one roof with a stated aim to build space-based data centers. The combined company is reportedly valued at $1.25 trillion, according to Bloomberg, instantly making it one of the most valuable private enterprises on the planet.

Musk framed the move as a way to decouple AI’s growth from the constraints of terrestrial data centers, which are straining power grids and encountering community resistance. By hosting compute in orbit, SpaceX argues it can tap abundant solar energy, shed waste heat by radiating it into space, and reduce the local footprint of AI infrastructure on Earth.

Why Space-Based Data Centers Are Being Considered

AI training and inference workloads are driving a surge in electricity demand. The International Energy Agency has estimated that global data center consumption could approach the upper hundreds of terawatt-hours within a few years, with AI a primary driver. That load collides with permitting delays, land use conflicts, and the slow deployment of new generation and transmission capacity.

Orbit changes the equation. Sunlight in orbit is not dimmed by weather or atmosphere and, in suitable orbits, is nearly continuous, while waste heat can be radiated to deep space through large radiators with no cooling water required. A compute cluster in low Earth orbit could, in theory, scale without siting contentious facilities in neighborhoods already stretched by water and grid limits. That potential will attract attention, especially after xAI’s earlier data center plans drew scrutiny from residents near its facilities in Tennessee.

The Business Logic and Capital Cycle Behind the Plan

Beyond the physics, the merger aligns with SpaceX’s manufacturing cadence. Musk has signaled the architecture will require a steady constellation of compute satellites, creating a built-in replacement cycle because Federal Communications Commission rules require low Earth orbit hardware to be deorbited within five years of the end of its mission. That replenishment loop looks like recurring revenue for launch, satellite production, and on-orbit services.
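The replenishment arithmetic can be sketched in a few lines of Python. This is an illustration only: the fleet size, the satellites-per-launch figure, and the assumption that each satellite serves for about five years are hypothetical inputs, not numbers from SpaceX or the FCC (whose five-year figure concerns disposal, not service life).

```python
import math


def yearly_replacements(fleet_size: int, service_life_years: float) -> int:
    """Satellites that must be launched each year to hold the fleet at a constant size."""
    if fleet_size < 0 or service_life_years <= 0:
        raise ValueError("fleet_size must be >= 0 and service_life_years must be > 0")
    return math.ceil(fleet_size / service_life_years)


def yearly_launches(fleet_size: int, service_life_years: float, sats_per_launch: int) -> int:
    """Launches per year needed to carry the steady-state replacements."""
    if sats_per_launch <= 0:
        raise ValueError("sats_per_launch must be > 0")
    return math.ceil(yearly_replacements(fleet_size, service_life_years) / sats_per_launch)


# Hypothetical inputs: 1,000 compute satellites, 5-year service life, 20 satellites per launch.
print(yearly_replacements(1_000, 5.0))  # 200
print(yearly_launches(1_000, 5.0, 20))  # 10
```

Under these assumed inputs, a fifth of the fleet turns over every year, which is why the plan leans so heavily on launch cadence and cost per kilogram.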

It also diversifies SpaceX’s income beyond broadband. Reuters has reported that as much as 80% of SpaceX’s revenue stems from Starlink launches. Folding in xAI introduces a second high-growth line of business where Starship’s heavy payload capacity and per-kilogram economics could become strategic advantages for hoisting dense racks, radiators, and power systems.

Still, the cash demands are enormous. Bloomberg has reported xAI’s burn rate at roughly $1 billion per month. Turning a space data center network from concept to cash flow means financing multiple fronts at once: AI model training, chip supply, satellite development, launch operations, and ground integration.

Technical and Regulatory Hurdles for Orbital Computing

Putting compute in orbit is not a simple lift-and-shift. Radiation hardening, error correction, vibration tolerance, and thermal control will define the design envelope. Radiators must reject heat without convection, electronics need shielding, and on-orbit servicing or replacement becomes part of routine operations. Latency is another consideration; even in low Earth orbit, round-trip times introduce tens of milliseconds that can affect certain workloads, pushing architects to separate latency-tolerant batch training from interactive inference.
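Two of these constraints lend themselves to back-of-envelope checks. The Python sketch below sizes an idealized radiator with the Stefan-Boltzmann law and computes the speed-of-light floor on round-trip time to a satellite directly overhead. The 1 MW heat load, 300 K radiator temperature, 0.9 emissivity, two radiating faces, and 550 km altitude are all illustrative assumptions, and the radiator model ignores absorbed sunlight and Earth’s infrared, so real hardware would likely need more area.

```python
SIGMA = 5.670374419e-8      # Stefan-Boltzmann constant, W / (m^2 K^4)
SPEED_OF_LIGHT = 299_792_458.0  # m / s


def radiator_area_m2(heat_w: float, temp_k: float,
                     emissivity: float = 0.9, sides: int = 2) -> float:
    """Panel area needed to radiate heat_w watts to deep space.

    Idealized: uniform panel temperature, no absorbed sunlight or Earth infrared.
    """
    if heat_w < 0 or temp_k <= 0 or not 0 < emissivity <= 1 or sides < 1:
        raise ValueError("invalid radiator parameters")
    flux_per_face = emissivity * SIGMA * temp_k ** 4  # W per m^2 of radiating face
    return heat_w / (flux_per_face * sides)


def min_round_trip_ms(altitude_km: float) -> float:
    """Lower bound on ground-satellite-ground delay with the satellite directly overhead."""
    if altitude_km < 0:
        raise ValueError("altitude_km must be >= 0")
    return 2 * altitude_km * 1_000 / SPEED_OF_LIGHT * 1_000


# Hypothetical 1 MW cluster with a 300 K double-sided radiator: roughly 1,200 m^2 of panel.
print(f"{radiator_area_m2(1e6, 300.0):.0f} m^2")
# Hypothetical 550 km orbit: a propagation floor of a few milliseconds.
print(f"{min_round_trip_ms(550.0):.2f} ms")
```

The propagation floor is only a few milliseconds; end-to-end latency is typically higher once slant paths, queuing, and terrestrial routing are included. The radiator figure shows why heat rejection, not just power generation, shapes the size of an orbital compute platform.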

Spectrum coordination and debris mitigation will also be under the microscope. The FCC governs licensing and deorbit compliance in the United States, the International Telecommunication Union allocates frequencies, and NASA has become increasingly vocal about managing orbital traffic and collision risk. Expect regulators to probe how compute constellations interact with existing broadband fleets and Earth observation satellites.

How It Changes the AI Landscape and the Space Race

The tie-up tightens Musk’s integrated stack: rockets, spacecraft, satellite broadband, and now AI at scale. It places SpaceX in a different competitive lane than terrestrial AI leaders like OpenAI, Google, and Anthropic by controlling launch logistics and orbital real estate rather than leasing power-hungry warehouse floors. Amazon’s Project Kuiper underscores how critical vertically integrated satellite networks are becoming, while European efforts such as the ASCEND concept have explored orbital data processing as a route to energy efficiency and data sovereignty.

There are analogs on Earth. Microsoft’s Project Natick, which operated a sealed data center on the seafloor, demonstrated that unconventional environments can offer energy and cooling advantages. Space raises the stakes, but the logic is similar: place compute where power is plentiful and environmental control is intrinsic, then network it globally.

The merger also ripples through xAI’s product roadmap. With pressure intensifying in the chatbot race, and with reporting by The Washington Post detailing moderation challenges around xAI’s Grok, the company gains a partner capable of supplying dedicated compute at scale rather than competing on traditional cloud capacity alone.

What to Watch Next as Space-Based Compute Advances

Key milestones will signal feasibility. Look for demonstrations of orbital compute modules, radiator performance, and end-to-end links routed through Starlink. Watch for regulatory filings that reveal mass, power budgets, and deorbit plans. And track Starship’s cadence; high-frequency, lower-cost launches are essential if compute satellites need periodic replenishment.

If SpaceX can prove reliable on-orbit compute and a clear business model for AI training and inference in space, the company could redefine not just how we move data, but where we process it. If it stumbles, it will underline why most of the world keeps its servers planted firmly on the ground.