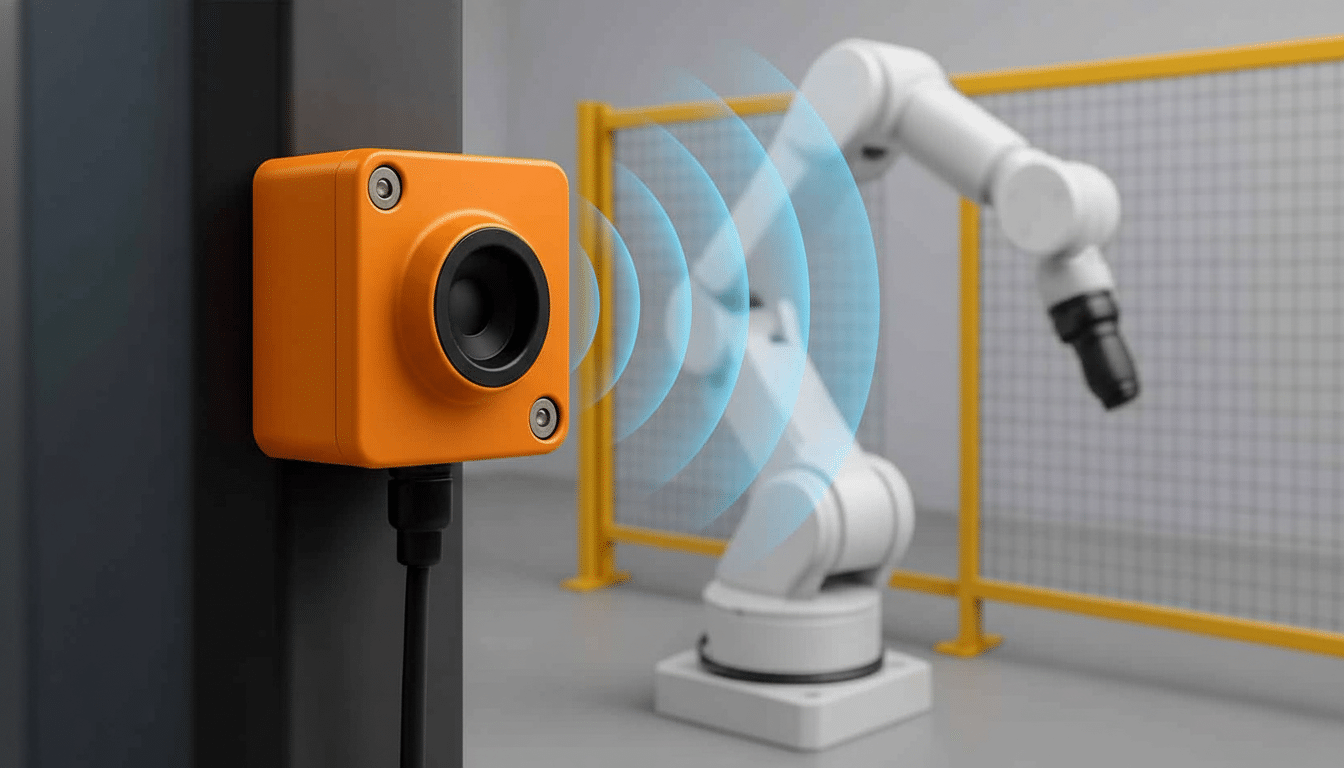

As robots move out of caged workcells and into spaces shared with humans, perception stops being a luxury and becomes a safety feature. Sonair, of Oslo, is wagering that three-dimensional ultrasound (acoustic detection and ranging, or ADAR) can fill the most dangerous perception gaps more reliably, and at a fraction of the cost of many optical alternatives.

Why ultrasound is under the safety microscope

Ultrasonic sensing has one fundamental advantage in human-machine environments: sound is unaffected by bad lighting, glare, smoke, or the transparent and shiny surfaces that can be nightmares for cameras and laser scanners. By emitting high-frequency pulses and reading the returning echoes, a 3D ultrasonic array can deliver volumetric awareness: an estimate of what occupies a space, rather than only the point where a thin beam happened to strike an object at a given instant.
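
The pulse-echo principle described above comes down to simple arithmetic: a pulse travels to the reflector and back, so the range is half the round-trip path. The sketch below is a minimal illustration, not Sonair's implementation; the function name and the fixed speed of sound (roughly 343 m/s in dry air at 20 °C) are assumptions for the example.

```python
# Approximate speed of sound in dry air at 20 degrees C (assumed constant here;
# in practice it varies with temperature and humidity).
SPEED_OF_SOUND_M_S = 343.0


def echo_range_m(round_trip_s: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Return the one-way distance to a reflector from an echo's round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels out and back, so the range is half the total path.
    return speed_m_s * round_trip_s / 2.0


# A 10 ms round trip corresponds to a reflector roughly 1.7 m away.
print(echo_range_m(0.010))
```

A real array would repeat this calculation per echo and per direction to build up a 3D picture of the space.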

For safety, volumetric coverage matters. Standards like ISO 10218 and ISO/TS 15066 specify approaches such as speed-and-separation monitoring and power-and-force limiting that depend on reliable detection of people, body parts, and unexpected intrusions. Ultrasonic arrays can cover a zone in such a way that a blocked line of sight does not translate into a loss of detection.
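
To make speed-and-separation monitoring concrete, here is a simplified sketch of the kind of check it involves: compute the distance a person and the robot could close before the robot comes to rest, then stop if the measured separation falls below it. The formula is a reduced form of the protective separation distance idea in ISO/TS 15066 with the sensor and position uncertainty terms folded into a single margin; the function names and example values are assumptions for illustration, not normative figures.

```python
def protective_distance_m(
    human_speed_m_s: float,
    robot_speed_m_s: float,
    reaction_time_s: float,
    stopping_time_s: float,
    stopping_distance_m: float,
    margin_m: float = 0.0,
) -> float:
    """Simplified minimum separation needed for the robot to stop in time."""
    # Distance the person can cover while the system reacts and the robot brakes.
    human_travel = human_speed_m_s * (reaction_time_s + stopping_time_s)
    # Distance the robot covers before braking begins.
    robot_travel = robot_speed_m_s * reaction_time_s
    # Braking distance plus a lumped margin for measurement uncertainty.
    return human_travel + robot_travel + stopping_distance_m + margin_m


def must_stop(measured_separation_m: float, required_m: float) -> bool:
    """True when the measured separation is below the required distance."""
    return measured_separation_m < required_m


# Example values are illustrative only.
required = protective_distance_m(
    human_speed_m_s=1.6,
    robot_speed_m_s=1.0,
    reaction_time_s=0.1,
    stopping_time_s=0.3,
    stopping_distance_m=0.15,
    margin_m=0.1,
)
print(round(required, 2), must_stop(0.8, required))
```

The quality of the sensor enters through the measured separation and the margin: a sensor that misses a person, or reports range with large uncertainty, forces either a bigger margin or a slower robot.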

Beyond LiDAR: Get rid of the blind spots

LiDAR is still a workhorse for mapping and navigation, but it’s not a panacea. Even expensive units often have difficulty with dark and transparent materials, and small or low-profile hazards can slip between beams that are spaced too far apart. Sonair presents its ADAR approach as complementary: if LiDAR “paints” a scene, 3D ultrasound “floods” it, increasing the chances that close-range and low-contrast threats are detected in time for a protective stop.

Technically, the benefit shows up in coverage and redundancy. A dense field of acoustic beams, combined with timestamped echoes, can create an immediate buffer around mobile robots and robotic arms. In real-world terms, that could mean detecting a hand as it enters a work envelope, or spotting a person walking into an AGV’s path, so that the robot performs a controlled stop before there is an incident.

Built to integrate, not isolate, within existing stacks

Trust only comes when the safety technology fits within existing stacks.

Sonair’s 3D sensor outputs industry-standard 3D data, ready to plug in beside cameras, LiDAR, and safety relays. That lets integrators fuse sensor data and lean on whichever modality is strongest in a given context, and it supports the documentation and determinism required by functional safety standards like ISO 13849 and IEC 61508. The company stresses that the sensor is meant to complement, rather than replace, a robot’s existing perception.

Practical considerations matter too. Ultrasonic sensors are small and relatively power-efficient, which makes them potentially useful for battery-powered mobile platforms. And because the sensor relies on sound rather than light, performance holds up in dust and changing ambient light, conditions typical of warehouses, factories, and construction sites.

Real-life use cases are beginning to buck the trend

Sonair cites interest from robotics manufacturers incorporating the sensor in next-generation mobile robots and arms for enhanced close-range detection and depth perception. Another pressing use case is industrial safety: is there a person in an area where they could be crushed by a press, CNC cell, or automatic conveyor? If so, the machine stops. Both applications hew closely to longstanding OSHA and NIOSH guidance on machine guarding, except that the guard is now perception-based and dynamically enforced.

For high-mix logistics, for instance, an ultrasonic halo can serve as a final layer in a safety stack: the robot operates at productive speeds using its standard perception, and when ultrasound detects an intrusion into a protected bubble, the robot decelerates to a reduced speed or halts, in line with collaborative robot (cobot) practice.
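
The protected-bubble behavior described above can be sketched as a two-tier zone check on the nearest detected point. This is a minimal illustration under stated assumptions: the point format (x, y, z in metres, centred on the robot), the zone radii, and the speed values are all hypothetical, and a certified safety function would involve far more than this logic.

```python
import math
from typing import Iterable, Tuple

# Assumed point format: (x, y, z) in metres, with the robot at the origin.
Point = Tuple[float, float, float]


def nearest_distance_m(points: Iterable[Point]) -> float:
    """Distance from the robot to the closest detected point (inf if none)."""
    return min(
        (math.sqrt(x * x + y * y + z * z) for x, y, z in points),
        default=math.inf,
    )


def speed_command(
    points: Iterable[Point],
    stop_radius_m: float = 0.5,   # hypothetical inner (stop) zone
    slow_radius_m: float = 1.5,   # hypothetical outer (reduced-speed) zone
    full_speed: float = 1.0,
    reduced_speed: float = 0.25,
) -> float:
    """Map the nearest detection to a speed scale factor."""
    d = nearest_distance_m(points)
    if d < stop_radius_m:
        return 0.0            # intrusion inside the stop zone: halt
    if d < slow_radius_m:
        return reduced_speed  # intrusion inside the outer bubble: slow down
    return full_speed         # bubble is clear: run at productive speed


print(speed_command([]))                                   # clear bubble
print(speed_command([(1.0, 0.0, 0.0)]))                    # outer zone
print(speed_command([(0.3, 0.0, 0.0), (2.0, 0.0, 0.0)]))   # stop zone
```

Keeping the check this simple is deliberate: the ultrasonic layer only decides whether the bubble is violated, while path planning stays with the robot's primary perception.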

Cost and coverage as distinguishing factors

The business case depends on two levers: field of view per sensor and price. Sonair contends that an ultrasonic array can cover more near-field volume per dollar than many scanning LiDARs, especially for safety zones within a few meters of a robot. If that holds up in deployments, integrators could cut sensor counts while adding redundancy, an appealing prospect as fleets grow into the hundreds.

There are trade-offs. Ultrasound can be attenuated by soft materials and can produce multipath reflections in cluttered structures. Mitigations such as coded pulses, multiple-angle emissions, and probabilistic filtering were once uncommon in acoustic sensing but are now considered standard elements of such systems, which is also said to explain Sonair’s approach of outputting clean, point-cloud-like data rather than just range readings.
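
As one illustration of the coded-pulse idea mentioned above, the sketch below correlates a received signal against a known transmit code and picks the offset with the strongest match, so that stray spikes which do not match the code score low. It uses a Barker-13 sequence on synthetic data; this is a generic matched-filter example, not a description of Sonair's signal processing, and the signal values are made up for the demonstration.

```python
# Barker-13 code: a binary sequence with low autocorrelation sidelobes.
BARKER_13 = [1, 1, 1, 1, 1, -1, -1, 1, 1, -1, 1, -1, 1]


def correlate(received, code):
    """Sliding dot product of the received samples with the known code."""
    n_offsets = len(received) - len(code) + 1
    return [
        sum(received[i + k] * code[k] for k in range(len(code)))
        for i in range(n_offsets)
    ]


def echo_delay_samples(received, code=BARKER_13):
    """Sample offset at which the code best matches the received signal."""
    scores = correlate(received, code)
    return max(range(len(scores)), key=scores.__getitem__)


# Synthetic received signal: a coded echo starting at sample 20,
# plus an unrelated spike at sample 5 standing in for interference.
received = [0.0] * 50
for k, chip in enumerate(BARKER_13):
    received[20 + k] += 0.5 * chip
received[5] += 0.4

print(echo_delay_samples(received))  # 20
```

The delay in samples, divided by the sampling rate, gives the round-trip time used for ranging.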

Market background: safety is the driver of growth

The timing is favorable. The International Federation of Robotics estimates that the global active stock of industrial robots is more than 3.9 million, while mobile robots are proliferating in warehouses and hospitals. As deployments rise, so does scrutiny: buyers now ask for both throughput and behavior that conforms to safety norms, meaning real-world performance in mixed traffic.

Investors have taken note. Recently, Sonair secured a $6 million round from Scale Capital, Investinor, and ProVenture to ramp up production and customer integrations. The company says it doesn’t have much direct competition today in 3D ultrasonic sensing for robots, although adjacent technologies, from radar to time-of-flight cameras, will compete for a place in the safety stack.

What to watch next for 3D ultrasound in robot safety

The next 12 months will show whether 3D ultrasound takes its place alongside cameras and LiDAR in collaborative and mobile robots. Key indicators:

- Published safety-system performance data provided by pilot customers.

- Evidence of integration into mainstream controllers, documented through public customer announcements and other third-party sources.

If the technology reliably turns close calls into safe stops without impeding productivity, expect ultrasound to become as common as a bumper or E-stop.