Runway introduced its first world model, GWM-1, and brought native audio and multi-shot storytelling to its newest video system, Gen 4.5—a significant step toward production-ready generative video and simulation tools.

The dual announcement places the company in the midst of a new kind of arms race among A.I. labs to more accurately render not just pixels, but also how the physical world unfolds over time.

What Runway’s world model is doing to predict change

GWM-1 is a predictor, not a perfect re-creation generator: it predicts the next frame, guiding change to be plausible in real-world physics, lighting, and geometry. Instead of encoding crafted rules, the model is able to capture how objects behave and has commonsense reasoning about causes and effects across sequences. That’s a strategy that resembles current academic work on predictive world models, an avenue of pursuit spearheaded by researchers at labs such as Google DeepMind and Meta’s Fundamental AI Research group.

Runway is launching with three focused versions—GWM-Worlds, GWM-Robotics, and GWM-Avatars—that it will eventually combine. GWM-Worlds is akin to an interactive sandbox: users specify a scene through instruction or an exemplar image and the system generates an explorable environment at 24 fps and 720p resolution where all elements are in agreement with one another depth-wise, material-wise, and dynamics-wise. The gaming angle is obvious here, but the company says that interactive nature also makes it a useful tool for training agents to interact with and navigate physics-based systems.

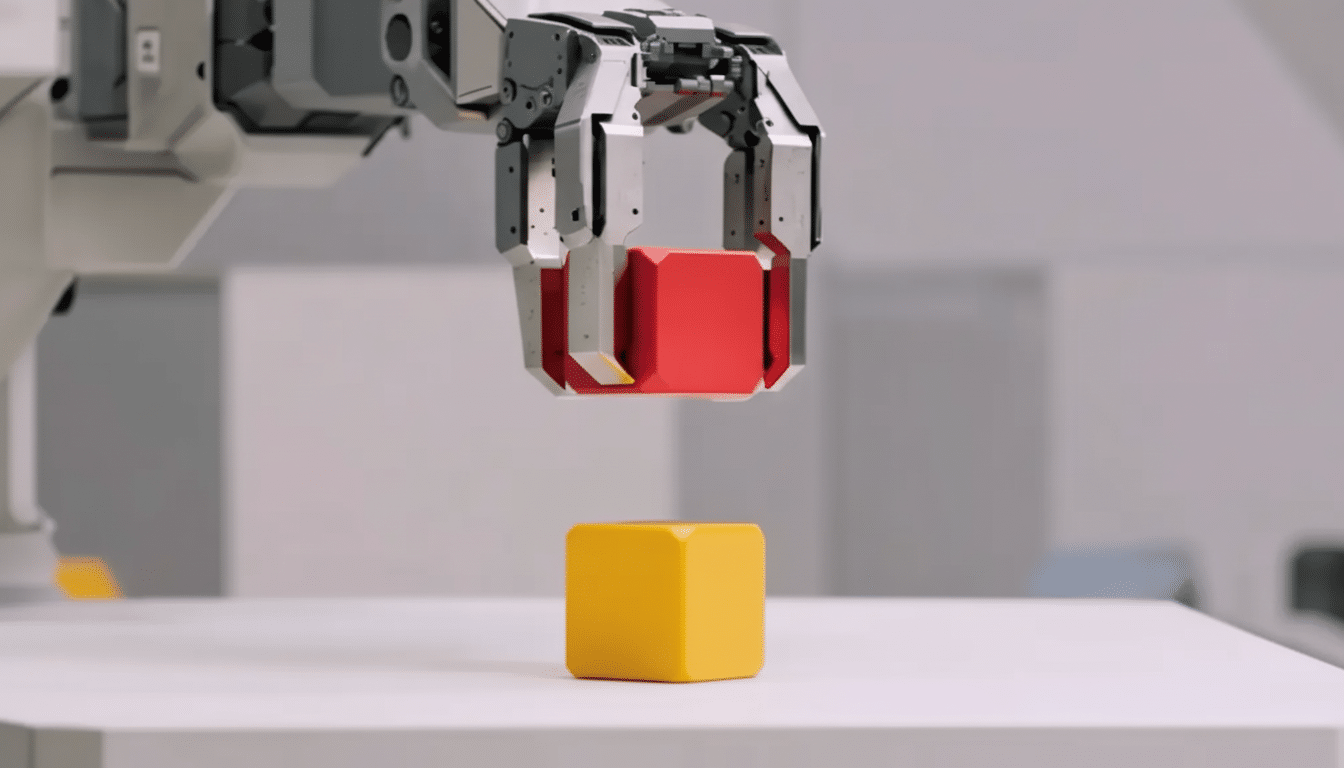

GWM-Robotics is focused on the use of synthetic data generation. By changing conditions like weather, obstacles, and lighting, it is designed to reveal policy failures before robots encounter them in the physical world. Robotics teams have for years leaned on simulation to avoid expensive trial and error; synthetic stress testing is a regular feature of autonomous driving and warehouse automation. Runway says GWM-Robotics will be available as an SDK, and it’s in talks with robotics companies and corporations to issue pilot programs.

GWM-Avatars specializes in digital humans for communication, training, and entertainment. It joins a crowded field that includes D-ID, Synthesia, Soul Machines, and in-house research efforts at big labs. The key difference here is a shared world model core able to potentially interpolate between plausible behaviors not only in motion and gaze but also in scene interaction, rather than exposing avatars as isolated talking heads.

Recently added features in Gen 4.5 for video creators

Runway also updated its Gen 4.5 video model with native audio generation and editing and long-form multi-shot workflows. You can enter up to 1 minute of footage with the same characters, synced dialogue, room tone, and staged coverage from multiple angles. There’s more to the audio argument than meets the ears: it eliminates the friction of cobbling together separate speech and effects tools, giving a boost to lip-sync and pacing while keeping everything in the same temporal model.

The move to Gen 4.5 has been strong on public benchmarks. The model recently surpassed earlier attempts from Google and OpenAI on the community-run Video Arena leaderboard, suggesting gains in coherence and visual quality. Now, the latest update pushes it closer to rivals that do have these kinds of all-in-one video suites—especially Kling, which has positioned multi-shot storytelling and audio as table stakes for pro workflows. Runway says paid plan users have access to the new features.

Why world models matter for agents, robotics, and media

World models are a bet at first principles: if a system can learn the dynamics of reality, it has potential to generalize far beyond its training set, in ways that make it useful for agents (of all kinds), robotics, and interactive media. Pixel prediction—not only ISP—is a method that could greatly improve spatial and temporal accuracy, but the price to pay is computationally expensive. If the approach scales, it could reduce the cost and risk involved in real-world experimentation by enabling developers to practice extreme cases in a high-fidelity simulator before deploying them.

For creative industries, local audio plus consistent characters and multi-shot editing pave the way for an end-to-end previsualization pipeline. Production teams can prototype sequences, refine dialogue timing, and iterate on camera language—all without having to exit a single tool. For business, that same capability is about training videos, digital assistance, and interactive demos that feel seamless instead of pieced together.

Competitive and research context across labs and vendors

Runway positions GWM-1 as a more general system than Google’s Genie-3, which also learns generative dynamics but was framed mostly as a generator of simulated data. Meta’s focus on world modeling through I-JEPA and related work, however, is indicative of a more general trend toward prediction-first learning. In the meantime, Nvidia’s ecosystem around simulated environments and robotics provides complementary infrastructure for teams requiring closed-loop testing at scale.

As capabilities become more refined, concerns about safety and provenance grow larger. With realistic avatars that feature lip movement and real-time voice synchronization, better watermarking and content provenance are also needed, with efforts being presented by industry groups including the Coalition for Content Provenance and Authenticity. Companies that research world models for robotics will also consider the interpretability of models, failure analysis, and auditability in addition to raw performance.

What comes next as Runway merges worlds, robotics, avatars

Runway says it plans to coalesce Worlds, Robotics, and Avatars into a single framework that would allow agents, environments, and human surrogates to be linked by the same physics-informed backbone. If the company is able to continue scaling GWM-1 while running efficiently, it could end up being a general-purpose substrate for both synthetic training data and interactive content—bridging the gulf between creative tooling and embodied AI.