Security researchers are sounding the alarm over a wave of unsecured AI apps on Google Play that are exposing Android users’ photos, videos, and identity documents. Investigations have traced the leaks to sloppy cloud configurations and hardcoded credentials inside popular apps, leaving vast troves of personal data sitting in publicly accessible storage.

What Researchers Uncovered About Android AI Data Leaks

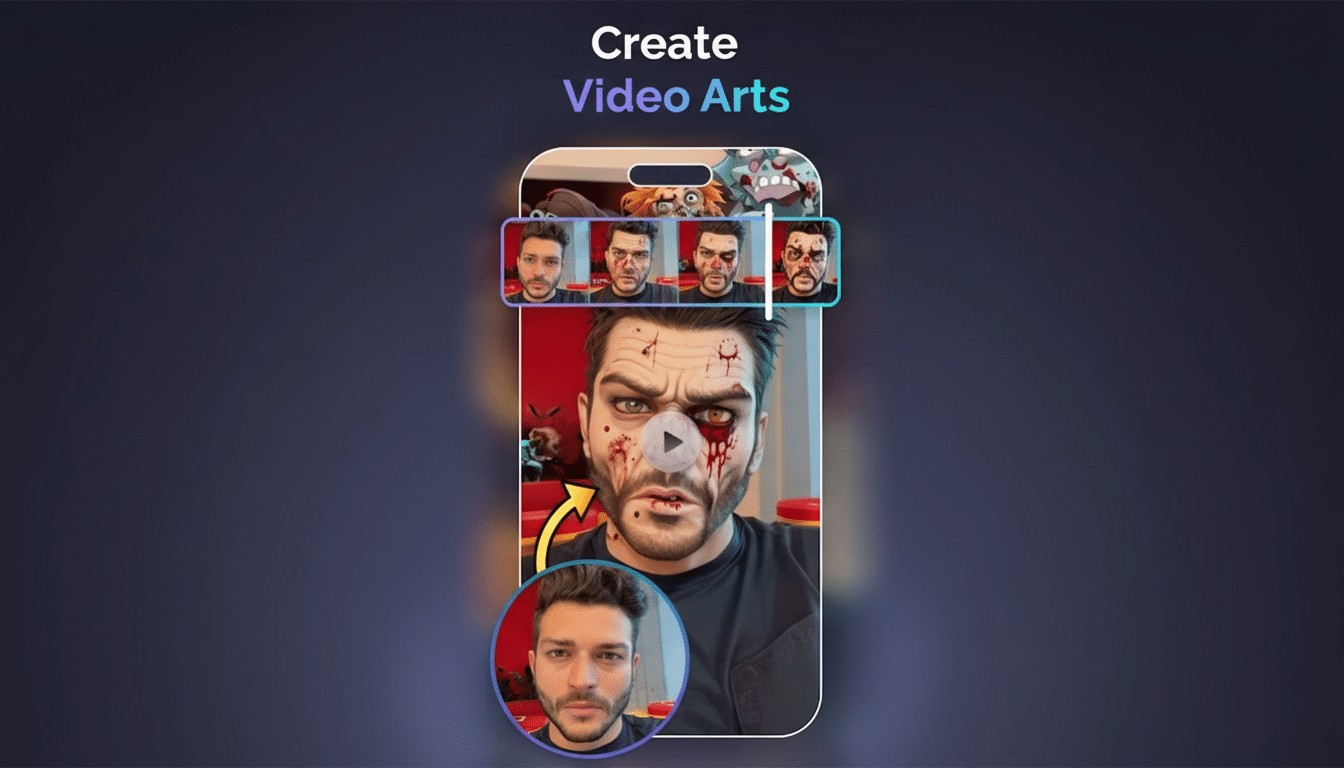

In one case examined by Cybernews, the "Video AI Art Generator & Maker" app left more than 12 terabytes of user media openly accessible because of a misconfigured Google Cloud Storage bucket. Researchers said the exposed data included about 1.5 million user images, over 385,000 videos, and millions of AI-generated files. The app had roughly 500,000 installs when the exposure was identified.

Another app, IDMerit, inadvertently exposed know-your-customer (KYC) files containing full names, addresses, dates of birth, IDs, and contact details. The affected users spanned at least 25 countries, with a heavy concentration in the U.S. Cybernews reported that the dataset totaled around a terabyte. Both developers fixed the issues after being notified, but the breadth of access shows how quickly personal data can spill when basic safeguards fail.

Beyond isolated incidents, the researchers highlighted a systemic problem: 72% of the hundreds of Android apps they analyzed exhibited risky practices such as hardcoded API keys or insecure cloud storage. That creates a low-friction path for attackers to harvest data at scale without having to compromise devices directly.
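Part of what makes this low-friction is that spotting a candidate key requires little more than pattern matching over an app's extracted strings. The Python sketch below assumes those strings have already been pulled from a decompiled APK. The `AIza` prefix pattern is one commonly used by open-source secret scanners for Google API keys. The function name and sample value are hypothetical.

```python
import re

# Heuristic: Google API keys usually appear as "AIza" followed by 35
# URL-safe characters. Real secret scanners check many more formats.
GOOGLE_API_KEY = re.compile(r"AIza[0-9A-Za-z_\-]{35}")


def find_candidate_keys(text: str) -> list[str]:
    """Return unique substrings of `text` shaped like Google API keys, in order."""
    found: list[str] = []
    for match in GOOGLE_API_KEY.findall(text):
        if match not in found:
            found.append(match)
    return found


if __name__ == "__main__":
    # Hypothetical strings.xml line from a decompiled APK, with a fake key.
    sample = '<string name="api_key">AIza' + "A" * 35 + "</string>"
    for key in find_candidate_keys(sample):
        # Print only a prefix so the scan output does not re-leak the secret.
        print("possible hardcoded key:", key[:8] + "...")
```

A regex match only flags a candidate. Whether the key actually unlocks anything depends on the restrictions configured for it on the server side.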

Why AI Apps Are Uniquely Risky for User Privacy

AI utilities often combine sensitive user uploads (selfies, IDs, voice notes) with generated outputs stored on third-party clouds. That combination multiplies the attack surface. Developers juggle model providers, content delivery networks, and storage buckets, and a misconfiguration at any link can expose the whole chain.

Security teams regularly warn against “hardcoding secrets,” a shortcut where developers embed API keys or admin passwords inside the app code. The OWASP Mobile Top 10 lists this as a critical flaw because keys can be extracted with basic reverse engineering, unlocking back-end databases or cloud buckets. Add permissive bucket policies—such as granting public reads to “allUsers”—and private media becomes web-accessible with no authentication.
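A public-read grant like this is also easy to detect from the defender's side. The sketch below assumes the bucket's policy has been exported as JSON in Cloud Storage's IAM format, meaning a `bindings` list of `role`/`members` entries. The helper name and the sample policy are hypothetical. It treats `allAuthenticatedUsers` as public too, because that member covers anyone signed in to any Google account.

```python
# IAM members that expose a Cloud Storage bucket beyond the owning project:
# "allUsers" is anyone on the internet; "allAuthenticatedUsers" is anyone
# signed in to any Google account, which is effectively public too.
PUBLIC_MEMBERS = {"allUsers", "allAuthenticatedUsers"}


def public_bindings(policy: dict) -> list[tuple[str, str]]:
    """Return (role, member) pairs in an IAM policy that grant public access."""
    findings = []
    for binding in policy.get("bindings", []):
        for member in binding.get("members", []):
            if member in PUBLIC_MEMBERS:
                findings.append((binding.get("role", "<unknown role>"), member))
    return findings


if __name__ == "__main__":
    # Hypothetical exported policy for a media bucket.
    policy = {
        "bindings": [
            {"role": "roles/storage.objectViewer", "members": ["allUsers"]},
            {"role": "roles/storage.admin", "members": ["user:ops@example.com"]},
        ]
    }
    for role, member in public_bindings(policy):
        print(f"PUBLIC: {member} has {role}")
```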

AI pipelines create other subtle leak paths, too. LLM and image tools may log prompts and uploads for “quality improvement,” while EXIF metadata in images can reveal location or device details. Without rigorous data minimization and retention limits, these logs accumulate into high-value targets.
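Stripping metadata before upload is one concrete form of data minimization. The sketch below removes EXIF (APP1 "Exif") segments from a JPEG using only the Python standard library. It is a simplified walk of the JPEG marker structure and assumes a well-formed file. A production app would more likely rely on a maintained imaging library.

```python
import struct


def strip_exif(jpeg: bytes) -> bytes:
    """Return a copy of a JPEG with EXIF (APP1 "Exif") segments removed.

    Walks the marker segments that precede the image data. Everything from
    the Start of Scan (SOS) or End of Image (EOI) marker onward is copied
    unchanged.
    """
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG (missing SOI marker)")
    out = bytearray(jpeg[:2])
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError("malformed JPEG segment")
        marker = jpeg[pos + 1]
        if marker in (0xDA, 0xD9):  # SOS or EOI: no more header segments
            break
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        segment = jpeg[pos:pos + 2 + length]
        is_exif = marker == 0xE1 and segment[4:10] == b"Exif\x00\x00"
        if not is_exif:
            out += segment  # keep every non-EXIF header segment as-is
        pos += 2 + length
    out += jpeg[pos:]  # compressed image data and trailer
    return bytes(out)
```

Only the header segments are rewritten, so the pixel data stays byte-for-byte identical while GPS coordinates and device details stored in EXIF are dropped.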

Google’s Safeguards and Gaps in Play Store Oversight

Google Play Protect scans apps for malware, and Play's Data Safety section is meant to show users how apps handle personal data. But the labels are self-reported by developers. A Mozilla Foundation review found widespread inconsistencies between what many apps claimed and their actual practices, raising questions about how well users can rely on the disclosures.

Google has tightened SDK controls and invested in the App Defense Alliance to improve pre-publication vetting. Still, exposures stemming from misconfigured cloud storage or leaked keys may sit outside what static scanning can reliably catch. Put simply, if an app’s back end is open, no on-device permission prompt will save user data already in the cloud.

The Real-World Fallout from Exposed Android User Data

Leaked KYC documents supercharge fraud: criminals can assemble synthetic identities, pass facial verification, or execute SIM swaps. Personal photos and videos fuel extortion, harassment, and invasive face-searching. Even when developers patch, copies of exposed data may already be scraped and traded, making timely containment critical.

How Android Users Can Reduce Risk When Using AI Apps

- Favor established developers with clear company identities and active security contacts; scrutinize install counts, recent updates, and independent reviews.

- Check the Data Safety section and privacy policy for storage location, retention periods, and third-party sharing. Avoid apps that collect IDs or biometrics without a compelling reason.

- Grant the minimum permissions. Deny broad photo library access when an app supports picking individual files, revoke storage and media permissions when they are not needed, and periodically clear caches and delete uploaded content from within the app.

- Do not submit KYC documents to unfamiliar apps. Where possible, prefer on-device processing and opt out of “improve the model” data collection.

What Developers and Platforms Must Do to Prevent Data Leaks

Developers should remove secrets from app code, rotate keys, and use short-lived, scoped tokens issued server-side. Enforce least-privilege access on cloud storage, disable public access, and gate downloads with signed URLs that expire quickly. Encrypt sensitive media at rest and purge it promptly.
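The expiring-link idea can be sketched as an HMAC computed over the object path and an expiry timestamp. This is a generic illustration, not Cloud Storage's own signed URL format. Storage providers implement signed URLs with their own signing schemes, and those should be preferred in practice. `SECRET`, `sign_url`, and `verify_url` are hypothetical names, and the key is assumed to live only on the server.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical signing key. It must stay on the server (e.g. in a secret
# manager) and never be compiled into the app.
SECRET = b"replace-with-a-server-side-secret"


def _signature(path: str, expires: int) -> str:
    # Sign both the object path and the expiry so neither can be altered.
    message = f"{path}\n{expires}".encode()
    return hmac.new(SECRET, message, hashlib.sha256).hexdigest()


def sign_url(path: str, ttl_seconds: int, now: Optional[float] = None) -> str:
    """Return `path` with an expiry timestamp and HMAC signature appended."""
    issued = time.time() if now is None else now
    expires = int(issued + ttl_seconds)
    query = urlencode({"expires": expires, "sig": _signature(path, expires)})
    return f"{path}?{query}"


def verify_url(url: str, now: Optional[float] = None) -> bool:
    """Accept the URL only if its signature matches and it has not expired."""
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    try:
        expires = int(params["expires"][0])
        sig = params["sig"][0]
    except (KeyError, IndexError, ValueError):
        return False
    current = time.time() if now is None else now
    if current > expires:
        return False
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(sig, _signature(parsed.path, expires))
```

The signature covers the path and the expiry, so editing either one invalidates the link. A short `ttl_seconds` limits how long a leaked URL stays useful.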

Adopting the OWASP Mobile Application Security Verification Standard, conducting third-party penetration tests, and running public bug bounties can catch issues before release. Platforms can help by validating Data Safety claims, flagging exposed buckets tied to published apps, and penalizing repeat offenders.
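Before a platform can flag exposed buckets tied to an app, it first has to find which buckets the app references. The sketch below shows one hypothetical way to do that: it extracts Google Cloud Storage bucket names from an app's strings using the `gs://`, path-style, and virtual-hosted URL forms. Each extracted name could then be checked for anonymous access. Bucket names built at runtime and Firebase default bucket names are not covered, and the sample names are made up.

```python
import re

# Bucket names use lowercase letters, digits, dots, dashes, and underscores,
# and must start and end with a letter or digit.
_NAME = r"[a-z0-9][a-z0-9._-]*[a-z0-9]"

# Common ways an app can reference a Cloud Storage bucket.
BUCKET_PATTERNS = [
    re.compile(rf"gs://({_NAME})"),
    re.compile(rf"https?://storage\.googleapis\.com/({_NAME})"),
    re.compile(rf"https?://({_NAME})\.storage\.googleapis\.com"),
]


def bucket_references(text: str) -> set[str]:
    """Return the set of bucket names referenced anywhere in `text`."""
    found: set[str] = set()
    for pattern in BUCKET_PATTERNS:
        found.update(pattern.findall(text))
    return found
```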

Bottom Line: Android AI Convenience Versus User Privacy

AI convenience on Android shouldn’t come at the cost of privacy. The latest findings show too many AI apps treat cloud security as an afterthought, with predictable and preventable failures. Until vetting improves, users should be choosy about which AI tools get their most personal data—and developers must treat that data like the liability it is.