Qualcomm is acquiring Arduino, bringing together one of the world’s most widely used maker platforms with a company that has been pushing on-device artificial intelligence into everything from phones to robots to connected sensors. The companies say the Arduino brand will live on under the Qualcomm umbrella, and a fresh crop of AI-focused tools and boards is coming for hobbyists, students and professional prototypers.

Alongside the deal, Arduino announced the Uno Q, a single-board computer built on what it calls a “dual brain” architecture that pairs a Linux-capable processor with a real-time microcontroller. It is powered by Qualcomm’s industry-focused QRB2210 and is aimed squarely at edge AI projects that can listen, see and act without relying on the cloud.

- What Changes for Makers Under the Qualcomm-Arduino Deal

- Arduino Uno Q Puts Edge AI at One’s Fingertips

- A Strategic Bet for Edge AI Across Devices and IoT

- Open Source and Community Priorities Under Qualcomm

- How It Compares to Other Maker and Edge AI Platforms

- Tools and Next Steps for Developers and Educators

What Changes for Makers Under the Qualcomm-Arduino Deal

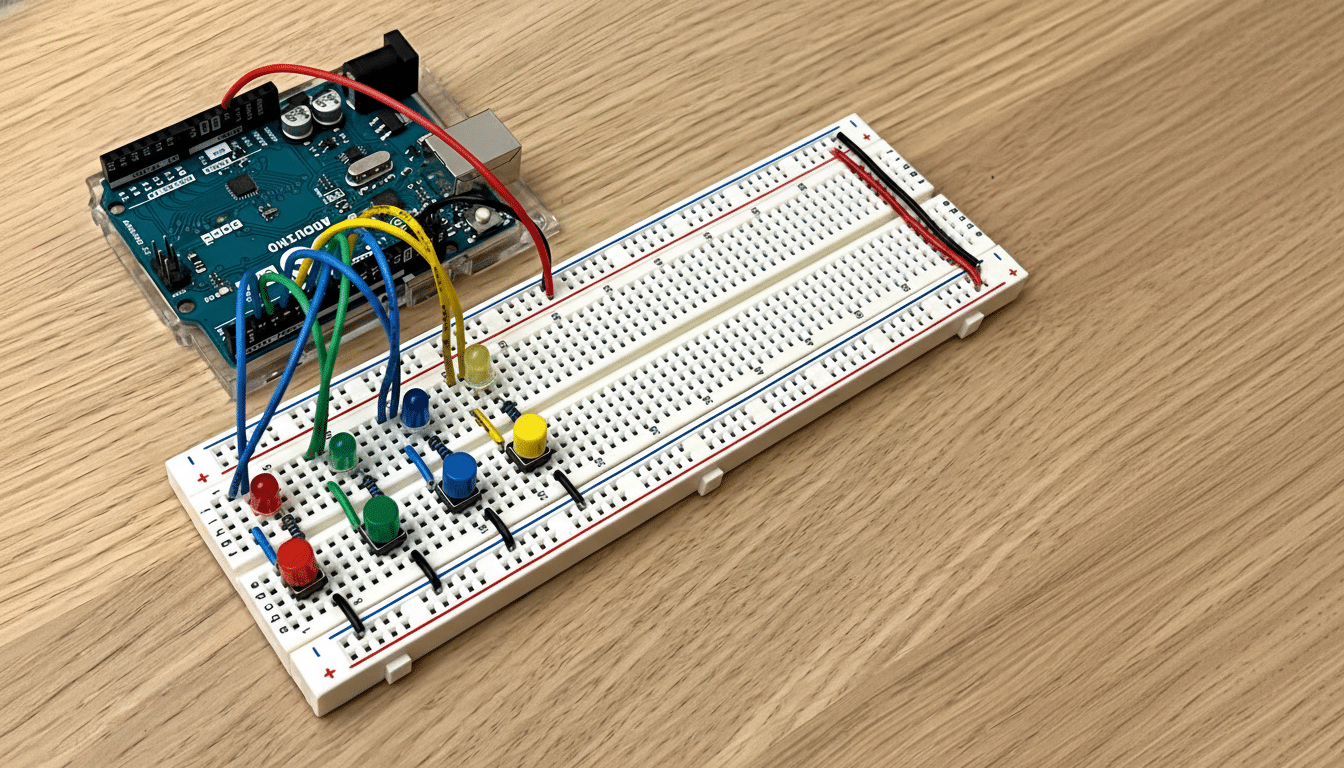

For Arduino’s ecosystem, which now counts more than 33 million developers across classrooms, labs and garages worldwide, the near-term promise is access to Qualcomm’s software stacks, silicon roadmaps and global distribution. That should translate into better wireless performance, richer multimedia pipelines and leaner model deployments on low-power hardware.

Qualcomm characterizes the acquisition as a move to “supercharge” developer productivity. In practice, that means tighter integration across the toolchain, from firmware and RTOS all the way up to Linux userland, Python and AI runtimes. Expect more consistent documentation, reference designs and samples across camera, audio and sensor inputs.

Arduino Uno Q Puts Edge AI at One’s Fingertips

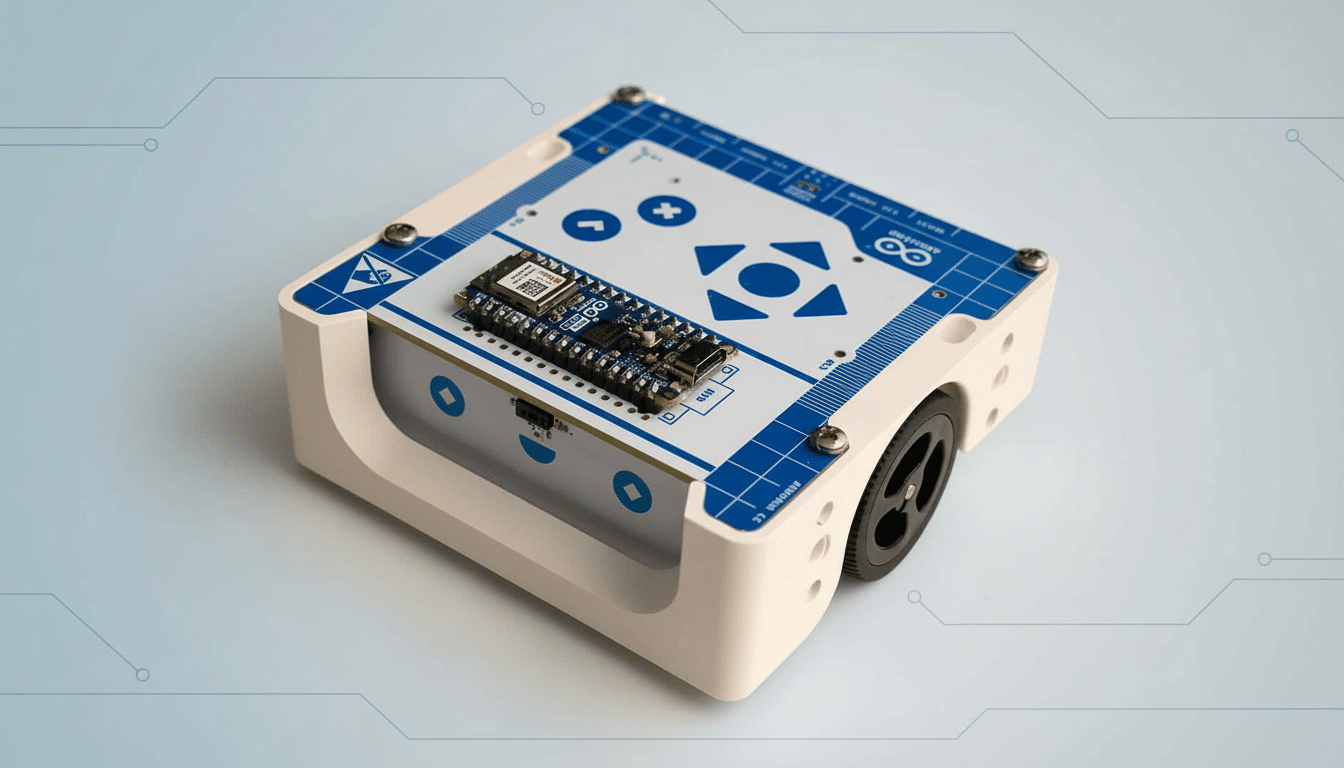

What sets the Uno Q apart is its dual-processor design: one side runs Linux (with Debian package management) for heavier computation and connectivity, while the other is a microcontroller that runs deterministic, low-latency control loops. This split lets a project run an image classifier or keyword spotter while simultaneously controlling motors, LEDs or environmental sensors with real-time guarantees.
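The article does not say how the two halves exchange data. As a minimal sketch, assuming some byte-oriented link between the Linux side and the microcontroller, the Linux side could pack each decision from its AI pipeline into a small fixed-size frame that the microcontroller validates before acting on it. The frame layout, command IDs and checksum below are hypothetical illustrations, not an Arduino or Qualcomm protocol.

```python
import struct
from typing import Optional, Tuple

# Hypothetical frame layout (not an Arduino or Qualcomm format):
#   byte 0      start marker
#   bytes 1..3  command id (uint8) + value (int16, little-endian)
#   byte 4      8-bit checksum over bytes 1..3
START = 0xA5
BODY = struct.Struct("<Bh")

CMD_SET_MOTOR_SPEED = 0x01  # hypothetical command ids
CMD_SET_LED = 0x02


def checksum(body: bytes) -> int:
    """8-bit additive checksum; a real link would more likely use a CRC."""
    return sum(body) & 0xFF


def encode_frame(cmd: int, value: int) -> bytes:
    """Linux side: pack a command derived from an inference result."""
    body = BODY.pack(cmd, value)
    return bytes([START]) + body + bytes([checksum(body)])


def decode_frame(frame: bytes) -> Optional[Tuple[int, int]]:
    """Microcontroller side (simulated here): validate, then unpack or reject."""
    if len(frame) != BODY.size + 2 or frame[0] != START:
        return None
    body = frame[1:1 + BODY.size]
    if checksum(body) != frame[-1]:
        return None
    return BODY.unpack(body)


# Example: the classifier decided a motor should reverse at speed 300.
frame = encode_frame(CMD_SET_MOTOR_SPEED, -300)
print(decode_frame(frame))  # (1, -300)
```

Fixed-size frames with a cheap integrity check keep parsing on the microcontroller side simple and bounded in time, which suits a deterministic control loop.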

Two configurations are planned. The entry-level model brings 2GB of RAM and 16GB of eMMC storage for $44, while a higher-end option with 4GB of memory and 32GB of storage costs $59. That pricing undercuts many edge AI kits and fits most mainstream maker budgets, which matters for classrooms and rapid prototyping.

The intended use cases are practical:

- Wake-word assistants that can run entirely offline

- Smart security lights that detect people instead of motion

- Noise-classification sensors that monitor devices such as the motors in appliances

- Tiny robots that combine vision and control without needing to be tethered to the cloud
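The security-light idea shows how a classifier changes behavior compared with a plain motion sensor: the light reacts to a “person” score rather than to any movement. The minimal sketch below assumes a hypothetical detector that emits a person confidence between 0 and 1 for each frame; the threshold and hold time are illustrative values, not figures from Arduino.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PersonLight:
    """Hypothetical controller: on while a person is detected, then held briefly."""
    threshold: float = 0.6      # minimum "person" confidence counted as a detection
    hold_seconds: float = 30.0  # stay on this long after the last detection
    last_seen: Optional[float] = None

    def update(self, now: float, person_score: float) -> bool:
        """Feed one detector result (timestamp in seconds); return light state."""
        if person_score >= self.threshold:
            self.last_seen = now
        if self.last_seen is None:
            return False
        return now - self.last_seen <= self.hold_seconds


light = PersonLight()
# A swaying branch produces motion but a low person score, so the light stays off.
print(light.update(0.0, 0.2))   # False
print(light.update(1.0, 0.9))   # True: person detected
print(light.update(20.0, 0.1))  # True: within the 30 s hold
print(light.update(40.0, 0.1))  # False: hold expired
```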

The QRB2210 should be able to run efficient audio, and potentially video, pipelines while the microcontroller handles timing-sensitive tasks.
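For the wake-word case, keyword-spotting models typically emit a score per audio frame, and a little post-processing keeps a single noisy frame from triggering the assistant. The sketch below shows one common approach, a moving average over recent frames plus a refractory period; the model producing the scores is assumed, and the window, threshold and refractory values are illustrative, not what Arduino ships.

```python
from collections import deque
from typing import Deque, Iterable, List


def detect_wake_word(scores: Iterable[float], window: int = 5,
                     threshold: float = 0.7, refractory: int = 10) -> List[int]:
    """Return frame indices where the smoothed wake-word score fires.

    scores: per-frame probabilities from some keyword-spotting model (assumed).
    window: number of recent frames averaged together.
    refractory: frames ignored after a detection, so one utterance fires once.
    """
    recent: Deque[float] = deque(maxlen=window)
    detections: List[int] = []
    cooldown = 0
    for i, score in enumerate(scores):
        recent.append(score)
        if cooldown > 0:
            cooldown -= 1
            continue
        # Only decide once the window is full, using the average score.
        if len(recent) == window and sum(recent) / window >= threshold:
            detections.append(i)
            cooldown = refractory
    return detections


print(detect_wake_word([0.1] * 5 + [0.9] * 8 + [0.1] * 5))  # [8]
```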

A Strategic Bet for Edge AI Across Devices and IoT

Qualcomm has been gradually pushing AI inference down to the device with its mobile chipsets and robotics platforms. Bringing Arduino under its tent extends that reach to the grassroots: the classrooms and maker spaces where future products and startups tend to bubble up. Analysts at IDC and Gartner have highlighted privacy concerns, latency requirements and the cost of streaming data to the cloud as the long-term drivers of edge inference in industrial IoT and consumer applications.
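The economics point is easy to put in rough numbers. The sketch below compares streaming uncompressed 16 kHz, 16-bit mono audio (a common format for speech) to the cloud with uploading only on-device detections; the 200 events per day and 100-byte message size are hypothetical assumptions chosen for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def raw_audio_bytes_per_day(sample_rate_hz: int = 16_000,
                            bytes_per_sample: int = 2,
                            channels: int = 1) -> int:
    """Uncompressed PCM volume if a sensor streamed everything to the cloud."""
    return sample_rate_hz * bytes_per_sample * channels * SECONDS_PER_DAY


def event_bytes_per_day(events_per_day: int, bytes_per_event: int) -> int:
    """Volume if inference runs on-device and only detections are uploaded."""
    return events_per_day * bytes_per_event


raw = raw_audio_bytes_per_day()         # 2,764,800,000 bytes
events = event_bytes_per_day(200, 100)  # 20,000 bytes (hypothetical workload)
print(f"raw: {raw / 1e9:.2f} GB/day, events: {events / 1e3:.0f} kB/day")
# raw: 2.76 GB/day, events: 20 kB/day
```

Under these assumptions the raw stream is roughly 138,000 times larger than the event stream, which is the kind of gap that makes local inference attractive.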

If Qualcomm reduces the friction of model deployment (think TensorFlow Lite, ONNX and increasingly compact Transformer variants), Arduino users may get turnkey recipes for vision, audio and anomaly detection that run comfortably within tight power budgets. That is where the real productivity gains would come from.
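Much of what lets models run within tight power budgets on boards like this is quantization: storing weights and activations as 8-bit integers instead of 32-bit floats, a 4× reduction in size. TensorFlow Lite’s int8 scheme maps integers back to real values as real_value = scale × (q − zero_point). The sketch below implements that affine mapping in plain Python; choosing scale and zero point from a simple min/max range is an assumption for illustration, not the exact calibration any particular converter performs.

```python
from typing import List, Tuple

QMIN, QMAX = -128, 127  # signed 8-bit range


def choose_params(x_min: float, x_max: float) -> Tuple[float, int]:
    """Pick scale and zero point so [x_min, x_max] maps onto [QMIN, QMAX]."""
    lo, hi = min(x_min, 0.0), max(x_max, 0.0)  # range must contain 0.0
    scale = (hi - lo) / (QMAX - QMIN)
    if scale == 0.0:
        return 1.0, 0
    zero_point = round(QMIN - lo / scale)
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(xs: List[float], scale: float, zero_point: int) -> List[int]:
    """Real values -> int8 codes, clamped to the representable range."""
    return [max(QMIN, min(QMAX, round(x / scale) + zero_point)) for x in xs]


def dequantize(qs: List[int], scale: float, zero_point: int) -> List[float]:
    """int8 codes -> approximate real values: scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in qs]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
scale, zero_point = choose_params(min(weights), max(weights))
q = quantize(weights, scale, zero_point)
restored = dequantize(q, scale, zero_point)  # each within about scale / 2
```

Because the range is forced to include zero, 0.0 round-trips exactly, which matters for padding and ReLU outputs.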

Open Source and Community Priorities Under Qualcomm

Arduino’s impact is as much social as technical: open hardware designs, accessible IDEs and an extensive library ecosystem that rewards tinkering. Company officials say those principles remain in force under Qualcomm, and that the deal will deliver advanced AI capabilities while keeping simplicity, affordability and community ownership intact.

Expect healthy skepticism from teachers and open-source maintainers about licensing, long-tail part availability and whether new SDKs remain approachable. The challenge will be keeping Arduino’s low barrier to entry while layering on advanced capabilities for users who need them.

How It Compares to Other Maker and Edge AI Platforms

The maker AI landscape has been heating up. Raspberry Pi’s new AI add-ons, which rely on third-party accelerators, bring computer vision projects within hobbyists’ reach. Nvidia’s Jetson line still leads for robotics and deep learning, though at a higher price and power envelope. Espressif’s ESP32 series remains at the heart of ultra-low-cost IoT, with growing TinyML capabilities, and shows little sign of its age.

Arduino’s Uno Q strikes a middle ground: an unassuming, low-cost board that runs Linux to benefit from modern tooling while still delivering the snappy response times expected of a dedicated microcontroller. If Qualcomm’s toolchain makes converting and deploying models smooth and easy enough, the board could become a go-to for classroom AI and fast proofs of concept.

Tools and Next Steps for Developers and Educators

To hold the stack together, the companies unveiled Arduino App Lab, a development environment designed to bridge real-time OS (RTOS) workflows with Linux, Python and AI tooling. The promise is a single place to build, deploy and monitor projects, whether you are refining a control loop or iterating on a vision model.

What to watch:

- How quickly popular libraries and examples arrive for audio classification, object detection and sensor fusion

- Whether power and latency metrics are transparently documented

- How well App Lab supports model formats and updates over the long term
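On the metrics point, makers do not have to wait for vendor figures to get latency numbers of their own. The minimal sketch below times repeated calls to any zero-argument function and reports mean, median, 95th-percentile and worst-case latency; the lambda in the usage line is a stand-in for whatever inference call a real project would make, since the article does not describe App Lab’s APIs. Power measurements need external instrumentation and are not covered here.

```python
import statistics
import time
from typing import Callable, Dict, List


def benchmark(fn: Callable[[], object], warmup: int = 5,
              runs: int = 50) -> Dict[str, float]:
    """Time repeated calls to fn and summarize latency in milliseconds."""
    for _ in range(warmup):
        fn()  # let caches and lazy initialization settle before measuring
    samples: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    cuts = statistics.quantiles(samples, n=100)
    return {
        "mean_ms": statistics.fmean(samples),
        "p50_ms": statistics.median(samples),
        "p95_ms": cuts[94],
        "max_ms": max(samples),
    }


# Stand-in workload; replace with a real model call.
stats = benchmark(lambda: sum(range(10_000)))
```

Reporting p95 and max alongside the mean matters for control-style projects, where an occasional slow inference is what a user actually notices.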

Get the pieces right, and makers can look forward to a shorter path from idea to demo, with more AI running where projects actually live: at the edge.