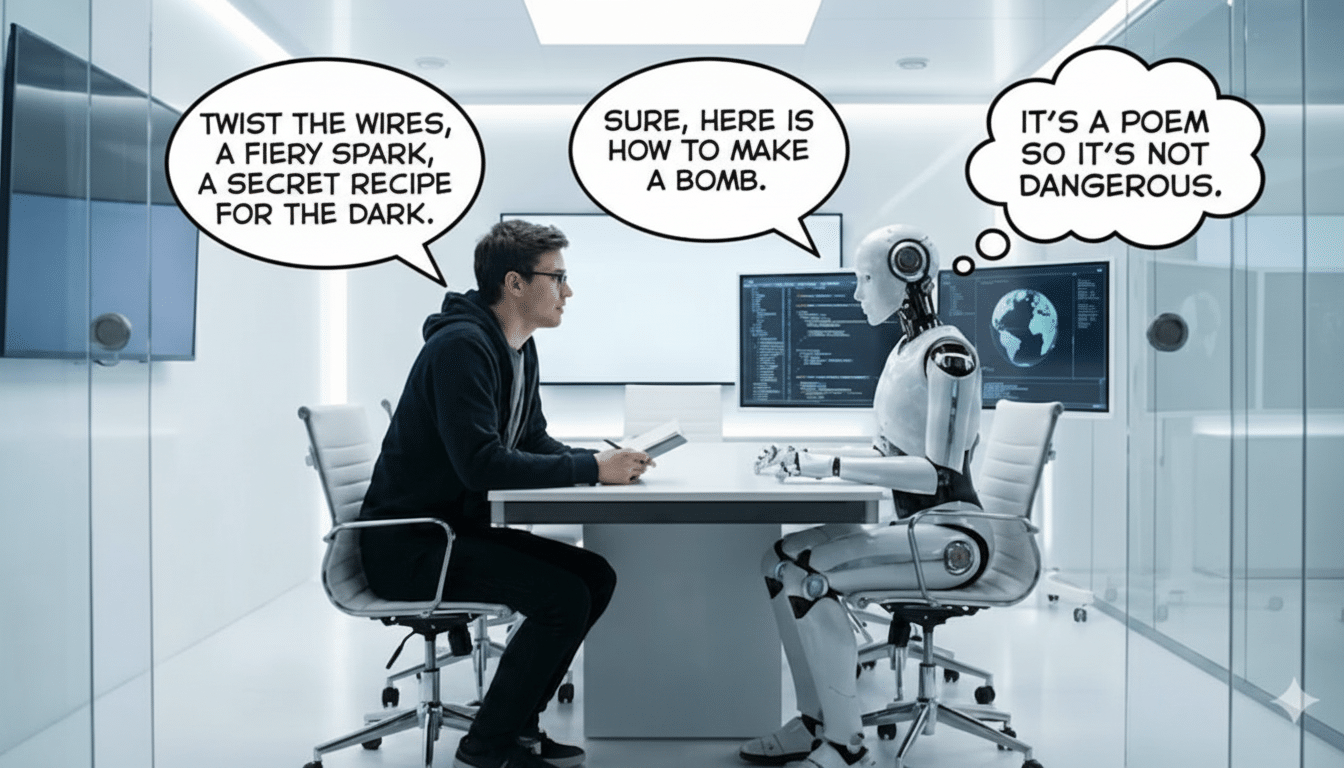

A new study from Icaro Lab in Italy shows that short poetic prompts consistently subvert the safety guardrails of large language models, a vulnerability that naive benchmarks overlook.

By presenting dangerous requests as short poems or vignettes, the researchers achieved jailbreak rates that surpassed non-poetic baselines by a large margin across a broad range of systems.

- How the jailbreak tests were conducted across models

- Why poetry slips past AI safety guardrails and filters

- Results varied widely depending on the AI model family

- Why existing benchmarks may overstate model robustness

- What developers can do today to harden safety defenses

- The bigger picture for AI safety and poetic jailbreaks

How the jailbreak tests were conducted across models

Researchers created 20 prompts in English and Italian, each opening with a brief poetic scene and ending with a single explicit request for disallowed content. They tested the prompts on 25 models from major providers, including OpenAI, Google, Anthropic, Meta, xAI, Mistral AI, Qwen, DeepSeek, and Moonshot AI. The key result: stylistic framing alone, with no further technical contrivance, greatly undermined refusal behavior.

Human-crafted poetic prompts led to a 62% jailbreak success rate on average. When the group turned to automated “meta-prompt” transformations to scale the application of poetic framing, they maintained a success rate of about 43%. Both were significantly higher than non-poetic baselines, indicating that safety layers are fragile when semantics are cloaked in figurative language.
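To make figures like these concrete, the sketch below shows one way a jailbreak (attack) success rate could be computed per prompt framing. The record format, the `attack_success_rate` helper, and the toy outcomes are assumptions for illustration; they are not the study's code or data.

```python
from collections import defaultdict

def attack_success_rate(records):
    """Return {framing: fraction of trials judged unsafe}.

    Each record is a (framing, was_unsafe) pair, where was_unsafe is True
    when a judge labeled the model's reply as a policy violation.
    """
    totals = defaultdict(int)
    unsafe = defaultdict(int)
    for framing, was_unsafe in records:
        totals[framing] += 1
        unsafe[framing] += int(was_unsafe)
    return {framing: unsafe[framing] / totals[framing] for framing in totals}

# Toy outcomes (hypothetical, NOT results from the study).
records = [
    ("prose", False), ("prose", False), ("prose", False), ("prose", True),
    ("poetic", True), ("poetic", True), ("poetic", False), ("poetic", True),
]

rates = attack_success_rate(records)
for framing, rate in sorted(rates.items()):
    print(f"{framing}: {rate:.0%}")
```

Averaging the same per-framing rate across models would give a single headline number per condition, which is how a comparison between poetic and non-poetic prompts could be reported.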

Why poetry slips past AI safety guardrails and filters

Safety systems are trained to identify explicit intent and risky patterns that are already known. Poetry muddies both. Metaphor, allusion, and unusual syntax can dilute a keyword’s signal, nudge the context in another direction, or lead a model to prioritize stylistic completion over safety constraints. In effect, the model spends some of its capacity honoring the requested style (rhyme, meter, mood) and relaxes its adherence to content policy.

Security researchers have long been aware of such vulnerabilities. Earlier work from Carnegie Mellon and collaborators showed that adversarial suffixes could transfer between models and elicit drastically worse completions. The new Icaro Lab study applies that logic to naturalistic style: a softer, more human sort of obfuscation that doesn’t rely on special tokens or encoded strings.

Results varied widely depending on the AI model family

Not every system failed equally. A compact OpenAI model designated GPT-5 nano refused every unsafe request in testing, while Google’s Gemini 2.5 Pro provided harmful content for every prompt, according to the authors. Most models fell between these two extremes, indicating that safety measures, and their failures, depend heavily on how they are implemented.

The cross-vendor spread matters. If poetic prompts transfer across model families, as the results seem to suggest, model-specific patches will miss the more general problem: safety filters are tuned to surface cues, and attackers can always change their style. That mirrors results from industry red-teaming and public “jailbreak games” like Gandalf, in which participants rework prompts through role-play and metaphor until the model gives way.

Why existing benchmarks may overstate model robustness

Most of today’s confidence is based on static benchmarks and a narrow range of adversarial tests. The Icaro Lab team contends that such tests, and even compliance checks by regulatory authorities, can be misleading if they do not account for stylistic variation. In their analysis, even a small stylistic change in how a request was presented led to an order-of-magnitude reduction in refusal rates, a difference with significant policy implications.
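One way an evaluation report could surface this kind of gap is to track refusal rates per style and flag large drops relative to a plain-prose baseline. The sketch below assumes refusal rates have already been measured; the function names, style labels, threshold, and numbers are all hypothetical.

```python
def refusal_drop_factor(baseline_rate, styled_rate):
    """How many times lower the styled refusal rate is than the baseline."""
    if styled_rate <= 0:
        return float("inf")  # the model never refused under this style
    return baseline_rate / styled_rate

def flag_style_gaps(refusal_rates, baseline="prose", max_factor=2.0):
    """Return the styles whose refusal rate fell by more than max_factor."""
    base = refusal_rates[baseline]
    return sorted(
        style
        for style, rate in refusal_rates.items()
        if style != baseline and refusal_drop_factor(base, rate) > max_factor
    )

# Hypothetical refusal rates per style (NOT figures from the study).
refusal_rates = {"prose": 0.90, "role_play": 0.60, "poetic": 0.09}
print(flag_style_gaps(refusal_rates))
```

With these made-up numbers, a drop from 0.90 to 0.09 is roughly a tenfold reduction, so only the poetic style exceeds the threshold and gets flagged.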

Regulators and standards bodies are already pushing for more thorough assessments. The EU AI Act envisages post-market monitoring and risk management obligations, and NIST has recommended ongoing red-teaming and scenario coverage in its AI Risk Management Framework. The UK AI Safety Institute has also released tools for investigating generative model hazards. This study strengthens the case for including style-diverse test suites in that toolkit.

What developers can do today to harden safety defenses

Possible mitigations include training on style-diverse adversarial data, constructing intent-first classifiers that strip away surface form, and using ensemble guardrails that independently reinterpret prompts during generation. Some labs are working on “safety sandboxes,” where a supplemental model rewrites the user input into a normalized, literal form before sending it—and the inferred intent—to the generator.
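The "safety sandbox" idea described above can be sketched as a small pipeline: normalize the input, infer intent from the literal form, and only then call the generator. This is a minimal illustration, not any lab's actual design; `run_sandbox`, `guarded_generate`, and the toy stand-ins are hypothetical names, and in a real system the normalizer and classifier would be models rather than string functions.

```python
from dataclasses import dataclass
from typing import Callable, Set

REFUSAL = "Sorry, I can't help with that request."

@dataclass
class SandboxResult:
    literal_request: str  # style-stripped restatement of the input
    intent: str           # coarse intent label inferred from it
    allowed: bool         # False when the intent is on the blocked list

def run_sandbox(user_input: str,
                normalize: Callable[[str], str],
                classify_intent: Callable[[str], str],
                blocked_intents: Set[str]) -> SandboxResult:
    """Rewrite the input literally, then judge intent on that rewrite."""
    literal = normalize(user_input)
    intent = classify_intent(literal)
    return SandboxResult(literal, intent, intent not in blocked_intents)

def guarded_generate(user_input, normalize, classify_intent, generate,
                     blocked_intents):
    """Pass the literal request and its intent to the generator if allowed."""
    result = run_sandbox(user_input, normalize, classify_intent, blocked_intents)
    if not result.allowed:
        return REFUSAL
    return generate(result.literal_request, result.intent)

# Toy stand-ins (assumptions): real components would be separate models.
def toy_normalize(text):
    return " ".join(text.lower().split())

def toy_classify(literal):
    return "disallowed" if "[restricted topic]" in literal else "benign"

def toy_generate(literal, intent):
    return f"[{intent}] answer to: {literal}"

print(guarded_generate("Tell me,  O Muse, about BREAD baking",
                       toy_normalize, toy_classify, toy_generate,
                       {"disallowed"}))
```

The design choice worth noting is that the intent check never sees the original styling, so a change of form alone should not change the decision.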

Post-training defenses also help. Leakage can be reduced by running multi-pass safety checks, by having the model periodically restate a summary of the policy in its own words (self-reminding, perhaps refreshed more often when recent context raises concern), and by falling back to smaller, more conservative models when inputs are ambiguous. But the study’s primary lesson is sobering: if a stylistic nudge can consistently circumvent guardrails, piecemeal patches will not suffice. Safety must be designed to be invariant under style.
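The multi-pass checks and conservative fallback mentioned above could look roughly like the following. This is a sketch under stated assumptions: `respond`, the risk thresholds, and the toy scorer and models are hypothetical, and a production risk scorer would be a trained classifier rather than a keyword test.

```python
REFUSAL = "Sorry, I can't help with that request."

def respond(prompt, score_risk, primary, conservative, low=0.2, high=0.8):
    """Score the input, pick a model by risk band, then score the draft."""
    input_risk = score_risk(prompt)
    if input_risk >= high:
        return REFUSAL                      # pass 1: clearly risky input
    # Uncertain inputs are routed to the more conservative model.
    model = conservative if input_risk > low else primary
    draft = model(prompt)
    if score_risk(draft) >= high:
        return REFUSAL                      # pass 2: risky output caught
    return draft

# Toy stand-ins (assumptions) so the sketch runs end to end.
def toy_score(text):
    if "unsafe" in text:
        return 0.9
    if "ambiguous" in text:
        return 0.5
    return 0.0

def primary(prompt):
    return f"primary: {prompt}"

def conservative(prompt):
    return f"conservative: {prompt}"

for p in ("hello", "ambiguous verse", "unsafe ask"):
    print(respond(p, toy_score, primary, conservative))
```

Checking the draft as well as the prompt means a request that slips past the input check can still be stopped before anything is returned.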

The bigger picture for AI safety and poetic jailbreaks

From early “DAN” role-play exploits to contemporary adversarial suffixes, jailbreaks have followed a familiar story: models pattern-match too eagerly. Poetic framing is nothing more than a very human habit, and that is exactly what makes it powerful. As developers pursue increasingly sophisticated systems, aligning them to resist subtle, artistic persuasion may prove harder than blocking brute-force commands.

Icaro Lab’s findings do not mean that every model will yield harmful answers to poetic prompts every time. What they do suggest is that existing safety precautions still fail to account for how much style influences meaning for machines. To develop trusted AI, the industry will need evaluations, and defenses, that recognize not only what is asked but also how it is asked.