Pinterest is introducing new feed controls to its app so users can choose how much content comes from humans and how much comes from algorithmic suggestions, the company said in a blog post on Wednesday morning. The company is also making its AI labels more prominent so that people can tell at a glance when a Pin was created or modified by algorithms.

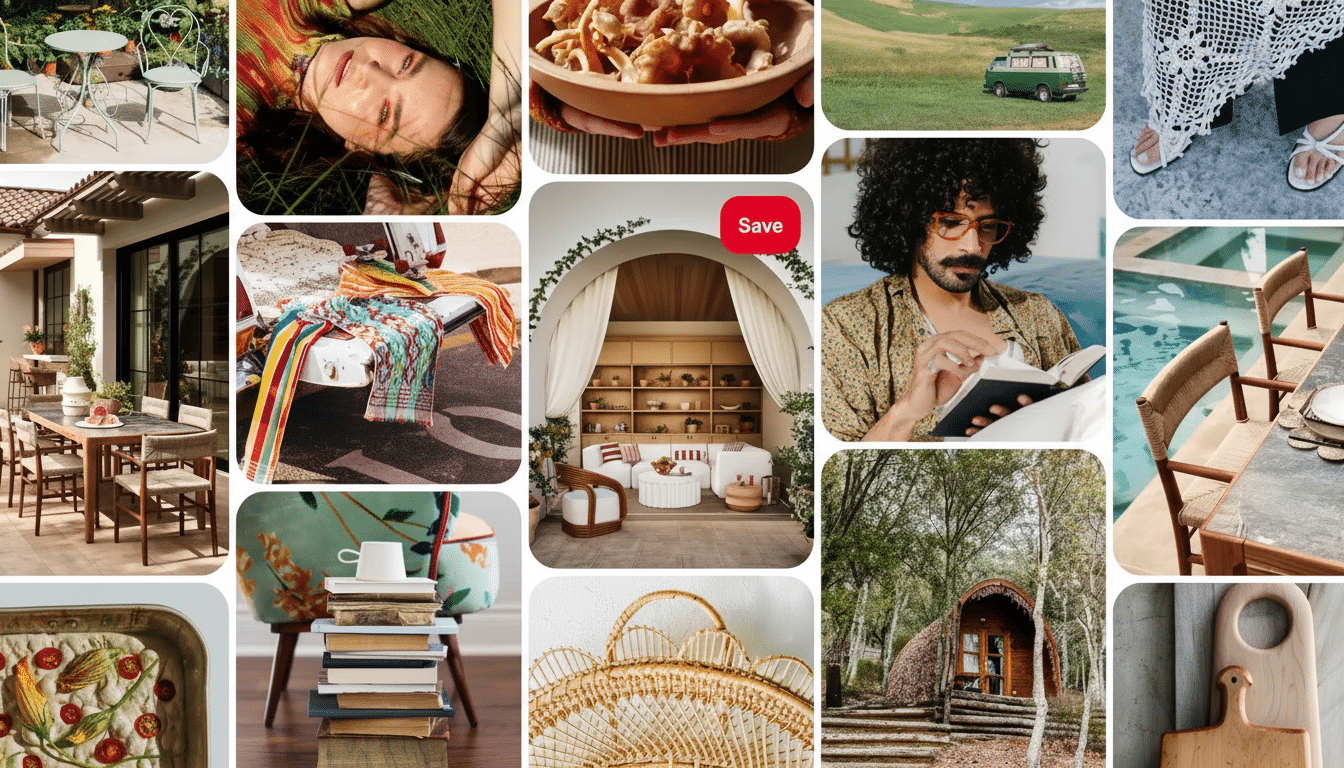

The update initially focuses on categories where synthetic images have thrived (beauty, art, fashion and home décor), with more to come. It debuts on web and Android first, with iOS support to follow soon. The change, which Pinterest positions as a quality and trust move rather than an AI crackdown, speaks to the platform’s larger identity as a planning and inspiration engine that relies on trustworthy real-world signals.

- How the new controls work to refine your Pinterest feed

- Why Pinterest is doing this to balance AI and authenticity

- The limits of detection and the case for user choice

- How it compares to other platforms and their AI labels

- Implications for creators and advertisers

- What to watch next as Pinterest scales AI feed controls

How the new controls work to refine your Pinterest feed

Users can go to Settings, open Refine Your Recommendations and choose “see less” for AI-generated content under specific categories. The preferences can be changed at any time, and Pinterest said it would expand the list of categories based on feedback as well as patterns of abuse that it sees in the wild.

There’s also an in-line feedback tool: tap the three-dot menu on any Pin and flag that you want to see fewer items with generative elements.

That feedback trains the recommendation system behind the scenes to rebalance your feed and cut down on lookalike content.

On labeling, Pinterest already labels content that bears AI-generated metadata or is identified by its classifiers as synthetic or edited. The company will increase the salience of those markers — bigger, clearer badges — so that users won’t have to squint to determine if a given image was machine-manufactured.

Why Pinterest is doing this to balance AI and authenticity

AI-generated images might look cool, but they also distort intent. A fantasy-perfect living room or impossibly shiny hair tutorial can lead astray those of us who use Pinterest to plan real-world purchases. That’s a gap that matters in a business built on trusted inspiration; when viewers no longer trust what they see, conversion rates and advertiser confidence suffer.

Pinterest has referred to academic estimates that suggest a majority of online content may now be generated by artificial intelligence, increasing the onus on discovery platforms to sift out the noise. Separately, a Pew Research Center study shows that a majority of Americans would like to see clear disclosures when AI is used, particularly in visual media. Meeting those expectations is as much a safety play as an engagement strategy.

The company’s chief technology officer, Matt Madrigal, has framed the move as giving people greater control over shaping feeds that reflect their taste, balancing human creativity and AI experimentation rather than discouraging either one outright.

The limits of detection and the case for user choice

Labels only go so far. Metadata can be removed or never inserted, and pure visual detection of synthetic imagery remains an imperfect art, with many false positives and false negatives. That’s why a number of trust and safety teams think of AI labeling as one layer in a stack that also includes provenance standards, policy enforcement and now, user-level controls.

Pinterest’s strategy of relying on provenance where available, through standards like those of the Coalition for Content Provenance and Authenticity and Adobe’s Content Credentials, is pragmatic, but it remains a cat-and-mouse game. By allowing users to scale back some AI-heavy genres, the service also becomes a little less reliant on detection accuracy and makes room for preference-driven personalization.

How it compares to other platforms and their AI labels

Social and video platforms are converging on transparency; they diverge on control. Meta places “Made with AI” labels and supports provenance signals, while YouTube can require creators to disclose synthetic content in a variety of contexts and is able to add viewer notices. TikTok currently requires labels for “synthetic media that could reasonably be perceived as authentic,” and has tested automated detection. A “see less” option is a place to start, but Pinterest’s category-level controls take it one step further, giving users a direct throttle rather than an on/off binary.

Implications for creators and advertisers

It’s not a ban on AI tools. Those who use generative elements for mockups, mood boards or ideation can continue to post, though their reach may differ depending on audience preference and category. The likely effect is to reward authenticity in places where realism counts (recipes, D.I.Y., beauty how-tos) while making space for concept art and speculative designs where audiences are receptive.

For advertisers, the controls might increase signal quality. If users who choose to see less AI also click more on real products, the result is better measurement and fewer wasted impressions.

What to watch next as Pinterest scales AI feed controls

Key success markers will include declines in user reports of AI clutter, improved save and click-through rates on labeled content and fewer misleading Pins within commerce-focused categories. It will also be interesting to see how quickly Pinterest expands these controls beyond the initial set, and whether it introduces a global “see less AI” toggle if there’s enough demand.

With hundreds of millions of monthly users dependent on the platform for planning in the real world, Pinterest is wagering that it can make labeling more transparent and keep its inspiration feeds full without closing the door to AI creativity. In a year when synthetic media is all around us, allowing for nuance could be the most pragmatic form of moderation there is.