AI is stepping off the screen and into the world. After years of dazzling text and image demos, the spotlight has shifted to “physical AI” — systems that sense, reason, and act in real environments. The message echoed across booths and keynotes at CES: the next wave of AI won’t just chat, it will do.

In practical terms, physical AI is the merger of perception, decision-making, and control, running on local hardware rather than in a distant data center. Think cameras and microphones feeding multimodal models, planners translating intent into motion, and actuators closing the loop in milliseconds. It is AI with agency, grounded by sensors and constrained by physics.

- What physical AI actually means for devices and robots

- How you are already using physical AI in daily life

- The data problem and synthetic reality for training robots

- How new chips bring AI brains to devices at the edge

- Privacy guardrails and trust for always-on physical AI

- From hype to hardware: progress and products to watch

What physical AI actually means for devices and robots

Traditional automation follows fixed scripts. Physical AI runs a continuous feedback loop: it builds a “world model” from vision, audio, depth, and inertial data; reasons about goals and safety; and then takes action — whether that’s a robot grasping an object or eyewear guiding your next step. Researchers at MIT, Stanford, and the Toyota Research Institute call this shift “embodied intelligence,” where learning is shaped by interaction, not just internet text.
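The feedback loop described above can be sketched in a few lines of Python. This is a toy illustration, not any vendor's control stack: the one-dimensional "robot", the `WorldModel` class, and the `sense`, `plan`, and `run_loop` functions are all hypothetical names invented for this example, and the sensor is assumed to be noise-free.

```python
from dataclasses import dataclass


@dataclass
class WorldModel:
    """Hypothetical, minimal world model: where we think we are and where we want to be."""
    position: float  # estimated position in metres
    target: float    # goal position in metres


def sense(true_position: float) -> float:
    """Stand-in for a sensor reading (assumed noise-free for this sketch)."""
    return true_position


def plan(model: WorldModel, max_step: float = 0.5) -> float:
    """Choose a motion command, clamped to a safety limit on step size."""
    error = model.target - model.position
    return max(-max_step, min(max_step, error))


def run_loop(start: float, target: float, max_iters: int = 100,
             tolerance: float = 1e-3) -> tuple[float, int]:
    """Repeat sense -> update model -> plan -> act until the goal is reached."""
    true_position = start
    model = WorldModel(position=start, target=target)
    steps = 0
    for _ in range(max_iters):
        model.position = sense(true_position)       # perceive
        if abs(model.target - model.position) <= tolerance:
            break                                    # goal reached
        command = plan(model)                        # reason, with a safety clamp
        true_position += command                     # act
        steps += 1
    return true_position, steps


if __name__ == "__main__":
    final_position, steps_taken = run_loop(start=0.0, target=2.0)
    print(final_position, steps_taken)
```

A fixed script would issue the same commands regardless of what the sensors report. Here each command depends on the latest reading, which is the distinction the paragraph above draws.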

It’s not only about humanoids. Warehouse arms that learn new parts, delivery bots that navigate curb cuts, and home devices that adapt to clutter are all physical AI when they perceive context and adjust behavior on the fly.

How you are already using physical AI in daily life

Look around: robot vacuums map your floors with SLAM (simultaneous localization and mapping), doorbells run on-device person detection, cars steer with advanced driver assistance, and smart glasses pair cameras and microphones with AI copilots. Products from Meta’s Ray-Ban line to enterprise AR headsets now fuse sensors with on-device models to summarize what you see and hear, hands-free.

Edge chips from Qualcomm, Apple, Google, Intel, and NVIDIA quietly power this shift. Gartner has projected that by 2025 roughly 75% of enterprise-generated data will be created and processed outside a traditional centralized data center or cloud, underscoring why latency-sensitive, privacy-preserving AI is moving onto devices.

The data problem and synthetic reality for training robots

Training robots is hard because high-quality “physical” data is scarce and risky to collect. You can scrape the web for text, but you cannot safely learn lane changes by trial-and-error on a freeway. That is why teams lean on simulation, digital twins, and synthetic data to bootstrap models before limited, carefully supervised real-world practice.

NVIDIA’s Omniverse and Isaac Sim, used by robotics startups and industrial firms alike, generate photorealistic scenes with accurate physics to teach perception and manipulation. Autonomous driving programs at companies such as Waymo and Cruise blend billions of simulated miles with targeted real-world driving to cover edge cases. Toyota Research Institute and ETH Zurich publish techniques that transfer skills from sim to reality with far fewer dangerous experiments.
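One common way to produce varied synthetic training scenes is to randomize scene parameters for every sample, often called domain randomization. The article does not name this technique, and the sketch below does not use Omniverse or Isaac Sim APIs. The `SceneParams` fields, their ranges, and the `sample_scene` and `generate_dataset` helpers are assumptions made purely for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneParams:
    """Hypothetical parameters a simulator might expose for one training scene."""
    light_intensity: float  # relative brightness, 0.2 (dim) to 1.0 (bright)
    friction: float         # surface friction coefficient
    object_x: float         # object position in metres
    object_y: float


def sample_scene(rng: random.Random) -> SceneParams:
    """Draw one randomized scene; the ranges are illustrative, not calibrated."""
    return SceneParams(
        light_intensity=rng.uniform(0.2, 1.0),
        friction=rng.uniform(0.3, 0.9),
        object_x=rng.uniform(-0.5, 0.5),
        object_y=rng.uniform(-0.5, 0.5),
    )


def generate_dataset(n: int, seed: int = 0) -> list[SceneParams]:
    """Generate n scenes from a seeded generator so runs are reproducible."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(n)]


if __name__ == "__main__":
    for scene in generate_dataset(3, seed=42):
        print(scene)
```

The idea is that a model trained across many lighting, friction, and placement variations is less likely to overfit to one simulated setup, which may ease the transfer from simulation to real hardware.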

How new chips bring AI brains to devices at the edge

Physical AI depends on efficient compute near the sensors. Modern neural processing units (NPUs) deliver tens to hundreds of TOPS (trillions of operations per second) while sipping power, enabling real-time vision-language models, speech assistants, and motion planners to run locally. Running locally removes the cloud round trip, can bring response times under 100 ms, and keeps sensitive context on the device.
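A back-of-the-envelope calculation shows why removing the network hop matters. The sketch below is a rough model under stated assumptions: the operation count, the 40 TOPS rating, the 25% utilization factor, and the 80 ms round-trip time are all hypothetical numbers chosen for illustration, and `inference_ms` and `end_to_end_ms` are helper names invented here.

```python
def inference_ms(ops_per_inference: float, tops: float,
                 utilization: float = 0.25) -> float:
    """Estimate inference time in milliseconds.

    tops is the chip's peak rating in trillions of operations per second;
    utilization is the assumed fraction of that peak actually achieved.
    """
    if tops <= 0 or not 0 < utilization <= 1:
        raise ValueError("tops must be positive and utilization in (0, 1]")
    effective_ops_per_second = tops * 1e12 * utilization
    return ops_per_inference / effective_ops_per_second * 1000.0


def end_to_end_ms(sensor_ms: float, compute_ms: float,
                  network_rtt_ms: float = 0.0, actuation_ms: float = 0.0) -> float:
    """Sum the stages of one sense-to-act cycle."""
    return sensor_ms + compute_ms + network_rtt_ms + actuation_ms


if __name__ == "__main__":
    # Hypothetical model needing 2e11 operations per inference on a 40 TOPS NPU.
    compute = inference_ms(ops_per_inference=2e11, tops=40)
    on_device = end_to_end_ms(sensor_ms=10, compute_ms=compute, actuation_ms=5)
    via_cloud = end_to_end_ms(sensor_ms=10, compute_ms=compute,
                              network_rtt_ms=80, actuation_ms=5)
    print(f"on-device: {on_device:.0f} ms, via cloud: {via_cloud:.0f} ms")
```

With these made-up inputs the on-device path lands around 35 ms and the cloud path around 115 ms, even if the cloud compute were just as fast. Real figures depend heavily on the model, the chip, and the network.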

Energy matters, too. The International Energy Agency estimates data centers account for roughly 1% to 1.5% of global electricity use. Offloading routine inference to the edge reduces bandwidth and helps keep AI’s energy footprint sustainable as adoption scales. Expect more heterogeneous designs (CPUs, GPUs, NPUs, and tiny microcontrollers) orchestrated by software stacks that schedule workloads for efficiency and safety.

Privacy guardrails and trust for always-on physical AI

Physical AI observes intimate contexts: your home, your commute, your voice. Trust hinges on guardrails. On-device processing by default, explicit opt-in for sharing, strong encryption, and techniques like federated learning and differential privacy can preserve utility while protecting identity. NIST’s AI Risk Management Framework and the EU AI Act outline expectations on robustness, transparency, and human oversight; ISO/IEC 42001 sets management requirements for responsible AI operations.
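To make one of these techniques concrete, here is a minimal sketch of the averaging step at the heart of federated learning: each device trains locally and shares only model weights, which a server combines in proportion to how much data each device saw. The `federated_average` function and the plain-list weight format are assumptions for illustration. Production systems add secure aggregation, clipping, and often differential-privacy noise, none of which is shown here.

```python
def federated_average(
    client_updates: list[tuple[list[float], int]]
) -> list[float]:
    """Combine client model weights into one weighted average.

    Each entry is (weights, num_examples): the locally trained weights and
    the number of local examples behind them. Raw data never leaves the client.
    """
    total_examples = sum(n for _, n in client_updates)
    if total_examples <= 0:
        raise ValueError("need at least one client with examples")

    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            # Clients with more data contribute proportionally more.
            averaged[i] += w * n / total_examples
    return averaged


if __name__ == "__main__":
    updates = [
        ([1.0, 2.0], 1),  # device A: 1 local example
        ([3.0, 4.0], 3),  # device B: 3 local examples
    ]
    print(federated_average(updates))
```

The server in this sketch sees only weight vectors and example counts, which is what lets the raw camera and microphone data stay on the device.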

Vendors that anonymize and aggregate sensor data, and prove it via audits, will have an advantage. Clearly visible recording indicators on glasses and pins, plus local redaction of bystanders, are quickly becoming table stakes.

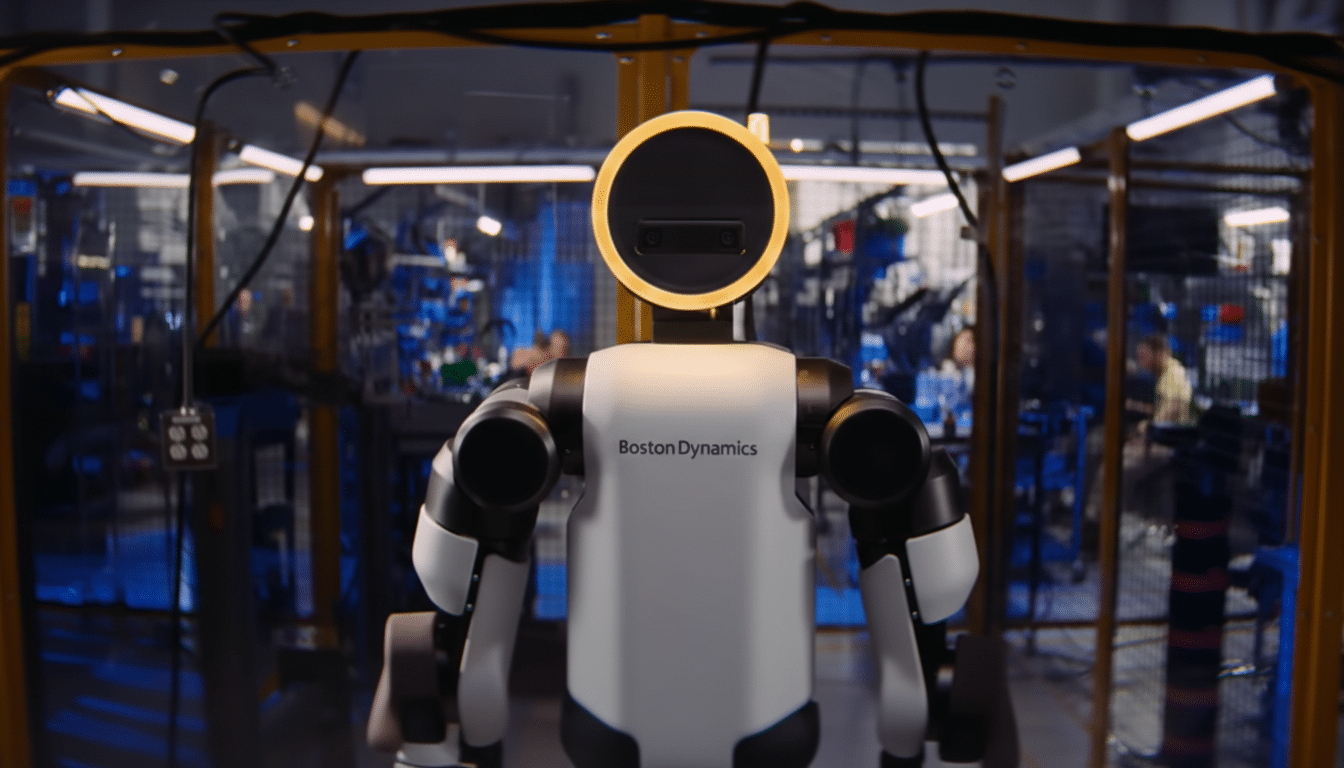

From hype to hardware: progress and products to watch

Progress is accelerating. Boston Dynamics unveiled a new electric Atlas to push manipulation research. Agility Robotics’ Digit is piloting in logistics. John Deere ships autonomous capabilities for precision agriculture. Zipline’s autonomous drones complete commercial deliveries at scale. And yes, the humanoid race is on, but the near-term wins are assistive systems that work alongside people, not instead of them.

The most interesting twist is cooperation: wearables that capture your viewpoint can teach robots what matters, while robots generate fresh data to improve your assistant. As models learn richer world knowledge and chips get faster at the edge, physical AI won’t feel like a leap — it will feel like everyday tech simply becoming more helpful. The future is already walking around with you.