Live sports have a generational attention problem, and a Canadian startup believes self-driving car technology may hold one solution: games that never have to end.

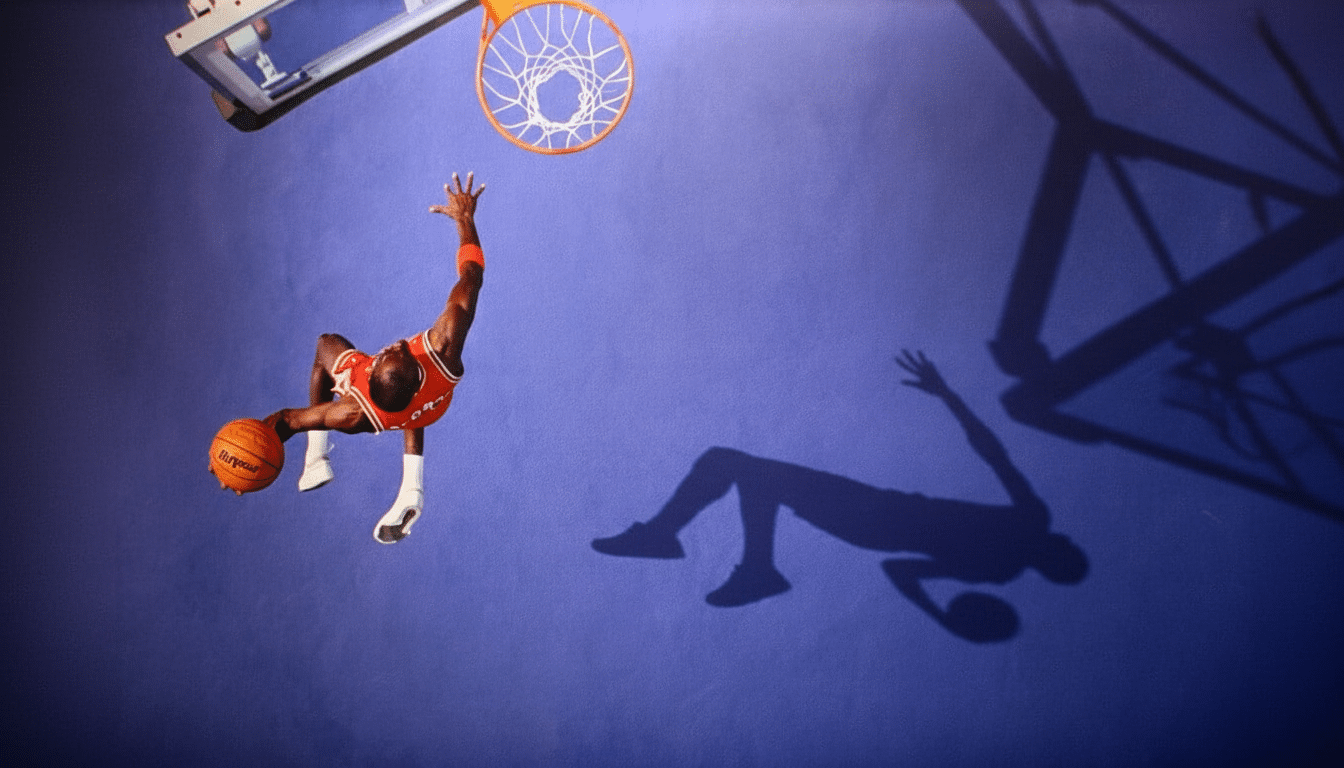

Peripheral Labs is repurposing autonomous-vehicle perception systems to produce photorealistic 3D replays and interactive angles, letting viewers freeze a play, swivel around it, and follow any athlete on the field as though they were inside the action.

How AV Perception Leaps From Roads To Arenas

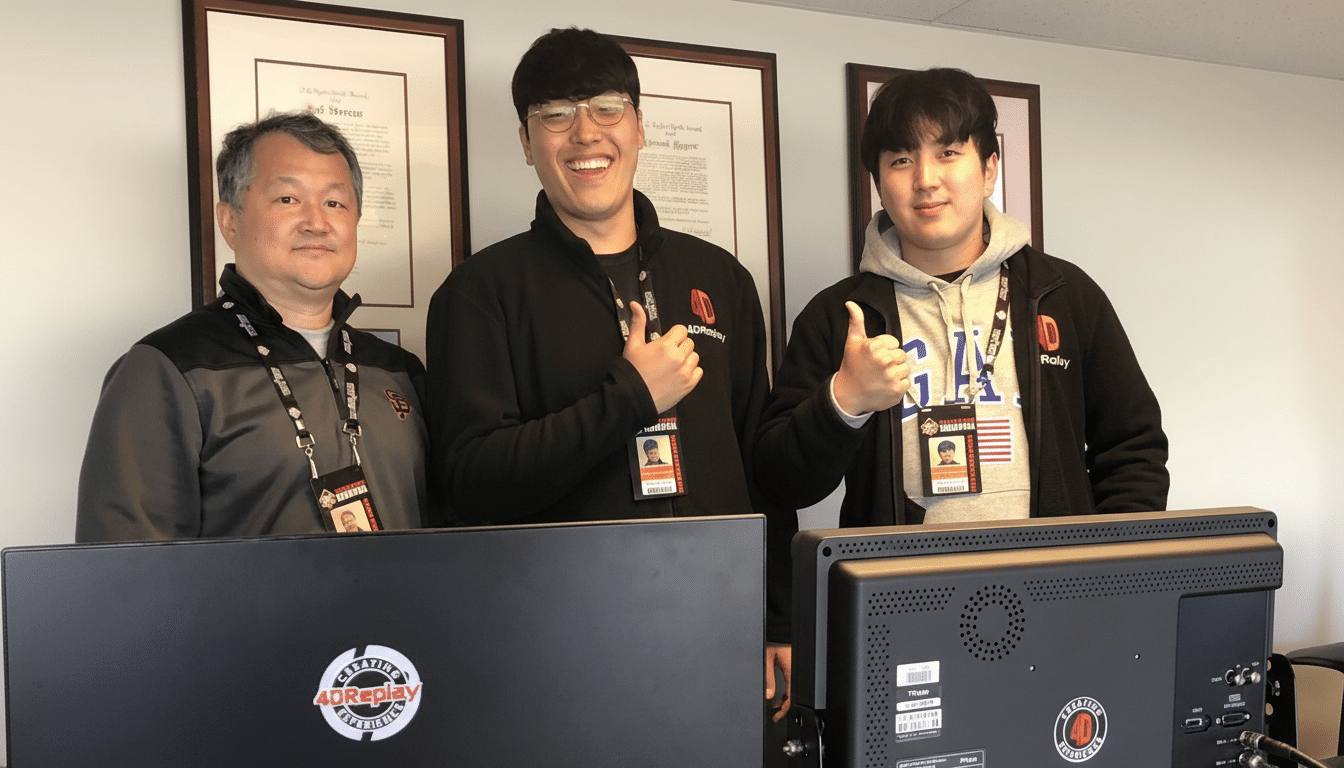

Founded by former University of Toronto autonomous-driving engineers Kelvin Cui and Mustafa Khan, Peripheral Labs applies robotics perception and 3D vision to sports production. Legacy volumetric systems have required stadiums to be kitted out with 100-plus cameras; the company claims it can recreate the action with just 32 off-the-shelf cameras by leveraging the kind of depth-aware models common in AV stacks.

The approach combines synchronized multi-view video with learned depth estimation, multi-view stereo, and neural scene representations to generate a navigable 3D scene in near real time. It is the same family of techniques that autonomous driving uses for object detection, pose estimation, and so-called scene flow, adapted to follow players, the ball, and subtle joint movements rather than vehicles and pedestrians.
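To make the multi-view idea concrete, here is a minimal sketch of classic linear triangulation: recovering one 3D point (say, the ball) from where it appears in several calibrated, synchronized cameras. It illustrates the textbook geometry only and is not Peripheral Labs' pipeline, which the company has not published. The function names, the three-camera layout, and the focal length are all hypothetical.

```python
import numpy as np

def triangulate_point(projections, pixels):
    """Linear (DLT) triangulation of one 3D point from two or more views.

    projections: list of 3x4 camera projection matrices (assumed known).
    pixels: list of (u, v) image coordinates of the same point, one per view.
    """
    rows = []
    for P, (u, v) in zip(projections, pixels):
        # Each view contributes two linear constraints on the homogeneous point.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

def make_camera(focal, center):
    """Hypothetical pinhole camera looking down +Z, positioned at `center`."""
    K = np.array([[focal, 0.0, 0.0],
                  [0.0, focal, 0.0],
                  [0.0, 0.0, 1.0]])
    Rt = np.hstack([np.eye(3), -np.asarray(center, dtype=float).reshape(3, 1)])
    return K @ Rt

def project(P, X):
    """Project a 3D point into a camera and return its pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Three illustrative cameras and one ground-truth point 10 units in front of them.
cameras = [make_camera(1000.0, c) for c in [(-2, 0, 0), (2, 0, 0), (0, 1.5, 0)]]
ball = np.array([0.3, 0.5, 10.0])
pixels = [project(P, ball) for P in cameras]

estimate = triangulate_point(cameras, pixels)
print(estimate)
```

With noise-free observations the estimate matches the original point to numerical precision. A production system would have to cope with noisy detections, lens distortion, and occlusion, which is where learned depth and neural scene representations come in.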

The company hopes to reduce costs and streamline operations for teams and broadcasters alike by cutting bespoke hardware and expensive stadium retrofits. That ambition separates it from previous deployments such as Intel’s True View or Canon’s Free Viewpoint, both of which delivered transformative visuals, but at the steep cost of dense camera arrays and extensive venue integration.

From Cinematic Replays To Coaching-Grade Data

Peripheral Labs’ platform records the game environment as a 3D model that can be manipulated. Broadcasters can “fly” a virtual camera anywhere, freeze a foul to examine it from several directions, or let fans lock on to a single forward or ball carrier and follow them through a sequence. Because the reconstruction is geometry-aware, player occlusions and depth relationships hold up even under extreme zooms and hard reversals, which is key for officiating views and second-screen interactivity.

Under the hood, the same pipeline produces biomechanical readouts: the positions of limbs and joints, and even finger flexion where resolution permits. That unlocks a level of coaching insight usually available only through marker-based motion capture or wearables. Front offices already lean on optical tracking systems such as Second Spectrum and officiating tools from Hawk-Eye; a high-fidelity 3D reconstruction that also captures body mechanics without sensors could become a valuable aid for skill development and injury-risk detection.
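As a rough illustration of what such a readout involves, the sketch below computes the angle at a joint from three reconstructed 3D keypoints, for example hip, knee, and ankle. It assumes the keypoints are already available in a shared coordinate frame. The function name and the sample coordinates are hypothetical, and nothing here reflects how Peripheral Labs actually derives its measurements.

```python
import math

def joint_angle_deg(a, b, c):
    """Angle in degrees at joint b formed by the 3D points a-b-c.

    For a knee, a is the hip, b the knee, and c the ankle;
    180 degrees means the leg is fully straight.
    """
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.hypot(*ba) * math.hypot(*bc)
    if norm == 0:
        raise ValueError("joint coincides with a neighboring keypoint")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))

# Illustrative keypoints in meters (made-up values, not real tracking data).
straight_leg = joint_angle_deg((0, 1.0, 0), (0, 0.5, 0), (0, 0.0, 0))
bent_leg = joint_angle_deg((0, 1.0, 0), (0, 0.0, 0), (1.0, 0.0, 0))
print(straight_leg, bent_leg)
```

Run per frame across a sequence, a measurement like this yields the kind of knee-alignment or ankle-flexion curve a coach could review, provided the underlying keypoints are accurate enough.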

Funding, Team, and Go-To-Market Strategy

The startup has raised a $3.6 million seed round led by Khosla Ventures, with participation from Daybreak Capital, Entrepreneurs First, and Transpose Platform.

The founders have said they are prioritizing investors who can help them develop the product and scale it for commercial use. As they grow the company’s current 10-person engineering team, they plan to channel improvements into latency, resolution, and cost per venue.

Peripheral Labs intends to sell its system on multi-year platform contracts with clubs and leagues, with a focus on keeping the hardware footprint as small as possible at the venue and leaning on its software stack for competitive advantage. The company has not yet named any partners, but says it is in discussions with organizations across North America.

Why Now, and Who Peripheral Labs Competes With

Volumetric video is not a new phenomenon, but two trends have combined to differentiate this moment: rapid advances in AI-based 3D reconstruction and a resurgence of interest in interactive viewing. Surveys conducted by firms like Morning Consult and Deloitte have revealed that younger fans prefer highlights, short-form clips, and customizable experiences, forcing leagues to rethink the standard broadcast. That demand is likely to be met by systems that combine broadcast-quality visuals with game-engine freedom.

Competition is heating up. Arcturus Studios is working on volumetric capture and production tools, while Canon and Sony’s Hawk-Eye are pushing further into replay and officiating technology. Peripheral Labs’ differentiator is its AV-inspired vision stack, which lowers the camera count and extracts granular kinematics on the fly, potentially altering deployment economics.

There are practical challenges, too: compute and bandwidth at the edge, integration with existing broadcast workflows, and careful handling of biometric data. Player unions and leagues have increasingly codified how performance and biometric data can be used, and any system that measures body mechanics will require clear governance and consent frameworks.

What Fans Might See Next From 3D Interactive Replays

Imagine a fan scrubbing back 10 seconds, pivoting to the referee’s view, and tapping an on-screen overlay to follow a midfielder’s off-the-ball run while heat maps and speed bursts appear in real time. A coach might clip a sequence showing ankle flexion and knee alignment on a key plant step, creating teaching tape in minutes. Broadcasters could bundle these features into premium feeds or companion apps that mix cinematic production with game-engine interactivity.

If Peripheral Labs can produce scalable 3D replays with fewer cameras and richer data, it won’t just be a cool toy for producers. It might make live sports feel like a must-watch event again, this time from whatever angle you want.