AI agents are becoming inescapable. They have graduated from lab demos to everyday business tools and are embedding themselves into email, CRMs, ticketing systems, clouds, and data lakes. That convenience comes with an uncomfortable reality: without guardrails, these agents can act, learn, and connect in ways that quickly outstrip traditional security. The OpenID Foundation is now presenting a blueprint that treats agents as first-class digital identities that are role-managed, audited, and conformant to open standards.

Why Agentic AI Shatters the Old Models of Access

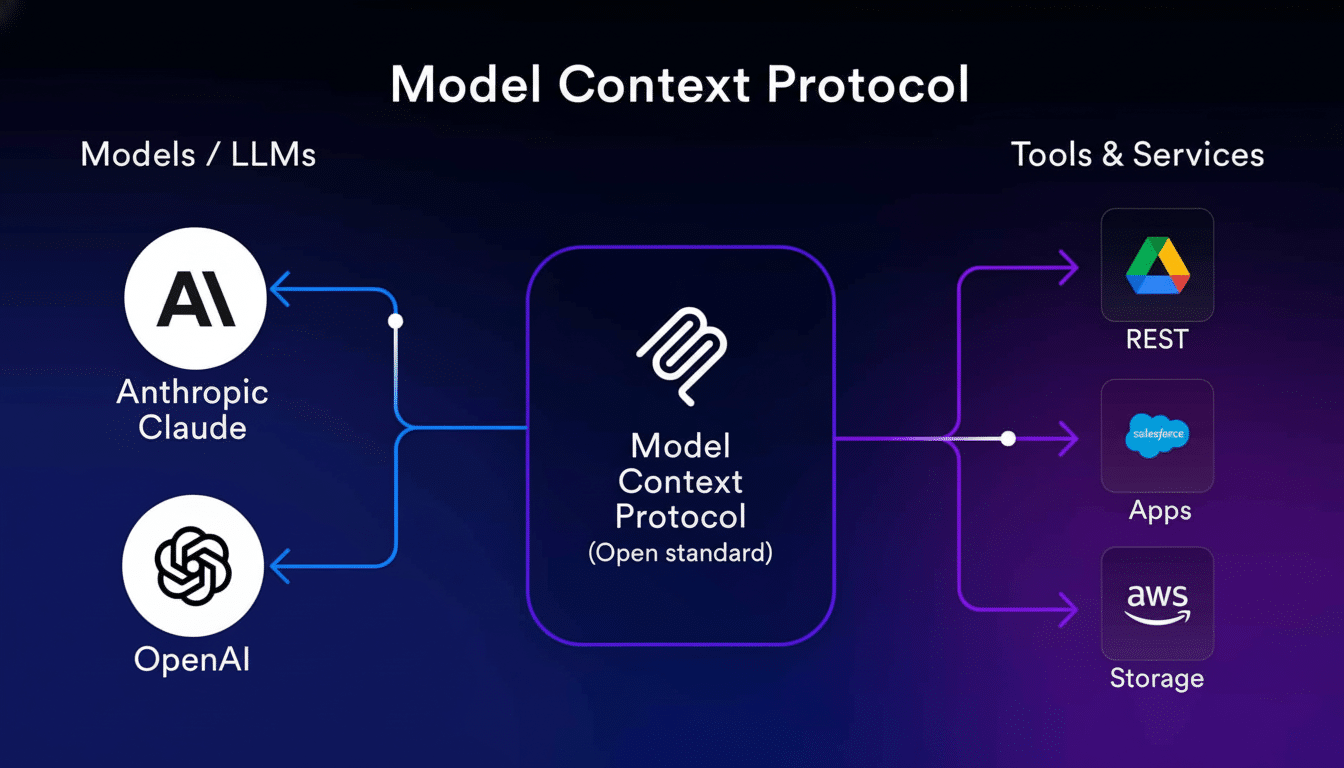

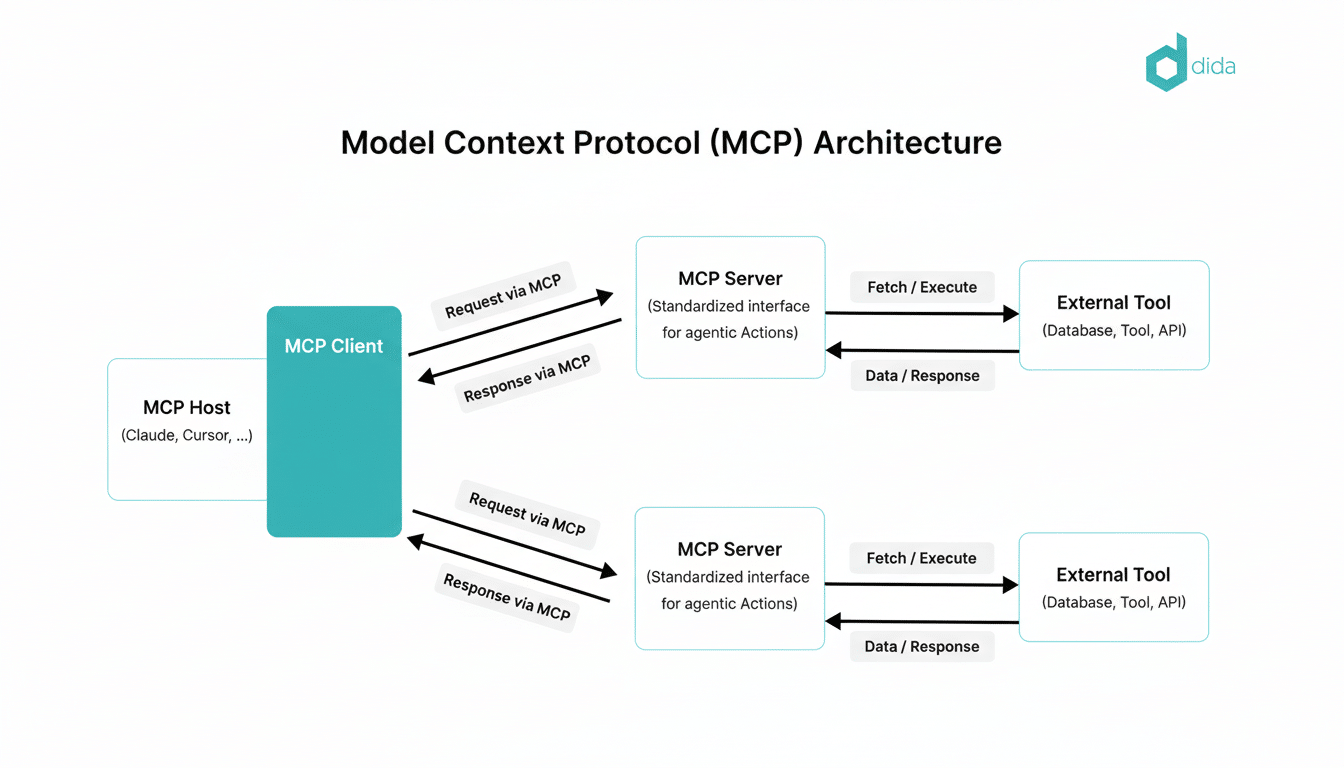

Traditional identity and access management is built around predictable software and human users. Agents are different. They reason over dynamic environments, invoke tools, create new agents, and pursue goals in non-deterministic ways. Standardization efforts such as the Model Context Protocol (an emerging framework that promises to make it easier for models to discover and use tools, data sources, and compute resources) are rapidly lowering the barrier to connecting agents to more systems.

Security teams prize certainty. Agents, by design, introduce uncertainty. British intelligence agency GCHQ and its National Cyber Security Centre have warned that tool-enabled models exacerbate threats such as malicious prompt injection, data exfiltration, and privilege escalation. The average incident, according to the IBM Cost of a Data Breach report, clocks in just shy of five million dollars, and those damages grow when autonomous systems can turn small mistakes into big catastrophes.

The OpenID Foundation Playbook for Securing AI Agents

The OpenID Foundation, which oversees standards including OpenID Connect and the Financial-grade API profile, maintains that agents need the same precautions we apply to humans and services, and then some. That means treating identity, lifecycle, consent, authorization, and telemetry as interoperable building blocks rather than sources of vendor lock-in.

At the core is identity. Give each agent a real identity of its own, not a shared API key. Associate that identity with provenance signals: who built the agent, on which model, and for what purpose. Use standards such as OpenID Connect for federation, and extend OAuth with fine-grained boundaries so tokens reflect intent instead of blanket access. The Foundation’s work points toward profiles such as Rich Authorization Requests, which specify what an agent may do, on which resources, and in what context.
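As a rough illustration of what a fine-grained request could look like, the sketch below builds an `authorization_details` array in the shape described by OAuth Rich Authorization Requests (RFC 9396). The `agent_task` type name, the `purpose` field, and the example URL are assumptions made for illustration; a real deployment would use whatever types its authorization server defines.

```python
import json


def build_authorization_details(resource_url: str, actions: list[str], purpose: str) -> list[dict]:
    """Return an authorization_details array scoped to one resource."""
    return [
        {
            "type": "agent_task",         # hypothetical type name; RAR requires a "type" field
            "locations": [resource_url],  # where the agent may act
            "actions": sorted(actions),   # what it may do there
            "purpose": purpose,           # hypothetical extension field carrying intent
        }
    ]


details = build_authorization_details(
    "https://crm.example.com/tickets", ["read", "comment"], "triage support tickets"
)

# The array travels JSON-encoded in the "authorization_details" request parameter.
request_params = {
    "grant_type": "client_credentials",
    "authorization_details": json.dumps(details),
}
```

The point of the structure is that the resulting token can describe one task on one resource, rather than a broad scope string that covers everything the agent might ever need.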

Making Agents First-Class Identities with Robust Lifecycle Controls

Human-centered workflows already have a mature backbone: onboarding, role changes, entitlements, and off-boarding through SCIM. Agents need a similar lifecycle. The concept is simple: provision an agent with a specific owner, purpose, role, and expiration; rotate its credentials automatically; decommission it properly. Treat “who is this agent?” and “who is responsible for it?” as first-class questions, enforced by policy rather than by ad hoc scripts.
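The lifecycle described above can be sketched as a small record type. This is a minimal illustration, not a SCIM schema: the `AgentRecord` class, its field names, and the `provision` helper are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class AgentRecord:
    agent_id: str
    owner: str                      # the accountable human or team
    purpose: str
    role: str
    expires_at: datetime            # hard end of life for the agent
    credential_issued_at: datetime
    decommissioned: bool = False

    def is_active(self, now: datetime) -> bool:
        """An agent is usable only before expiry and before decommissioning."""
        return not self.decommissioned and now < self.expires_at

    def needs_rotation(self, now: datetime, max_age: timedelta) -> bool:
        """True once the current credential is at least max_age old."""
        return now - self.credential_issued_at >= max_age


def provision(agent_id: str, owner: str, purpose: str, role: str,
              lifetime: timedelta, now: datetime) -> AgentRecord:
    """Create an agent with an owner, a purpose, a role, and an expiration."""
    return AgentRecord(agent_id, owner, purpose, role, now + lifetime, now)


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
agent = provision("agent-42", "alice@example.com", "invoice triage",
                  "finance-reader", timedelta(days=30), now)
```

Making the owner and expiration required fields means an agent cannot be created without answering the two questions above.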

Shared Signals and Events, an eventing model advocated by the Foundation, distributes alerts and status changes across systems. When an agent starts to access unusual datasets or exceed its normal rate limits, the receiving service can limit permissions or require re-authorization. This moves control from static whitelists to live, actionable governance.
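A receiving service might turn such signals into actions along the following lines. This is a simplified sketch that assumes the event has already been received and verified; the `AgentBaseline` type, the action names, and the escalation order are illustrative assumptions rather than anything defined by the Shared Signals specifications.

```python
from dataclasses import dataclass


@dataclass
class AgentBaseline:
    usual_datasets: set[str]
    max_requests_per_minute: int


def react_to_signal(baseline: AgentBaseline, dataset: str, requests_per_minute: int) -> str:
    """Map observed behavior to an action: allow, restrict, reauthorize, or revoke."""
    unusual_dataset = dataset not in baseline.usual_datasets
    over_rate = requests_per_minute > baseline.max_requests_per_minute
    if unusual_dataset and over_rate:
        return "revoke"        # both anomalies at once: cut access
    if unusual_dataset:
        return "reauthorize"   # unfamiliar data: ask for fresh authorization
    if over_rate:
        return "restrict"      # familiar data, unusual volume: limit permissions
    return "allow"


baseline = AgentBaseline(usual_datasets={"tickets", "kb_articles"}, max_requests_per_minute=60)
```

For example, `react_to_signal(baseline, "payroll", 10)` asks for re-authorization, while the same agent reading `tickets` at a normal rate is simply allowed.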

Guardrails at the Coalface of AI Agent Operations

Classical Identity Governance and Administration (IGA) tells you who can access what. Agent guardrails address how that access is used. The OpenID Foundation’s approach augments IGA with runtime controls applied at the exact moment data or tools are invoked. Examples include sanitizing personally identifiable information before it is relayed to a model, constraining the “autonomy budget” for an agent per task, and enforcing least privilege via short-lived tokens bound to purpose.
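Two of these runtime controls can be sketched in a few lines. The example below assumes a deliberately narrow notion of PII (email addresses only) and models the autonomy budget as a simple cap on tool calls per task; the function and class names are hypothetical, and a production redactor would need to cover far more than this one pattern.

```python
import re

# Simplified email pattern, for illustration only.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text: str) -> str:
    """Replace email addresses before the text is relayed to a model."""
    return EMAIL_PATTERN.sub("[REDACTED_EMAIL]", text)


class AutonomyBudget:
    """Caps how many tool calls an agent may make within one task."""

    def __init__(self, max_tool_calls: int) -> None:
        self.remaining = max_tool_calls

    def spend(self) -> bool:
        """Consume one call; return False once the budget is exhausted."""
        if self.remaining <= 0:
            return False
        self.remaining -= 1
        return True


budget = AutonomyBudget(max_tool_calls=2)
prompt = redact_emails("Contact bob@example.com about the refund")
```

Once `spend()` returns `False`, the orchestrator would stop the task or hand it back to a human instead of letting the agent continue.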

Risk signals matter here. Feed model- and tool-level telemetry into policy engines so that an agent calling a finance-sensitive API must demonstrate the user’s consent, present a constrained token, and pass a real-time risk check. Proof-of-possession mechanisms prevent tokens from being replayed by other processes. If something seems awry, the system can demand step-up authentication or end the session.
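The decision logic in this paragraph could be sketched as a single policy function. The `AgentToken` fields, the numeric risk score, and both thresholds are assumptions chosen for illustration; proof-of-possession checks are omitted to keep the example short.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class AgentToken:
    purpose: str           # what the token was issued for
    expires_at: datetime   # short-lived by design
    user_consented: bool   # whether the user approved this use


def decide(token: AgentToken, required_purpose: str, risk_score: float, now: datetime,
           step_up_threshold: float = 0.5, end_threshold: float = 0.8) -> str:
    """Return allow, step_up, end_session, or deny for one API call."""
    if not token.user_consented:
        return "deny"
    if token.purpose != required_purpose or now >= token.expires_at:
        return "deny"
    if risk_score >= end_threshold:
        return "end_session"
    if risk_score >= step_up_threshold:
        return "step_up"
    return "allow"


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
token = AgentToken("pay_invoice", now + timedelta(minutes=5), user_consented=True)
```

Consent and token constraints are checked first, so a risky but otherwise valid call is escalated rather than silently allowed, and an invalid one never reaches the risk check at all.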

Interoperable Controls Trump Proprietary Silos

Open standards are the fulcrum. OAuth profiles for granular consent, OpenID Connect for federation, SCIM for lifecycle management, Shared Signals for continuous evaluation, and verifiable credential formats to attest agent properties make a toolkit that is applicable across vendors. This is crucial as companies mix and match SaaS, clouds, and model providers; governance needs to travel with the agent, not stop at the API gateway.

Complementary frameworks help too. NIST’s AI Risk Management Framework focuses on measurable risk and on accountability. The EU’s AI Act calls for transparency and oversight. Aligning agent identity and authorization with these regimes can speed up audits and reduce the chance that “shadow agents” slowly accrue privileges.

What Leaders Should Do Now to Govern AI Agents

- Inventory your agents just as you inventory your users and service accounts.

- Assign accountable owners.

- Replace shared keys with federated, narrowly scoped tokens.

- Use role-based access to grant just-in-time, need-to-know rights, and set explicit deprovisioning deadlines.

- Capture and inspect agent telemetry, wiring Shared Signals to revoke access automatically when behavior changes.

The payoff from agents is real: productivity, better customer response times, round-the-clock operation. But hope is not a strategy. By making agents first-class identities and putting guardrails in place at the point of action, the OpenID Foundation’s roadmap offers a practical, interoperable way to get the benefits of agentic AI without the chaos.