OpenAI recently unveiled ChatGPT Health, a specialized experience for health and wellness conversations within ChatGPT, a sign of how often people already turn to AI for medical advice. More than 230 million people ask health-related questions on the platform every week, and OpenAI is carving out a dedicated space to field those sensitive discussions with more clearly set boundaries.

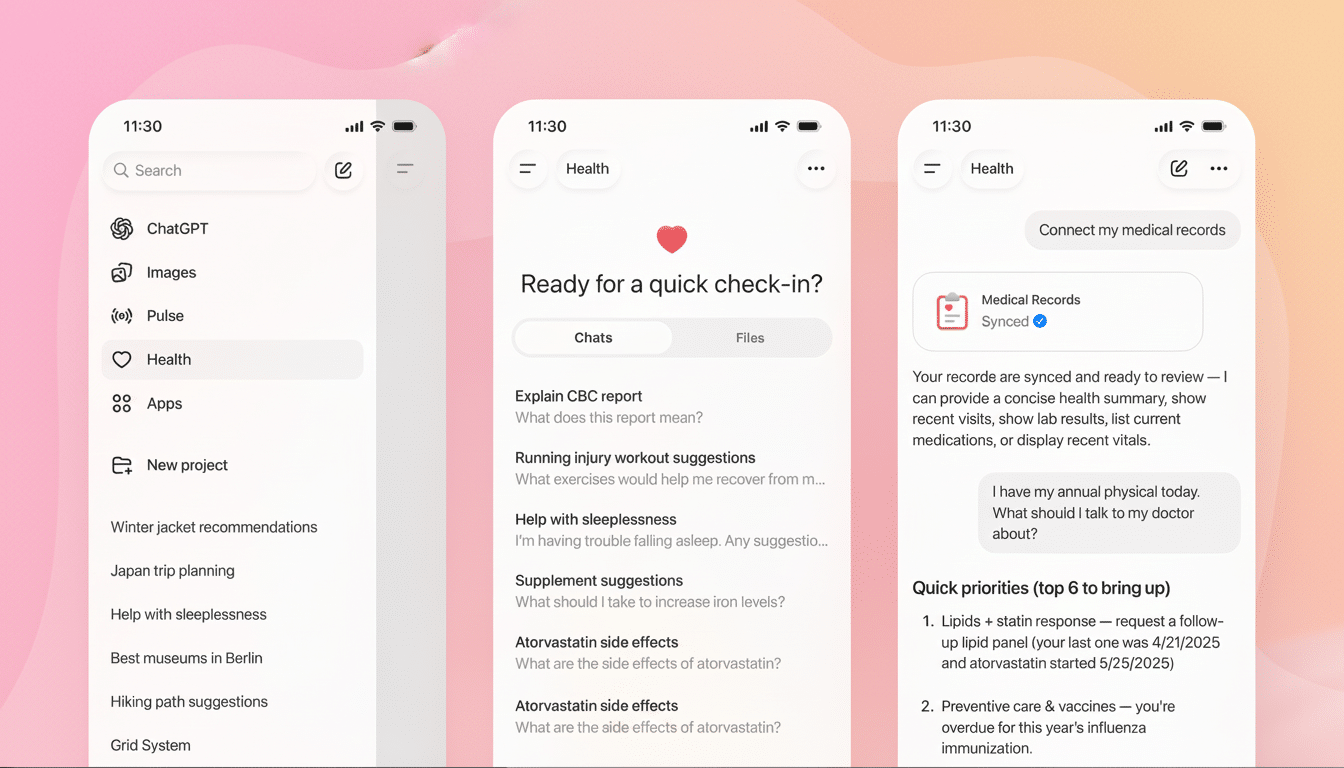

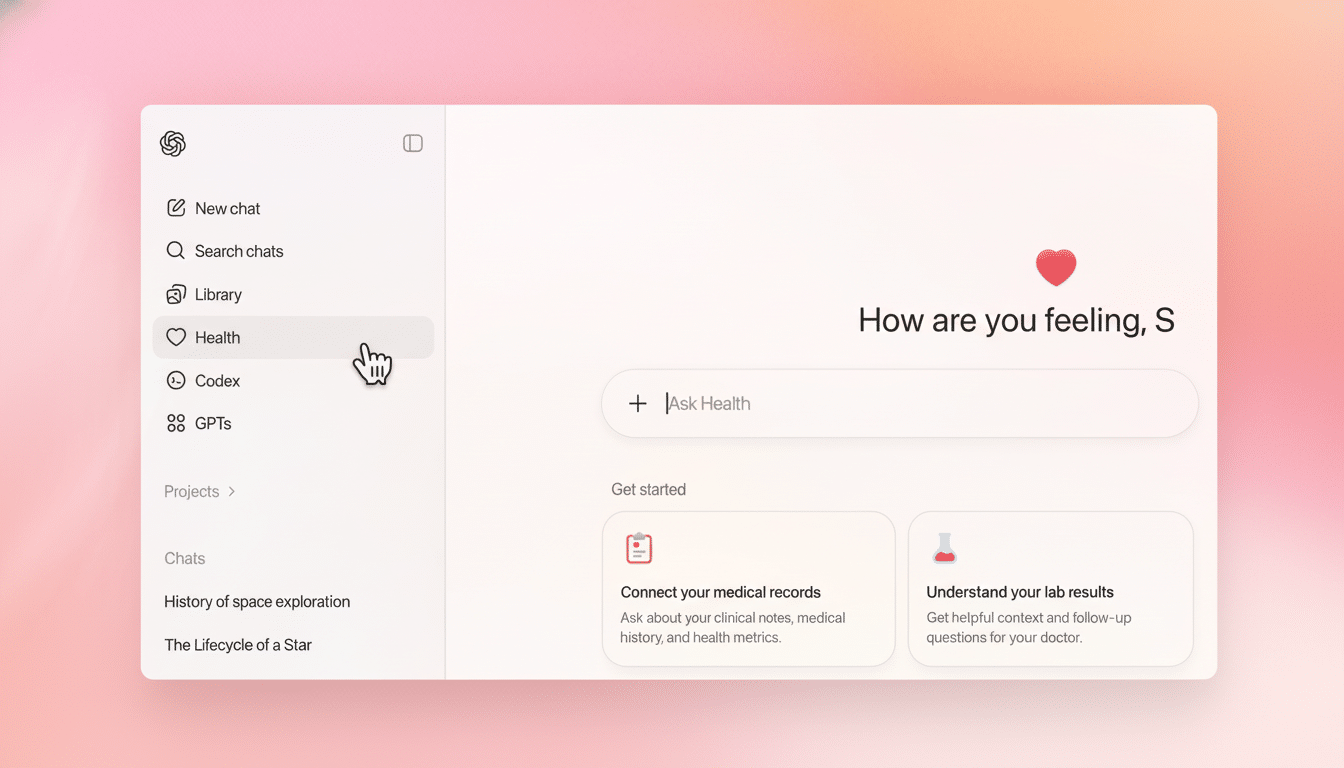

The new Health section is designed to keep medical chats in a space of their own, separate from other conversations, so if you’re chatting about your medication routine, symptoms, or anything having to do with health care plans, those topics won’t bleed into your other chats. If users start a health query somewhere other than Health, the assistant will prompt them to switch over. OpenAI says it will start rolling out the feature in the next few weeks, and Health conversations will not be used to train its models.

How ChatGPT Health Works and Handles Your Context

ChatGPT Health operates as a special mode. Inside it, the system maintains a wall between health context and your wider chat history so stray medical details don’t come up again in a chat about travel plans or coding tips. At the same time, it can also look at contextual information in your everyday chats to tailor Health responses. For instance, if you created a marathon training plan with ChatGPT before, it can take into account that you’re a runner when discussing nutrition or injury prevention.

OpenAI says ChatGPT Health will also be able to integrate with popular health data services, such as Apple Health, Function, and MyFitnessPal. Those integrations could let the assistant pull step counts, workout logs, or dietary data into a conversation to make goal-setting and progress tracking more contextual. The company is not pitching the product as a diagnostic device; it remains an informational aid intended to support clinical care, not replace it.

OpenAI presents the product as a way to address common pain points in health care: barriers to access and cost, providers who are stretched thin, and fragmented follow-up. By corralling health questions into a purpose-built experience, OpenAI signals that it wants to make standard advice easier to find while recognizing that medical data deserves a higher privacy standard.

Promises and Limits on Privacy and Safety

OpenAI says it won’t train its models on ChatGPT Health conversations, a promise designed to ease the concerns of users who worry that they are feeding sensitive data into future systems. The company also makes clear in its terms that ChatGPT is not intended for diagnosing or treating health conditions. That matters because large language models produce probable answers rather than verified facts; they can be useful for education and planning, but they also hallucinate.

The product sits in a regulatory gray area, and it is hard to say which rules will apply to it. HIPAA privacy rules typically apply to covered entities like health care providers and insurers, but not to most consumer apps. In recent years, the Federal Trade Commission has increased enforcement of the Health Breach Notification Rule for health apps that don’t fall under HIPAA, signaling more attention to how consumer health data is treated. And the Food and Drug Administration generally oversees only software that makes diagnostic or therapeutic claims; most chatbots sidestep that issue by avoiding any claim to diagnose or treat a condition, since such a claim could subject them to medical device regulations.

Against that backdrop, the guardrails around ChatGPT Health will be scrutinized on several points:

- How data is stored from integrations

- How easily users can disconnect sources

- How long information persists

- How safety behaviors escalate when a user describes emergencies or self-harm

User trust will depend on clear controls and consistent behavior under stress.

Why Demand for ChatGPT Health Is Surging Right Now

The figure of 230 million people asking health questions each week suggests a vast unmet need for fast, understandable answers. Worldwide, the World Health Organization estimates there will be a shortage of 10 million health workers by 2030, and in many markets a non-urgent appointment can take weeks to schedule. Consumers are turning to digital tools for interim advice, pre-visit preparation, and lifestyle coaching.

AI is also riding the telehealth wave. Analysts have projected that a large proportion of U.S. outpatient visits could move to virtual or near-virtual form, particularly for low-acuity matters and chronic care management. ChatGPT Health seems squarely aimed at those use cases: explaining lab results, helping draft questions for a doctor, translating care plans into daily habits, and monitoring adherence alongside data from wearables.

What to Watch Next as ChatGPT Health Rolls Out

As ChatGPT Health rolls out, the big tests will be reliability, transparency, and how smoothly users are handed off to real clinicians when necessary. Expect OpenAI to refine how it nudges users into the Health space, signals uncertainty, and encourages follow-up care with licensed professionals for anything beyond general information.

Just as important will be data governance across integrations with Apple Health, Function, MyFitnessPal, and others. Clear permissions, deletion pathways, and protections against cross-context leakage will decide whether consumers and regulators warm to an AI that sits this close to their most sensitive information.