OpenAI has launched GPT-5.2, a new model in its Frontier suite that it is touting as its most capable yet and that also takes aim at some of Google's recent moves with Gemini. The launch follows reports that CEO Sam Altman issued a "code red" memo calling for teams to improve the ChatGPT experience and stem the loss of consumer share to Google, signaling a return to core product quality and developer utility.

What’s new in GPT-5.2 across speed, reasoning, and coding

GPT-5.2 comes in three versions:

- Instant puts speed first for everyday tasks such as information search, translation, or even writing an email.

- Thinking targets coding, long-document analysis/annotation, math, and multi-step planning.

- Pro aims for mission-critical reliability and accuracy in complex, production-grade use.

OpenAI said the model is better at creating spreadsheets, building presentations, writing code, interpreting images, handling long context, and coordinating tools across multi-step projects. In the company's internal evals, Thinking is said to produce 38 percent fewer erroneous responses than the previous generation, a gain OpenAI partly attributes to improvements in step-by-step reasoning, constraint tracking, and numerical consistency.

On the coding side, OpenAI product leads cited "significant improvements" in generation, refactoring, and debugging, along with early feedback from AI coding startup partners suggesting better performance on difficult multistage tasks. For data-heavy work such as financial modeling and forecasting, the company emphasized better handling of structured inputs and tool calls across long contexts.

A direct response to Google’s Gemini push in enterprise AI

The release takes square aim at Google, whose Gemini services increasingly surface across Search, Workspace, Android, and Cloud in the form of agentic, multimodal workflows. Google has been promoting higher-level "deep thinking" for math, logic, and science, along with model-native connectors to services like Drive, Maps, and BigQuery for full-stack automation.

OpenAI counters that GPT-5.2 Thinking edges out the competition on a slate of reasoning and knowledge tests: SWE-bench Pro for real-world software engineering, GPQA Diamond for graduate-level science, and ARC-AGI for abstract reasoning and pattern discovery. Coding remains hotly contested, however, and Anthropic's higher-end models still score well on niche developer benchmarks. The bigger takeaway: the race is becoming less about chat and more about trustworthy, scalable reasoning.

Enterprise and developer playbook for adopting GPT-5.2

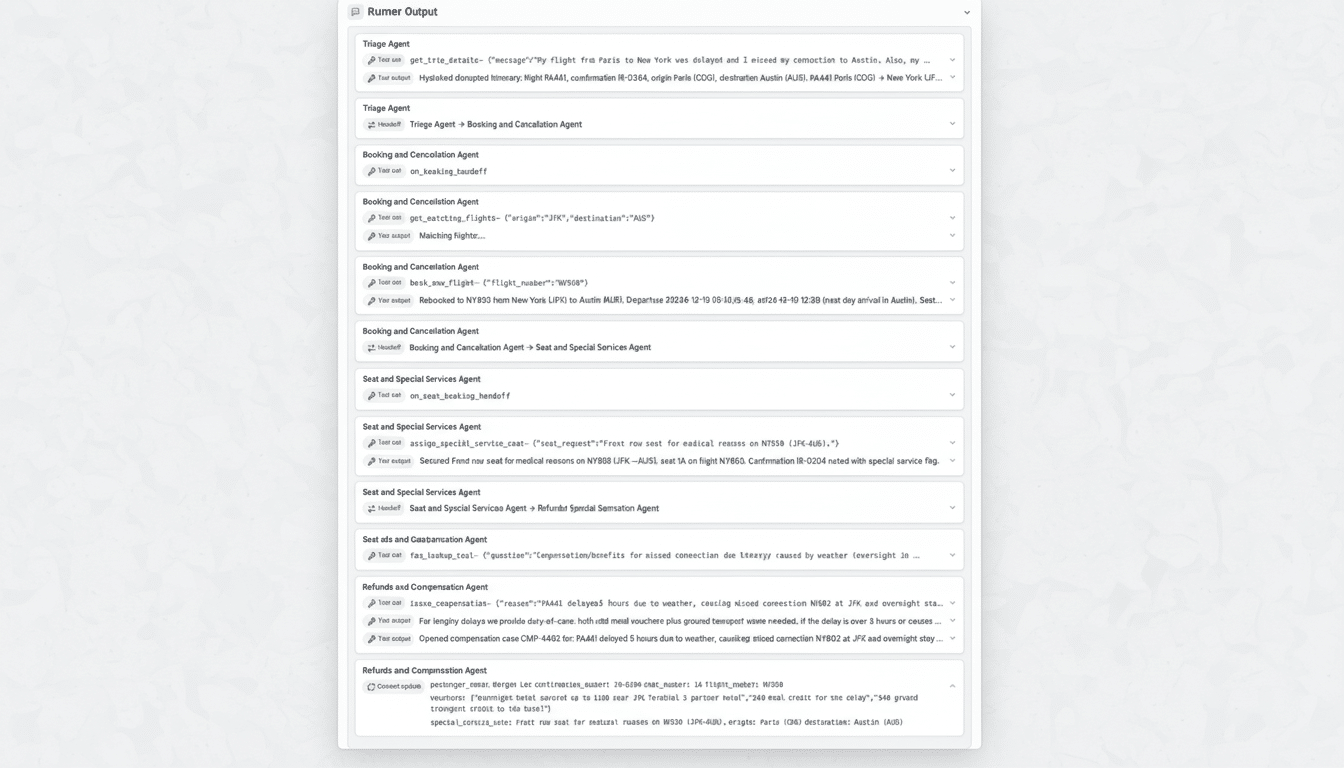

Despite the consumer stakes, GPT-5.2 is a developer-first move. OpenAI is vying to become the default building block for AI-based applications and is betting on tool use, long-context consistency, and production readiness. The company points to rapid enterprise adoption of its API stack, with customers using its models to automate document workflows, generate software tests, and analyze streaming business data.

The three-tier lineup mirrors the pragmatic trade-offs teams face when shipping AI features: Instant for latency-sensitive UX, Thinking for deeper analysis, and Pro (read: conservative) when correctness and stability trump everything. For CIOs and CTOs, the pitch is less about headline benchmarks and more about deterministic behavior, rollback strategies, and observability across agentic pipelines.

The cost and reliability trade-off for reasoning-first models

Reasoning-forward models are expensive. They need more compute per request, especially when juggling tools, large context windows, and multi-step plans. That puts a premium on routing, caching, and guardrails so teams can keep costs under control without sacrificing quality. OpenAI's approach seems to accept this: win at reliable reasoning, then help customers make it cost-effective through smart orchestration and tiered model offerings.
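To make the routing-and-caching idea concrete, here is a minimal Python sketch under stated assumptions: the tier names `instant`, `thinking`, and `pro` are hypothetical labels standing in for the three GPT-5.2 variants (not real API model identifiers), `call_model` is a placeholder rather than an OpenAI client call, and the routing rules are illustrative, not OpenAI's. Requests go to the cheapest tier that fits, and identical prompts are served from an in-process cache.

```python
from dataclasses import dataclass
from functools import lru_cache

# Hypothetical tier labels for illustration; these are NOT real API model IDs.
INSTANT, THINKING, PRO = "instant", "thinking", "pro"

# Counts how many times the (stand-in) model is actually invoked.
CALLS = {"count": 0}


@dataclass(frozen=True)
class Request:
    prompt: str
    latency_sensitive: bool = False     # a user is waiting on the answer
    needs_multistep: bool = False       # e.g. tools, long documents, planning
    correctness_critical: bool = False  # errors are costly downstream


def route(req: Request) -> str:
    """Pick the cheapest tier that satisfies the request's stated needs."""
    if req.correctness_critical:
        return PRO
    if req.needs_multistep and not req.latency_sensitive:
        return THINKING
    return INSTANT  # default: fastest, cheapest tier


def call_model(tier: str, prompt: str) -> str:
    """Hypothetical stand-in for a real model call; swap in your own client."""
    CALLS["count"] += 1
    return f"[{tier}] answer to: {prompt}"


@lru_cache(maxsize=1024)
def cached_answer(tier: str, prompt: str) -> str:
    # Exact-match cache: an identical (tier, prompt) pair skips the model call.
    return call_model(tier, prompt)


def answer(req: Request) -> str:
    """Route the request, then answer it through the cache."""
    return cached_answer(route(req), req.prompt)
```

For example, `answer(Request("Reconcile these ledgers", needs_multistep=True))` routes to the `thinking` tier, and repeating the same request hits the cache instead of the model. The exact-match `lru_cache` is the simplest possible choice; it is per-process and misses paraphrased prompts, so a production system would likely need a shared cache and its own invalidation policy.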

Safety also remains a work in progress. OpenAI says it is tightening controls for sensitive use cases such as mental health and teen access, and updating content filters with improved abuse monitoring. For businesses, though, the bigger concern is predictable failure modes: unambiguous error surfaces, tool-call timeouts, and auditability when agents act on real business systems.
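The failure-mode concerns above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's agent framework: `call_tool`, `ToolResult`, and `AUDIT_LOG` are hypothetical names, and the standard library's `concurrent.futures` supplies the deadline. Each tool call returns either a value or an explicit error string (timeout or exception), and every outcome is appended to an audit log.

```python
import concurrent.futures as cf
from dataclasses import dataclass
from typing import Any, Callable, List, Optional


@dataclass
class ToolResult:
    tool: str
    ok: bool
    value: Any = None
    error: Optional[str] = None  # explicit failure reason instead of a silent None


# Hypothetical audit trail: every tool call, success or failure, is recorded.
AUDIT_LOG: List[ToolResult] = []

_POOL = cf.ThreadPoolExecutor(max_workers=4)


def call_tool(name: str, fn: Callable[..., Any], *args: Any,
              timeout_s: float = 2.0) -> ToolResult:
    """Run a tool with a deadline and return a structured, auditable result."""
    future = _POOL.submit(fn, *args)
    try:
        result = ToolResult(name, ok=True, value=future.result(timeout=timeout_s))
    except cf.TimeoutError:
        # Python threads cannot be killed: we stop waiting, but the tool
        # may still finish in the background.
        future.cancel()
        result = ToolResult(name, ok=False, error=f"timeout after {timeout_s}s")
    except Exception as exc:  # surface the tool's own error verbatim
        result = ToolResult(name, ok=False, error=f"{type(exc).__name__}: {exc}")
    AUDIT_LOG.append(result)
    return result
```

Returning a structured result rather than raising lets the calling agent decide whether to retry, fall back, or escalate, while the log preserves what actually happened. A timed-out thread keeps running in the background, so tools with side effects should be idempotent or able to cancel themselves.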

What’s missing in GPT-5.2 today and what could come next

Curiously, GPT-5.2 ships without a new image generator, even as Google's latest image models have spread throughout its product suite. OpenAI has signaled that richer multimodal output, faster inference, and a more expressive personality are on the GPT-5.2 roadmap, but the details remain under wraps.

For now, GPT-5.2 folds OpenAI's two previous upgrades into a more mature platform built to hold up under production workloads. It is a direct response to Google's expanded Gemini program, and a bet that winning the next stage of the AI race depends less on flashy demos than on sustained reasoning, reliable tool use, and a total cost of ownership teams can actually live with.

The subtext of the "code red" moment is hard to miss: OpenAI is doubling down on product quality, developer trust, and enterprise-grade execution. If GPT-5.2 performs as advertised across the messy edge cases of real-world business workloads, the company may have reset the leaderboard where it matters most.