OpenAI has introduced AgentKit, a developer toolkit it hopes will turn AI agents from promising demos into dependable, production-grade software. The package bundles design, testing, deployment and governance capabilities so teams can ship agents that handle real-world work across support, operations and analytics, without juggling half a dozen tools to make it happen.

A Single Toolkit to Power Complete Agent Workflows

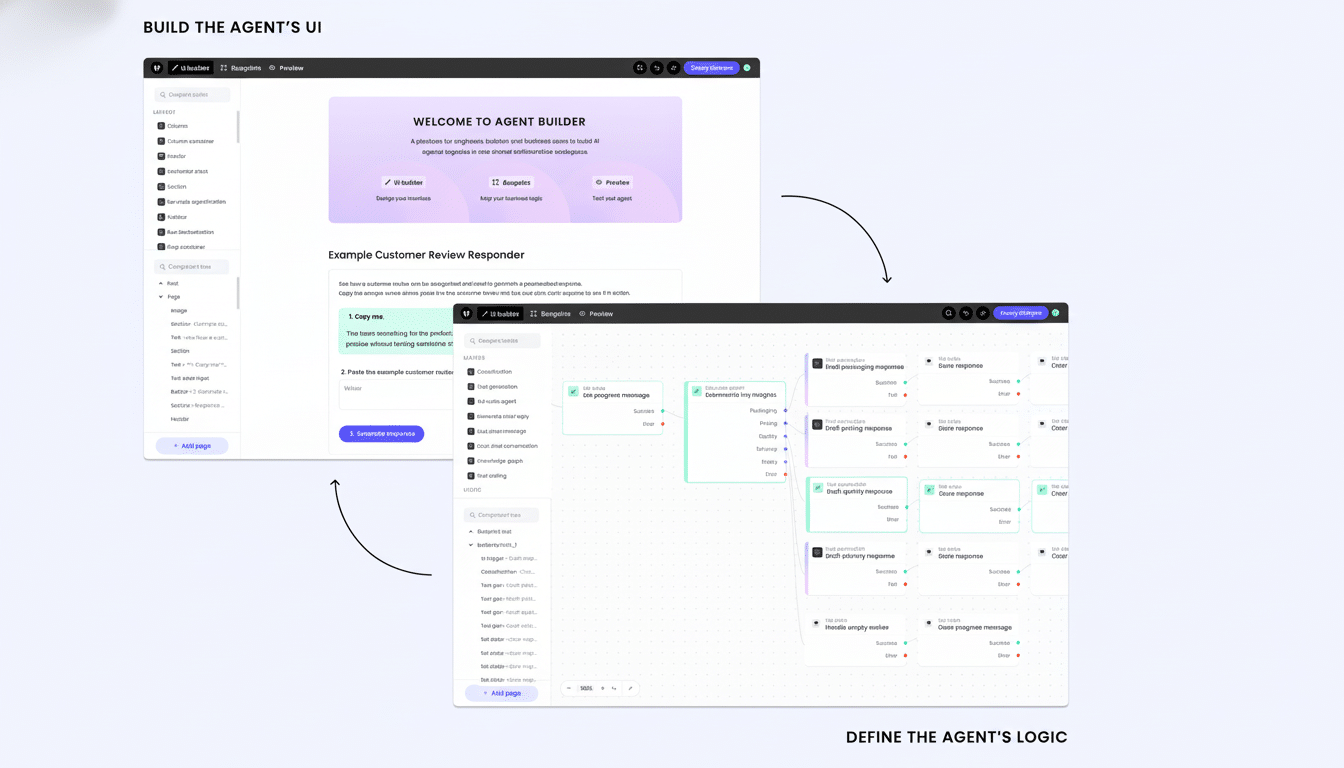

AgentKit focuses on pragmatic building blocks developers want today: a visual Agent Builder for mapping logic and steps, ChatKit for embedding branded chat interfaces, evaluation tools for end-to-end testing, and a connector registry that links agents to internal systems and third-party apps under IT admin control.

The Agent Builder works like a canvas for agent logic. Teams can lay out complex multi-step workflows, specify tool calls and set guardrails without hand-authoring every prompt chain. It is built on OpenAI's Responses API, which lets an agent combine instructions, function calls and structured outputs within a single interaction.

ChatKit offers a drop-in conversational UI that developers can customize to match their product's branding and workflows. Rather than bolting on a generic chat widget, product teams can wire the interface to custom backends, data stores or approval steps, all of which are essential for enterprise-grade experiences.

Evals for Agents goes well beyond basic prompt testing. It includes step-by-step trace grading, datasets for evaluating individual agent components, automated prompt optimization and support for evaluating third-party models. That last capability matters: many organizations run mixed-model stacks and need apples-to-apples comparisons of accuracy, latency and failure modes.

The connector registry addresses a perennial pain point: how to let agents access company data and tools safely. From an admin control panel, teams can grant scoped access to systems such as CRMs, data warehouses, ticketing platforms or messaging apps while preserving auditability and the principle of least privilege.
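The deny-by-default, audited access model described above can be sketched in a few lines of Python. This is a minimal illustration, not AgentKit's connector registry API: the `ConnectorRegistry` class, its `grant`/`authorize` methods and the connector names are hypothetical, and the sketch assumes permissions are expressed as (connector, action) pairs granted per agent.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConnectorRegistry:
    """Hypothetical registry mapping each agent to the (connector, action) pairs it may use."""
    grants: dict[str, set[tuple[str, str]]] = field(default_factory=dict)
    audit_log: list[dict] = field(default_factory=list)

    def grant(self, agent: str, connector: str, action: str) -> None:
        # Admins add narrowly scoped permissions one (connector, action) pair at a time.
        self.grants.setdefault(agent, set()).add((connector, action))

    def authorize(self, agent: str, connector: str, action: str) -> bool:
        # Deny by default: anything not explicitly granted is refused.
        allowed = (connector, action) in self.grants.get(agent, set())
        # Every attempt, allowed or not, is recorded for later review.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "connector": connector,
            "action": action,
            "allowed": allowed,
        })
        return allowed


registry = ConnectorRegistry()
registry.grant("support-agent", "crm", "read")
print(registry.authorize("support-agent", "crm", "read"))   # True
print(registry.authorize("support-agent", "crm", "write"))  # False: never granted
print(len(registry.audit_log))                              # 2: both attempts logged
```

Logging denied attempts as well as allowed ones is a deliberate choice here: refused calls are often the most useful signal in a compliance review.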

To demonstrate the speed, an OpenAI engineer built a complete workflow with two cooperating models live onstage in a matter of minutes. It is only an anecdote, but a telling one for teams trapped in prototype purgatory.

What This Means for Businesses Adopting AI Agents

Many agent projects founder during the handoff from lab to production. The blockers are familiar: error-prone tool use, poor observability, governance gaps and brittle evaluation. AgentKit's pitch is that consolidating these concerns in a single platform shortens time to value and de-risks integrations.

The timing lines up with broader demand. A McKinsey analysis projected that generative AI could add $2.6 trillion to $4.4 trillion in economic value annually, a large share of it from workflow automation and decision support. Agents are one concrete form of that promise: software that not only composes content but also dispatches tasks, updates systems and closes loops.

Consider a retail operations agent that triages return requests, checks warehouse inventory, generates shipping labels, notifies customers about upcoming deliveries and logs outcomes, while surfacing an approval step for edge cases. AgentKit's evals help teams measure failure rates at every step, not just overall conversation quality, so they can harden the workflow where it actually breaks.
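To show why step-level scoring matters, here is a minimal Python sketch that computes per-step failure rates from graded traces of a workflow like the retail example above. The trace format (a list of `(step_name, passed)` pairs per run) and the step names are hypothetical assumptions for illustration; AgentKit's own trace grading uses its own data model.

```python
from collections import defaultdict

# Hypothetical graded traces: each run lists (step_name, passed) in execution order.
# In this toy data a run stops at its first failing step.
traces = [
    [("triage", True), ("check_inventory", True), ("create_label", False)],
    [("triage", True), ("check_inventory", False)],
    [("triage", True), ("check_inventory", True), ("create_label", True), ("notify", True)],
]


def step_failure_rates(runs):
    """Failure rate per step = failed attempts of that step / attempts of that step."""
    attempts = defaultdict(int)
    failures = defaultdict(int)
    for run in runs:
        for step, passed in run:
            attempts[step] += 1
            if not passed:
                failures[step] += 1
    return {step: failures[step] / attempts[step] for step in attempts}


def end_to_end_success(runs):
    """Share of runs in which every step passed (the 'global' view)."""
    return sum(all(passed for _, passed in run) for run in runs) / len(runs)


rates = step_failure_rates(traces)
print(f"end-to-end success: {end_to_end_success(traces):.0%}")
# Worst steps first, so the weakest link is at the top.
for step, rate in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{step}: {rate:.0%} failure rate")
```

In this toy data only one run in three succeeds end to end, which says nothing about where to look; the per-step view points directly at label creation and the inventory check.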

Placing AgentKit in a Crowded Landscape

OpenAI is entering an increasingly crowded field. Google offers Vertex AI Agent Builder; Microsoft promotes Copilot Studio alongside frameworks such as AutoGen; and Anthropic continues to expand the tool-use capabilities of its models. On the open-source side, LangChain, LlamaIndex and CrewAI remain widely used for bespoke orchestration, with LangSmith adding evals and tracing.

AgentKit's key differentiator is consolidation. Rather than piecing together orchestration, UI, evals and connectors from different vendors, teams can keep the whole stack on one platform. That lowers integration overhead and simplifies compliance reviews, at the cost, of course, of greater dependence on a single provider.

Early Indicators and Practical Considerations

AgentKit's success will come down to details. How comprehensive are connector permissions? Do evals support user-defined metrics and offline datasets? How well does it handle long-running tasks and reprocessing? What observability is available in production? Because the admin control panel is central to the pitch, enterprises will also scrutinize governance features such as role-based access, audit logs and approval workflows.

Another make-or-break factor is cost management. Teams deploying agents at scale often discover silent cost growth from tool calls, large context windows and runaway retries. Built-in evals that flag costly failure patterns, combined with rate controls and caching, can keep projects sustainable.
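A minimal Python sketch of two such controls, a hard cap on tool calls per task and a cache for repeated lookups, follows. It is not an AgentKit feature: `CallBudget`, `call_tool` and the `lookup_inventory` tool are hypothetical names, and the sketch assumes every failed call is safe to retry, which real tools (especially ones with side effects, such as creating a shipping label) may not be.

```python
class BudgetExceeded(Exception):
    """Raised when a task has used up its tool-call allowance."""


class CallBudget:
    """Hypothetical per-task cap on the number of tool calls."""

    def __init__(self, max_calls: int):
        self.max_calls = max_calls
        self.used = 0

    def charge(self) -> None:
        if self.used >= self.max_calls:
            raise BudgetExceeded(f"tool-call budget of {self.max_calls} exhausted")
        self.used += 1


def call_tool(tool, arg, budget: CallBudget, cache: dict, max_retries: int = 2):
    """Call tool(arg) with a cache, bounded retries and a shared call budget."""
    key = (tool.__name__, arg)
    if key in cache:  # cache hit: no tool call, no budget spent
        return cache[key]
    last_error = None
    for _ in range(max_retries + 1):  # bounded: at most max_retries + 1 attempts
        budget.charge()  # every attempt counts against the budget
        try:
            result = tool(arg)
        except Exception as exc:  # assumption: all errors are retryable
            last_error = exc
            continue
        cache[key] = result
        return result
    raise RuntimeError(f"{tool.__name__} failed after {max_retries + 1} attempts") from last_error


# Hypothetical flaky tool: times out on the first call, then succeeds.
calls = {"n": 0}


def lookup_inventory(sku: str) -> dict:
    calls["n"] += 1
    if calls["n"] == 1:
        raise TimeoutError("warehouse API timed out")
    return {"sku": sku, "on_hand": 12}


budget = CallBudget(max_calls=5)
cache: dict = {}
print(call_tool(lookup_inventory, "SKU-123", budget, cache))  # retried once, then cached
print(call_tool(lookup_inventory, "SKU-123", budget, cache))  # served from the cache
print(f"tool calls used: {budget.used} of {budget.max_calls}")
```

Capping attempts turns a runaway retry loop into a bounded, visible failure, and the cache means the second identical lookup costs nothing.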

Real-world adoption will likely begin with narrow, high-ROI use cases: customer support triage, sales ops data hygiene, marketing campaign QA and internal IT automation. Success should be measured by:

- Task completion rate

- Time to resolution

- Escalation rate (how often the agent hands off to a human or another channel)
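These three metrics are straightforward to compute from per-task logs. The Python sketch below assumes a hypothetical `TaskRecord` shape with a completion flag, resolution time in minutes and an escalation flag; it measures time to resolution only over completed tasks, which is one reasonable choice among several.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskRecord:
    # Hypothetical log record; real field names depend on your telemetry.
    completed: bool
    minutes_to_resolution: float
    escalated: bool  # handed off to a human or another channel


def success_metrics(records: list[TaskRecord]) -> dict:
    n = len(records)
    completed_times = [r.minutes_to_resolution for r in records if r.completed]
    return {
        "task_completion_rate": sum(r.completed for r in records) / n,
        # Median over completed tasks only, so unresolved tasks don't distort it.
        "median_minutes_to_resolution": median(completed_times) if completed_times else None,
        "escalation_rate": sum(r.escalated for r in records) / n,
    }


records = [
    TaskRecord(completed=True, minutes_to_resolution=4.0, escalated=False),
    TaskRecord(completed=True, minutes_to_resolution=6.5, escalated=False),
    TaskRecord(completed=False, minutes_to_resolution=30.0, escalated=True),
    TaskRecord(completed=True, minutes_to_resolution=3.0, escalated=True),
]
print(success_metrics(records))
```

Tracking these numbers per use case and per agent version makes regressions visible when a prompt, model or connector changes.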

Bottom Line: What AgentKit Could Mean in Practice

AgentKit packages the messy middle of agent development into a coherent, opinionated toolkit. For developers, that means less time writing glue code; for enterprises, clearer paths to governance and measurement. In a year of agent-driven marketing hype, the winners will be those who can ship resilient agents, not just flashy demos.