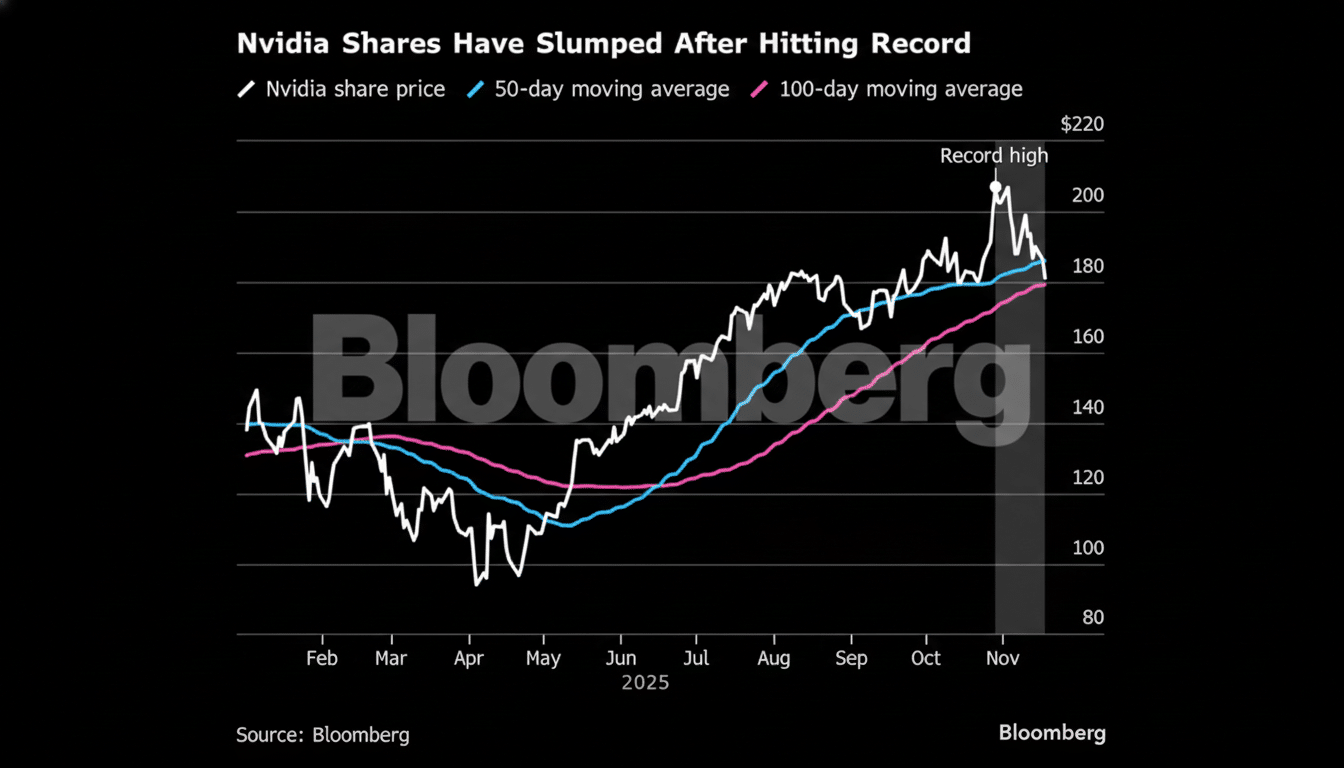

Nvidia’s most recent earnings beat didn’t just wow Wall Street. It reset expectations for how quickly AI infrastructure will scale through at least 2026, and it suggests that demand for accelerated compute is still running ahead of supply, with the next wave of model training and inference already booked.

The headline is straightforward: the data center is still the engine. Company disclosures indicate the segment now accounts for the overwhelming majority of revenue, with cloud providers, model labs and enterprises racing to modernize their stacks for generative AI. In practical terms, capacity reservations for new architectures now extend well into next year, putting a floor under AI buildouts even if macro jitters return.

What the Numbers Tell Us About Compute Demand

“Microsoft and Alphabet management teams recently reaffirmed that they would ramp capex for investments in AI infrastructure on recent earnings calls, while Meta also said something similar,” Bernstein analyst Stacy Rasgon wrote this month. Goldman Sachs notes that GPU markets remain “channel constrained,” with analysts expecting supply-driven tightness to continue into the fourth quarter. Nvidia’s beat fits that picture: backlog and prepayments imply customers are planning multi-quarter ramps rather than one-off bursts.

For 2026, two dynamics stand out. First, the training cycle is expanding from a few frontier runs to many domain-specific models. Efficiency gains do not shrink the bill: as each run gets cheaper, more teams train more models, so total compute requirements keep rising. Second, inference will dominate usage. Inference likely already accounts for the clear majority of AI compute cycles, and that share keeps compounding as models are deployed into search, ads, productivity suites, customer service and code tools.

For model builders, this means driving down cost per token. Expect more aggressive use of quantization, sparsity and memory-aware architectures, plus a premium on high-bandwidth memory and fast interconnects that cut latency and energy consumption.
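To see why quantization matters for cost per token, a rough sketch of the weight-memory arithmetic helps. The 70-billion-parameter model size below is a hypothetical example, not a figure from any company disclosure, and the calculation ignores KV cache, activations and any accuracy trade-offs.

```python
# Illustrative only: memory needed to hold model weights at different precisions.
# The 70-billion-parameter size is a hypothetical example.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return weight storage in gigabytes (1 GB = 1e9 bytes).

    Ignores KV cache, activations and framework overhead.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    params = 70e9  # hypothetical model size
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: {weight_memory_gb(params, precision):.0f} GB")
```

Halving the bytes per parameter halves the memory the weights occupy, which is one reason lower precision can let the same model fit on fewer accelerators.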

Supply Chain Will Dictate Prices in 2026

Nvidia’s outlook relies in part on a constrained but recovering supply chain. TSMC keeps increasing its CoWoS advanced packaging capacity, while SK hynix, Micron and Samsung are ramping HBM3E. As those constraints ease, units should become more plentiful and price-performance should improve, especially as new boards arrive with larger stacks of HBM and faster NVLink or 800G Ethernet fabrics.

That matters for budgets. If the market shifts from chronic shortage toward balance, cloud providers may be able to pass some savings on to customers. A percentage-point reduction in cost per token can open up entirely new use cases at scale, from real-time assistants embedded in customer workflows to multimodal agents spread across productivity suites.
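The link between accelerator pricing and cost per token can be sketched with simple arithmetic. The function name, hourly price, throughput and utilization figures below are all hypothetical placeholders chosen for illustration, not market data.

```python
def cost_per_million_tokens(
    gpu_hour_usd: float,
    tokens_per_second: float,
    utilization: float = 1.0,
) -> float:
    """Estimate serving cost in USD per one million tokens.

    gpu_hour_usd: hourly price of the accelerator (hypothetical input).
    tokens_per_second: sustained throughput at full load (hypothetical input).
    utilization: fraction of each hour spent serving traffic, in (0, 1].
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    tokens_per_hour = tokens_per_second * utilization * 3600
    return gpu_hour_usd / tokens_per_hour * 1e6


if __name__ == "__main__":
    # Hypothetical baseline, then the same hardware at a 10% lower hourly price.
    baseline = cost_per_million_tokens(4.00, 2000, utilization=0.5)
    cheaper = cost_per_million_tokens(3.60, 2000, utilization=0.5)
    print(f"baseline: ${baseline:.2f} per million tokens")
    print(f"after price cut: ${cheaper:.2f} per million tokens")
```

Under these assumptions the cost per token scales linearly with the hourly price, while higher throughput or utilization lowers it in inverse proportion.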

Watch power envelopes, too. Data center operators are running into power and cooling limits. In 2026, procurement aimed at more tokens per watt will favor tightly integrated GPU-NIC designs and system-level innovations such as liquid cooling and rack-scale networking.

Computing Rivals and Alternatives to CUDA

Nvidia’s moat still rests on CUDA, cuDNN, TensorRT and a rich software stack, but the competitive landscape is widening. AMD’s MI300 family is benefiting from ROCm improvements; its MLPerf progress is substantial, and more frameworks are publishing ROCm-first wheels. Cloud providers’ in-house silicon is also progressing: Google’s TPUs, AWS’s Trainium and Inferentia, and Microsoft’s Maia are all intended to lower TCO for large-scale training and inference.

If these alternatives become good enough for targeted workloads (think transformer inference, embedding generation or fine-tuning), pricing pressure comes next. A genuinely multi-vendor market by 2026 might squeeze accelerator margins but boost total AI consumption by making compute cheaper and easier to reserve. The net result for builders is a good one: they’ll have more flexibility to match workloads to the best-priced silicon.

What It Means for AI Makers and Builders in 2026

For start-ups, Nvidia’s beat extends the runway. There’s still money for companies that can turn compute into sticky products and recurring revenue. The winners may well be those that show unit economics that improve with scale (cost per million tokens, latency SLOs and customer retention) rather than headline parameter counts.

Enterprises should build for a hybrid estate: centralized training in the cloud, cost-optimized inference on shared accelerators, and selective on-prem deployment where data residency or latency requires it. Nvidia’s enterprise software subscriptions, DGX Cloud and model-serving stacks will compete head-on with cloud PaaS offerings and open-source orchestration layers; procurement teams should model their total cost across compute, networking, storage and MLOps labor, not just GPU list prices.
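A minimal sketch of that kind of total-cost model might look like the following. The class name, the four cost categories as fields and every dollar figure are hypothetical; a real procurement model would add items such as power, depreciation and support contracts.

```python
from dataclasses import dataclass


@dataclass
class AnnualCosts:
    """Hypothetical annual cost categories, in USD."""

    compute: float
    networking: float
    storage: float
    mlops_labor: float

    def total(self) -> float:
        """Sum of all four categories."""
        return self.compute + self.networking + self.storage + self.mlops_labor

    def compute_share(self) -> float:
        """Fraction of total cost attributable to compute alone."""
        return self.compute / self.total()


if __name__ == "__main__":
    # Placeholder figures for illustration only.
    costs = AnnualCosts(
        compute=600_000,
        networking=100_000,
        storage=100_000,
        mlops_labor=200_000,
    )
    print(f"total: ${costs.total():,.0f}")
    print(f"compute share: {costs.compute_share():.0%}")
```

With these placeholder numbers, compute is 60% of the total, so a comparison based on GPU list prices alone would miss the remaining 40% of spend.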

Regulation and geopolitics remain swing factors. Export controls have already reshaped product roadmaps and regional availability. Any additional restrictions, or incentives conditioned on domestic manufacturing, could shift supply again in 2026, affecting delivery times and cost.

What to Watch Next as AI Infrastructure Scales

Three gates will decide the course of AI in 2026:

- Blackwell-class system shipments and yields

- HBM and advanced packaging supply catching up

- Non-CUDA accelerator traction in production workloads

“Also monitor software revenue mix in NVDA results — increasing contribution from Enterprise AI and inference would be evidence of a more durable platform story outside of hardware cycles,” he wrote.

The takeaway is clear. Nvidia’s result is not just a scorecard for how one company is doing, but a readout on the next chapter of the AI economy. With supply loosening, alternatives maturing and demand expanding from frontier training into ubiquitous inference, 2026 is poised to be the year AI organizations shift their focus from scarce capacity to scaled capability.