Nvidia and Deutsche Telekom have announced a €1 billion agreement to build a new “Industrial AI Cloud” in Munich. The project aims to deliver a step-change in Germany’s compute capacity while keeping sensitive data inside the country. Both companies pitch it as an “AI factory” and say it could increase Germany’s AI computing power by 50% and support industrial-scale workloads across manufacturing, mobility and public-sector use cases.

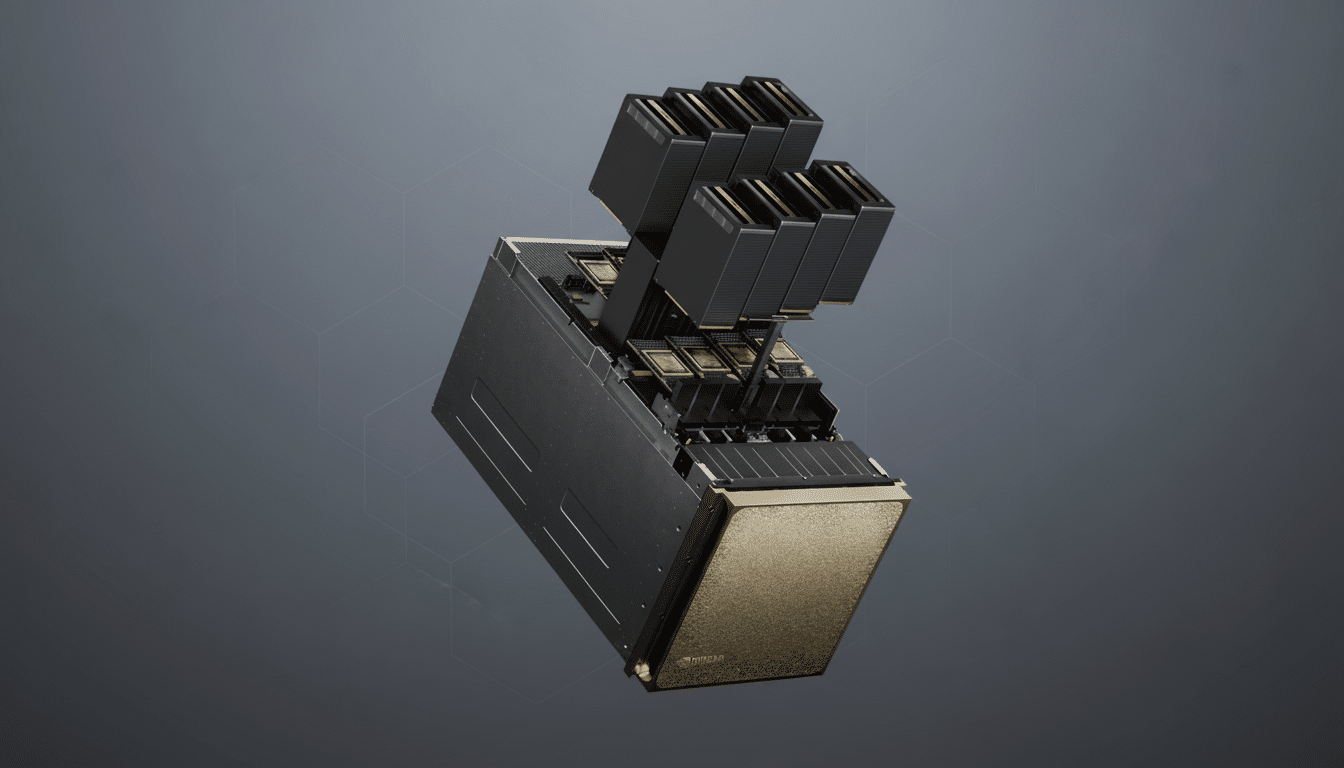

The facility will use more than 1,000 Nvidia DGX B200 systems and RTX Pro Servers, containing up to 10,000 Blackwell GPUs in total. It is designed specifically for in-country AI training and inference on proprietary datasets, to meet Germany’s data sovereignty requirements and the demands of regulated industries.

Why Munich, and why this AI initiative is launching now

Munich is a practical choice: it sits at the heart of Germany’s industrial base, close to the automotive, robotics and engineering clusters where digital twins, advanced simulation and AI-assisted production are already being deployed. Placing compute close to data and domain experts minimizes latency and shortens time-to-value for mission-critical industrial workloads.

The tie-up also fits Europe’s drive for digital sovereignty. Initiatives such as Gaia-X, along with national cloud guidance, have advocated for infrastructure that keeps data under EU control while approaching the scale of the global hyperscalers. Europe has been playing catch-up with the U.S. in AI infrastructure spending, and this move helps narrow that compute gap without outsourcing control of critical platforms.

Inside the Industrial AI Cloud powering Germany’s growth

Deutsche Telekom provides the physical backbone (connectivity, facilities, power and operations), drawing on its network assets and security credentials. SAP contributes its Business Technology Platform and applications, connecting high-performance compute with familiar enterprise workflows for planning, analytics and production execution.

An early ecosystem is emerging. Agile Robots will use its robots to help install and service server racks, using automation to speed up scaling. Perplexity will run in-country inference for German users and businesses, reflecting demand for locally compliant, low-latency AI services. Key use cases include factory digital twins, physics simulation, generative design and real-time process optimization.

Energy efficiency and compliance considerations for the build

High-density Blackwell clusters, however, bring heavy power and cooling demands, which makes efficiency a first-order design constraint. According to industry data from the Uptime Institute, the global average PUE (power usage effectiveness) is approximately 1.58, while best-in-class new builds aim for significantly lower values. Expect power purchase agreements, heat reuse and advanced liquid-cooled designs to feature prominently as Germany balances its compute ambitions against its sustainability goals.
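PUE is the ratio of total facility energy to the energy delivered to IT equipment, so a PUE of 1.58 means roughly 0.58 units of overhead (mostly cooling and power conversion) for every unit spent on compute. The minimal Python sketch below makes that arithmetic concrete. The 10 MW IT load and the 1.2 comparison value are hypothetical illustrations, not published figures for the Munich site.

```python
# Minimal sketch: what a given PUE implies for total facility power.
# PUE = total facility energy / IT equipment energy (always >= 1.0).
# ASSUMPTION: the 10 MW IT load and the 1.2 PUE below are hypothetical
# round numbers for illustration, not figures for the Munich facility.

def facility_power_mw(it_load_mw: float, pue: float) -> float:
    """Total facility power needed to run a given IT load at a given PUE."""
    if it_load_mw < 0:
        raise ValueError("IT load must be non-negative")
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_mw * pue


def overhead_mw(it_load_mw: float, pue: float) -> float:
    """Non-IT power (cooling, power conversion, lighting, etc.)."""
    return facility_power_mw(it_load_mw, pue) - it_load_mw


if __name__ == "__main__":
    it_load = 10.0  # hypothetical IT load in MW
    # 1.58 is the cited global average; 1.2 is a hypothetical efficient build
    for pue in (1.58, 1.2):
        print(f"PUE {pue}: facility {facility_power_mw(it_load, pue):.1f} MW, "
              f"overhead {overhead_mw(it_load, pue):.1f} MW")
```

At the same hypothetical IT load, moving from the average PUE to 1.2 cuts non-IT overhead from about 5.8 MW to about 2.0 MW, which is why cooling design matters so much for GPU-dense sites.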

On the compliance side, the build places a strong emphasis on data residency under GDPR and German sector-specific rules, with reference architectures that map to BSI cloud security frameworks. Processing data in-country can also shorten procurement cycles, reduce legal friction for sensitive datasets and lower latency for interactive AI products used on the factory floor or in the field.

Impact on the market and the evolving competitive landscape

The European Commission has signaled support for AI “gigafactories” aimed at industrial and mission-critical workloads, but infrastructure spending in Europe has lagged behind the U.S., where major vendors have invested heavily in AI data centers. Deutsche Telekom’s effort is not formally linked to EU programs, but it reflects the same strategic aim: building domestic AI capacity that can compete globally.

For Nvidia, the Munich project extends its European presence and showcases Blackwell’s role in large-scale inference and simulation. For Deutsche Telekom, it marks a move up the value chain, from connectivity to AI infrastructure-as-a-service, built on its relationships with blue-chip enterprises and on network capabilities that deliver performance and security end to end.

What this announcement means for core German industries

German manufacturers can train and serve larger models on their own data without moving sensitive information across national borders. Think real-time visual inspection on the plant floor in automotive, line balancing in electronics, predictive maintenance in chemicals, and PLM copilots that draw on data from PLM and ERP systems to streamline product cycles.

Importantly, the plan is designed for mid-sized companies as well as multinationals. With SAP integrations and in-country inference, SMEs can access high-end compute at predictable cost and low latency while adhering to the strict security policies common in the Mittelstand. Low latency and in-region support also mean lower operational overhead than running critical workloads overseas.

The number to watch is utilization.

The clearest sign of success would be GPUs running at around 90 percent utilization, with workloads queuing for their turn. If digital twins, copilots and retrieval-augmented applications take off and fill those clusters quickly, the advertised 50% uplift in national AI compute will be more than a headline. It will be proof that European-scale AI can be built and run on European soil, with Germany’s industrial economy as its proving ground.