Moonshot AI unveiled Kimi K2.5, a new open-source model that natively understands text, images, and video, alongside Kimi Code, a coding agent aimed at everyday developer workflows. Backed by Alibaba and HongShan, the company is positioning this release as both a research milestone and a practical toolkit for software teams.

Kimi K2.5 was trained on 15 trillion mixed visual and text tokens, a scale designed to make multimodality a first-class capability rather than an add-on. Beyond typical chat and analysis tasks, Moonshot emphasizes two strengths: code generation and “agent swarms,” where multiple specialized agents coordinate to solve complex problems across repositories and documents.

Benchmarks and early signals for Kimi K2.5 performance

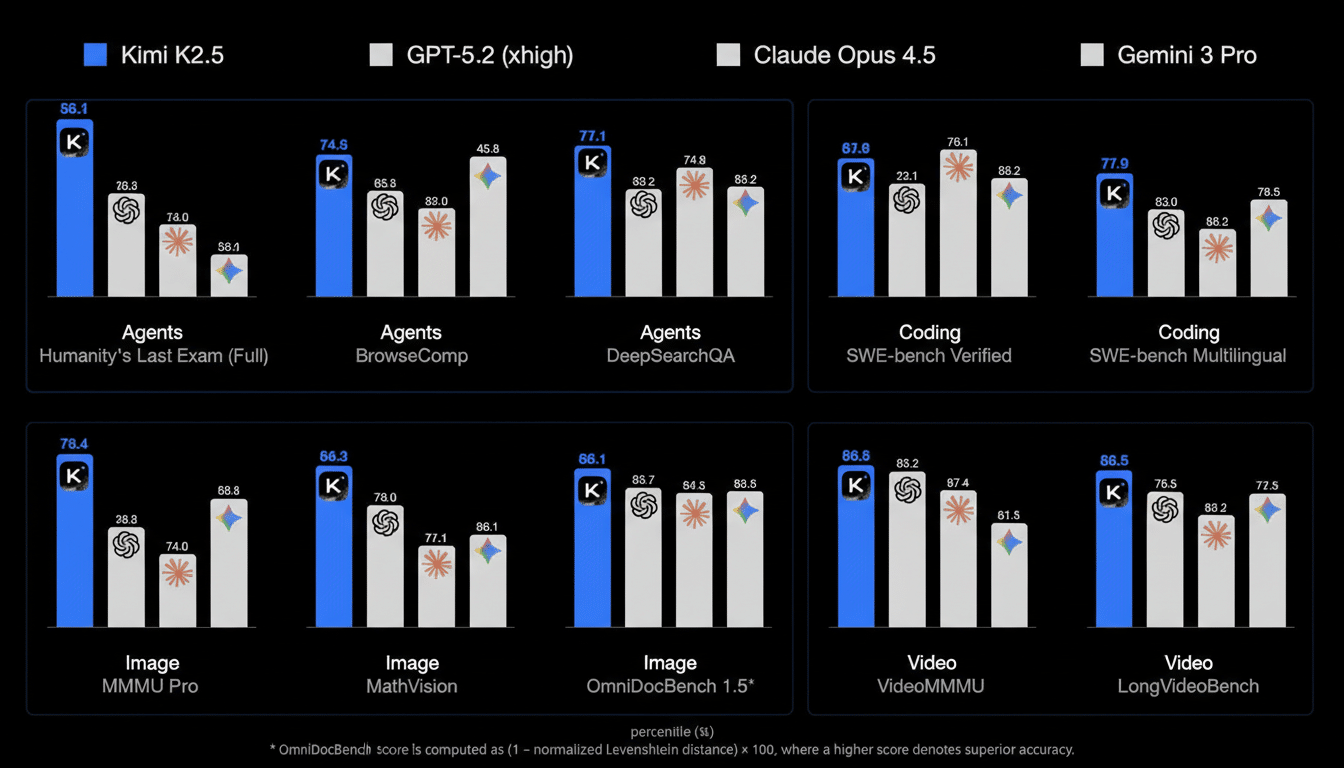

In released evaluations, Kimi K2.5 posts results competitive with, and in some cases better than, those of proprietary peers. On the SWE-Bench Verified benchmark, which asks models to resolve real GitHub issues in open-source projects under reproducible conditions, the model outperforms Gemini 3 Pro. On the SWE-Bench Multilingual variant, it scores higher than GPT-5.2 and Gemini 3 Pro, suggesting stronger generalization across programming languages and project styles.

Video reasoning is another highlight. Kimi K2.5 beats GPT-5.2 and Claude Opus 4.5 on VideoMMMU, a multi-discipline evaluation that tests comprehension and reasoning over multi-frame sequences. While all benchmark claims deserve independent replication, the spread across code and video suggests the model's multimodal pretraining is translating into real task performance.

A practical example of that multimodality: developers can feed an image or short video of an interface to Kimi K2.5 and request code that reproduces the layout and interactions. That’s a step beyond “describe a UI and get scaffolding”—the model is attempting to parse real visual artifacts and map them to workable components and styles.

Kimi Code targets developer workflows across editors

To operationalize the model’s coding strengths, Moonshot is releasing Kimi Code as an open-source tool that runs in the terminal and integrates with popular editors including VSCode, Cursor, and Zed. Crucially, it accepts images and videos as input, enabling workflows like “generate a similar interface to this mockup” or “mirror the behavior shown in this screen recording.”

The company is pitching Kimi Code as a rival to Anthropic's Claude Code and Google's Gemini CLI. That's an ambitious target: Anthropic has said Claude Code reached $1B in annualized recurring revenue, and Wired later reported that it had added another $100M in ARR. Those figures show how quickly coding agents have become a core commercial pillar for AI labs. Moonshot's open-source approach could seed adoption at the edge, inside companies' own stacks, while creating opportunities for paid hosting, support, and enterprise features.

For teams, the draw is workflow speed rather than novelty. A plausible loop looks like this: point Kimi Code at a repository, provide a design image or snippet of a demo video, and ask it to scaffold components, tests, and integration code. With agent swarms, one agent might propose refactors while another validates unit tests and a third reviews performance implications, all mediated by the K2.5 backbone.

Funding momentum and competitive context

Moonshot was founded by Yang Zhilin, a researcher with experience at Google and Meta AI. The company has raised significant capital, including a $1B Series B at a $2.5B valuation, and Bloomberg has reported an additional $500M round that lifted valuation to $4.3B, with further fundraising discussions that could push it higher. Those numbers reflect investor conviction that high-performance, open-source multimodal models will shape both consumer and enterprise AI adoption.

The competitive pressure is intensifying. The Information has reported that DeepSeek is preparing a new model with strong coding capabilities, setting up a direct comparison with Kimi K2.5 in China’s rapidly evolving AI landscape. Global incumbents continue to iterate on proprietary systems, but the open-source push is reshaping developer expectations around transparency, cost control, and deployment flexibility.

What to watch next for Kimi K2.5 and Kimi Code adoption

Key details will determine real-world traction: licensing terms, availability of model weights and training recipes, red-teaming breadth, and data governance disclosures. For developers, practical metrics like context length, inference speed on commodity GPUs, and the reliability of image/video-to-code pipelines will matter more than leaderboard deltas.

If Kimi K2.5’s benchmark claims hold up under independent testing, and Kimi Code proves durable inside editors and CI pipelines, Moonshot will have delivered a notable one-two: a general-purpose multimodal model and a developer-first agent that turns those capabilities into shipped software. In a market where coding tools are already moving revenue at scale, that combination is timely—and strategically smart.