Microsoft’s Detection and Response Team (DART) has warned that threat actors are quietly abusing OpenAI’s Assistants API to sustain long-term espionage campaigns, hiding malicious traffic in plain sight as routine AI activity. The company’s incident responders attribute the activity to a new backdoor they call “SesameOp,” and stress that the problem is abuse of legitimate platform features, not exploitation of a bug or vulnerability in OpenAI’s code.

How the SesameOp backdoor abuses the Assistants API

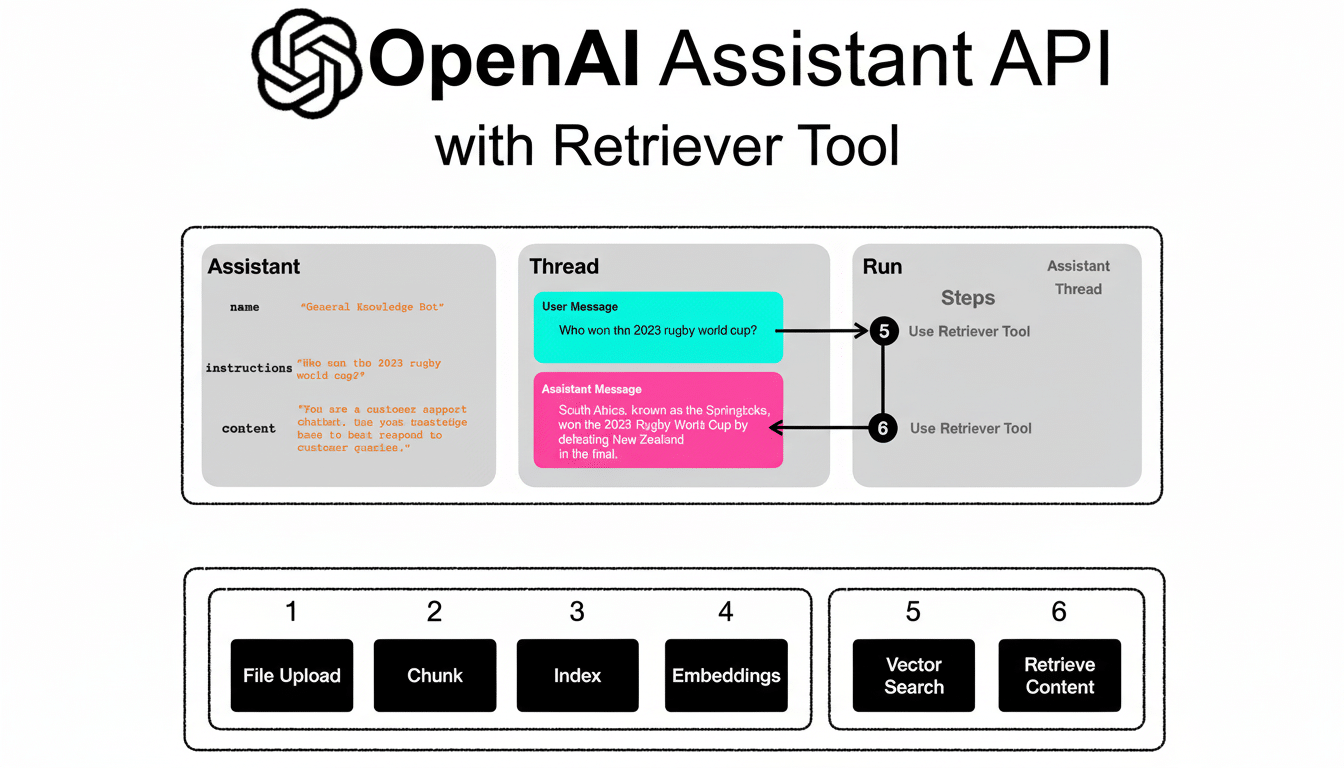

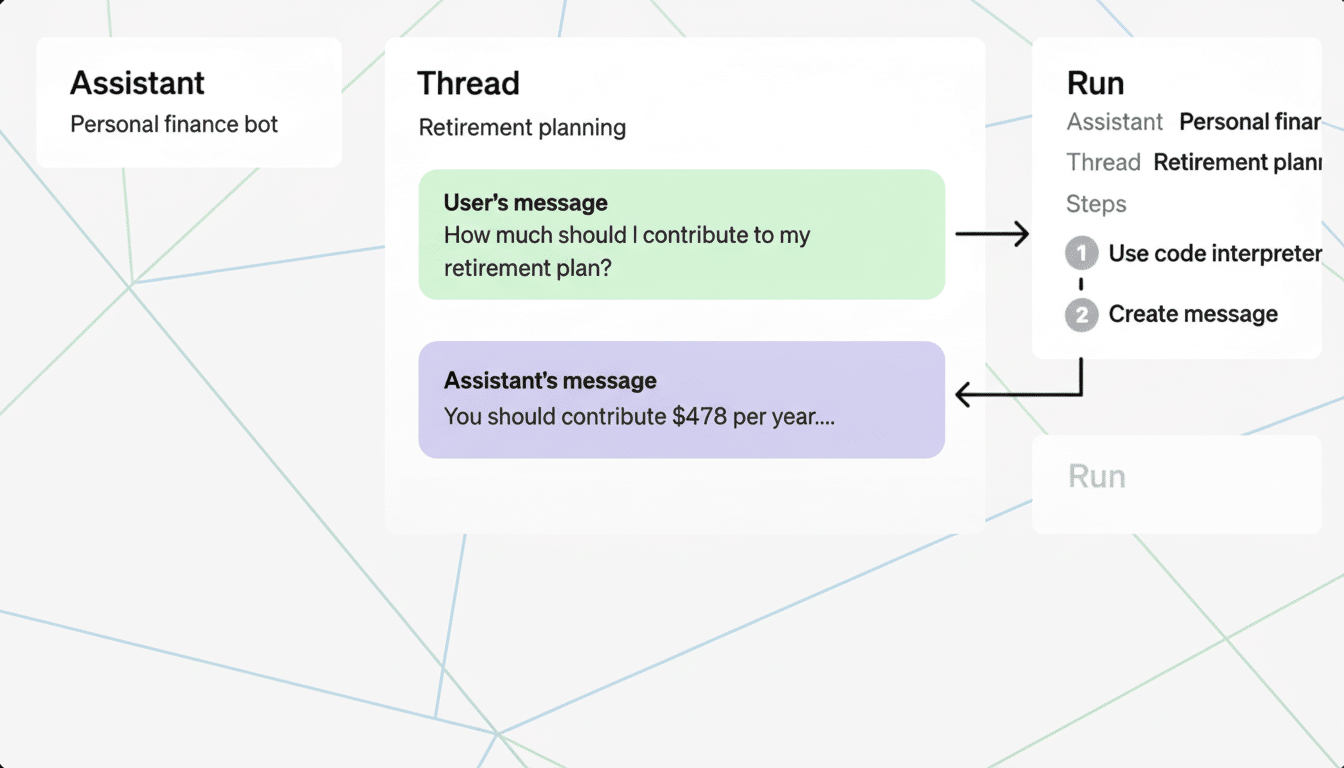

According to LianTao, the attackers embed their commands and data exchanges within the kind of standard API interactions that enterprise apps increasingly rely on. One component of the malware periodically polls an Assistants API endpoint for encrypted instructions, runs those commands on compromised systems, and sends the results back over the same API channel.

Because the traffic flows to a trusted AI endpoint and closely mirrors legitimate developer usage, standard reputation checks and loose allowlists are much less likely to raise an alarm.

The technique repurposes a developer-facing AI capability as a covert command-and-control (C2) and relay channel, all while masquerading as benign use of a sanctioned SaaS platform.

Microsoft says it first observed the technique while investigating a high-profile breach in which the attackers focused solely on harvesting data, a sign that they were pursuing espionage rather than smash-and-grab monetization. The Assistants API, which lets teams build custom AI agents into their products, effectively served as the covert transport layer.

A familiar command-and-control tactic with a new wrapper

Abusing popular cloud services for covert communication is a long-standing trend. Security companies have documented campaigns that use platforms such as Telegram, Slack, Dropbox, GitHub Gists, and Google services to hide attacker beacons in ordinary traffic. MITRE ATT&CK tracks this pattern as Web Service (T1102), a command-and-control technique.

What’s new here is the specific focus on AI infrastructure. As organizations rush to build generative AI into products and workflows, endpoints such as api.openai.com are widely allowlisted, giving adversaries a low-noise channel that blends into expected traffic. Early reports from independent researchers and trade publications converge on one clear theme: attackers are tracking defenders’ allowlists.

OpenAI has been moving developers to its newer Responses API, but Microsoft notes that this case involves misuse of a legitimate feature rather than exploitation of a security flaw. In other words, switching APIs alone will not close the visibility gaps created by permissive egress rules and limited insight into sanctioned SaaS traffic.

What security teams can do now to detect and disrupt

Microsoft’s advice centers on tightening egress and improving observability. Organizations should regularly review firewall and web server logs, route outbound traffic through proxies, restrict outbound access to authorized domains and standard ports, and revisit any exceptions that grant unrestricted access to AI and developer endpoints.
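The egress posture described above can be sketched as a default-deny decision function. This is a minimal illustration, not Microsoft’s tooling: the host names, the `APPROVED_AI_CLIENTS` set, the general allowlist, and the single allowed port are hypothetical placeholders, and a real deployment would express the same logic in proxy or firewall policy rather than application code.

```python
from dataclasses import dataclass

# All values below are hypothetical placeholders for illustration only.
AI_DOMAINS = {"api.openai.com"}              # AI endpoints under scrutiny
APPROVED_AI_CLIENTS = {"ml-gateway-01"}      # hosts with a documented need
GENERAL_ALLOWLIST = {"updates.example.com"}  # other sanctioned destinations
ALLOWED_PORTS = {443}                        # standard HTTPS only


@dataclass(frozen=True)
class EgressRequest:
    source_host: str
    dest_domain: str
    dest_port: int


def normalize(domain: str) -> str:
    """Lowercase a domain and drop any trailing root dot."""
    return domain.lower().rstrip(".")


def is_ai_domain(domain: str) -> bool:
    """True if the domain is, or is a subdomain of, a listed AI endpoint."""
    d = normalize(domain)
    return any(d == ai or d.endswith("." + ai) for ai in AI_DOMAINS)


def egress_decision(req: EgressRequest) -> str:
    """Return "allow" or "deny" for one outbound request under default-deny."""
    if req.dest_port not in ALLOWED_PORTS:
        return "deny"  # non-standard ports are blocked outright
    if is_ai_domain(req.dest_domain):
        # AI endpoints are reachable only from explicitly approved hosts,
        # instead of through a blanket "all AI traffic is fine" exception.
        return "allow" if req.source_host in APPROVED_AI_CLIENTS else "deny"
    if normalize(req.dest_domain) in GENERAL_ALLOWLIST:
        return "allow"
    return "deny"  # anything not explicitly sanctioned is refused


print(egress_decision(EgressRequest("ml-gateway-01", "api.openai.com", 443)))   # allow
print(egress_decision(EgressRequest("file-server-07", "api.openai.com", 443)))  # deny
```

The design choice worth noting is that AI domains get their own per-host rule rather than a global exception, so a compromised file server trying to poll an AI endpoint is refused even though the destination itself is trusted.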

Where possible, enforce API allowlisting backed by purpose-built inspection, and alert on unusual patterns such as regularly scheduled beaconing to AI endpoints from servers with no business need for AI access. Ingest DNS and HTTP telemetry for generative AI domains into your SIEM, and hunt for long-lived sessions, unusual user agents, or anomalous outbound data volumes.
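The beaconing hunt described above can be prototyped over exported proxy logs. The sketch below assumes a simplified `ProxyEvent` record (timestamp, source host, destination domain, bytes sent) and hypothetical thresholds; it is one possible heuristic, not a detection Microsoft has published. It groups requests to AI domains from unapproved hosts and flags pairs whose request intervals are nearly constant.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean, pstdev

# Hypothetical inputs: adjust to your own AI domains and approved clients.
AI_DOMAINS = {"api.openai.com"}
APPROVED_AI_CLIENTS = {"ml-gateway-01"}


@dataclass(frozen=True)
class ProxyEvent:
    timestamp: float  # seconds since the epoch
    source_host: str
    dest_domain: str
    bytes_out: int    # request bytes sent upstream


def find_ai_beacons(events, min_events=6, max_cv=0.1):
    """Flag unapproved hosts that contact AI endpoints on a near-fixed schedule.

    Regularity is scored with the coefficient of variation (CV) of the gaps
    between successive requests: stdev / mean. A CV near 0 means clock-like
    polling; human- or application-driven traffic is usually far burstier.
    """
    grouped = defaultdict(list)
    for e in events:
        domain = e.dest_domain.lower().rstrip(".")
        if domain in AI_DOMAINS and e.source_host not in APPROVED_AI_CLIENTS:
            grouped[(e.source_host, domain)].append(e)

    findings = []
    for (src, domain), evs in grouped.items():
        if len(evs) < min_events:
            continue  # too few samples to judge periodicity
        evs.sort(key=lambda e: e.timestamp)
        gaps = [b.timestamp - a.timestamp for a, b in zip(evs, evs[1:])]
        avg = mean(gaps)
        if avg <= 0:
            continue  # all requests share one timestamp; not a schedule
        cv = pstdev(gaps) / avg
        if cv <= max_cv:
            findings.append({
                "source": src,
                "domain": domain,
                "events": len(evs),
                "mean_interval_s": round(avg, 1),
                "cv": round(cv, 3),
                "total_bytes_out": sum(e.bytes_out for e in evs),
            })
    return findings


# A server polling every 300 seconds produces one finding for file-server-07.
sample = [ProxyEvent(1000.0 + 300 * i, "file-server-07", "api.openai.com", 512)
          for i in range(10)]
print(find_ai_beacons(sample))
```

The thresholds are illustrative. Operators can add jitter to defeat a strict CV cut-off, so this works best as one signal alongside session duration, user agents, and the `total_bytes_out` volume, not as a verdict on its own.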

Tighten identity and secrets hygiene: rotate OpenAI API keys, scan code repositories for exposed keys, and scope keys to least privilege. Review the OAuth permissions granted to AI-enabled apps, and use a CASB or SaaS security platform to establish a baseline of normal AI API usage. Organizations already migrating to the Responses API should ensure the migration does not widen network exceptions or reduce logging detail.
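The repository-scanning step can be illustrated with a small regex-based scanner. The key pattern here is an assumed heuristic (an `sk-` prefix followed by a long token), not an official OpenAI key-format specification, and `SKIP_DIRS` is a hypothetical exclusion list; dedicated secret scanners cover far more formats and should be preferred in production.

```python
import re
from pathlib import Path

# Heuristic for OpenAI-style secret keys: an "sk-" prefix followed by a long
# token (newer keys may carry an extra segment such as "proj-", which the
# character class also covers). Assumed approximation; expect to tune it.
KEY_PATTERN = re.compile(r"\bsk-[A-Za-z0-9_-]{20,}")

SKIP_DIRS = {".git", "node_modules", ".venv"}  # hypothetical exclusions


def redact(secret: str) -> str:
    """Keep only a short prefix and suffix so reports never leak the key."""
    return secret[:6] + "..." + secret[-4:]


def scan_text(text: str):
    """Yield (line_number, redacted_key) for each candidate key in text."""
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in KEY_PATTERN.finditer(line):
            yield lineno, redact(match.group(0))


def scan_repo(root: str):
    """Walk a checkout and return (relative_path, line, redacted_key) findings."""
    root_path = Path(root)
    findings = []
    for path in root_path.rglob("*"):
        rel = path.relative_to(root_path)
        if not path.is_file() or any(part in SKIP_DIRS for part in rel.parts):
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # skip binary or unreadable files
        for lineno, redacted in scan_text(text):
            findings.append((str(rel), lineno, redacted))
    return findings


sample = 'OPENAI_API_KEY = "sk-proj-abcdefghijklmnopqrstuvwxyz123456"'
print(list(scan_text(sample)))  # [(1, 'sk-pro...3456')]
```

Redacting matches before they reach logs or tickets matters here: a scanner that prints full keys simply moves the exposure somewhere else. Any hit should trigger rotation, not just removal from the file.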

Map detections to the relevant MITRE ATT&CK techniques, such as Web Service command and control and exfiltration over application-layer channels. Where policy permits, apply TLS inspection to the highest-risk egress segments, and integrate data loss prevention (DLP) controls to alert on sensitive content flowing to AI endpoints.
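Tagging alerts with ATT&CK technique IDs and checking outbound bodies for sensitive content might look like the sketch below. The technique IDs are real ATT&CK identifiers (T1102 Web Service, T1071.001 Web Protocols, T1041 Exfiltration Over C2 Channel, T1567 Exfiltration Over Web Service), but the detection names, the choice of which technique each maps to, and the two regex patterns are illustrative assumptions. Inspecting request bodies at all presumes TLS inspection is in place.

```python
import re

# Hypothetical detection names mapped to MITRE ATT&CK technique IDs.
ATTACK_MAPPING = {
    "ai_endpoint_beaconing": ["T1102", "T1071.001"],  # Web Service; Web Protocols
    "ai_c2_result_upload": ["T1041"],                 # Exfiltration Over C2 Channel
    "sensitive_upload_to_ai": ["T1567"],              # Exfiltration Over Web Service
}

# Illustrative DLP-style patterns; real DLP policies are far richer.
SENSITIVE_PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}


def sensitive_hits(body: str) -> list[str]:
    """Return the names of sensitive patterns found in an outbound request body."""
    return [name for name, pat in SENSITIVE_PATTERNS.items() if pat.search(body)]


def build_alert(detection: str, source_host: str, **details) -> dict:
    """Attach ATT&CK technique IDs so alerts can be triaged by technique."""
    return {
        "detection": detection,
        "source_host": source_host,
        "attack_techniques": ATTACK_MAPPING.get(detection, []),
        **details,
    }


body = "customer record: 123-45-6789"
hits = sensitive_hits(body)
if hits:
    print(build_alert("sensitive_upload_to_ai", "file-server-07",
                      destination="api.openai.com", patterns=hits))
```

Keeping the mapping in one table makes it easy to report coverage by technique and to spot gaps, such as having beaconing detections but nothing for data leaving over the same channel.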

What it means for enterprise AI security and defense

The SesameOp case is a stark reminder that AI platforms are now firmly part of the enterprise attack surface. Generative AI governance must extend beyond model safety to cover network policy, logging, abuse detection, and incident response playbooks tailored to AI API traffic.

Microsoft’s investigators are clear that this is not a vulnerability in OpenAI’s platform. It is a lesson in how adept adversaries are at turning trusted tools to their own ends. Closing the gap will require AI platform owners, security teams, and vendors to work together more closely on richer audit trails, anomaly alerts, and least-privilege patterns for API access, so that the next espionage backdoor cannot hide in plain sight.