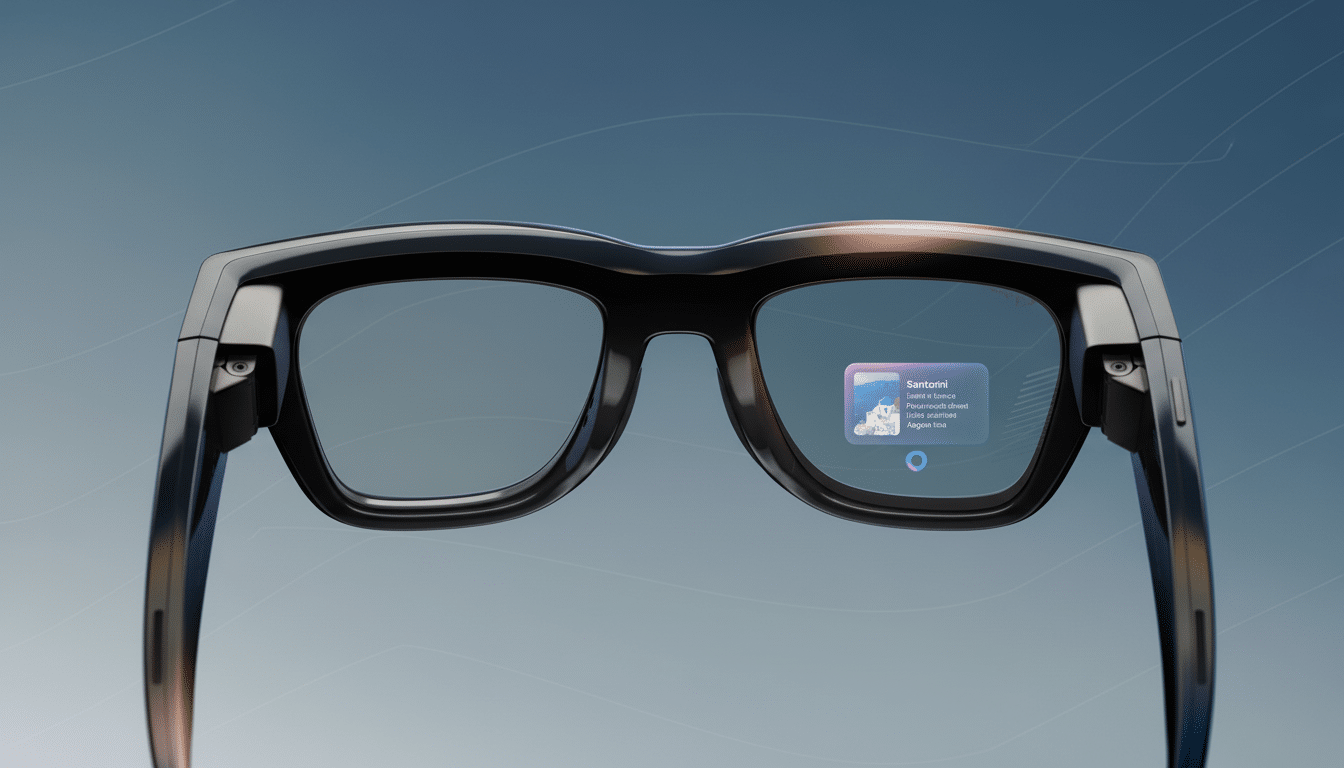

Meta is taking a major step toward bringing everyday augmented reality to the masses with new Ray-Ban smart glasses that embed a transparent display in the right lens. Priced at $799 and shipping with a wrist-worn controller, the eyewear layers glanceable information (maps, messages, camera previews, translations, and Meta AI responses) on top of your field of view without the bulk of a headset.

What the In-Lens Display Is Actually Showing

The display works as a subtle head-up layer. You can summon turn-by-turn directions; read messages from WhatsApp, Messenger, Instagram, or your phone; preview photos and videos before sending them; and view visual answers from Meta’s AI assistant. Unlike previous audio-only glasses, you’re not guessing what is happening: you see it.

Meta says the visual interface will also support live captions and on-device translation, which could be game-changing in noisy environments or for users who are hard of hearing. During a keynote demo, speech appeared on the lens as subtitles, an accessibility use case that wearables have long promised but rarely delivered well.

Hands-Free Control Through the Neural Band

Navigation relies on the Meta Neural Band, a wrist-worn device that uses surface electromyography (EMG) to detect the tiny electrical signals your muscles produce when you move your hand. With subtle finger movements (pinch, tap, and slide) rather than broad arm gestures, you can turn the volume up or down, skip tracks, snap photos, and swipe through on-screen cards.

This approach traces back to Meta’s 2019 acquisition of CTRL‑Labs, a neurotech startup that touted low-effort, low-latency wrist-based input. EMG should offer more precise control than camera-based gesture tracking and is less socially awkward in public, which matters for mass-market wearables.

Camera + AI: Seeing and Explaining the World

Built-in cameras, microphones, and speakers allow the system to “see” what you see. You can ask Meta AI whether the produce on your counter looks ripe, request a quick recipe, or have visible signage translated. The in-lens camera viewfinder is a real upgrade over previous models, which forced you to guess at the framing of a shot.

This AI-on-your-face approach reflects a larger industry trend toward multimodal assistants. It’s not simply a voice whispering in your ear; it’s context-aware computing, on the fly, based on what the camera sees.

Messaging, Calls, and Everyday Usage Details

You can take video calls over WhatsApp or Messenger, and callers will be able to see your view. Text and multimedia notifications appear in-lens, and a discreet dial-turning gesture raises or lowers music volume without your having to touch your phone. Meta says “phone-free” directions will roll out in select locations at first, giving you a map view that sits in your line of sight.

Meta is also experimenting with handwriting-style text input, allowing you to “write” on a surface in order to type messages — a useful option when voice won’t do and tapping on a watch screen seems too cramped.

Design, Battery Life, Comfort, and Fit Considerations

The glasses retain the familiar Ray-Ban silhouette, and they weigh in at around 69 grams — heavier than typical sunglasses but much, much lighter than AR headsets. Frames are offered in two sizes and two finishes (black or sand), with Transitions lenses to adjust to light.

Meta rates the glasses for up to six hours of typical use; the Neural Band is rated for up to 18 hours and carries an IPX7 water resistance rating. The package will be sold through retail chains in the U.S., including Best Buy, LensCrafters, Sunglass Hut, and Ray-Ban stores.

Privacy and Safety Signals for Smart Glasses

Meta’s earlier smart glasses had a capture LED to indicate when the cameras were recording, and the company says visual indicators will be part of the design here. “Meta’s previous smart glasses included a capture LED to show when cameras were recording; we’re learning,” Meta spokesperson Alex Colev said in an email.

That’s crucial from a trust standpoint; regulators and campaigners have warned that always-on lenses could too easily become an accepted standard for covert recording.

Best practice still applies: comply with local laws, respect no-recording zones, and get consent when reasonable. Clear, bright indicators and audible cues are table stakes when it comes to wearable cameras, privacy researchers and digital rights organizations have said.

Where These Glasses Fit in the Broader AR Race

Analysts at firms like IDC and CCS Insight have long argued that glasses, not unwieldy headsets, would serve as the bridge to mass-market AR. Meta’s strategy threads that needle: retaining the familiar form factor, adding a minimal display layer, and relying on AI for context.

The company also unveiled new Ray-Ban Meta glasses without a display, as well as an Oakley-branded model with a camera positioned at the center of the frame, indicating it is building out multiple tiers of wearable technology. Compared with the tethered AR viewers and premium-priced mixed reality headsets that have captured so much attention over the years, these in-lens display glasses favor convenience over immersion: more “assistant on your face” than full-on holograms.

Early Outlook for Meta’s Ray-Ban Display Glasses

Success will depend on three factors: display readability in bright sun, EMG controls that stay reliable amid everyday commotion, and battery life that lasts through a busy day. If Meta executes well, these glasses could take smart eyewear from novelty to necessity, at least for navigation, messaging, and accessibility applications.

Not all of us need a screen in our sunglasses, but the pitch is compelling: glanceable information when you want it, none when you don’t, and no phone in your hand. That combination of subtlety and usefulness is exactly what earlier attempts at smart glasses lacked.