Meta is using this year’s Connect to take its smart glasses from novelty to something resembling a necessity. Planned reveals include a new line of Meta Ray-Bans, an even higher-end “Hypernova” model, and a fresh Oakley collaboration. The throughline is clear: display-forward wearables, neural input, and a developer-ready platform that makes glasses useful the moment you put them on.

Ray-Ban Display and Ray-Ban 3: Two tracks, one ecosystem

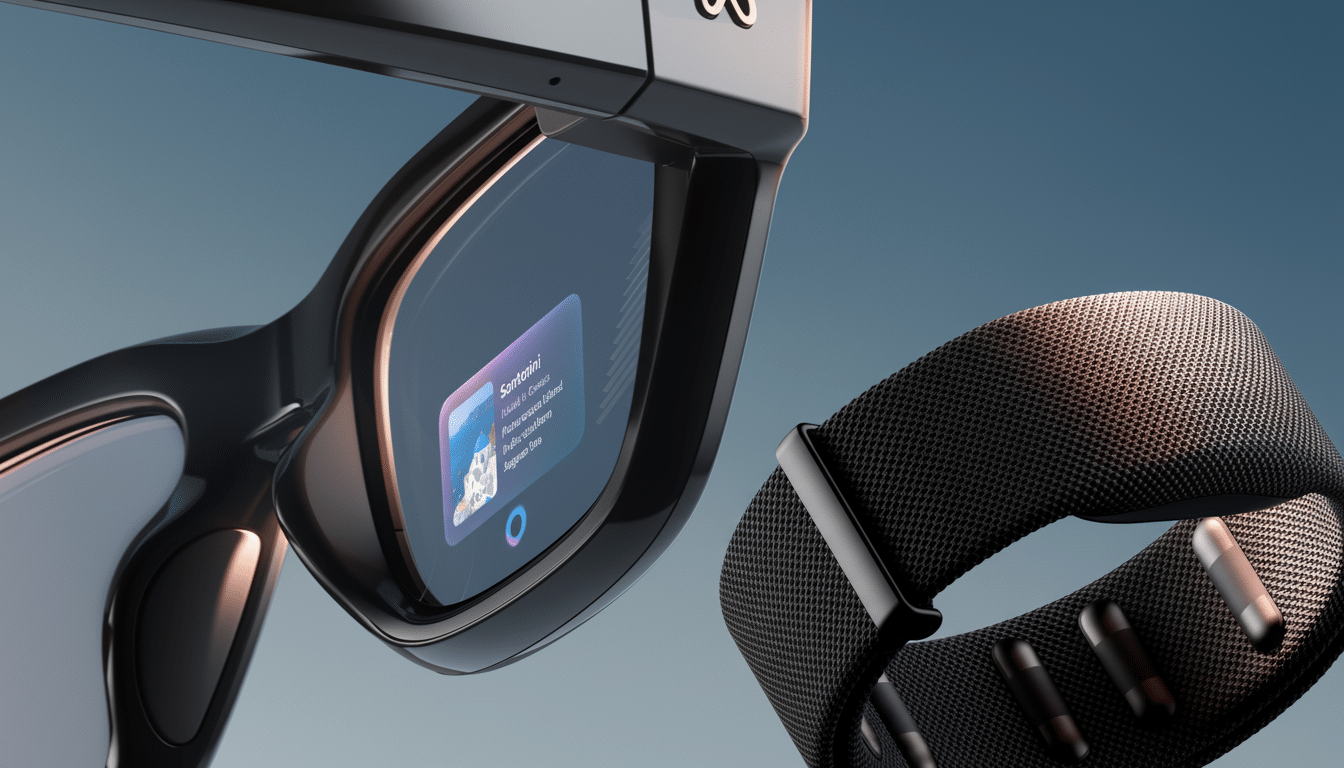

Meta is ready to split the Ray-Ban family in two directions. First up is a Ray-Ban Display model with a monocular heads-up display that keeps key information, such as translations, directions, or messages, in your field of view without the heavier lift of true AR spatial anchoring. A leaked teaser showed UI elements pinned to the corner of the lens, a sensible trade-off that prioritizes battery life and brightness while keeping the look of a recognizable Ray-Ban accessory.

Second, the Meta Ray-Ban 3 leans into what helped the line take off: a camera-first wearable with improved optics, clearer mics, and comfort tuning for all-day wear. Last year’s glasses brought multimodal AI assistance and live translation; expect faster on-device processing and longer battery life this time. Because Meta partners with EssilorLuxottica, the frames should still look like eyewear first and tech second, which is a must-have for mass-market success.

An sEMG wristband is also expected to launch alongside the display-capable glasses. As Meta’s research arm has demonstrated, wrist electromyography can detect micro-movements with submillimeter resolution, translating a finger pinch into a click or a slight wrist rotation into a scroll. If this ships as a lightweight “neural” bracelet, it could solve the biggest input problem for glasses: how to control a UI without your voice echoing across the room.

Hypernova: High-end glasses with a UI you can’t miss

As reported by Bloomberg’s Mark Gurman, Meta’s top-of-the-line model, codenamed Hypernova and rumored to carry the “Celeste” name, will aim for a price of around $800, or potentially higher depending on configuration. A monocular display panel in the bottom right of the lens pops up a discreet home screen with at-a-glance information and preloaded apps, including camera, gallery, and maps, and shows phone notifications when paired.

Hypernova will be closely integrated with a neural wristband, codenamed Ceres, that interprets muscle signals to control the UI. Early imagery circulating in developer circles depicts the device as a cloth strap containing high-performance EMG sensors, an onboard processor, and haptics for click-level feedback. If the execution lives up to the demos of Meta’s work I’ve seen at academic conferences, it could make voice optional and gestures near-invisible, two changes that count for a lot out in public.

Oakley Meta Sphaera: Smart eyewear comes for your sport

An updated Oakley collab, said to be called the Oakley Meta Sphaera, brings a centered camera and wraparound lens geometry to a performance frame. The play here is obvious: athletes, creators, and average Joes all want stabilized, hands-free POV video without a helmet mount. Oakley’s sporting pedigree should deliver grip, balanced weight, and sweat resistance that everyday glasses can’t match, while Meta’s software could auto-level, caption, and clip footage on the fly.

Expect a familiar set of privacy protections, such as a capture indicator light and audible cues, which regulators and civil society groups like the Electronic Frontier Foundation have pushed as table stakes for any camera-on-face product.

Quest Horizon OS broadened with Asus ROG Tarius

Meta’s open-platform bet continues to play out, with an upcoming Asus ROG Tarius headset expected to join the Horizon OS ecosystem. Early leaks suggest eye and face tracking, as well as micro-OLED or quantum-dot LCD panels with local dimming for better contrast, specs geared toward competitive gaming where latency and visual fidelity are non-negotiable. It is one of the first third-party headsets for Meta’s OS since the company announced it would invite partners (like Asus and Lenovo) to build devices on its stack.

The strategy closely resembles successful playbooks in mobile: grow the installed base across multiple hardware brands while keeping developers on a single distribution and identity layer. For VR/AR studios, this could mean a larger total addressable market without the burden of multi-platform ports.

Why this shift in smart glasses matters right now

For years, analysts have predicted that the next wave of consumer tech will be far more intimate: think smart glasses, not monolithic headsets. IDC forecasts sustained double-digit growth for the AR/VR market over the next few years as software matures, more content becomes available, and prices fall, with wearables becoming normal as the hardware shrinks. If Meta can ship display glasses you actually want to be seen in, with controls that don’t make you feel ridiculous, it might finally push the category beyond early adopters.

There are real constraints. Outdoor brightness and color uniformity remain challenges for waveguide optics, and every milliamp-hour counts when you want to keep frames under 50 g. Display market research firm Display Supply Chain Consultants (DSCC) has been noting that costs and yields for advanced optics remain stubborn, which is why pragmatic monocular designs are appearing first. Expect Meta to emphasize efficiency gains as much as flashy features.

What to look for in Meta’s Connect 2025 announcements

Expect a clear divide between camera-first and display-first glasses, and watch how Meta positions each for daily use cases like translation, turn-by-turn navigation, and messaging.

Pay attention to input. If the Ceres wristband delivers robust EMG controls and haptics, it could supply the missing piece for silent, accurate interaction on the move.

For developers, the most pressing question is API depth: how many display primitives, notification pipelines, and gesture events will be available across all glasses, not just the flagship?

Finally, look for concrete privacy commitments, including opt-in data collection and on-device processing. Consumer confidence will dictate how quickly smart glasses grow beyond a niche.