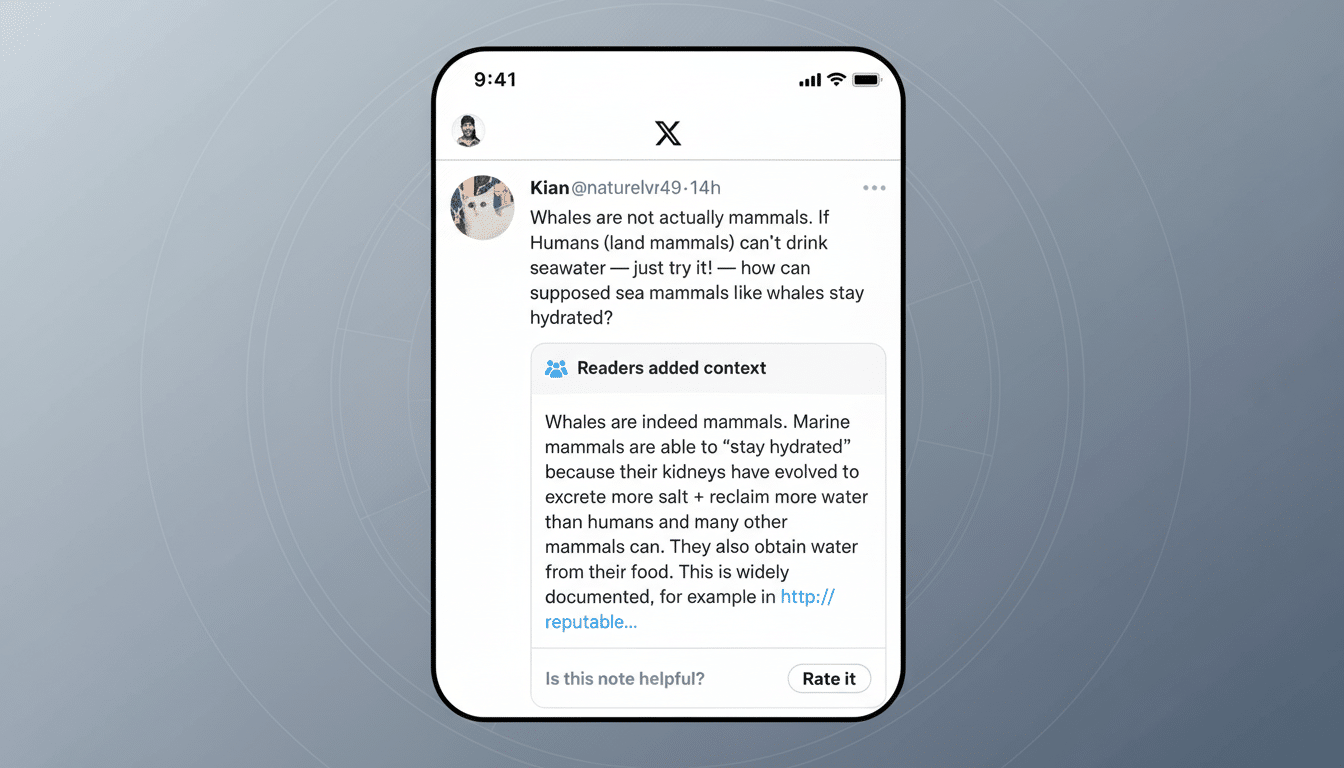

Meta is introducing new features to bolster its Community Notes fact-checks on Facebook, Instagram and Threads, including alerts telling people that a post they liked, commented on or shared later received a corrective note. The company is also expanding access to two participation features: anyone can now call for a note on a post and rate whether an existing note is helpful.

What’s new, and how it works

The headline change is notifications. If you’ve interacted with a post that receives a Community Note, you will get an alert linking to the context that was added. That directly targets the “zombie share” problem, in which misinformation keeps spreading long after it has been debunked, by putting the correction in front of the people who engaged with the original post.
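A minimal sketch of the fan-out step described above, assuming a hypothetical engagement log of (user, post, action) records: when a note is published on a post, every user who liked, commented on or shared it is collected exactly once. The `Engagement` type and `users_to_notify` function are illustrative names, not Meta's API, and a real system would also need rate limiting and delivery logic.

```python
from dataclasses import dataclass

# Engagement kinds the article says trigger an alert.
ENGAGEMENT_KINDS = {"like", "comment", "share"}


@dataclass(frozen=True)
class Engagement:
    user_id: str  # hypothetical user identifier
    post_id: str  # hypothetical post identifier
    kind: str     # one of ENGAGEMENT_KINDS


def users_to_notify(post_id: str, engagements: list[Engagement]) -> list[str]:
    """Return each user who engaged with post_id once, in first-engagement order."""
    seen: set[str] = set()
    recipients: list[str] = []
    for e in engagements:
        if e.post_id == post_id and e.kind in ENGAGEMENT_KINDS and e.user_id not in seen:
            seen.add(e.user_id)
            recipients.append(e.user_id)
    return recipients


log = [
    Engagement("ana", "p1", "like"),
    Engagement("ben", "p1", "share"),
    Engagement("ana", "p1", "comment"),  # ana already queued, so no second alert
    Engagement("cai", "p2", "like"),     # different post, not notified
]
print(users_to_notify("p1", log))  # ['ana', 'ben']
```

Deduplicating per user matters because one person can like, comment on and share the same post; sending three alerts for one correction is exactly the kind of over-notification the article warns about.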

Meta’s model, inspired by the crowdsourced approach popularized on X, publishes a note only after contributors who typically disagree with one another rate it as helpful. Meta CISO Guy Rosen said more than 70,000 contributors have written more than 15,000 notes so far, with about 6% (on the order of 900 notes) clearing the bar for publication. That low publication rate reflects a high consensus threshold, meant to blunt partisan pile-ons and to ensure that notes add verifiable context rather than opinion.
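To make the consensus requirement concrete, here is a deliberately simplified rule, assuming each rater carries a hypothetical viewpoint label: a note publishes only if at least two viewpoint groups have each rated it enough times and each group independently finds it helpful. This is not Meta's algorithm; X's open-source Community Notes scorer uses a more elaborate matrix-factorization approach, and the `threshold` and `min_per_group` values here are arbitrary assumptions.

```python
from collections import defaultdict
from typing import Iterable


def should_publish(
    ratings: Iterable[tuple[str, bool]],
    threshold: float = 0.7,  # hypothetical: minimum helpful share within each group
    min_per_group: int = 5,  # hypothetical: ratings a group needs before it counts
) -> bool:
    """Return True only if at least two viewpoint groups each rate the note helpful."""
    totals: dict[str, int] = defaultdict(int)
    helpful: dict[str, int] = defaultdict(int)
    for group, is_helpful in ratings:
        totals[group] += 1
        helpful[group] += int(is_helpful)
    # Only groups with enough ratings take part in the decision.
    groups = [g for g in totals if totals[g] >= min_per_group]
    if len(groups) < 2:
        return False  # no cross-viewpoint evidence yet
    # Every participating group must independently find the note helpful.
    return all(helpful[g] / totals[g] >= threshold for g in groups)


one_sided = [("A", True)] * 20 + [("B", False)] * 6
bridging = [("A", True)] * 8 + [("B", True)] * 7 + [("B", False)]
print(should_publish(one_sided))  # False
print(should_publish(bridging))   # True
```

The one-sided note fails despite having more ratings, because one group rejects it. That property is what makes pile-ons less effective, and it is also why only a small fraction of notes clear the bar.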

The company describes these additions as tests. Still, letting anyone request a note and rate its helpfulness should increase the supply of candidate notes and the pace at which helpful ones rise to the top.

Why alerts might matter

Studies from academic and industry groups have repeatedly found that timely labels and added friction can slow the spread of misinformation. The new notifications are designed to be timely: they reach people soon after they have engaged, when they are more likely to revisit what they believed or to hold off on spreading the content further.

Think of a breaking-news rumor during a wildfire or on election night. By the time a fact-check is attached, thousands of people may already have seen the claim. Notifying those specific people could help curb further spread and prompt corrections in the comment threads and group chats where the original claim gained traction.

Scale, speed and the consensus trade-off

Crowdsourced context has potential, but scale is the stumbling block. The problems do not appear markedly different from those of similar programs on other platforms, where many accurate notes reportedly arrive too late or never appear at all. The Center for Democracy and Technology, for example, found cases in which most accurate notes attached to U.S. election misinformation were never shown to users, a sobering gap between writing a note and having it seen.

Meta’s 6% publication rate is largely by design: requiring cross-ideological agreement lends notes legitimacy but also slows coverage, especially during fast-moving news cycles. The danger is that a small, carefully vetted flow of notes cannot keep pace with viral falsehoods. Notifications help only if enough notes are published quickly enough on the posts that matter.

The tough cases: visual content and private spaces

Instagram Reels, Stories, and other short-form video make fact-checking harder because important context can lie in visuals, edits, or audio, and a single text note may not convey how a clip was cropped or mis-captioned. Private Groups and messaging on Facebook pose similar challenges: even when a note exists, it may not easily break into a closed community where a false claim is circulating.

Opening note requests to everyone could surface problems in those environments faster. But the system will need purpose-built designs for video-first content, along with ways to reach people in semi-private spaces who would otherwise never see a correction, without over-alerting them.

Data, transparency, and guardrails

Policy and research groups, including the Center for Democracy and Technology and fact-checking organizations such as the International Fact-Checking Network, have called on platforms to disclose more metrics. For Community Notes, the most valuable signals would include the average time-to-note for viral posts, the share of people who see a note after interacting with a post, and how often notifications change behavior (for example, less resharing or more corrections in comments).
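Two of the metrics named above are straightforward to compute once the underlying events are logged. The sketch below assumes a hypothetical per-post record with posting and note timestamps plus sets of engaged users and users who later viewed the note; the field and function names are illustrative, not any platform's reporting schema, and the sample records are made up. It reports the median rather than the average time-to-note, an assumption chosen because a few very slow notes would otherwise dominate the figure.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class NotedPost:
    posted_at: datetime
    note_published_at: datetime
    engaged_users: set[str]          # hypothetical: users who liked, commented or shared
    users_who_viewed_note: set[str]  # hypothetical: users who later opened the note


def median_hours_to_note(posts: list[NotedPost]) -> float:
    """Median delay between a post going up and its note being published, in hours."""
    return median(
        (p.note_published_at - p.posted_at).total_seconds() / 3600 for p in posts
    )


def note_view_rate(posts: list[NotedPost]) -> float:
    """Share of engaged users who went on to view the note on the post they engaged with."""
    engaged = sum(len(p.engaged_users) for p in posts)
    # Intersect so that viewers who never engaged with the post are not counted.
    viewed = sum(len(p.engaged_users & p.users_who_viewed_note) for p in posts)
    return viewed / engaged if engaged else 0.0


posts = [
    NotedPost(datetime(2025, 1, 1, 8), datetime(2025, 1, 1, 14), {"a", "b", "c", "d"}, {"a", "b"}),
    NotedPost(datetime(2025, 1, 2, 9), datetime(2025, 1, 3, 9), {"e", "f"}, {"f", "z"}),
]
print(median_hours_to_note(posts))  # 15.0
print(note_view_rate(posts))        # 0.5
```

Restricting the view count to the intersection means a viewer who never engaged with the post ("z" above) does not inflate the rate, so the number reflects whether corrections actually reach the people who saw the original claim.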

Another priority is defense against brigading. Systems that require consensus across diverse raters help, but they are not immune to organized campaigns. Transparency about how contributor reliability is scored, how opposing-viewpoint signals are calculated and how appeals work would build confidence among users and researchers.

What to watch next

If the tests go well, a broader rollout is planned, including expansion to more regions and languages, deeper integration with professional fact-checking partners and more sophisticated treatments for video. The open question is whether alerts and open requests will expand the breadth and speed of useful notes without overwhelming people with notifications.

The stakes are clear: crowdsourced context can offer agility and legitimacy, but only if the people at risk of being misled see it in time. Meta’s newest features take aim at that bottleneck directly. Now the company needs to show, with data, that its corrections are not just accurate but visible.