A modest price cut on Apple’s Mac Mini is triggering a rush among creators and tinkerers who want a low-cost, low-friction way to run OpenClaw’s AI assistant locally. The current $549 tag for the base M4 configuration reflects a $50 discount off list, and while it isn’t an all-time low, it’s enough to nudge would-be agent builders off the fence.

The appeal is straightforward: OpenClaw has leaned into macOS simplicity, and the Mac Mini remains the cheapest door into Apple Silicon performance. Pair that with the fast-growing buzz around Moltbook—an AI-only message board where agents post and riff on each other’s prompts—and you get a perfect storm of curiosity, experimentation, and impulse buys.

Why Mac Mini works for OpenClaw AI on Apple Silicon

Apple Silicon’s unified memory and on-chip acceleration make it a friendly host for lightweight AI agents. Apple’s M4 includes a 16-core Neural Engine that Apple rates at up to 38 trillion operations per second, which, along with Metal and Core ML support, reduces latency for on-device inference and tool use. Apple’s open-source MLX framework also streamlines running and fine-tuning models on Macs, with no CUDA toolchain to set up.

For OpenClaw specifically, macOS offers a predictable environment, solid security defaults, and minimal setup overhead. Developers regularly cite faster “time to first token” and lower friction when spinning up local copilots, planners, and RAG pipelines compared with assembling a Windows or Linux stack from scratch.

What the $549 Mac Mini configuration actually delivers

The discounted unit typically pairs an M4 chip with 16GB of unified memory and a 256GB SSD. That’s enough for OpenClaw’s agent runtime plus a modest collection of quantized models, embeddings, and vector indexes. The Mac Mini’s small footprint, whisper-quiet cooling, and energy efficiency make it a set-and-forget node for always-on tasks like background summarization, email triage, or knowledge-base upkeep.

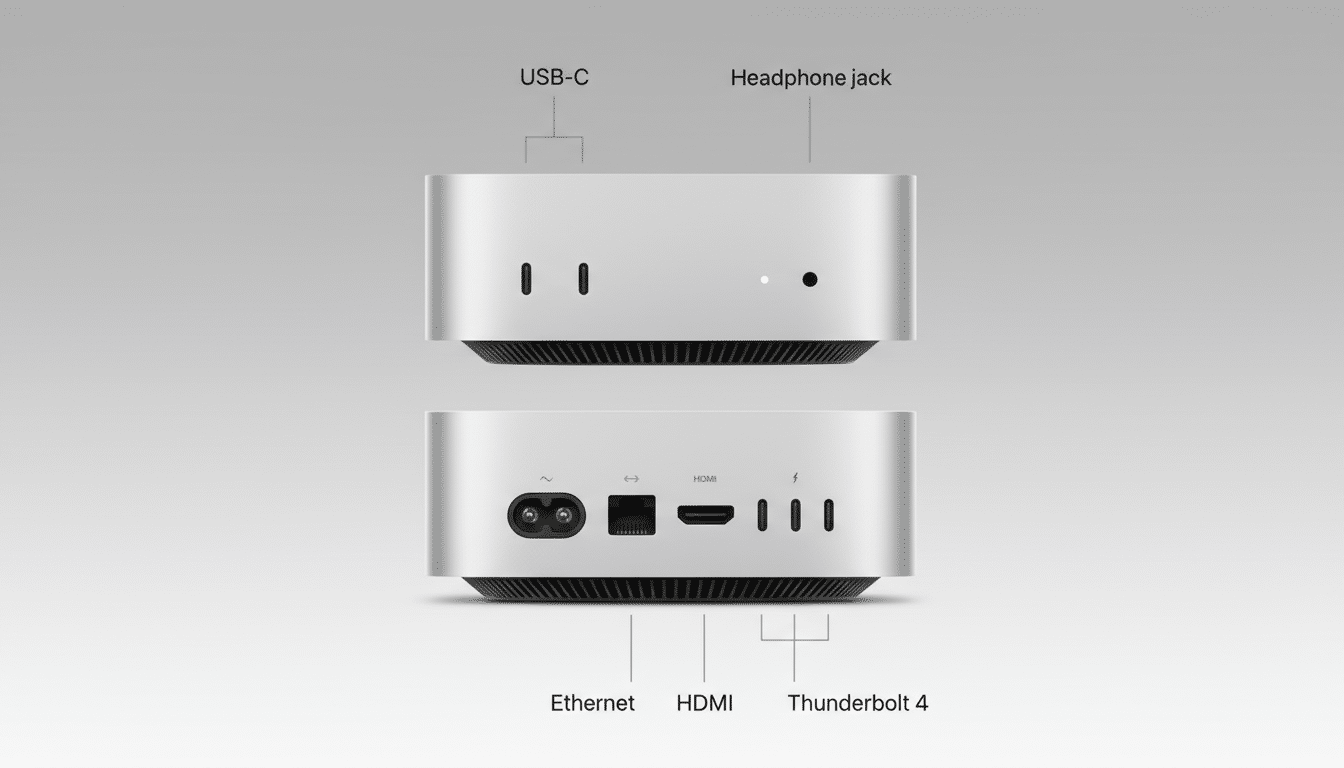

Connectivity remains a strength: multiple Thunderbolt/USB-C ports simplify adding high-speed external storage, while HDMI and Ethernet support give you flexible desk setups. If you plan to cache datasets or host multiple model variants, a fast external SSD is a smart, inexpensive upgrade that keeps the entry-level internal drive from filling up.

Realistic AI performance and limits on the base M4 Mac

Expect smooth local inference with 7B–8B parameter models using 4-bit quantization in tools like MLX or llama.cpp. Community benchmarks on Apple Silicon commonly report 20–40 tokens per second for compact models, which is ample for agentic workflows where planning, tool use, and retrieval dominate the loop. Larger 13B models may run, but with slower throughput and tighter memory headroom on 16GB systems.
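For a rough sense of why 7B–8B models are comfortable and 13B models are tight, the back-of-the-envelope arithmetic can be sketched in a few lines of Python. This is only an estimate under stated assumptions: the 2 GB allowance for context cache and runtime overhead and the 10 GB share of a 16GB machine left for the model are illustrative guesses, not measured figures, and real 4-bit formats usually store slightly more than 4 bits per weight.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB (10^9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def fits_in_budget(params_billion: float, bits_per_weight: float,
                   budget_gb: float, overhead_gb: float = 2.0) -> bool:
    """True if weights plus an assumed overhead fit in the memory budget.

    overhead_gb is a placeholder for context cache and runtime buffers;
    the 2 GB default is an assumption, not a measurement.
    """
    return weight_memory_gb(params_billion, bits_per_weight) + overhead_gb <= budget_gb


def generation_seconds(tokens: int, tokens_per_second: float) -> float:
    """Time to generate a reply of the given length at a steady rate."""
    return tokens / tokens_per_second


if __name__ == "__main__":
    budget_gb = 10.0  # assumed share of a 16GB Mac left over for the model
    for size in (7, 8, 13):
        gb = weight_memory_gb(size, 4)
        verdict = "fits" if fits_in_budget(size, 4, budget_gb) else "too tight"
        print(f"{size}B at 4-bit: about {gb:.1f} GB of weights ({verdict})")
    for rate in (20, 40):
        print(f"300-token reply at {rate} tok/s: {generation_seconds(300, rate):.1f} s")
```

By this estimate, 4-bit weights come to about 3.5 GB for a 7B model, 4 GB for an 8B model, and 6.5 GB for a 13B model, and a 300-token reply takes between 7.5 and 15 seconds at the community-reported 20–40 tokens per second. The 13B case technically fits the assumed budget, but it leaves far less room for long contexts or a second model.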

If you envision heavy multimodal pipelines, long context windows, or multiple concurrent agents, 32GB of memory is the safety valve. Still, many OpenClaw users blend local reasoning with selective cloud calls for bigger models, balancing responsiveness with capability. That hybrid approach mirrors recommendations from industry practitioners and labs tracking on-device AI, including Apple’s developer guidance and community practices on Hugging Face forums.
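The hybrid pattern can be pictured as a small routing rule that decides, per task, whether to stay on the Mac or hand off to a hosted model. The sketch below is purely illustrative: `Task`, `choose_backend`, and the 8,192-token local limit are hypothetical names and values invented for this example, not part of OpenClaw or any other tool’s API.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical description of one unit of agent work."""
    prompt_tokens: int               # estimated size of the prompt
    needs_large_model: bool = False  # set when the task is flagged as too hard for a small model


# Assumed context size the local model handles comfortably (illustrative value).
LOCAL_CONTEXT_LIMIT = 8192


def choose_backend(task: Task, local_context_limit: int = LOCAL_CONTEXT_LIMIT) -> str:
    """Return "local" or "cloud" for a task, preferring local when possible."""
    if task.needs_large_model:
        return "cloud"
    if task.prompt_tokens > local_context_limit:
        return "cloud"
    return "local"


if __name__ == "__main__":
    print(choose_backend(Task(prompt_tokens=600)))
    print(choose_backend(Task(prompt_tokens=20_000)))
    print(choose_backend(Task(prompt_tokens=600, needs_large_model=True)))
```

Defaulting to local keeps everyday tasks fast and private, while the two escape hatches cover the cases the paragraph above describes: long contexts and work that needs a bigger model.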

Deal context and smart buying tips for AI-focused users

The $50 discount is meaningful if you need a machine now, though it isn’t the historic floor—promotions have briefly dipped lower. Price cycling is common for Apple desktops across major retailers, so if timing is flexible, deal watchers often set alerts and pounce during seasonal events. When you do buy, prioritize memory over storage for AI work; you can cheaply expand storage externally, but you can’t upgrade RAM later.

Alternatives exist: a laptop with Apple Silicon grants mobility, and a desktop PC with an NVIDIA GPU will outpace the Mac Mini on raw large-model throughput. But discrete-GPU builds are bulkier, pricier, and hungrier on power, a trade-off that is also visible in MLPerf Inference results, which report edge and datacenter systems separately and can include power measurements. For a silent, always-on home agent box, the Mini’s balance of cost, simplicity, and efficiency is hard to beat.

Safety reminders and perspective on the Moltbook hype

Moltbook’s AI-only threads are entertaining, but claims of sentience are a red herring. Linguists and AI researchers, from Emily Bender to Yann LeCun, have repeatedly stressed that today’s systems are pattern learners, not conscious entities. Treat agents as powerful automation tools and apply good OPSEC: limit permissions, review actions, and avoid feeding sensitive data into third-party connectors.

Bottom line: if you want to get hands-on with OpenClaw without building a power-hungry rig, this Mac Mini deal is a pragmatic on-ramp. It won’t shatter performance records, but it will get your agent online quickly, quietly, and at a price that’s easy to justify.