LangChain, the open-source framework powering a wave of ‘agentic’ AI apps, has reached a $1.25 billion valuation as it doubles down on tools that let large language models plan, call external APIs, and work across data sources with more autonomy. Alongside the milestone, the company announced updates across its stack, including its agent builder LangChain, the orchestration layer LangGraph, and the testing and observability suite LangSmith, in an apparent push from early experimentation to production readiness.

Agentic AI goes mainstream as enterprises seek orchestration

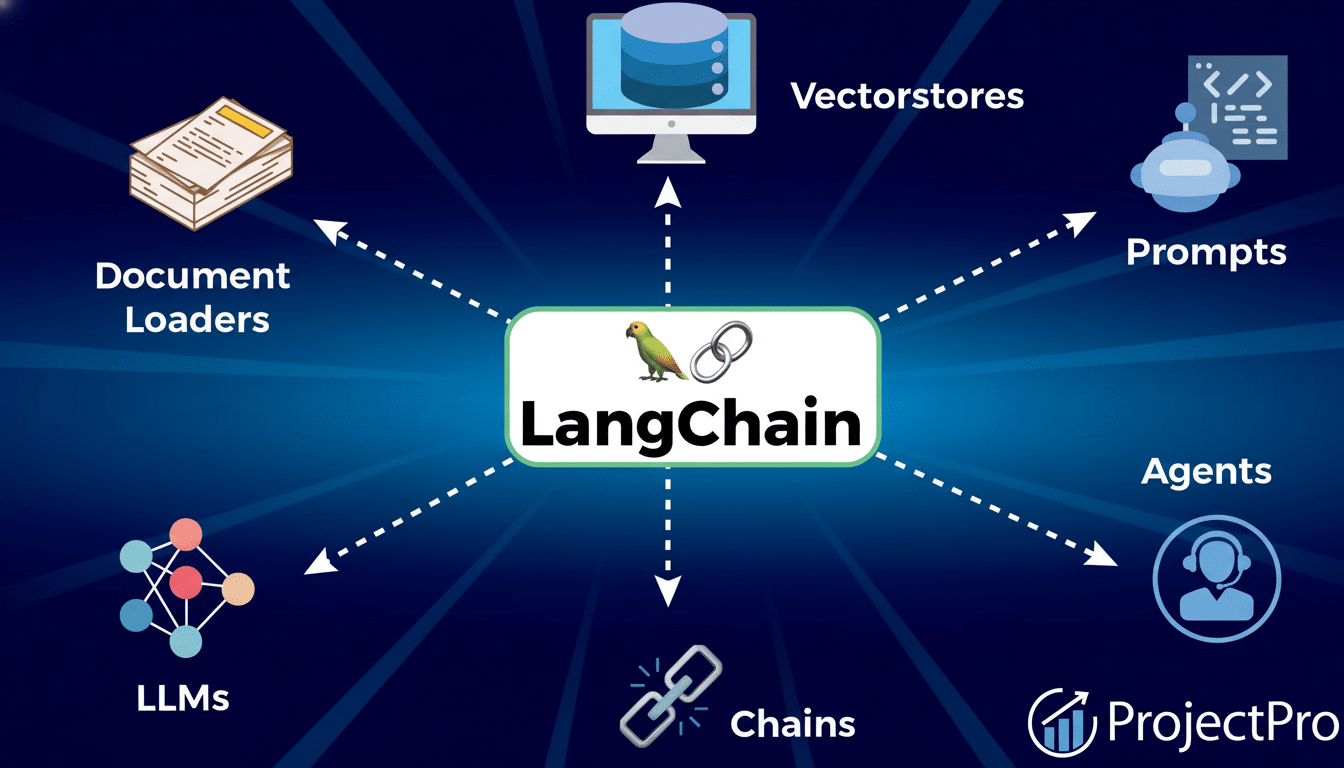

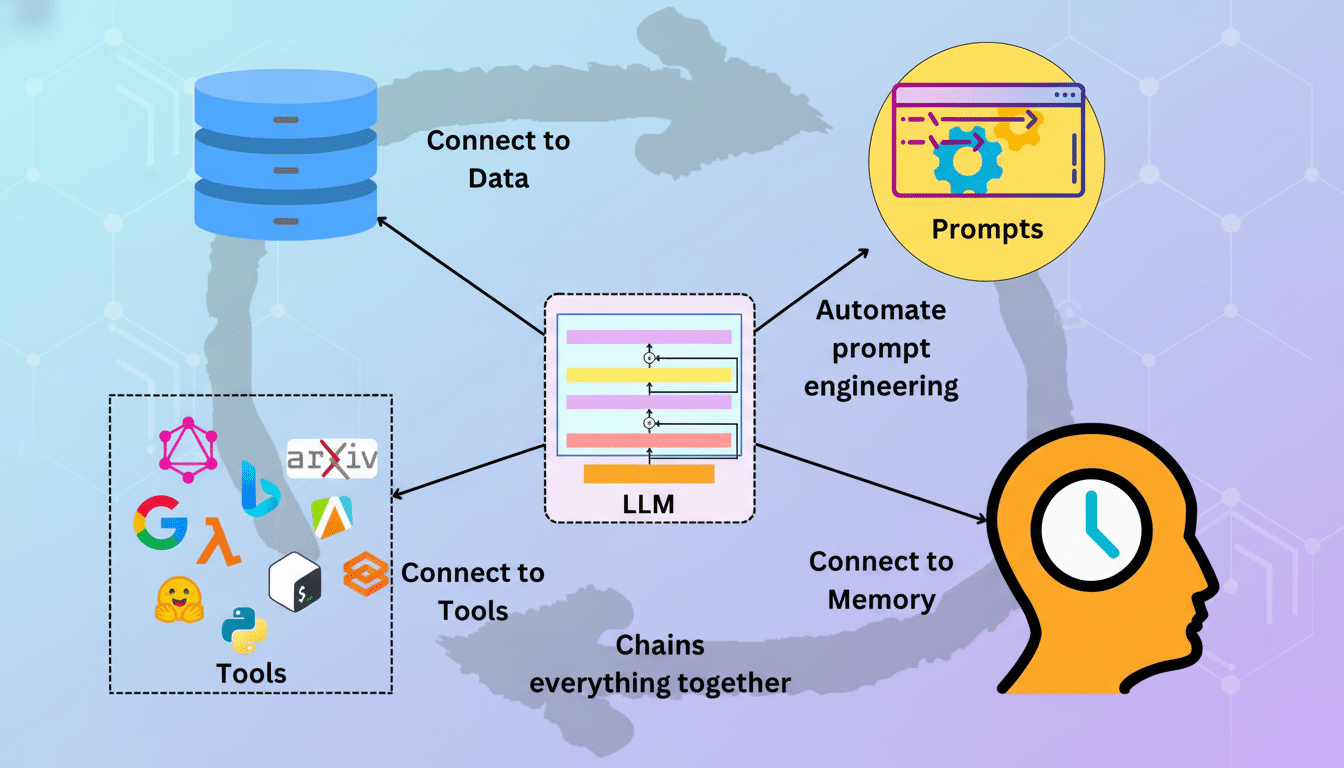

“Agentic” is the mot du jour in enterprise AI for systems that go beyond one-turn prompts and execute multi-step tasks, such as researching a topic, querying internal databases on behalf of users, calling tools, or assembling results into an action plan. It’s the lane LangChain has helped to define. The framework took off in 2022 by addressing unglamorous but crucial problems (tool calling, retrieval, and memory) that model providers did not yet handle well at the time.

As model makers introduce native agents and function calling, the center of gravity is moving toward robust orchestration, evaluation, and runtime controls. That’s where LangChain’s wager now lies: to provide an open-source toolkit that runs across models from OpenAI, Anthropic, Google, Meta, and Mistral, while also adding enterprise-grade testing and telemetry to tame real-world complexity.

From open-source roots to a hardened enterprise stack

Conceived by machine learning engineer Harrison Chase, LangChain launched as a side project and soon took off as the default choice for developers building their first LLM apps. The company later raised $10 million in seed funding, led by Benchmark, and a $25 million Series A, led by Sequoia, to build the commercial platform around this open-source core.

The community signal is strong: LangChain’s repositories alone have garnered over 100,000 stars and tens of thousands of forks, a measure of wide experimentation. That sort of grassroots momentum counts in a market where projects like LlamaIndex, Microsoft’s Semantic Kernel, or Haystack are all vying for developer mindshare. The edge that LangChain had was breadth — wrappers for many models and vector stores — and a quick iteration cycle, inspired by feedback from the open-source community.

Product updates across LangChain, LangGraph, and LangSmith

The current release cycle is dominated by production readiness. The LangChain agent builder is further tailored to multi-step work, with refinements to tool selection and control flow meant to avoid brittle one-shot behavior on multi-step tasks. LangGraph, the orchestration and memory layer, embraces deterministic state management: graph-like workflows, resumability, and guardrails that let teams encode when and how an agent should act.
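To make the idea of a resumable, graph-like workflow concrete, here is a minimal sketch in plain Python. It does not use LangGraph's API; the node names (`plan`, `act`, `finish`), the `max_calls` budget, and the JSON checkpoint format are all assumptions chosen for illustration.

```python
import json
from typing import Any, Callable, Dict, List, Optional, Tuple

State = Dict[str, Any]
Step = Tuple[State, Optional[str]]  # (new state, next node name, or None to stop)

def plan(state: State) -> Step:
    # Hypothetical planning node: records which tool to run next.
    return {**state, "plan": ["lookup"]}, "act"

def act(state: State) -> Step:
    # Guardrail: refuse to exceed the tool-call budget set by the caller.
    calls = state.get("calls", 0) + 1
    if calls > state["max_calls"]:
        return {**state, "error": "tool budget exceeded"}, None
    return {**state, "calls": calls, "result": f"ran {state['plan'][0]}"}, "finish"

def finish(state: State) -> Step:
    return {**state, "done": True}, None

NODES: Dict[str, Callable[[State], Step]] = {"plan": plan, "act": act, "finish": finish}

def run(state: State, node: Optional[str], checkpoints: List[str], max_steps: int = 10) -> Step:
    """Execute nodes in order, saving a JSON checkpoint before each step."""
    for _ in range(max_steps):
        if node is None:
            break
        checkpoints.append(json.dumps({"next": node, "state": state}))
        state, node = NODES[node](state)
    return state, node

# Simulate an interruption after one step, then resume from the saved state.
checkpoints: List[str] = []
partial, pending = run({"max_calls": 1}, "plan", checkpoints, max_steps=1)
saved = json.loads(json.dumps({"next": pending, "state": partial}))
final, pending_after = run(saved["state"], saved["next"], checkpoints)
```

Because every step is a pure function of the serialized state, the same checkpoint always resumes the same way, which is the property that makes such workflows easier to debug than a free-running agent loop.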

LangSmith expands testing and observability through improved trace capture, dataset-based testing, and regression testing of prompts and agent policies. This fits into a wider theme: companies have been looking for proof that AI systems keep improving and don’t quietly degrade after updates to models or prompts. The work reflects guidance from industry researchers and practitioners at institutions like Stanford HAI, the Allen Institute for AI, and model suppliers who advocate rigorous testing as deployments grow.
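Dataset-based regression testing can be sketched in a few lines of plain Python. This is not LangSmith's API; the two stand-in agents, the tiny dataset, and the substring-match scoring rule are assumptions made for illustration.

```python
from typing import Callable, List, Tuple

Agent = Callable[[str], str]
Dataset = List[Tuple[str, str]]  # (input question, substring expected in the answer)

def pass_rate(agent: Agent, dataset: Dataset) -> float:
    """Fraction of examples whose answer contains the expected substring."""
    passed = sum(
        1 for question, expected in dataset
        if expected.lower() in agent(question).lower()
    )
    return passed / len(dataset)

def is_regression(candidate: Agent, baseline: Agent, dataset: Dataset,
                  tolerance: float = 0.0) -> bool:
    """True if the candidate scores worse than the baseline beyond the tolerance."""
    return pass_rate(candidate, dataset) + tolerance < pass_rate(baseline, dataset)

# Hypothetical stand-ins for an agent before and after a prompt change.
def baseline_agent(question: str) -> str:
    return {"capital of France?": "Paris", "2 + 2?": "4"}.get(question, "unknown")

def candidate_agent(question: str) -> str:
    return {"capital of France?": "Paris"}.get(question, "unknown")

DATASET: Dataset = [("capital of France?", "paris"), ("2 + 2?", "4")]

baseline_score = pass_rate(baseline_agent, DATASET)
candidate_score = pass_rate(candidate_agent, DATASET)
regressed = is_regression(candidate_agent, baseline_agent, DATASET)
```

Run in continuous integration, a check like this would block a prompt or model update whose score falls below the stored baseline, much as a failing unit test blocks a code change.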

Combined, the updates tackle the toughest aspects of going live: reproducibility, cost control, latency trade-offs, and post-deployment monitoring. In practice, this results in fewer flaky agents and more dependable workflows for common use cases like customer support agents that escalate intelligently, data copilots that help produce and validate SQL, and research agents that weave together insights from internal and external corpora.

A crowded field and LangChain’s bid for a strategic moat

Competition is intense. Model platforms, including OpenAI’s Assistants and Realtime APIs, Google’s Vertex AI Agent Builder, AWS Agents for Bedrock, and Azure AI Studio, now ship their own agent frameworks, in some cases obviating the need for third-party scaffolding. Meanwhile, observability vendors and evaluation toolkits are multiplying, among them Arize Phoenix, WhyLabs, and evaluation frameworks like Ragas and DeepEval.

LangChain’s position is vendor-agnostic and composable. By supporting a range of models, vector databases, and tool ecosystems, it aims to shield teams from lock-in, making it easy to swap components as pricing, quality, or latency change. The flexibility is not just philosophical; procurement teams are increasingly calling for multi-model strategies as a way to limit risk and spend more efficiently.
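The swap-the-component idea comes down to coding against an interface rather than a vendor. A minimal sketch, assuming a hypothetical `ChatModel` interface and a stand-in `EchoModel` class (neither is a LangChain type):

```python
from dataclasses import dataclass
from typing import Protocol

class ChatModel(Protocol):
    """Hypothetical minimal interface that any provider wrapper would satisfy."""
    def complete(self, prompt: str) -> str: ...

@dataclass
class EchoModel:
    # Stand-in for a real provider client; it only echoes the prompt back.
    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"

def summarize_ticket(model: ChatModel, ticket: str) -> str:
    # Business logic depends only on the interface, not on a specific vendor.
    return model.complete(f"Summarize this support ticket: {ticket}")

# Swapping providers changes one argument, not the business logic.
first = summarize_ticket(EchoModel("provider-a"), "login fails")
second = summarize_ticket(EchoModel("provider-b"), "login fails")
```

A real wrapper would call a vendor SDK inside `complete`; `summarize_ticket` would not need to change.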

Why the new valuation matters for builders and teams

The $1.25 billion valuation is a vote of confidence that the next stage of AI adoption will be both agent-driven and cross-model. It also indicates that open-source-first strategies can lead to sustainable businesses when combined with enterprise-grade evaluation, governance, and support. For developers, it points to tooling that revolves around stateful agents, improved safety controls, and more rigorous benchmarks that look more like software testing than one-off demonstrations.

What does this mean for enterprises? It means you must invest in two capabilities:

- Orchestration (cleanly separating business logic from model choice)

- Continuous evaluation (that is, keeping an eye on quality, costs, and risks)

Whether implemented on LangChain’s stack or an in-house solution, those are the pillars that are starting to separate mere novelty from tangible ROI. With momentum behind it and a refreshed product lineup, LangChain is making the case that agentic systems are ready to step out of the lab and into line-of-business use.