Lambda is expanding its partnership with Microsoft through a multibillion-dollar contract to deploy AI infrastructure at scale on Azure. The deal is a sign that demand for high-performance computing (HPC) is still surging.

Financial terms were not disclosed. According to Lambda, the companies plan to bring tens of thousands of Nvidia GPUs into operation, including Nvidia GB300 NVL72 systems.

Lambda chief executive Stephen Balaban described the deal as the latest chapter in a long-standing relationship, noting that the two companies have worked together on large AI clusters for years.

The expanded collaboration comes as Microsoft has recently brought substantial new capacity online on Nvidia-based supercomputers. It should also open opportunities for companies and AI developers that have struggled to secure a reliable GPU supply.

What the contract encompasses across Azure AI infrastructure

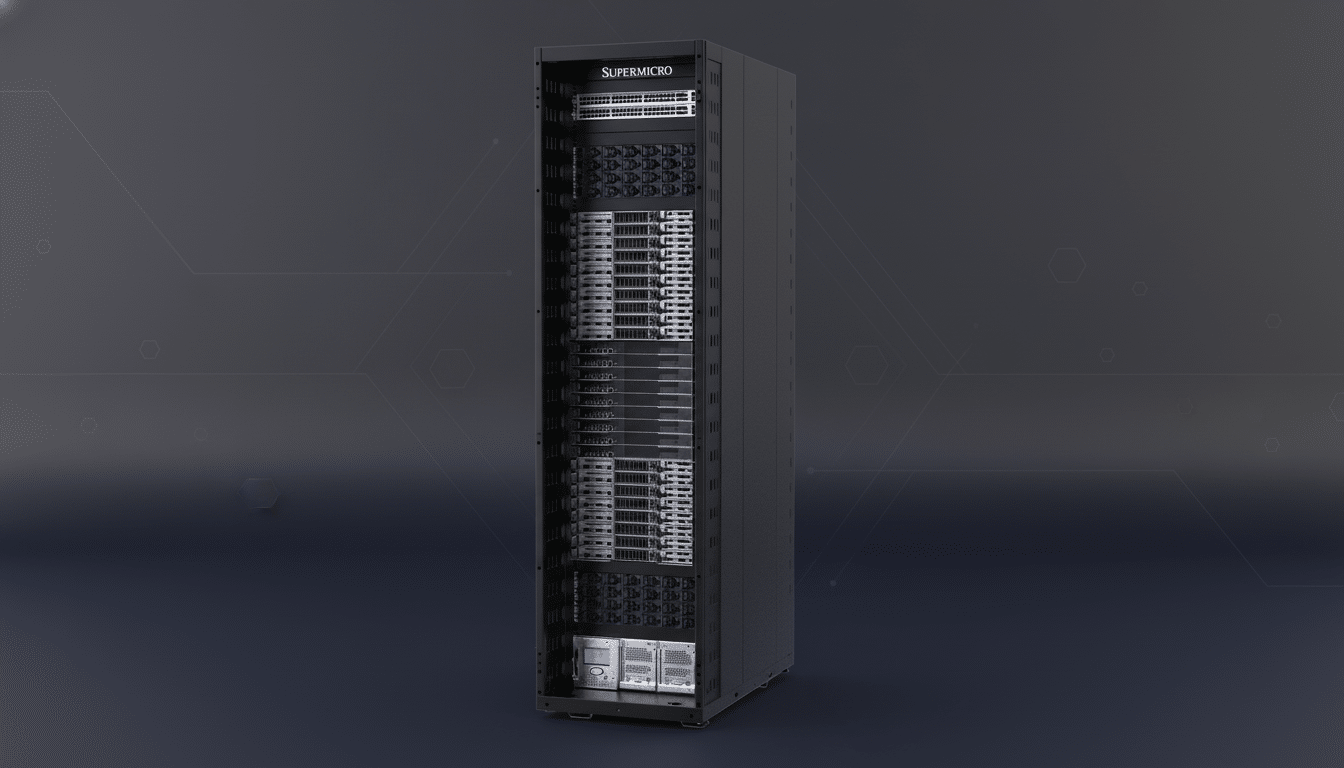

The deal expands Microsoft's deployment of Nvidia GB300 NVL72 systems. These rack-scale systems link 72 GPUs over high-bandwidth NVLink so they can work together as one large accelerator. They are designed for training multimodal foundation models, for high-throughput inference behind copilots and search, and for serving frontier models.

Deploying "tens of thousands" of GPUs provides enough aggregate compute and interconnect bandwidth to pool many accelerators for extremely large training runs. The same capacity can also serve latency-sensitive workloads.

For customers, the contract should mean shorter lead times and more consistent access to Azure's GPU fleet through dedicated clusters and managed services. It also promises tighter integration with popular ML software, container orchestration, and optimized storage to speed up jobs end to end. Both companies have invested heavily in these areas to reduce friction for engineering teams.

Why it matters in the compute arms race for AI

The pact lands in a market awash with multi-year, multibillion-dollar AI capacity deals. Microsoft recently announced a $9.7 billion AI cloud capacity deal with Australian data center operator IREN. OpenAI disclosed a $38 billion cloud computing agreement with Amazon and reportedly signed a separate $300 billion compute deal with Oracle. Hyperscalers and AI labs are increasingly locking in multi-year GPU reservations across several providers to hedge against shortages and manufacturing constraints.

Amazon's comments on its cloud business point to rapid infrastructure expansion, with the company citing strong AI demand and a reacceleration in cloud growth. On its most recent earnings call, Amazon reported quarterly sales of $33 billion for its cloud unit, up 20.2 percent year over year, and pointed to gigawatts of newly added power capacity supporting that growth. Across the hyperscalers, the message is consistent: AI workloads are driving demand for power and capacity. That demand is reshaping data center design, energy contracting, and long-term capital planning.

Lambda’s role and strategic edge in Azure expansion

Founded in 2012, Lambda has raised about $1.7 billion and built its reputation on three strengths. First, it can deliver GPU infrastructure across cloud, on-premises, and hybrid deployments. Second, it has hands-on institutional knowledge of how to tune networking, cooling, and job schedulers so that ever-denser racks of accelerators stay fully utilized without hitting diminishing returns. Third, it offers tooling and standards that help enterprises choose compatible hardware and software for their needs, from research to production.

The Microsoft tie-up also gives Lambda distribution leverage on Azure, where enterprise customers already run data pipelines, vector databases, and observability stacks. Placing compute close to where that data already lives can shorten training times and lower egress costs, two of the biggest pain points for teams scaling generative AI.

In the near term, the partnership should expand access to Nvidia GPU capacity for model training, fine-tuning, and high-volume inference. Likely beneficiaries include teams building large language models, vision-language systems, code assistants, and personalization engines.

Expect offerings that bundle compute with curated software stacks, including frameworks such as PyTorch and JAX, optimized kernels, and orchestration tooling. These bundles will aim to support best practices for checkpointing, sharding, and distributed training.

Customers will also watch for improvements in job queue predictability, multi-tenant isolation, and cost transparency. As GPU scarcity eases in specific regions, workload placement strategies balancing latency, price, and data residency will become a clearer competitive lever.

Key details, including the exact contract size and rollout schedule, remain undisclosed. Execution will depend on Nvidia's supply cadence, on power availability, and on how quickly the companies can stand up liquid-cooled, high-density clusters. Concentration risk is another factor: as more compute consolidates with a few hyperscalers, regulators and large buyers will scrutinize resilience, portability, and pricing power.

Even so, the signal is clear. The deal pairs Microsoft's global cloud with Lambda's GPU-first expertise and Nvidia's newest systems. With it, the two companies are positioning themselves to meet surging AI demand with scale and reliability, aiming to turn today's compute bottlenecks into tomorrow's competitive advantage.