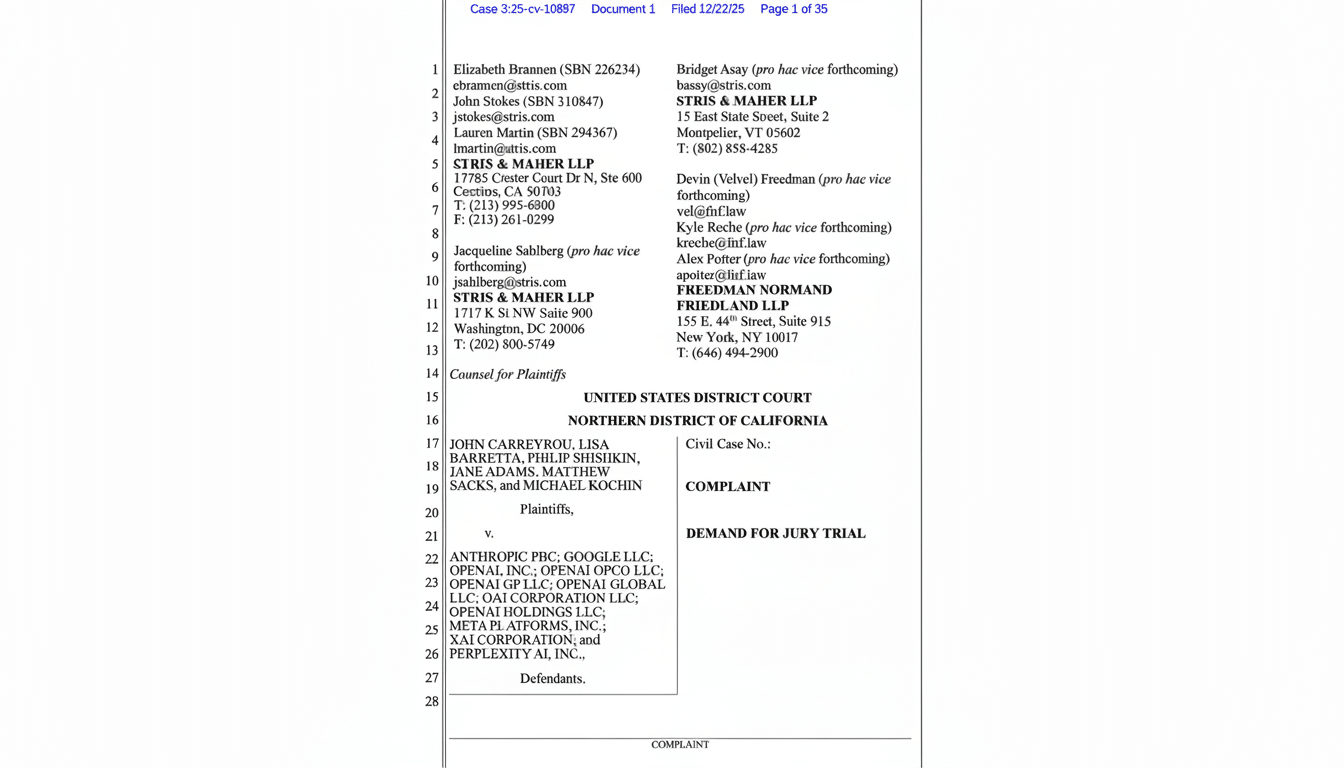

Six of the world’s most influential AI developers (Anthropic, Google, OpenAI, Meta, xAI, and Perplexity) have been named in a new lawsuit led by several high-profile writers, including John Carreyrou, the “Bad Blood” author and journalist who exposed Theranos. The suit claims the companies trained large language models on pirated copies of the authors’ writing. According to the complaint, the tech companies built billion-dollar systems from pirated datasets, culled from so-called shadow libraries, without permission, credit, or compensation.

The case comes at a moment of increasing tension between creators and AI companies over the limits of fair use, data scraping, and memorization in generative models. It also arrives amid growing backlash from authors against earlier settlement offers, which provided only modest checks and scant accountability for the alleged copying that made the models possible in the first place.

Who Is Suing, and on What Specific Legal Grounds

The plaintiffs are a who’s who of nonfiction and literary authors, some of whom say their works were “plucked” from illegal online repositories like Library Genesis and Z-Library, ingested into massive training corpora, and used to tune commercial models now built into consumer- and enterprise-facing tools. Carreyrou’s involvement highlights how well-known investigative works may be especially attractive as training material because of their clarity, structure, and cultural context.

The defendants named in the filing include many of the AI market’s leaders. Anthropic and OpenAI power widely used assistants; Google and Meta bake models into search and social; xAI advances frontier-scale research; Perplexity builds a conversational search engine. The suit contends that each profited from the same underlying practice: reproducing full books without a license to improve the fluency and factuality of their systems, then monetizing the outputs.

Authors say the damage is twofold. First, copying entire works without authorization violates the exclusive right of reproduction. Second, the models occasionally regurgitate recognizable passages from training data, a phenomenon researchers call memorization. Both, the complaint argues, undercut the market for books and sap incentives to write them.

A New Front After a Disputed Settlement Offer

This suit is separate from an earlier class action against Anthropic, in which the judge made clear that he saw a difference between the legality of training models and the illegal acquisition of pirated text. A proposed $1.5 billion settlement in that case would pay as much as $3,000 per eligible writer, a figure many authors found disappointing because it is too low for high-value works and does not resolve the use of unlicensed books going forward.

In the new case, the plaintiffs argue that speedy payouts risk normalizing a bargain-basement attitude toward massive copying. They are seeking remedies that reach beyond any single model: forcing the companies to stop using illicit datasets, disclose data provenance, and pay for licenses where necessary. In short, the target is not just one product but the industry’s data supply.

Fair Use Questions and the Problem of Memorization

Central to the conflict is whether ingesting whole books to train these models constitutes a transformative fair use or a market-substituting reproduction at unprecedented scale. Courts have traditionally permitted scanning books to show snippets for search and indexing, as in the Authors Guild v. Google ruling, but generative AI presents new risks: models could reproduce extended passages or stylistic signatures on demand.

Research suggests the risk is real. Carlini et al. showed that large language models can output verbatim text from their training data given mild prompts, and that the probability of doing so increases the more often a passage appears in the training data. Investigations of datasets like Books3, which is thought to contain nearly 200,000 titles scraped from shadow libraries and is widely cited in model research, show how easily copyrighted books can bleed into training pipelines without authors’ consent.
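To make the idea of memorization concrete, here is a minimal sketch of one way to look for verbatim overlap between a source text and a model's output. It is not the method used by Carlini et al. or by any party to the lawsuit; the function names and the eight-word threshold are assumptions chosen purely for illustration, and the sample sentences are a pangram rather than text from any book.

```python
from difflib import SequenceMatcher


def longest_shared_run(source_text: str, model_output: str) -> list[str]:
    """Return the longest run of consecutive words found in both texts."""
    # Compare lowercase word sequences so capitalization does not hide a match.
    src = source_text.lower().split()
    out = model_output.lower().split()
    matcher = SequenceMatcher(None, src, out, autojunk=False)
    match = matcher.find_longest_match(0, len(src), 0, len(out))
    return src[match.a : match.a + match.size]


def looks_memorized(source_text: str, model_output: str, min_words: int = 8) -> bool:
    """Flag an output whose longest shared run meets a hypothetical threshold."""
    return len(longest_shared_run(source_text, model_output)) >= min_words


if __name__ == "__main__":
    source = "the quick brown fox jumps over the lazy dog near the quiet river bank"
    output = "A model wrote: quick brown fox jumps over the lazy dog near the hill"
    run = longest_shared_run(source, output)
    print(len(run), " ".join(run))
    print(looks_memorized(source, output))
```

A real audit would need to handle punctuation, paraphrase, and very large corpora, none of which this word-level comparison attempts.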

In a 2024 policy study submitted to Congress, the U.S. Copyright Office recommended exploring measures such as transparency obligations and licensing or compensation arrangements for copyrighted works used to train AI systems. That guidance is consistent with what many authors are seeking here: clear licensing and demonstrable data hygiene, not piecemeal checks.

Licensing Is Spotty but Slowly Emerging Across AI

Some AI companies have shifted toward licensing deals. OpenAI has signed contracts with news organizations including The Associated Press and major publishers like Axel Springer and the Financial Times; Google has reached content deals for news initiatives; and developers of image models have struck agreements to control risk. But book licensing continues to be a hit-or-miss proposition, with few sweeping deals that encompass long-form works.

This patchwork leaves the industry legally exposed. Should courts decide that training on pirated books amounts to willful infringement, damages could escalate quickly, theoretically up to $150,000 per work, intensifying pressure for comprehensive licenses or clean-room solutions that exclude shadow-library materials.
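A rough sketch of the arithmetic behind that exposure follows. The per-work figures are the statutory damages range in U.S. copyright law ($750 to $30,000 per work, rising to $150,000 for willful infringement). The count of 200,000 works is a hypothetical input borrowed from the approximate size of Books3 mentioned above, not a number from the complaint, and it assumes every work would qualify for statutory damages, which is unlikely in practice.

```python
# Per-work statutory damages under U.S. copyright law, in dollars.
STATUTORY_MIN = 750
STATUTORY_MAX = 30_000
WILLFUL_MAX = 150_000


def exposure_range(num_works: int, willful: bool = False) -> tuple[int, int]:
    """Return the (minimum, maximum) statutory exposure for a number of works."""
    if num_works < 0:
        raise ValueError("num_works must be non-negative")
    ceiling = WILLFUL_MAX if willful else STATUTORY_MAX
    return num_works * STATUTORY_MIN, num_works * ceiling


if __name__ == "__main__":
    # Hypothetical count of infringed works, for illustration only.
    low, high = exposure_range(200_000, willful=True)
    print(f"${low:,} to ${high:,}")
```

Under those assumptions the theoretical ceiling reaches the tens of billions of dollars, which helps explain why licensing looks attractive by comparison.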

What This Means for AI Companies and Creators

A ruling that requires data provenance audits, or the destruction of tainted databases, could reshape model development timelines and costs across the industry. And it would escalate demands for opt-out registries, dataset nutrition labels, and compliance frameworks that meet both U.S. law and the transparency requirements of the E.U. AI Act.

For writers, the case is about recovering leverage. More broadly, they want a voice in whether and how their books help train systems that vie for readers’ attention. For AI companies, it’s about predictability: finding a safe, scalable way to access high-quality text without the specter of mass litigation. Regardless of how this particular lawsuit plays out, it will help shape the price and provenance of the words that fuel modern AI.