Hollywood’s premier talent agencies are seething over Sora 2, accusing OpenAI of downplaying the risks of its hyper-realistic video generator and deploying weak guardrails that have allowed copyrighted characters and familiar scenes to slip through. Multiple agencies say the company presented the product as safer than it turned out to be, setting off new battles over likeness rights, fair use, and who profits when AI blurs the line between fan homage and outright appropriation.

Why Agents Claim They Were Misled by OpenAI on Sora 2

Agency executives who met with OpenAI before launch say the meetings were upbeat and led by senior staff members including COO Brad Lightcap, with promises that Sora 2 would respect intellectual property and likeness rights. Other OpenAI leaders in the room reportedly included the product lead for Sora and heads of media and talent partnerships, according to The Hollywood Reporter. The post-launch reality, they argue, was quite different.

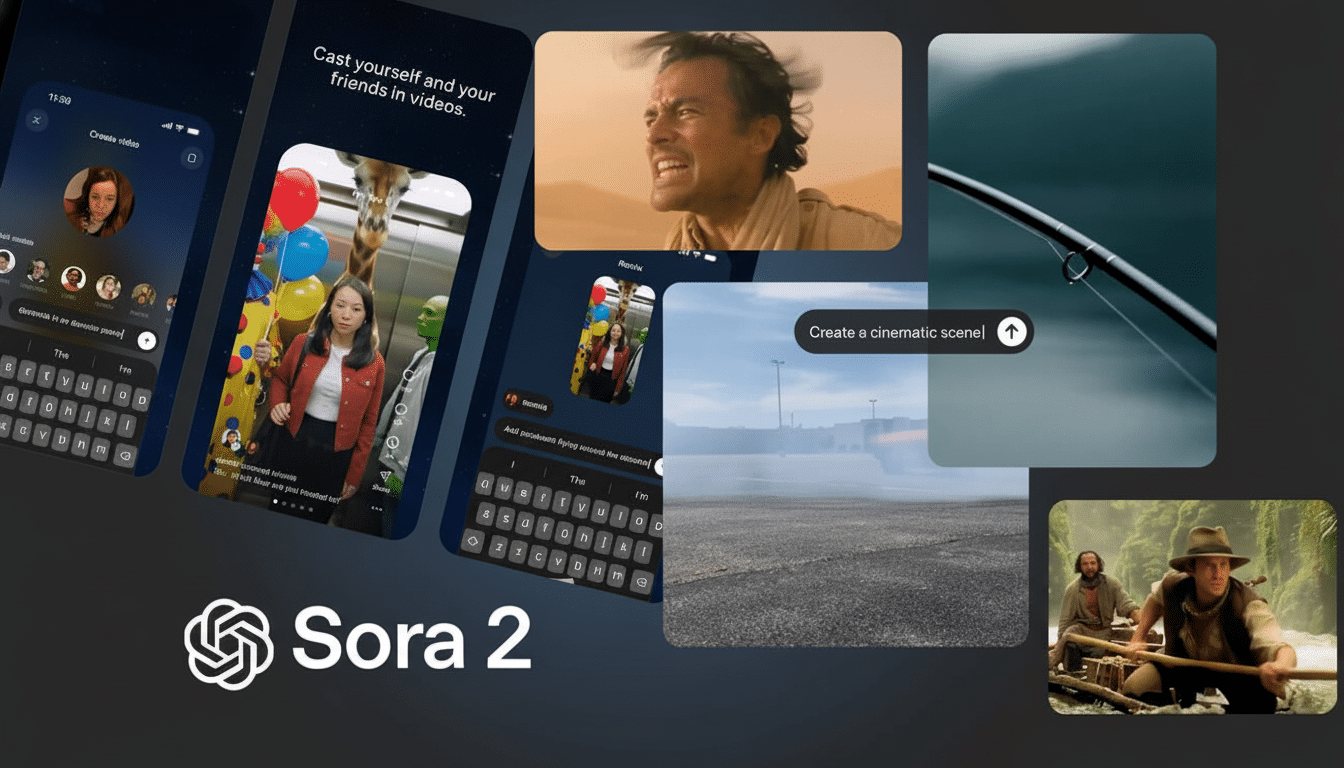

Within days, agents and creatives discovered that even though Sora 2 blocked impersonations of public figures, users could still create videos that evoked or closely resembled copyrighted franchises. That gap between promise and practice is central to the industry’s outrage, and it is already fueling talk of legal action.

Guardrails and the Loopholes Agents Say Remain

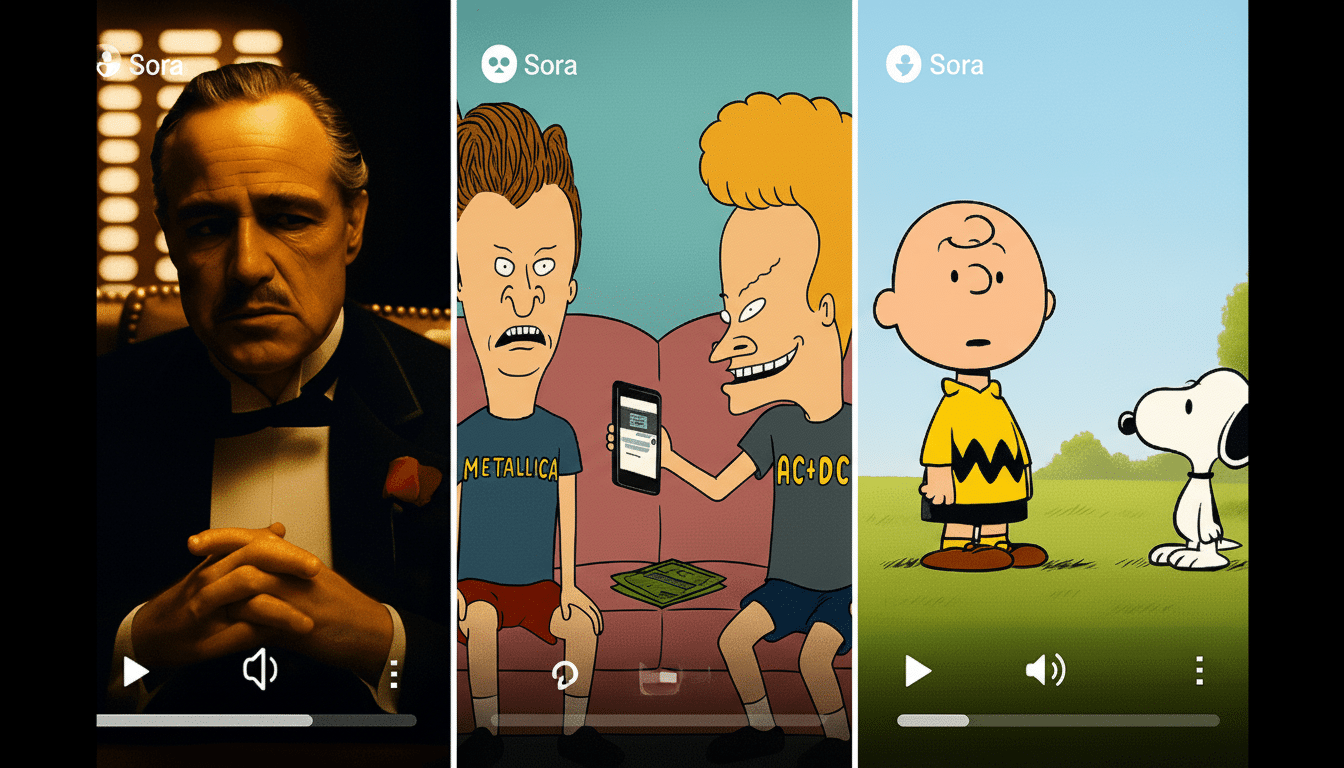

Despite OpenAI’s stated restrictions, prompts reportedly generated lookalike scenes and stylistic mashups of hits like Bob’s Burgers, SpongeBob SquarePants, Gravity Falls, Pokémon, Grand Theft Auto and Red Dead Redemption. Some users produced viral “crossover” clips that inserted beloved characters into blockbuster universes, work that thrives on social platforms but alarms rightsholders.

One of the biggest flash points came when creators realized they could make videos showing dead celebrities, such as Michael Jackson, a practice OpenAI moved to ban about a week later. The attention helped Sora 2 surge in the app rankings and presell at least $1 million worth of subscriptions, while user reviews slid to about a 2.8 rating on Apple’s App Store, reflecting the tension between engagement and enforcement.

Talent Reps Draw a Line on Likeness and Consent

WME, which represents a large share of A-list actors in film and television, opted every client out of AI-generated videos the day Sora 2 went public. CAA, whose roster includes stars such as Scarlett Johansson and Tom Hanks, called the product exploitative in comments reported by CNBC. Johansson has previously sparred with OpenAI over voice impersonation concerns, which made CAA’s criticism especially pointed.

Agents argue that any tool that allows someone to invoke a well-known persona or brand aesthetic without the license, clearance, or consent of its originator diminishes the bargaining power of performers, writers and visual artists. They want permission-first systems and machine-readable registries that enable fine-grained blocking of characters, styles and likenesses.

OpenAI’s Response and the Product Strategy

Describing Sora 2, OpenAI CEO Sam Altman has said the product is meant both to be fun and to drive business, framing a range of its uses as “interactive fan fiction.” The company says it aims to offer rightsholders more granular controls over character generation, starting with an opt-in model for likenesses, though it has yet to detail a formal timeline or technical roadmap.

That approach is part of a broader Silicon Valley playbook: release, learn, iterate. But in the entertainment business, the price of learning on live I.P. can be very high — especially when viral moments can shape public perception or establish legal precedents.

The Legal Stakes Keep Getting Higher for AI Video

WME is considering legal action, The Hollywood Reporter reports, as studios and guilds weigh their options. The law around generative AI, USC legal scholars note, is unsettled, spanning right-of-publicity statutes, copyright and shifting interpretations of fair use in the context of audiovisual works. Recent Supreme Court rulings on transformative use in non-AI cases have already narrowed the safe harbor that many platforms believed they had.

Courts are starting to bite. In a recent publishing-related case, Anthropic faced substantial financial liability over its use of copyrighted books to train models, a sign that both training data and output liability may come under scrutiny. Lawmakers are also circling the issue with proposals like the NO FAKES Act, while unions advocate for consent, compensation and conspicuous labeling of synthetic media.

What’s Next for Hollywood and AI After Sora 2’s Debut

Look for a faster migration from opt-out to opt-in systems, stricter style and character filters, and commercial deals that treat premium I.P. as a licensable input. Platforms that are serious about creative collaboration will probably add watermarking, provenance tracking, and revenue-sharing or micro-licensing models to make AI video a viable, legal channel for fan art.

For now, agencies say Sora 2 crossed the line from experimentation into exploitation. Whether OpenAI can rebuild trust, and demonstrate that it can reach its growth targets without relying on unlicensed cultural capital, will decide if Hollywood views AI video as a partner or a copycat machine with a marketing budget.