Google is widening the scope of its privacy controls in Search, giving people more direct power to scrub highly sensitive personal details and nonconsensual explicit images from results. The updates center on its Results about you hub and new, streamlined reporting for intimate images, signaling a more aggressive stance on doxxing, identity theft, and image-based abuse.

Announced in conjunction with Safer Internet Day, the changes aim to fix two long-standing pain points: finding out when your private data shows up in Search and removing harmful images without wading through endless forms. While these tools do not erase content from the underlying websites, limiting discoverability in Search can dramatically reduce exposure and downstream harm.

What Changed in the Results About You Privacy Hub

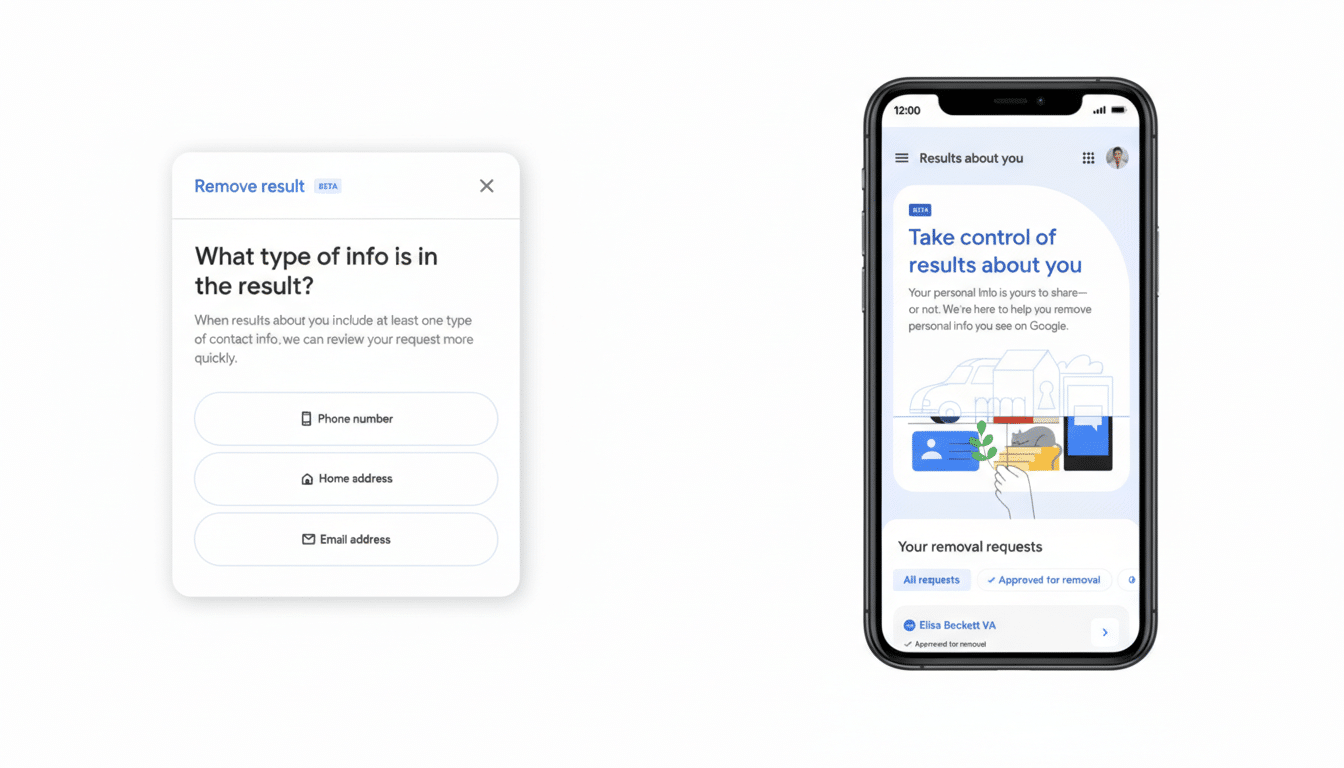

Results about you already let users request the removal of basic personal information such as home address, phone number, and email. It now extends to government ID numbers and documents, including driver’s licenses, passports, and Social Security numbers—data that is catnip for fraudsters after breaches and phishing attacks.

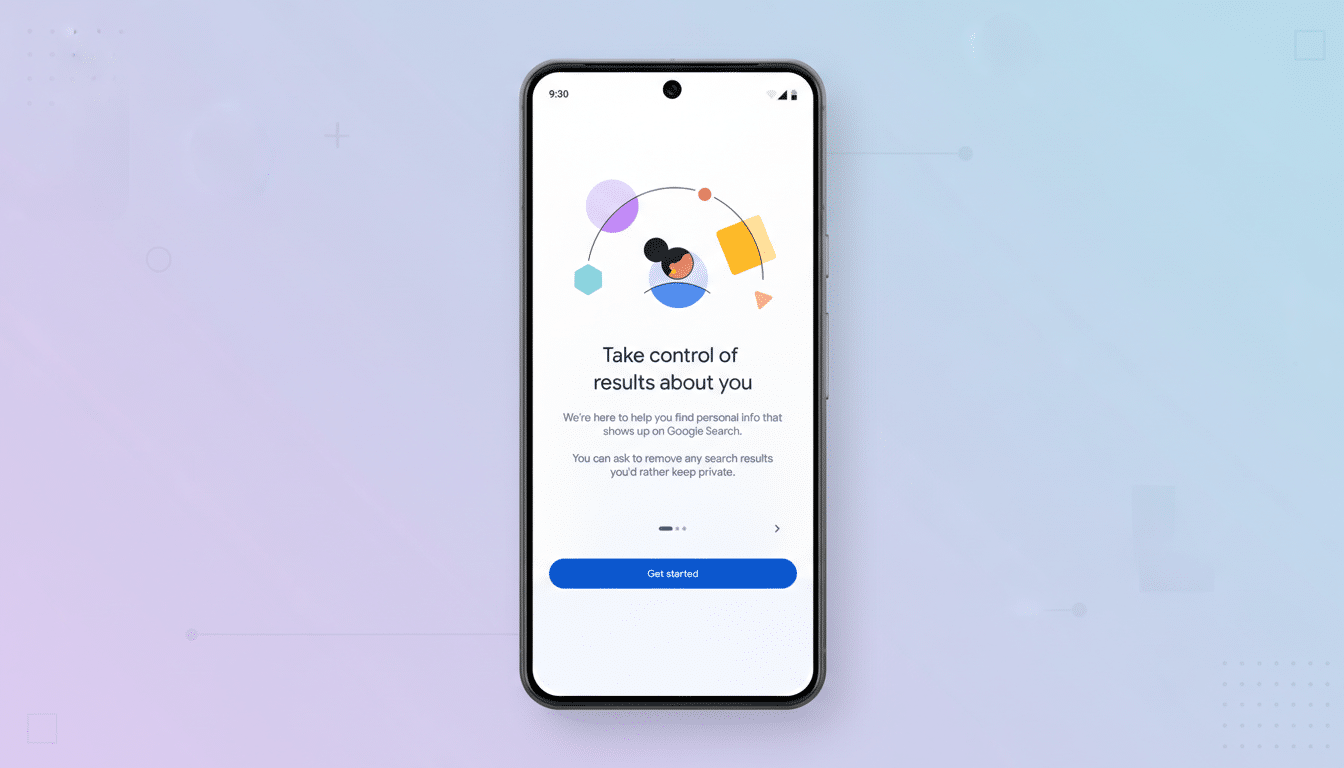

The hub is available in the Google app: tap your profile photo, open Results about you, start the setup, and add the contact details you want monitored. A new step invites you to add government ID numbers you’d like flagged. Once set, Google will automatically scan Search results and alert you if it detects those details, creating a monitoring loop instead of a one-off takedown request.

Google emphasizes that removing a result from Search does not delete the source page. Still, reducing visibility can limit the speed and scale at which bad actors find sensitive data. The rollout begins in the U.S., with additional regions to follow.

Simpler Removal for Nonconsensual Images

For intimate images that appear in Search without consent, the company has condensed the reporting flow to a few taps. From an image, users can tap the three-dot menu, choose "Remove result," and select "It shows a sexual image of me." Crucially, you can now submit multiple images in one batch rather than filing individual reports.

All requests can be tracked from a single dashboard within the Results about you hub, cutting down on guesswork about status and outcomes. Google also added optional safeguards to proactively filter out similar explicit results that could emerge in related searches—a recognition that removing one image often isn’t enough to contain harm, especially with reposting and mirrors.

The move builds on earlier policy expansions to cover nonconsensual and AI-generated explicit imagery, a growing category as deepfake tools become easier to use. Advocacy groups such as the Cyber Civil Rights Initiative have long pushed for faster, consolidated reporting to blunt the viral spread of abusive content.

Why This Matters for Privacy, Safety, and Search Users

Identity data in public view fuels real-world losses. The Federal Trade Commission logged more than 1 million identity theft reports in the most recent year, with government documents or benefits fraud among the fastest-growing categories. Once IDs leak onto the open web or people-search sites, they are scraped, resold, and resurfaced—often indefinitely.

Online abuse is similarly pervasive. Pew Research Center reports that 41% of U.S. adults have experienced some form of online harassment, with rates of severe harassment sharply higher among younger adults and women. Streamlining removals and adding monitoring shorten the window in which victims are exposed, a critical factor for safety and mental health.

Limits and Practical Tips for Protecting Your Data

Search removal is necessary but not sufficient. If an offending page hosts your data or an image, contact the site directly to request deletion, especially if it is a forum, paste site, or people-finder service. Many data brokers provide opt-outs; residents in states with comprehensive privacy laws may have additional rights to delete or restrict sale.

Use Google’s monitoring alongside other tools:

- Set up Search alerts for variations of your name and address.

- Watch for changes to your credit file using alerts from your bank or a credit bureau.

- Consider a password manager and breach notifications to limit knock-on risks after exposure.

How It Compares to Erasure Rights Abroad

In regions governed by broader erasure rights, like the European Union’s right to be forgotten, individuals can request deindexing under legal standards. Google’s update doesn’t create a new legal right in the U.S., but it nudges the practical experience closer to those norms by automating discovery and centralizing action for high-risk data.

What to Watch Next as Google Rolls Out These Tools

Google says the upgraded tools will expand beyond the U.S. after the initial release. Expect further automation—such as broader proactive detection of exposed credentials and deepfake imagery—and tighter integrations with SafeSearch controls.

The privacy landscape is shifting fast as states enact new data rights and platforms adapt to AI-driven harms. For now, this update makes two tasks materially easier: finding out when sensitive personal information hits Search and removing it before it spreads further.