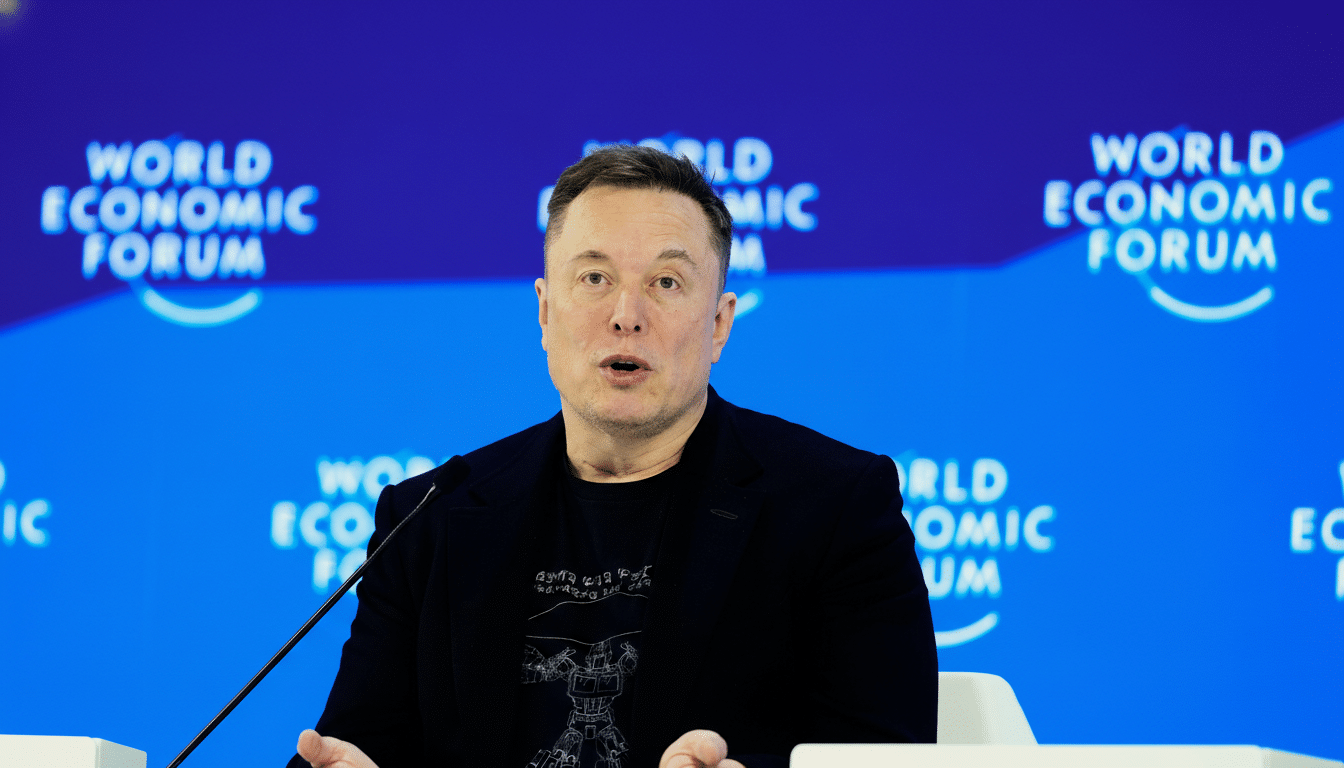

French police, working with Europol, searched the Paris office of X as prosecutors widened a criminal probe into the platform’s operations. Investigators also summoned owner Elon Musk and former chief executive Linda Yaccarino for questioning, escalating a case that now spans alleged data offenses and the handling of some of the most serious categories of illegal content.

Paris prosecutors said the investigation, opened earlier on suspicions of fraudulent extraction of data from an automated system by an organized group, now includes potential complicity in the possession and distribution of child sexual abuse material, privacy violations, and Holocaust denial. A spokesperson for the prosecutor’s office said the goal is to ensure the platform complies with French law within national jurisdiction. Several X staff members were also called to appear.

What Investigators Are Probing in the X Paris Case

The original data case centers on whether data was unlawfully extracted from information systems, an offense covered by the provisions of France’s penal code on breaches of automated data processing systems. The “organized group” qualifier is an aggravating circumstance, typically indicating coordinated activity rather than one-off access. Searches of corporate offices in such probes commonly target access logs, internal communications, moderation workflows, AI system controls, and policies around developer privileges and third-party tools.

Europol’s involvement signals potential cross-border dimensions, such as data stored or processed outside France or suspected activity that touches multiple EU member states. In complex cybercrime and content cases, joint teams often mirror servers, collect audit trails, and trace how content moves across services to establish responsibility and intent.

Grok AI and Content Liability Under French Law

The widening inquiry comes amid criticism that X’s Grok AI has been misused to generate nonconsensual imagery, including material depicting abuse. While generative models are designed for a broad range of tasks, authorities are increasingly focused on whether platform design, guardrails, and distribution mechanisms meaningfully prevent harmful outputs and rapid amplification.

The legal frontier around synthetic content is evolving. French law criminalizes the dissemination of abusive images of minors, and prosecutors are testing how those statutes apply when AI tools are involved. Europol has warned that generative AI can be exploited to produce and circulate abusive material, and European hotlines have begun reporting more cases that involve synthetic or manipulated imagery. Even where imagery is synthetic rather than photographic, platforms may face legal exposure if their tools or policies enable circulation at scale.

European Rules Raise the Stakes for X’s Compliance

As a very large online platform under the EU’s Digital Services Act, X must assess and mitigate systemic risks tied to illegal content, including child safety violations and hate speech. Failure to comply can trigger fines of up to 6% of global annual turnover and, in extreme scenarios, temporary service restrictions ordered by EU authorities. The European Commission has already opened formal proceedings into X over suspected DSA breaches related to illegal content and transparency obligations.

In France, privacy concerns linked to data extraction could involve the data protection authority, CNIL, while hate speech and Holocaust denial allegations intersect with the Gayssot Act, which criminalizes Holocaust denial. The European Commission’s latest monitoring of the Code of Conduct on illegal hate speech found that platforms’ removal rates dropped to 47%, underscoring regulatory pressure to improve detection and response.

Potential Consequences for Musk and X in France

Being summoned for questioning does not mean charges are imminent. It allows investigators to clarify what executives knew, how policies were designed and enforced, and whether oversight was adequate. If prosecutors establish complicity or systemic failures, potential outcomes range from criminal charges against the company to court-ordered remedies, fines, and strict compliance undertakings. Individual liability hinges on proof of knowledge and responsibility under French law.

For X, immediate risks include orders to preserve evidence, changes to recommendation systems, tougher guardrails around AI tools, and expanded trust-and-safety resources. Advertisers and users typically watch such inflection points closely; when platforms falter on brand safety and illegal-content controls, ad spending often pulls back until risk indicators improve.

What to Watch Next as French and EU Probes Advance

Authorities will analyze seized digital materials, conduct interviews, and determine whether to bring charges or impose remedial measures. Parallel regulatory scrutiny under the DSA could shape any corrective action plan, including transparency reports, risk assessments, and more rigorous enforcement against illegal content.

The outcome will hinge on the evidence chain: how data was accessed or moved; whether moderation and AI controls were sufficient; and how quickly illegal content was detected and removed. For a platform operating at global scale, these questions are no longer abstract: they are compliance thresholds that determine legal exposure, business stability, and public trust.