YouTube has started rolling out its much-discussed likeness detection system to select members of the YouTube Partner Program, moving the feature out of pilot and into production. The tool is designed to identify AI-manipulated videos that realistically reproduce a creator’s face or voice, and to make it easier for creators to request that offending videos be taken down. It addresses the growing threat of synthetic impersonation used for scams, fake endorsements, and misinformation.

The launch represents a rare product-level effort to operationalize identity protection at scale. With hundreds of thousands of hours of video uploaded every day, automated triage is now essential: human reports alone cannot keep pace with the speed and sophistication of AI-generated clones.

What the Likeness Detection Tool Does for Creators

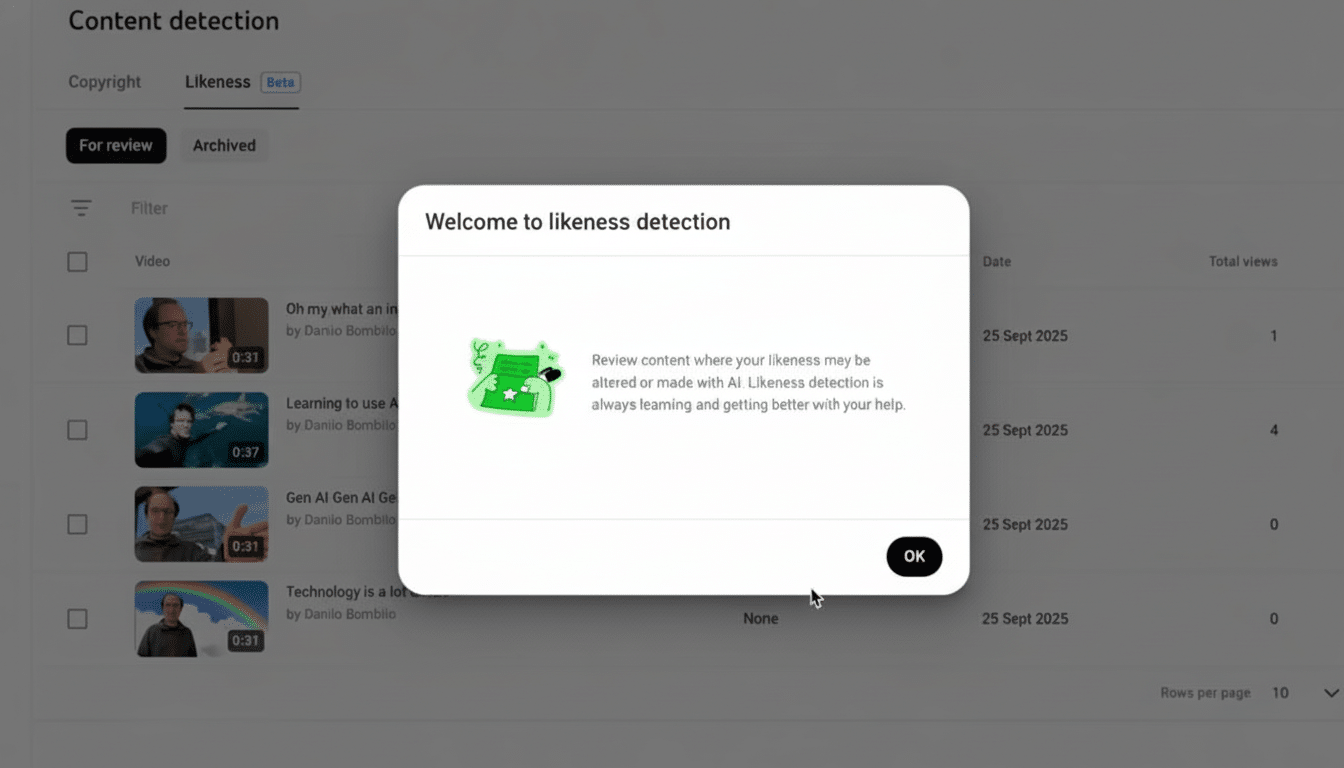

YouTube’s system flags content that appears to impersonate a creator’s face or voice and surfaces the matches on a dashboard where creators can review them and act. From there, they can file a removal request under YouTube’s privacy process, submit a copyright claim where one applies, or archive the detection for their records. The product targets deepfakes and other synthetic media that falsely imply a person’s endorsement or mislead viewers about who is actually speaking.

Recent episodes show why the feature matters. The hardware maker Elecrow, for instance, was called out for using an AI clone of creator Jeff Geerling’s voice, and high-profile figures including Tom Hanks and MrBeast have sounded the alarm about deepfake ads. The new workflow gives creators a faster way to challenge such uploads directly in Creator Studio, alongside YouTube’s existing rights management and safety tools.

How Creators Get Access to YouTube’s Likeness Tools

Creators onboard through a dedicated Likeness tab, where they consent to data processing and verify their identity by scanning a QR code. Verification requires a valid photo ID and a short selfie video, which together establish a trusted reference. Once approved, creators can view a queue of detected matches, decide which ones to escalate, and track trends over time. Creators can opt out at any time; YouTube stops scanning within about a day of the feature being turned off.

This up-front identity verification matters: it limits abuse by ensuring that only the person whose face or voice is at stake can request removals, so others cannot file improper requests against legitimate work.

Policy and Legal Backdrop for YouTube’s Likeness Detection

The system arrives amid broader shifts in policy and across the industry. YouTube previously partnered with Creative Artists Agency to help its clients and creators monitor such problems on the platform. In the United States, proposed legislation such as the NO FAKES Act would formally establish protections for a person’s voice and likeness, and the European Union’s AI Act requires deepfakes to be clearly labeled. Meanwhile, the Federal Communications Commission has ruled that robocalls using AI-generated voices to impersonate someone fall under existing rules restricting such calls, tightening the net around impersonation-based scams.

YouTube has also introduced labels for photorealistic synthetic content, another step in the platform’s transparency efforts. Likeness detection complements those disclosures by letting the people most affected find and challenge deceptive uses that slip past labeling.

How the Likeness Detection System Probably Works

YouTube has not disclosed the details of its model, but standard industry approaches rely on embeddings, compact numerical “fingerprints” of a face or voice, and measure how similar a candidate sample is to a verified reference.
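To make the idea concrete, here is a minimal sketch of embedding comparison using cosine similarity, one common similarity measure. It assumes an encoder has already turned each face or voice sample into a vector; the three-dimensional vectors below are invented toy values, not output from YouTube’s undisclosed model, and `cosine_similarity` is a hypothetical helper written for illustration.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embeddings: 1.0 = same direction, 0 = orthogonal."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


# Toy 3-D embeddings (hypothetical); real encoders typically use far more dimensions.
reference = [0.9, 0.1, 0.4]         # from the creator's verified selfie video
suspect_clip = [0.85, 0.15, 0.42]   # from a newly uploaded video
unrelated = [-0.2, 0.9, 0.1]        # from a different person

print(f"suspect:   {cosine_similarity(reference, suspect_clip):.3f}")
print(f"unrelated: {cosine_similarity(reference, unrelated):.3f}")
```

On these toy vectors the suspect clip scores about 0.998 against the reference, while the unrelated vector scores slightly below zero; a real system would compare such scores against a tuned threshold rather than eyeballing them.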

Well-trained embeddings can keep matching robust even when a clone is pitch-shifted, filtered, or composited into a new scene. The match threshold has to balance precision against recall: set it too strict and skillful fakes slip through; set it too loose and legitimate commentary, parody, or news reporting gets over-flagged.
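The precision/recall trade-off can be illustrated with a small, hypothetical set of scored detections, where label 1 marks a genuine impersonation and 0 marks legitimate content such as parody or commentary. The scores, labels, and the `precision_recall` helper are invented for illustration and do not reflect YouTube’s actual thresholds or data.

```python
# Hypothetical detector scores for eight uploads (higher = more similar to the creator).
SCORES = [0.97, 0.93, 0.88, 0.81, 0.76, 0.70, 0.55, 0.40]
# Hypothetical ground truth: 1 = real impersonation, 0 = legitimate use (parody, news, commentary).
LABELS = [1, 1, 0, 1, 0, 1, 0, 0]


def precision_recall(scores: list[float], labels: list[int], threshold: float) -> tuple[float, float]:
    """Flag every score >= threshold, then measure precision and recall of those flags."""
    flagged = [label for score, label in zip(scores, labels) if score >= threshold]
    true_positives = sum(flagged)
    actual_positives = sum(labels)
    # Convention: precision is 1.0 when nothing is flagged (no false flags were raised).
    precision = true_positives / len(flagged) if flagged else 1.0
    recall = true_positives / actual_positives if actual_positives else 1.0
    return precision, recall


for threshold in (0.95, 0.80, 0.50):
    p, r = precision_recall(SCORES, LABELS, threshold)
    print(f"threshold={threshold:.2f}  precision={p:.2f}  recall={r:.2f}")
```

On this toy data, lowering the threshold from 0.95 to 0.50 raises recall from 0.25 to 1.0 but drops precision from 1.0 to about 0.57: every fake is caught, at the cost of flagging legitimate videos alongside them.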

Notably, a detection does not trigger automatic deletion. Human review by both the affected creator and YouTube’s policy team is critical for weighing context and avoiding over-suppression of news coverage, satire, criticism, and other lawful speech.
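One way to keep humans in the loop is to let the detector only enqueue candidates for review, highest score first, with no code path that removes a video. The sketch below assumes that design; `ReviewQueue`, `Detection`, and the `pending_review` status are hypothetical names for illustration, not part of any YouTube API.

```python
import heapq
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(order=True)
class Detection:
    # Negated score so Python's min-heap pops the highest-scoring match first.
    sort_key: float
    video_id: str = field(compare=False)
    score: float = field(compare=False)
    status: str = field(default="pending_review", compare=False)


class ReviewQueue:
    """Holds flagged videos for human review; nothing here deletes or hides a video."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self._heap: List[Detection] = []

    def submit(self, video_id: str, score: float) -> bool:
        """Enqueue a detection if it clears the threshold; return whether it was queued."""
        if score < self.threshold:
            return False
        heapq.heappush(self._heap, Detection(-score, video_id, score))
        return True

    def next_for_review(self) -> Optional[Detection]:
        """Hand the most similar pending match to a human reviewer, or None if empty."""
        return heapq.heappop(self._heap) if self._heap else None


queue = ReviewQueue(threshold=0.80)
queue.submit("video_a", 0.91)
queue.submit("video_b", 0.62)  # below threshold: never enters the queue
queue.submit("video_c", 0.97)
first = queue.next_for_review()
print(first.video_id, first.status)
```

Ordering by score lets reviewers handle the most convincing impersonations first, while the final decision (remove, keep as parody, archive) stays with people.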

Why It Matters at YouTube Scale for Creators

YouTube has historically relied on reactive reporting and copyright-protection systems such as Content ID to safeguard intellectual property. Likeness, however, is a different kind of right, closer to privacy and publicity rights than to copyright. By giving creators proactive detection and a dedicated removal process, YouTube is acknowledging that identity itself needs first-class protection now that generative tools are commoditizing convincing voice and face synthesis.

The platform’s scale alone, often cited at around 500 hours of video uploaded every minute, makes automation necessary to surface the most urgent cases. For creators, that can reduce the whack-a-mole work of hunting down impersonations and limit the reputational and financial damage of faked endorsements.
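A quick back-of-the-envelope calculation shows why. It takes the commonly cited figure of 500 upload-hours per minute and assumes, purely hypothetically, eight-hour review shifts spent watching footage once at normal speed; the helper names are illustrative.

```python
UPLOAD_HOURS_PER_MINUTE = 500   # commonly cited figure for YouTube uploads
MINUTES_PER_DAY = 60 * 24


def upload_hours_per_day(hours_per_minute: float) -> float:
    """Convert an upload rate in hours per minute into hours per day."""
    return hours_per_minute * MINUTES_PER_DAY


def reviewer_shifts_needed(hours_of_video: float, shift_hours: float = 8.0) -> float:
    """Shifts needed to watch the footage once at 1x speed (hypothetical 8-hour shift)."""
    return hours_of_video / shift_hours


daily = upload_hours_per_day(UPLOAD_HOURS_PER_MINUTE)
print(f"{daily:,.0f} hours uploaded per day")
print(f"{reviewer_shifts_needed(daily):,.0f} eight-hour shifts to watch it all once")
```

That works out to 720,000 hours of new video per day, or 90,000 full shifts just to watch it once before any judgment is made, which is why similarity scoring has to narrow the pile first.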

Open Questions and Next Steps for Likeness Detection

Key details to watch include how quickly access expands globally beyond the initially eligible creators, how well the voice models cover different languages and accents, and how the system treats newsworthy uses, documentary context, and parody. Open questions also remain about channels outside the Partner Program, minors, and creators whose talent agencies act on their behalf.

Still, the launch marks a meaningful shift: identity protection is moving from policy pages and after-the-fact reports into proactive, productized tooling.

If it succeeds, YouTube’s approach could become a model for other platforms bracing for the next wave of synthetic media, and even a baseline expectation for anyone building an audience online.