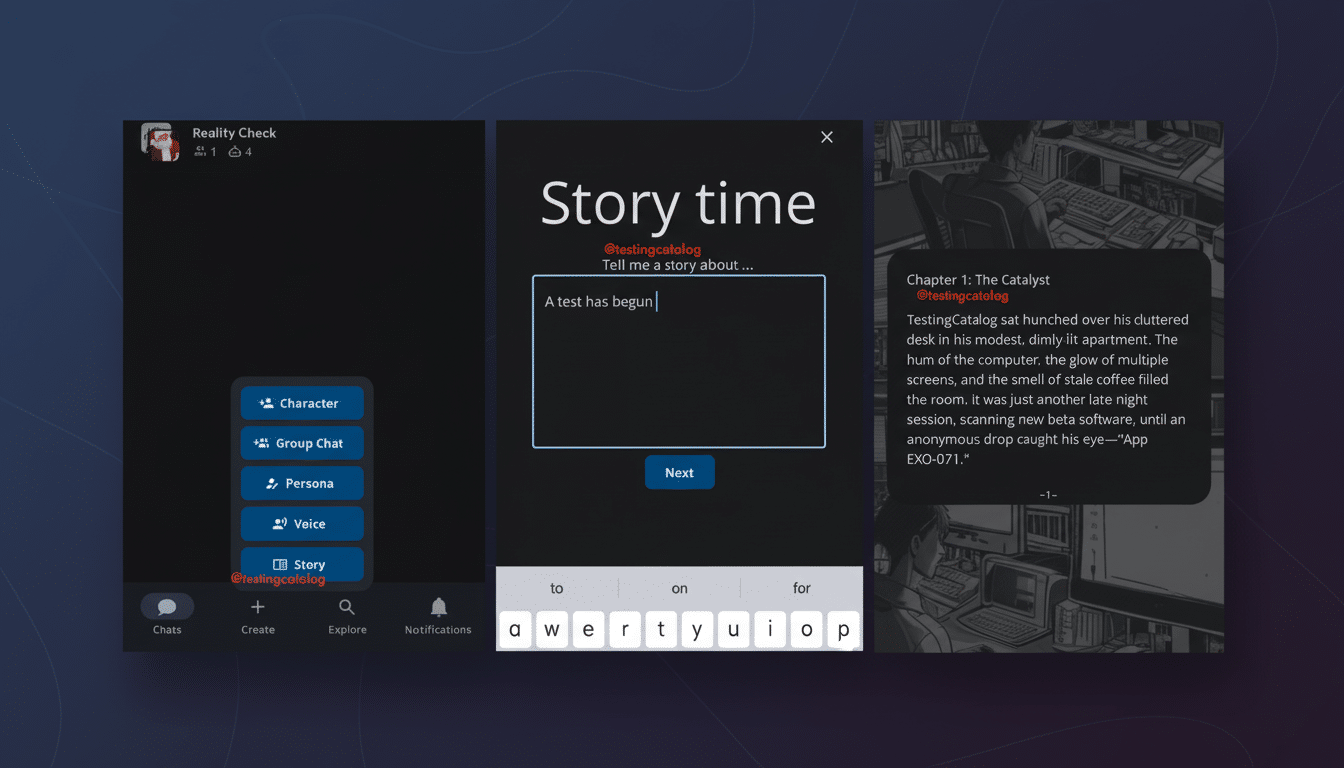

For users under 18, Character AI is replacing open-ended AI chat with a new feature called Stories: character-driven AIs they can message a few times a day, built around prompted experiences that take a guided, interactive-fiction approach. The goal is to keep teens and younger users engaged without the always-on intimacy of chatbot companions that seem real. The change comes as the company finishes age-gating free-form character chats for minors, and it is pitching Stories as a curated, narrative-first, safety-led alternative.

Stories will let young users co-create storylines with their favorite characters through scenes, choices, and prompts.

Instead of a bot that responds whenever a user decides to message it, the experience is session-based and bounded by narrative arcs.

The company says Stories will coexist with its other multimodal features, giving teens creative tools while minimizing the safety risks of round-the-clock conversational agents.

How Stories Changes the Experience for Young Users

Open-ended chat makes endless back-and-forth easy, encouraging constant interaction and opening the door to parasocial relationships with the AI persona. Stories inverts that dynamic by emphasizing authored scenes, decision points, and clear objectives: more a choose-your-own-adventure story than a digital confidant. Design matters here. An American Psychological Association advisory on adolescent technology use cautions that prolonged, personalized feedback loops can increase compulsive use; a session-based, goal-oriented structure tends to weaken that pull.

Expect tough guardrails: no unsolicited messages from characters, time-boxed sessions, stricter filters on romantic or suggestive content, and creations that are private by default. The narrative frame also makes it easier to constrain topics and tone by age band. For parents, the appeal is simple: creative play and literacy-style engagement without the murky emotions of an always-available “friend.”

Interactive fiction isn’t a niche bet. Visual-novel and story-game downloads have risen steadily on mobile app stores in recent years, and education platforms designed for the classroom have used branching narratives to boost reading comprehension. The format gives Character AI a way to keep young users engaged while trading intimacy for imaginative play.

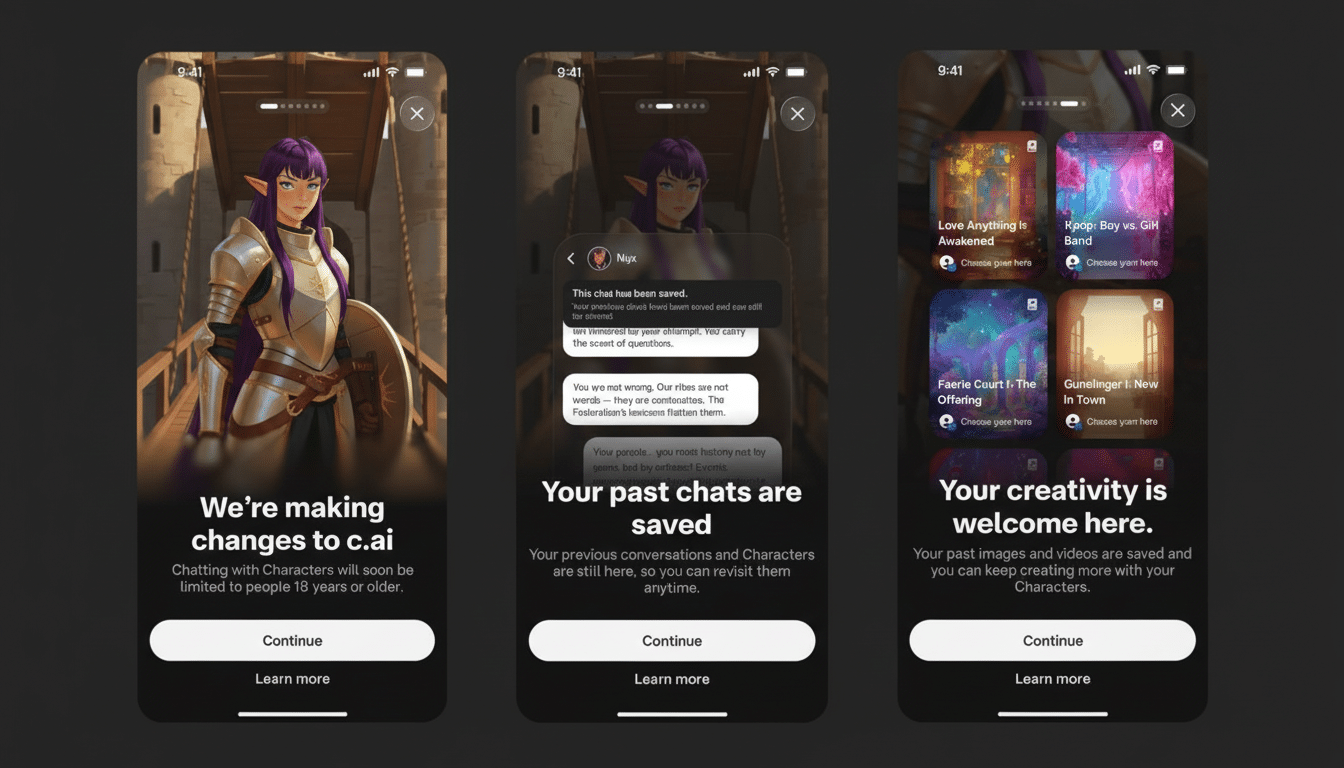

Safety Backdrop and Regulatory Pressure on AI Companions

The move follows a wave of criticism over the mental health effects of AI companions. Families have filed lawsuits claiming that chatbot exchanges contributed to self-harm, and youth advocates have pressed platforms over 24-hour availability and suggestive role-play. The U.S. Surgeon General has warned that always-on social technologies can deepen feelings of isolation and worsen sleep problems; AI companions add a new twist by simulating empathy on demand.

Policy momentum is building. California recently became the first state to regulate AI companions with specific safeguards, and a bipartisan bill sponsored by Senators Josh Hawley and Richard Blumenthal would ban AI companion services for minors nationwide. Regulators in Europe have already acted: Italy’s data protection authority restricted access to a popular AI companion app over concerns about the safety of young users, a sign that loose age controls won’t last long.

The Market Context for AI Companions and Youth Safety

Character AI has been one of the fastest-growing consumer AI platforms since 2023, and third-party analytics firms report that its audience skews heavily Gen Z. That makes youth policy a question of growth and retention, not just regulation. By replacing open chat with Stories for users under 18, the company is effectively reframing the on-ramp to its product as entertainment and creativity rather than friendship.

Competitors are testing different approaches. Some offer teen modes with strong filters; others restrict usage by time of day or limit sensitive subject matter. The common thread is a shift toward “safety by design,” a principle promoted by standards bodies and child-safety advocates, in which defaults are engineered to be low-harm rather than moderated after the fact.

There is also a hard lesson from earlier AI friend apps: when boundaries are porous, erotic and quasi-therapeutic role-play can flourish, pulling systems into fraught territory that invites both regulatory blowback and reputational damage.

A narrative-driven model provides platforms with a cleaner compliance position and more predictable content dynamics.

What Success Will Look Like for Character AI Stories

For Character AI, success won’t be measured by daily active users alone. Key metrics will include reduced late-night usage, less unsolicited contact from AI characters (ideally none in youth modes), fewer reports of distressing interactions, and sustained creative output per session. Media IP tie-ins and partnerships with educators could lend further legitimacy to Stories as a safe, literacy-building product.

Feedback from teens will matter. Early posts on the company’s community forums show a mixed reaction: disappointment from users who relied on chat for companionship, and relief from those who described using it compulsively. That ambivalence points to the core tension of AI companions: utility and attachment can be two sides of the same coin.

If Stories can deliver rich, replayable narratives with clear boundaries while avoiding the dopamine economics of pushy chat, Character AI could set a blueprint as regulation tightens. If it fails, the sector could be pushed toward more restrictive bans or mandatory age verification. Either way, the experiment marks a turning point in how AI companies build for minors: less talk, more creation, and safety as a product feature rather than a promise.