California is on the verge of enacting the first state rules aimed directly at AI companion chatbots, as SB 243 has cleared both chambers of the Legislature and now heads to the governor for signature.

The measure targets apps that simulate intimacy and companionship, imposing new guardrails intended to protect minors and other vulnerable users.

If signed into law, the measure would make California the first state to require operators of AI companions to follow safety protocols and to face legal liability when they do not. It marks a shift from voluntary trust and safety pledges to enforceable obligations.

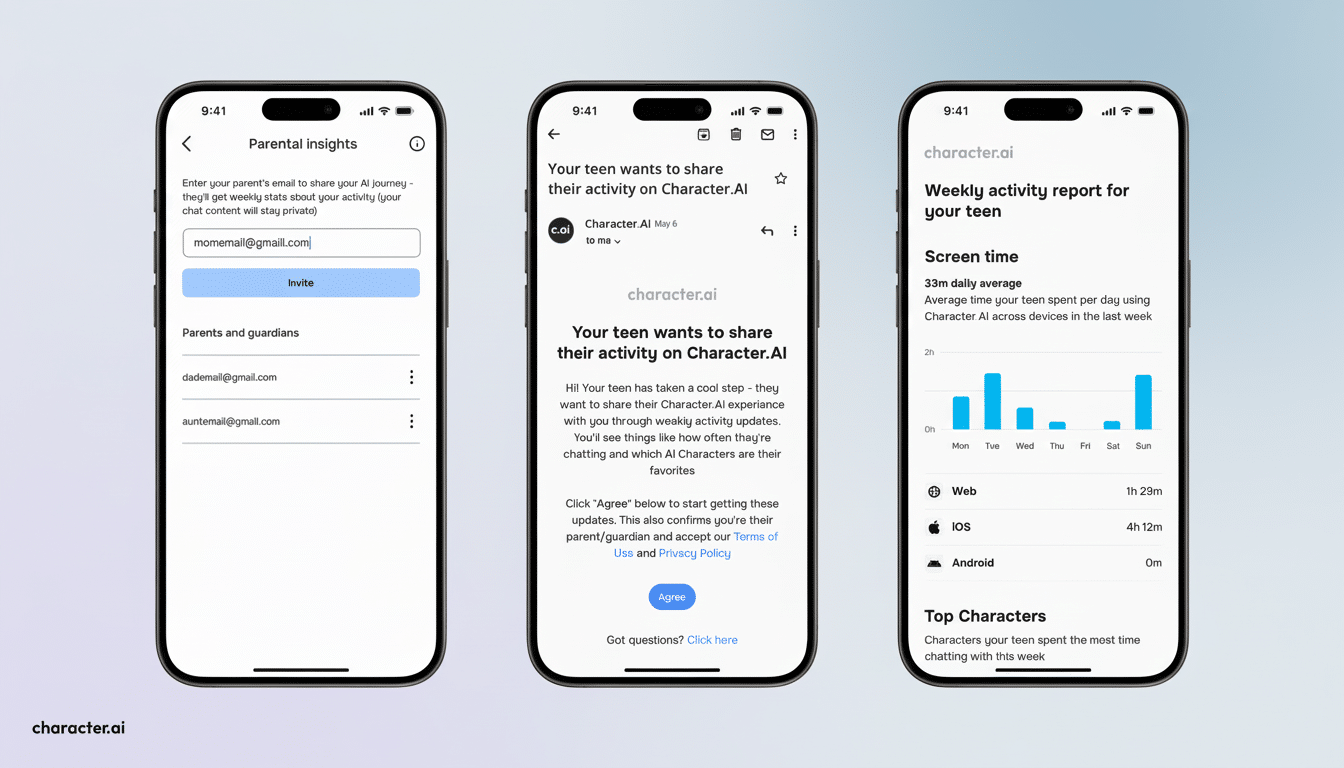

What the bill would actually do

SB 243, for starters, defines an “AI companion” as a system that provides adaptive, human-like responses aimed at meeting a user's social or emotional needs. These products would be barred from engaging in conversations about suicidal ideation, self-harm, or sexually explicit content. Platforms would be required to remind users repeatedly that they are talking to a machine, not a person.

Minors must receive that reminder at least every three hours and be prompted to take a break. The bill also anticipates crisis situations: operators must have protocols that direct users to appropriate services when they are detected to be at risk.
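As a rough illustration of the three-hour rule described above, the timing check could look like the following minimal Python sketch. The function name `reminder_due` and its parameters are hypothetical and not taken from the bill text, and the sketch covers only the rule for minors; the bill's requirements for other users are not modeled here.

```python
from datetime import datetime, timedelta

# Interval taken from the article: minors must be reminded at least every three hours.
REMINDER_INTERVAL = timedelta(hours=3)


def reminder_due(last_reminder: datetime, now: datetime) -> bool:
    """Return True when a minor's session is due for a new disclosure.

    Hypothetical helper: "due" means three hours or more have passed since
    the last reminder that the user is talking to an AI and should take a break.
    """
    return now - last_reminder >= REMINDER_INTERVAL


if __name__ == "__main__":
    start = datetime(2025, 1, 1, 9, 0)
    print(reminder_due(start, datetime(2025, 1, 1, 11, 59)))  # not yet due
    print(reminder_due(start, datetime(2025, 1, 1, 12, 0)))   # due at the 3-hour mark
```

A real deployment would also need to decide how the clock behaves across sessions and devices, which this sketch does not address.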

Transparency is central. Providers such as OpenAI, Character.AI, and Replika would have to issue periodic safety reports detailing how often their systems directed users to crisis resources, along with information on their protective measures. People who believe they have been harmed by the apps could sue to stop those practices and to collect $1,000 in damages for each violation, plus attorney's fees, giving the law private enforcement teeth alongside state oversight.

Why lawmakers say it’s necessary

Support for the bill grew after the death of 16-year-old Adam Raine, whose family says extended conversations with an AI system normalized self-harm and discussed it in detail.

Leaked internal documents reportedly showing that chatbots on a major social media platform had been used to simulate “making love” and provide “sexual services” in conversations with minors made the issue all the more urgent.

The bill is part of a broader wave of policy responses. The Federal Trade Commission has signalled that it is paying attention to the impact of AI chatbots on children’s mental health. The attorney general of Texas has opened an investigation into Meta and Character.AI over alleged deceptive claims. Scrutiny from senators of both parties in Congress has put platforms on the defensive over youth safety.

Public health data underscores the risk. Suicide remains a leading cause of death for young people in the United States, according to the Centers for Disease Control and Prevention, and researchers have cautioned that systems designed to build rapport can inadvertently affirm harmful ideation if guardrails break down.

What changed on the way to the governor

Earlier drafts took a more aggressive stance on “variable reward” mechanics, meaning features such as unlockable responses or special storylines that critics say fuel compulsive use. Those provisions were stripped out during negotiations, along with language that would have required operators to record how often chatbots themselves started conversations about self-harm.

The resulting bill still draws clear lines around permissible content and introduces ongoing disclosure and reporting obligations, but it stops short of addressing commonly used design patterns in detail. Advocates for the bill contend that the compromise will still produce the data needed to understand risks, such as the number of crisis referrals.

Industry response and legal questions

Large tech companies have largely opposed sweeping state-level AI rules, arguing that fragmented requirements will stifle innovation and create compliance minefields. In a separate but related fight, OpenAI said California should scrap a wider transparency bill, SB 53, in favor of federal and international frameworks, a position backed by several big platforms. Anthropic has broken ranks and is publicly backing SB 53.

SB 243 is more targeted. Even so, legal experts point to potential tension with speech protections and questions over how its obligations will mesh with federal intermediary liability law. Treating the statute as a product safety rule, one that concentrates on system design and risk management, could help insulate it from challenges, but lawsuits are sure to come.

What a compliance program might look like

Technically, compliance is going to come down to strong safety classifiers, age-appropriate experiences and responsible escalation paths. Research labs and academics have demonstrated repeatedly that even well-guarded models can be “jailbroken,” so operators will need to conduct continuous red-teaming, incident reporting and rapid patching in line with established guidance such as NIST’s AI Risk Management Framework.

The most difficult piece will be reducing false negatives on risky content while limiting false positives that over-censor legitimate mental health queries. Providers will also need to make tradeoffs about whether to retain conversation history to assist in detection, which raises issues of privacy and data minimization.
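The tension between false negatives and false positives can be made concrete with a small Python sketch. It assumes a hypothetical safety classifier that assigns each message a risk score between 0 and 1; the scores, labels and thresholds below are invented toy values for illustration, not data from any real system.

```python
def error_counts(scores, labels, threshold):
    """Count classifier errors at a given flagging threshold.

    scores: risk scores in [0, 1] from a hypothetical safety classifier.
    labels: True where the message is genuinely risky, False otherwise.
    Returns (false_negatives, false_positives).
    """
    # Risky messages that fall below the threshold are missed.
    false_negatives = sum(1 for s, y in zip(scores, labels) if y and s < threshold)
    # Benign messages at or above the threshold are wrongly flagged.
    false_positives = sum(1 for s, y in zip(scores, labels) if not y and s >= threshold)
    return false_negatives, false_positives


# Toy data (invented): four risky messages, two benign mental health queries.
scores = [0.95, 0.80, 0.55, 0.40, 0.30, 0.10]
labels = [True, True, True, False, True, False]

for threshold in (0.25, 0.50, 0.90):
    fn, fp = error_counts(scores, labels, threshold)
    print(f"threshold={threshold:.2f} missed_risky={fn} over_flagged={fp}")
```

On this toy data, lowering the threshold catches every risky message but flags one benign query, while raising it eliminates over-flagging at the cost of missing more risky messages. Where to set that line is a policy choice as much as an engineering one.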

What’s at stake for AI companions

AI companions sit at the frontier of human-computer interaction: they are sticky by design and have considerable power to shape mood, behavior and belief. Supporters of SB 243 say that basic protections (transparent identity disclosure, crisis-aware responses and the right to seek recourse) represent a minimum price of admission for tools specifically designed to create emotional ties.

Whether this bill becomes a blueprint for other states or a flashpoint for court fights, the legislation represents a turning point. The synthetic companionship market is coming of age, and California is signaling that intimacy by algorithm should come with more than just a trust-me logo.