Artificial intelligence is not only a software story but an arms race in hard infrastructure. Running frontier-scale models takes oceans of compute, vast new data centers and, at a scale most utilities have never planned for, ready electricity. Nvidia’s Jensen Huang has suggested that global AI infrastructure spending could run into the multiple trillions of dollars, and the rapid-fire pace of recent deals suggests that the market is starting to take those forecasts seriously.

Behind the headlines about chatbots and copilots lies a tangle of capital-intensive deals that lock down GPUs, power, land, cooling and network capacity. The largest players aren’t just renting servers, either: they are signing multi-year, co-designed compute reservations and linking data center growth to energy procurement strategy.

Cloud–AI partners change who provides compute

Microsoft’s initial bet on OpenAI, which started with a $1 billion commitment and grew into a multibillion-dollar partnership, popularized a model in which cloud credits became the currency of AI scale. Beyond cash, the deal tied OpenAI’s training runs to Azure capacity and granted Microsoft early access to state-of-the-art models, a flywheel that lifted both companies’ ambitions.

That template has spread. Amazon followed a similar playbook with Anthropic, providing both capital and priority access to AWS infrastructure and custom silicon such as Trainium and Inferentia. Google Cloud has won preferred-partner status from a handful of AI startups as it optimizes training on TPU systems and Nvidia-based clusters. The common thread: access to compute is now as important a strategic lever as the models themselves.

These relationships are also becoming more fluid. Multicloud strategies and right-of-first-refusal clauses have featured in recent disclosures and public comments by leading AI labs, a tacit acknowledgment of just how scarce top-tier capacity is and how valuable it can be to keep options open.

The Oracle megadeal reshuffles the leaderboard

Oracle has become an unlikely heavyweight in the market for cloud-based AI infrastructure. In regulatory filings, the company disclosed a cloud services contract, said to be connected to OpenAI, worth tens of billions of dollars. That figure alone exceeds the annual cloud revenue of many service providers. Shortly after, Oracle revealed an even larger five-year compute commitment, with a headline number in the hundreds of billions.

These are capacity reservations rather than checks cashed on day one. Still, they signal confidence that demand for AI training and inference will be sustained, and they give Oracle a seat at the top table among the suppliers of scarce GPUs, networking gear, and floor space. Investors noticed, and so did rivals.

Hyperscale buildouts confront the power problem

Meta, which already operates one of the world’s largest data center fleets, has telegraphed a plan to spend hundreds of billions on U.S. infrastructure alone, from new AI-optimized campuses to 18-wheelers and cargo planes that shuttle machines among its data centers. Its Hyperion project in Louisiana covers thousands of acres, targets multi-gigawatt compute and includes agreements for nuclear generation to help stabilize its power profile. Another site, Prometheus in Ohio, relies on natural gas so it can get up and running fast.

The environmental and grid consequences are palpable. The International Energy Agency recently estimated that data centers consume roughly as much electricity as a mid-sized country, and that demand is expected to almost double within a few years. U.S. grid operators such as PJM and ERCOT are warning of interconnection waits and transformer shortages, while the Uptime Institute is documenting increasing constraints around water, siting, and skilled labor.

Not every build is clean. xAI’s hybrid data center and generation plant in South Memphis has come under criticism from environmental groups and air-quality experts, highlighting the tension between speed to compute and compliance with emissions rules.

The $500B Stargate moonshot aims to remake AI infrastructure

One of the most ambitious ideas, known as Stargate, is a partnership involving SoftBank, OpenAI and Oracle to pour up to $500 billion into U.S. AI infrastructure. The pitch: pool funds for national construction and permitting in order to bust bottlenecks in compute, power and supply chains.

Skeptics question whether the capital stack and governance can actually support ambitions on this scale. Bloomberg’s reporting has uncovered tension among the partners, yet the first phase continues on course in Abilene, Texas, with multiple data center buildings planned. Pace aside, the project is an indicator of just how much investors are willing to commit to the foundations of next-generation AI.

From GPUs to electrons: the new squeezes

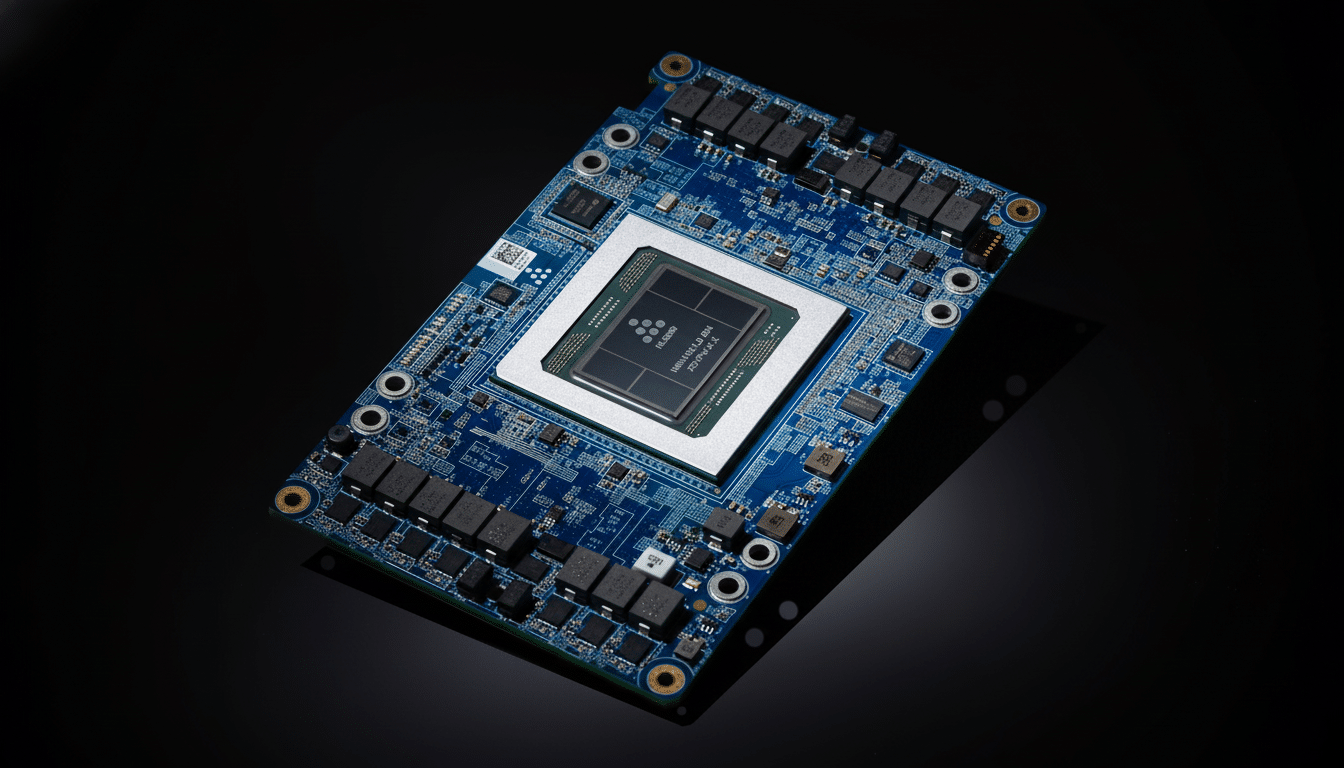

Scarcity of hardware is cascading down the stack. Nvidia still dominates with its H100, H200 and Blackwell (B-series) systems, with deliveries depending on advanced packaging capacity at TSMC and high-bandwidth memory supplied by SK Hynix, Micron and Samsung. Optical interconnects from Broadcom and Marvell have become critical as clusters expand to tens of thousands of GPUs.

Power is the other currency. Hyperscalers are signing long-term power purchase agreements, supporting new transmission, and dabbling in self-generation via gas turbines and battery storage, as well as in nuclear uprates and new small modular reactor deployments. Cooling is moving to rear-door heat exchangers and immersion to handle rack densities that would have been a fantasy a few years ago.

What to watch next as AI infrastructure expansion accelerates

Anticipate more capacity reservations that package compute, energy and financing; closer co-design between model developers and chipmakers; and a tsunami of siting decisions optimized for low-carbon, low-cost electrons. Regulators are circling, looking at vertical cloud–AI tie-ups, supply-chain dependencies and the energy footprint. Enterprise customers, meanwhile, will demand transparency about model performance and the carbon intensity of inference.

The short conclusion: Winning the race will depend not just on having the best models, but also on good old-fashioned logistics: cash to pay for hardware, power to keep it running, and partnerships that can scale all of it faster than anyone else.