Throughout Silicon Valley, a crude playbook is taking shape: cut headcount, put the money into GPUs, then ship another “next-gen” AI model that looks suspiciously like the last one. Investors cheer the efficiency talk, executives rave about automation, and users get features that buckle under pressure, stumble on basic logic, or fail at simple reliability. It’s a get-rich-quick AI mentality that values quarterly optics over enduring capability.

The Playbook: Shave the Budget First, Then Pump Up Automation

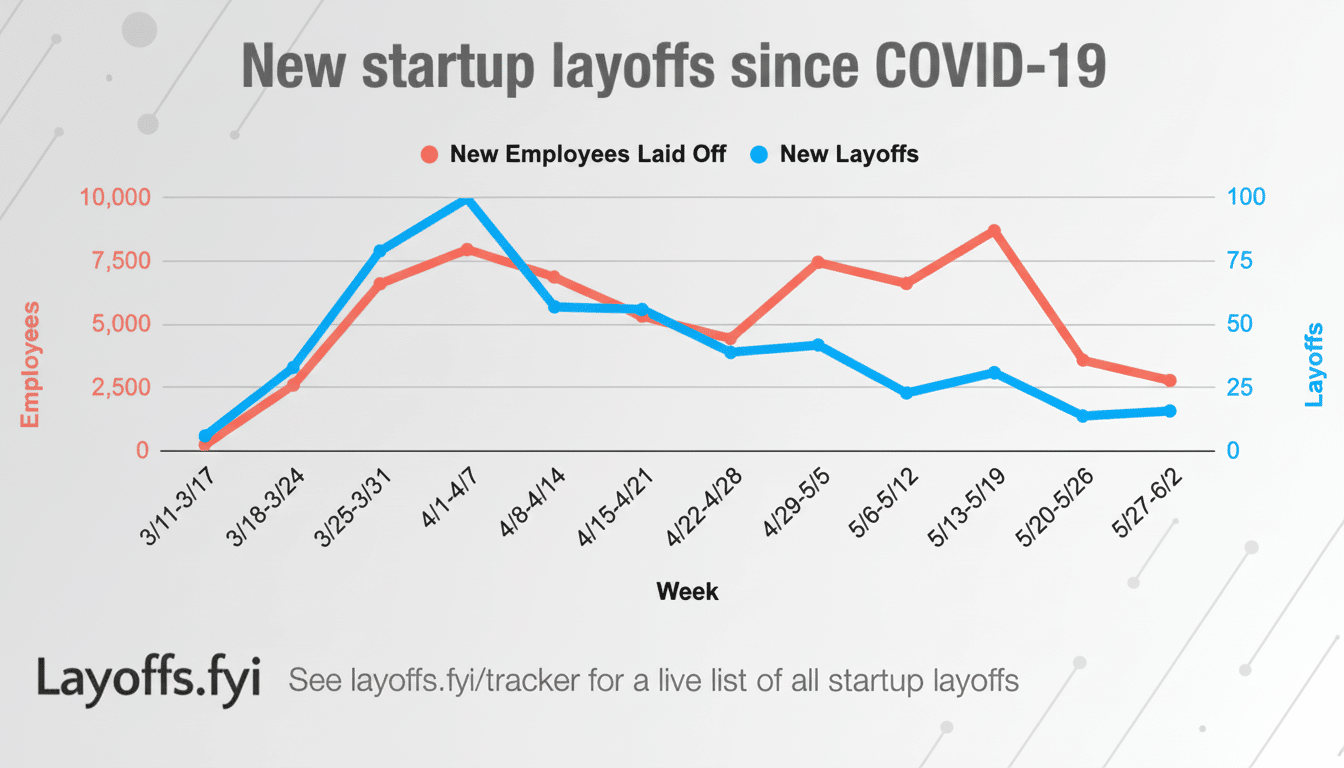

The sequencing isn’t subtle. Companies slash roles in support, moderation, QA, and editorial (the very teams that keep products safe and polished) and then promote generative “copilots” as productivity boosters. On earnings calls, “efficiency” and “leverage” become code for doing more with fewer employees, paired with an AI upsell. Layoffs.fyi has tracked hundreds of thousands of tech job cuts since 2023, even as the largest platforms pour billions into AI infrastructure.


The strategy works in the short term because AI is currently pitched as a margin story: fewer humans plus higher-priced subscriptions. Microsoft, Google, and others have already bundled AI seats into enterprise plans, promising time savings on email, code, and spreadsheets. Whether that time is reliably saved in the real world is another question.

Why Even Mediocre Models Get Shipped Anyway

At least three forces push incomplete models into production. First, benchmark theater: gains on leaderboards of narrow tasks can mask poor performance in real-world workflows. As benchmarks saturate and training costs soar, the leaps needed to impress keep getting bigger, while development teams are pressed to ship frequent, incremental releases just to stay in the conversation.

Second, distribution beats quality. Search engines, app stores, and office suites can push “good enough” AI to hundreds of millions of users overnight, collecting telemetry and building lock-in even if the first version doesn’t dazzle.

Third, compute constraints. When budgets for inference are tight, companies limit context windows, reduce safety checks, or compress models to trade capability for latency and cost. The upshot: tools that demo well but stumble when it comes to longer, messier tasks.

The Costs of Firing the Humans That Make Your Life Really Easy

Human expertise often consists of knowing which kinds of failure to look for. Gall’s Law captures why that matters: “A complex system that works is invariably found to have evolved from a simple system that worked. A complex system designed from scratch never works and cannot be patched up to make it work.” When you remove human expertise from a process, failures often shift from visible and catchable to insidious.

Fewer moderators and policy experts mean more potential for harmful outputs and brand damage. Thinner QA lets hallucinations slip into customer-facing flows, driving up support tickets and eroding trust. Pew Research Center has repeatedly found more skepticism than enthusiasm about AI among Americans, and aggressive rollouts without guardrails only feed that skepticism.

Frameworks exist to forestall this spiral, including the NIST AI Risk Management Framework, model cards, and incident tracking, but they require sustained commitment and cross-functional buy-in. When the teams responsible for them are the ones getting slashed, governance becomes theater.

Following the Money in AI: Who Profits and Why

The economic incentives are clear. Cloud giants monetize AI twice: selling compute to train models and bundling assistants into software suites at a premium. Nvidia’s booming data center business underscores the rush; triple-digit percentage growth has turned GPUs into the new oil. Meanwhile, analysts such as those at Goldman Sachs have estimated that hundreds of millions of current jobs are at least partially automatable, which becomes fodder for boardroom slides arguing that workforce “rebalancing” is a wise move.

But exposure isn’t replacement. In deployment experiments, firms report uneven productivity gains and dips in quality when AI is pushed just beyond what it does well. The strongest returns, McKinsey and others note, come from well-defined tasks with human oversight: the very areas put at risk when costs are cut indiscriminately.

Cautionary Tales From the Most Recent AI Rollouts

Leading image generators have paused features after producing misaligned outputs. Chatbots have fabricated quotes in legal briefs and in answers to medical questions, prompting public apologies and policy changes. Code-completion copilots tout productivity gains, but enterprise teams quietly add a layer of human review to catch the subtle security and licensing risks these models introduce.

Even headline-grabbing models that shine in demos can misread charts, botch multi-step instructions, or fail on domain-specific edge cases. The gap between stage sizzle and everyday reliability isn’t closing quickly enough to justify gutting the roles that keep products safe, compliant, and useful.

A Better Way Than Cut and Ship: How to Deploy Responsibly

There is a viable alternative to the churn. First, slow down and raise the bar: ship based on real-world evaluations, not just leaderboards, and publish error taxonomies alongside accuracy rates. Second, keep people in the loop wherever the stakes are high: safety, finance, health, and anything else that carries legal or reputational risk.

Third, fund the unglamorous infrastructure: red-teaming, dataset governance, traceable training data, and post-deployment monitoring. Standards bodies like NIST and ISO have given us templates; use them. Lastly, align incentives by linking executive compensation to safety and customer satisfaction metrics — not just AI attach rates.

The Bottom Line: Efficiency Without Reliability Backfires

Big Tech can keep laying off staff and flooding the zone with mediocre models, but that strategy trades long-term trust for short-term revenue.

The winners in AI’s next act won’t be the first to give a TED Talk; they will be the companies that deliver systems that are measurably safer, verifiably useful, and backed by enough human expertise to take real accountability for deploying them at the messy edges where real work happens.